Learn how to generate high-quality synthetic datasets from real data samples without coding or credit cards in your pocket. Here is everything you need to know to get started.

In this blogpost you will learn:

- What do you need to generate synthetic data?

- What is a data sample?

- What are data subjects?

- What is a subject table?

- How and when to synthesize a single subject table?

- What is not a subject table?

- How to launch your first synthetic data generation?

- How and when to synthesize data in two tables?

- What are linked tables?

- How to create linked tables?

- What is the perfect setup for synthetic data generation?

- How to connect subject and linked tables for synthesization?

- What types of synthetic data can you synthesize?

- How to optimize synthetic data generation for speed or accuracy?

- What are the most common synthetic data use cases?

- How to get expert help with synthetic data generation?

What do you need to generate synthetic data?

If you want to know how to generate synthetic data, the good news is, that you need absolutely no coding knowledge to be able to synthesize datasets on MOSTLY AI’s synthetic data platform. What is even better news is that you can have access to the world’s best quality synthetic data generation for free, generating up to 100K rows daily. Not even a credit card is required, only a suitable sample dataset.

First, you need to register a free synthetic data generation account using your email address. Second, you need a suitable data sample. If you want to know how to generate synthetic data using an AI-powered tool, like MOSTLY AI, you need to know how to prepare your sample dataset, that the AI algorithm will learn from. We'll tell you all about what makes a dataset ready for synthesization in this blogpost.

When do you need a synthetic data generation tool?

Generating synthetic data based on real data makes sense in a number of different use cases and data protection is only one of them. Thanks to how the process of synthetic data generation works, you can use an AI-powered synthetic data generator to create bigger, smaller or more balanced, yet realistic versions of your original data. It’s not rocket science. It’s better than that - it’s data science automated.

When choosing a synthetic data generation tool, you should take two very important benchmarks into consideration: accuracy and privacy. Some synthetic data generators are better than others, but all synthetic data should be quality assured and MOSTLY AI’s platform generates an automatic privacy and accuracy report for each synthetic data set. What’s more, MOSTLY AI’s synthetic data is better quality than open source synthetic data.

If you know what you are doing, it's really easy to generate realistic and privacy-safe synthetic data alternatives for your structured datasets. MOSTLY AI's synthetic data platform offers a user interface that is easy to navigate and requires absolutely no coding. All you need is sample data and a good understanding of the building blocks of synthetic data generation. Here is what you need to understand how to generate synthetic data.

Generate synthetic data straight from your browser

What is a data sample?

Generative tabular data is based on real data samples. In order to create AI-generated synthetic data, you need to provide a data sample of your original data to the synthetic data generator to learn its statistical properties, like correlations, distributions and hidden patterns.

Ideally your sample data set should contain at least 5,000 data subjects (= rows of data). If you don't have that much data that doesn't mean you shouldn’t try - go ahead and see what happens. But don't be too disappointed if the achieved data quality is not satisfactory. Automatic privacy protection mechanisms are in place to protect your data subjects, so you won’t end up with something potentially dangerous in any case.

What are data subjects?

The data subject is the person or entity whose identity you want to protect. Before considering synthetic data generation, always ask yourself whose privacy you want to protect. Do you want to protect the anonymity of the customers in your webshop? Or the employees in your company? Think about whose data is included in the data samples you will be using for synthetic data generation. They or those will be your data subjects.

The first step of privacy protection is to clearly define the protected entity. Before starting the synthetic data generation process, make sure you know who or what the protected entities of a given synthesization are.

What is a subject table?

The data subjects are defined in the subject table. The subject table has one very crucial requirement: one row is one data subject. All the information which belongs to a single subject - e.g. a customer, or an employee - needs to be contained in the row that belongs to the specific data subject. In the most basic data synthesization process, there is only one table, the subject table.

This is called a single-table synthesization and is commonly used to quickly and efficiently anonymize datasets describing certain populations or entities. In contrast with old data anonymization techniques like data masking, aggregation or randomization, the utility of your data will not be affected by the synthesization.

How and when to synthesize a single subject table?

Synthesizing a single subject table is the easiest and fastest way to generate highly realistic and privacy safe synthetic datasets. If you are new to synthetic data generation, a single table should be the first thing you try.

If you want to synthesize a single subject table, your original or sample table needs to meet the following criteria:

- Each subject is a distinct, real-world entity. A customer, a patient, an employee, or any other real entity.

- Each row describes only one subject.

- Each row can be treated independently - the content of one row does not affect the content of other rows. In other words, there are no dependencies between the rows.

- When synthesizing single tables, the order of the rows will not be preserved throughout the synthesization process. For example, alphabetical order will not be maintained. However, you can re-introduce such ordering in post-processing.

Information entered into the subject table should not be time-dependent. Time-series data should be handled in two or more tables, called linked tables, which we will talk about later.

What is not a subject table?

So, how do you spot it if your table that you would like to synthesize is not a subject table? If you see the same data subjects in the table twice, in different rows, it’s fair to say that the table you have cannot be a subject table as it is. In the example below, you can see a company’s records of sick leaves. Since more than one row belongs to the same person, this table would not work as a subject table.

There are some other examples when a table cannot be considered a subject table. For example, when the rows contain overlapping groups, the table cannot be used as a subject table, because the requirement of independent rows is not met.

Another example of datasets not suitable for single-table synthesization are datasets that contain behavioral or time-series data. Here the different rows come with time dependencies. Tables containing data about events need to be synthesized in a two-table set up.

If your dataset is not suitable as a subject table "out of the box" you will need to perform some pre-processing of the data to make it suitable for data synthesization.

It’s time to launch your first synthetic data generation job!

How to generate synthetic data step by step

The good news is, that you need absolutely no coding knowledge to be able to synthesize datasets on MOSTLY AI’s synthetic data platform. What is even better news is that you can have access to the world’s best quality synthetic data generation for free, generating up to 100K rows daily. Not even a credit card is required, only a suitable subject table. First, you need to register a free synthetic data generation account using your email address.

Step 1 - Upload your subject table

Once you are inside MOSTLY AI’s synthetic data platform, you can upload your subject table. Click on Jobs, then on Launch a new job. Your subject table needs to be in CSV or Parquet format. We recommend using Parquet files.

Feel free to upload your own dataset - it will be automatically deleted once the synthetic data generation has taken place. MOSTLY AI’s synthetic data platform runs in a secure cloud environment and your data is kept safe by the strictest data protection laws and policies on the globe, since we are a company with roots in the EU.

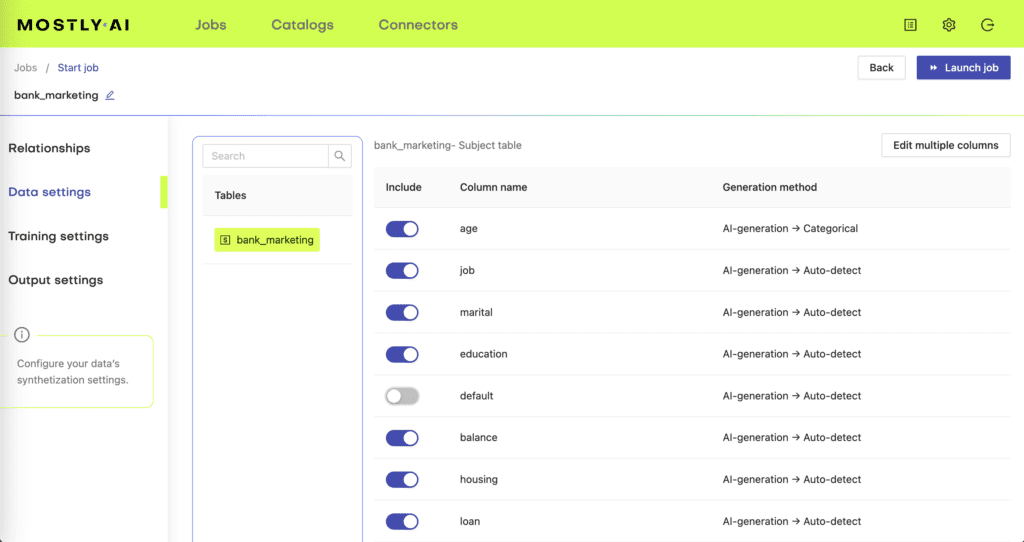

Step 2 - Check column types

Once you upload your subject table, it’s time to check your table’s columns under the Table details tab. MOSTLY AI’s synthetic data platform automatically identifies supported column types. However, you might want to change these from what was automatically detected. Your columns can be:

- Numerical - any number with up to eight digits after the decimal point

- Datetime - with hours, minutes, seconds or milliseconds if available, but a simple date format with YYYY-MM-DD also works

- Categorical - with defined categories, such as gender, marital status, language or educational level

There are other column types you can use too, for example, text or location coordinates, but these are the main column types automatically detected by the synthetic data generator.

Step 3 - Train and generate

Under the Settings tabs you have the option to change how the synthesization is done. You can specify how many data subjects you want the synthetic data generator to learn from and how many you want to generate. Changing these would make sense for different use cases.

For example, if you want to generate synthetic data for software testing, you might choose to downsample your original dataset into smaller, more manageable chunks. You can do this by entering a smaller number of generated subjects under Output settings than what is in your original subject tables.

Pro tip from our data scientists: enter a smaller number of training subjects than what your original dataset has to launch a trial synthesization. Let’s say you have 1M rows of data. Use only 10K of the entire data set for a test run. This way you can check for any issues quickly. Once you complete a successful test run, you can use all of your data for training. If you leave the Number of training subjects field empty, the synthetic data generator will use all of the subjects of your original dataset for the synthesization.

Generating more data samples than what was in the original dataset can be useful too. Using synthetic data for machine learning model training can significantly improve model performance. You can simply boost your model with more synthetic samples than what you have in production or upsample minority records with synthetic examples.

You can also optimize for a quicker synthesization by changing the Training goal to Speed from Accuracy.

Once the process of synthesization is complete, you can download your very own synthetic data! Your trained model is saved for future synthetic data generation jobs, so you can always go back and generate more synthetic records based on the same original data. You can also choose to generate more data or to download the Quality Assurance report.

Step 4 - Check the quality of your synthetic data

Each synthetic data set generated on MOSTLY AI’s synthetic data platform comes with an interactive quality assurance report. If you are new to synthetic data generation or less interested in the data science behind generative AI, simply check if the synthetic data set passed your accuracy expectations. If you would like to dive deeper into the specific distributions and correlations, take a closer look at the interactive dashboards of the QA report.

How and when to synthesize data in two tables?

Synthesizing data in two separate tables is necessary when your data set contains temporal information. In more simple terms, to synthesize events, you need to separate your data subjects - the people or entities to whom the events or behavior belong to - and the events themselves. For example, credit card transaction data or patient journeys are events that need to be handled differently than descriptive subject tables. This so-called time-series or behavioral data needs to be included in linked tables.

What are linked tables?

Now we are getting to the exciting part of synthetic data generation, that is able to unlock the most valuable behavioral datasets, like transaction data, CRM data or patient journeys. Linked tables containing rich behavioral data is where AI-powered synthetic data generators really shine. This is due to their ability to pick up on patterns in massive data sets that would otherwise be invisible to the naked eyes of data scientists and BI experts.

These are also among the most sensitive data types, full of extremely valuable (think customer behavior), yet off-limits, personally identifiable, juicy details. Behavioral data is hard to anonymize without destroying the utility of the data. Synthetic behavioral data generation is a great tool for overcoming this so-called privacy-utility trade off.

How to create linked tables?

The structure of your sample needs to follow the subject table - linked table framework. We already discussed subject tables - here the trick is to make sure that information about one data subject must be contained in one row only. You should move columns that are static to the subject table and model the rest as a linked table.

MOSTLY AI’s algorithm learns statistical patterns distributed in rows, so if you have information that belongs to a single individual across multiple rows, you’ll be creating phantom data subjects. The resulting synthetic data might include phantom behavioral patterns not present in the original data.

The perfect set up for synthetic data generation

Your ideal synthetic data generation set up is where the subject table’s IDs refer to the events contained in the linked table. The linked table contains several rows that refer to the same subject ID - these are events that belong to the same individual.

Keeping your subject table and linked table aligned is the most important part of a successful synthetic data generation. Include the ID columns in both tables as primary and foreign keys for establishing the referential relationship.

How to connect subject and linked tables for synthesization?

MOSTLY AI’s synthetic data generation platform offers an easy-to-use, no-code interface where tables can be linked and synthesized. Simply upload your subject table and linked tables.

The platform automatically detects primary keys (the id column) and foreign keys (the <subject_table_name> _id column) once the subject table and the linked tables are specified. You can also select these manually. Once you defined the relationship between your tables, you are ready to launch your synthesization for two tables.

Synthetic data types and what you should know about them

The most common data types - numerical, categorical and datetime - are recognized by MOSTLY AI and handled accordingly. Here is what you should know when generating synthetic data from different types of input data.

Synthetic numeric data

Numeric data contains only numbers and are automatically treated as numeric columns. Synthetic numeric data keeps all the variable statistics such as mean, variance and quantiles. N/A values are handled separately and the proportion of that is retained in the synthetic data. MOSTLY AI automatically detects missing values and reproduces it in the synthetic data, for example, if the likelihood of N/A changes depending on other variables. N/A needs to be encoded as empty strings.

Extreme values in numeric data have a high risk of disclosing sensitive information, for example, by exposing the CEO in a payroll database as the highest earner. MOSTLY AI’s built-in privacy mechanism replaces the smallest and largest outliers with the smallest and largest non-outliers to protect the subjects’ privacy.

If the synthetic data generation relies on only a few individuals for minimum and maximum values, the synthetic data can differ in these. One more reason to give the CEO’s salary to as many people in your company as possible is to protect his or her privacy - remember this argument next time equal payment comes up. 🙂 Kidding aside, removing these extreme outliers is a necessary step to protect from membership inference attacks. MOSTLY AI’s synthetic data platform does this automatically, so you don’t have to worry about outliers.

Synthetic datetime data type

Columns in datetime format are treated automatically as datetime columns. Just like in the case of synthetic numeric data, extreme datetime values are also protected and the distribution of N/As is preserved. In linked tables, using the ITT encoding for inter-transaction time improves the accuracy of your synthetic data on time between events, for example when synthesizing ecommerce data with order statuses of order time, dispatch time, arrival time.

Synthetic categorical data type

Categorical data comes with a fixed number of possible values. For example, marital status, qualifications or gender in a database describing a population of people. Synthetic data retains the probability distribution of the categories, containing only those categories present in the original data. Rare categories are protected independently for each categorical column.

Synthetic location data type

MOSTLY AI’s synthetic data generator can synthesize geolocation coordinates with high accuracy. You need to make sure that latitude and longitude coordinates are in a single field, separated by a comma, like this: 37.311, 173.8998

Synthetic text data type

MOSTLY AI’s synthetic data generator can synthesize up to 1000 character long unstructured texts. The resulting synthetic text is representative of the terms, tokens, their co-occurrence and sentiment of the original. Synthetic text is especially useful for machine learning use cases, such as sentiment analysis and named-entity recognition. You can use it to generate synthetic financial transactions, user feedback or even medical assessments. MOSTLY AI is language agnostic, so you won’t experience biases in synthetic text.

You can improve the quality of your text columns that include specific patterns, like email addresses, phone numbers, transaction IDs, social security numbers, if you change these to character sequence data type.

Configure synthetic data generation model training

You can optimize the synthetic data generation process for accuracy or speed, depending on what you need the most. Since the main statistical patterns are learned by the synthetic data generator in the first epochs, you can choose to stop the training by selecting the Speed option if you don’t need to include minority classes and detailed relationships in your synthetic data.

When you optimize for accuracy, the training continues until no further model improvement can be achieved. Optimizing for accuracy is a good idea when you are generating synthetic data for data analytics use cases or outlier detection. If you want to generate synthetic data for software testing, you can optimize for speed, since high statistical accuracy is not an important feature of synthetic test data.

Synthetic data use cases from no-brainer to genius

The most common synthetic data use cases range from simple, like data sharing to complex, like explainable AI, which is part data sharing and part data simulation.

Another entry level synthetic data generation project can be generating realistic synthetic test data, for example for stress testing and for the delight of your off-shore QA teams. As we all know, production data should never, ever see the inside of test environments (right?). However, mock data generators cannot mimic the complexity of production data. Synthetic test data is the perfect solution combining realism and safety in one.

Synthetic data is also one of the most mature privacy-enhancing technologies. If you want to share your data with third parties safely, it’s a good idea to run it through a synthetic data generator first. And the genius part? Synthetic data is set to become a fundamental part of explainable and fair AI, ready to fix human biases embedded in datasets and to provide a data window into the souls of algorithms.

Expert synthetic data help every step of the way

No matter which use case you decide to tackle first, we are here for you from the first steps to the last and beyond! If you would like to dive deeper into synthetic data generation, please feel free to browse and search through MOSTLY AI’s Documentation. But most importantly, practice makes best, so register your free forever account and launch your first synthetic data generation job now.

✅ Data prep checklist for synthetic data generation

1. SPLIT SINGLE SEQUENTIAL DATASETS INTO SUBJECT AND LINKED TABLES

If your raw data includes events and is contained in a single table, you need to split it into a subject table and a linked table. If your single table contains event data, move these sequential data points into another table. Make sure that the new table is linked by the foreign key to the primary key in the subject table. That is, each individual or entity in the subject table is referred to by the linked table with the relevant ID.

How sequential data is structured also matters. If your events are contained in columns, make sure you model them into rows. Each row should describe a separate event.

Some examples of typical dataset synthesized for a wide variety of use cases include different types of time series data, like patient journeys where a list of medical events are linked to individual patients. Synthetic data in banking is often created from transaction datasets where subject tables contain accounts and the linked table contains the transactions that belong to certain accounts, referenced in the subject table. These are all time-dependent, sequential datasets where chronological order is an important part of the data’s intelligence.

When preparing your datasets for synthesization, always consider the following list of requirements:

| Subject Table | Linked table |

| Each row belongs to a different individual | Several rows belong to the same individual |

| The subject ID (primary key in SQL) must be unique | Each row needs to be linked to one of the unique IDs in the subject table (foreign key in SQL) |

| Rows should be treated independently | Several rows can be interrelated |

| Includes only static information | Includes only dynamic information where sequences must be time-ordered if available |

| The focus is on columns | The focus is on rows and columns |

2. MOVE ALL STATIC DATA TO THE SUBJECT TABLE

Check your linked table containing events. If you have static information in the linked table, that is describing the subject, you should move that column to the subject table. A good example would be a list of page visits, where each page visit is an event that belongs to certain users. The IP address of users is the same across different events. It’s static and describes the user, not the event. In this case, the IP_address column needs to be moved over to the subject table.

3. CHECK AND CONFIGURE DATA TYPES

The most common data types, numerical, categorical and datetime are automatically detected by MOSTLY AI’s synthetic data platform. Check if the data types were detected correctly and change the encoding where you have to. If the automatically detected data types don’t match your expectations, double check the input data. Chances are, that a formatting error is leading detection astray and you might want to fix that before synthesization. Here are the cases when you should check and manually adjust data types:

- If your categories are defined by numbers, those will be automatically detected as digit

- If your column format is not correct or if empty data is encoded as non-empty string/token, your digit/datetime column might be treated as a categorical column