The agile and DevOps transformation of software testing has been accelerating since the pandemic, and it shows no sign of slowing down. Applications need to be tested faster and earlier in the software development lifecycle, while customer experience is a rising priority. However, good quality, production-like test data is still hard to come by. Up to 50% of the average tester's time is spent waiting for test data, looking for it, or creating it by hand. Test data challenges plague companies of all sizes, from smaller organizations to enterprises.

What is true in most fields also applies in software testing: AI will revolutionize testing. AI-powered testing tools will improve quality, velocity, productivity and security. In a 2021 report, 75% of QA experts said that they plan to use AI to generate test environments and test data. Saving time and money is already possible with readily available tools like synthetic test data generators. According to Gartner, 20% of all test data will be synthetically generated by 2025. And the tools are already here. But let's start at the beginning.

What is test data?

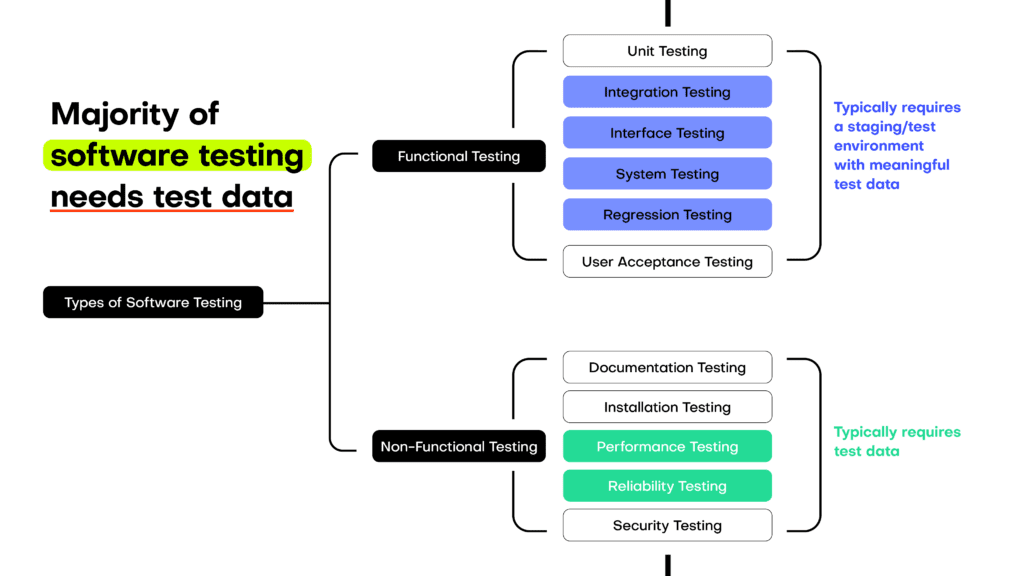

The definition of test data depends on the type of test. Application testing is made up of lots of different parts, many of which require test data. Unit tests are the first to take place in the software development process. Test data for unit tests consists of simple, typically small samples of test data. However, realism might already be an important test data quality. Performance testing or load testing requires large batches of test data. Whichever stage we talk about, one thing is for sure. Production data is not test data. Production data should never be in test environments. Data masking, randomization, and other common techniques do not anonymize data adequately. Mock data and AI-generated synthetic data are privacy-safe options. The type of test should decide which test data generation method is used.

What is a synthetic test data generator?

Synthetic test data is an essential part of the software testing process. Mobile banking apps, insurance software, retail and service providers all need meaningful, production-like test data for high quality QA. There is confusion around the term synthetic data, with many still thinking of it as mock or fake data. While mock data generators are still useful in unit tests, their usefulness is limited elsewhere. Similar to mock data generators, AI-powered synthetic test data generators are available online, in the cloud or on premise, depending on the use case. However, the quality of the resulting synthetic data varies widely. Use a synthetic test data generator that is truly AI-powered, retains the data structures and the referential integrity of the sample database, and has additional, built-in privacy checks when generating synthetic data.

What is synthetic test data? The definition of AI-generated synthetic test data (TL;DR: it's NOT mock data)

Synthetic test data is generated by AI that is trained on real data. It is structurally representative, referentially intact data with support for relational structures. AI-generated synthetic data is not mock data or fake data. It is as much a representation of the behavior of your customers as production data. It’s not generated manually, but by a powerful AI engine that is capable of learning all the qualities of the dataset it is trained on, providing 100% test coverage. A good quality synthetic data generator can automate test data generation with high efficiency and without privacy concerns. Customer data should always be used in its synthetic form to protect privacy and to retain business rules embedded in the data. For example, mobile banking apps should be tested with synthetic transaction data that is based on real customer transactions.

Test data types, challenges and their synthetic test data solutions

Synthetic data generation can be useful in all kinds of tests and provide a wide variety of test data. Here is an overview of different test data types, their applications, main challenges of data generation and how synthetic data generation can help create test data with the desired qualities.

| Test Data Types | Application | Challenge | Solution |
|---|---|---|---|
| Valid test data: the combination of all possible inputs. | Integration, interface, system and regression testing | It’s challenging to cover all scenarios with manual data generation. Maintaining test data is also extremely hard. | Generate synthetic data based on production data. |
| Invalid (erroneous) test data: data that cannot and should not be processed by the software. | Unit, integration, interface, system testing, security testing | It is not always easy to identify error conditions to test because you don't know them a priori. Access to production errors is necessary but will also not yield previously unknown error scenarios. | Create more diverse test cases with synthetic data based on production data. |
| Huge test data: large volume test data for load and stress testing. | Performance testing, stress testing | Lack of sufficiently large and varied batches of data. Simply multiplying production data does not simulate all the components of the architecture correctly. Recreating real user scenarios with the right timing and general temporal distribution with manual scripts is hard. | Upsample production data via synthesization. |
| Boundary test data: data that is at the upper or lower limits of expectations. | Reliability testing | Lack of sufficiently extreme data. It’s impossible to know the difference between unlikely and impossible for values not defined within lower and upper limits, such as prices or transaction amounts. | Generate synthetic data in creative mode or use contextual generation. |
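
As a point of comparison for the boundary row above, here is a minimal sketch of classic boundary value analysis, which only works when lower and upper limits are explicitly defined. The function names, the `step` parameter, and the 1 to 10,000 range are illustrative assumptions, not part of any tool mentioned in this article.

```python
def boundary_values(lower, upper, step=1):
    """Return test inputs just below, on, and just above each limit."""
    return [lower - step, lower, lower + step,
            upper - step, upper, upper + step]


def is_valid_amount(amount, lower=1, upper=10_000):
    """Hypothetical validation rule: amounts must lie within the limits."""
    return lower <= amount <= upper


# Probe the hypothetical rule at its edges.
cases = boundary_values(1, 10_000)
results = {value: is_valid_amount(value) for value in cases}
```

When no limits are defined, as with prices or transaction amounts, this approach has nothing to anchor on, which is the gap the synthetic data solution in the table is meant to address.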

How to generate synthetic test data using AI

Generate synthetic data for testing using a purpose-built, AI-powered synthetic data platform. Some teams opt to build their own synthetic data generators in-house, only to realize that the complexity of the job is way bigger than what they signed up for. MOSTLY AI’s synthetic test data generator offers a free forever option for those teams looking to introduce synthetic data into their test data strategy.

This online test data generator is extremely simple to use:

- Connect your source database

- Define the tables where you want to protect privacy

- Start the synthesization

- Save the synthetic data to your target database

The result is structurally representative, referentially intact data with support for relational structures. Knowing how to generate synthetic data starts with some basic data preparation. Fear not, it's easy and straightforward. Once you understand the principles, it will be a breeze.
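
Whichever generator you use, referential integrity of the output can be verified independently. The sketch below is one possible check, assuming a hypothetical two-table schema (`customers` and `transactions`) held in an in-memory SQLite database. The table names, column names, and the `orphaned_rows` helper are made up for illustration.

```python
import sqlite3


def orphaned_rows(conn, child, fk, parent, pk):
    """Return foreign key values in `child` that have no match in `parent`.

    Table and column names are interpolated directly, so only pass
    trusted identifiers.
    """
    query = (
        f"SELECT c.{fk} FROM {child} AS c "
        f"LEFT JOIN {parent} AS p ON c.{fk} = p.{pk} "
        f"WHERE p.{pk} IS NULL"
    )
    return [row[0] for row in conn.execute(query)]


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE transactions (
        id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL
    );
    INSERT INTO customers VALUES (1, 'A'), (2, 'B');
    -- Transaction 12 points at a customer that does not exist.
    INSERT INTO transactions VALUES (10, 1, 25.0), (11, 2, 40.0), (12, 3, 5.0);
""")

orphans = orphaned_rows(conn, "transactions", "customer_id", "customers", "id")
```

An empty result means every child row points at an existing parent row. Here the deliberately broken transaction is reported.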

Do you need a synthetic test data generator?

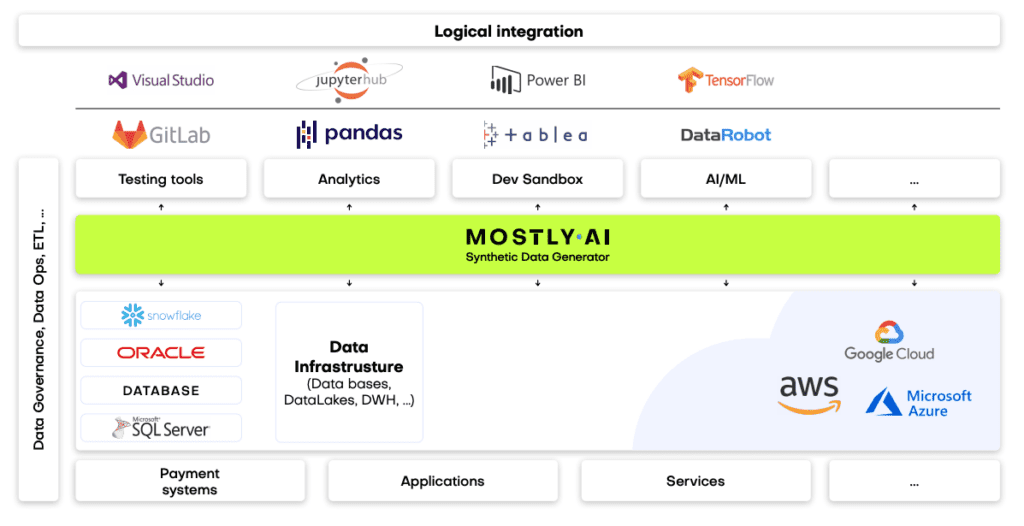

If you are a company building a modern data stack to work with data that contains PII (personally identifiable information), you need a high quality synthetic data generator. Why? Because AI-generated synthetic test data is a different level of beast where generating a few tables won’t cut it. To keep referential integrity, MOSTLY AI can directly connect to the most popular databases and synthesize directly from your database. If you are operating in the cloud, it makes even more sense to test with synthetic data for security purposes.

Synthetic test data advantages

Synthetic data is smarter

Thanks to the powerful learning capabilities of the AI, synthetic data offers better test coverage, resulting in fewer bugs and higher reliability. You’ll be able to test with real customer stories and improve customer experience with unprecedented accuracy. High-quality synthetic test data is mission-critical for the development of cutting edge digital products.

Synthetic data is faster

Accelerated data provisioning is a must-have for agile software development. Instead of tediously building a dataset manually, you can let AI do the heavy lifting for you in a fraction of the time.

Synthetic data is safer

Built-in privacy mechanisms prevent privacy leaks and protect your customers in the most vulnerable phases of development. Radioactive production data should never be in test environments in the first place, no matter how secure you think it is. Legacy anonymization techniques fail to provide privacy, so staying away from data masking and other simple techniques is a must.

Synthetic data is flexible

Synthesization is a process that can change the size of the data to match your needs. Upscale for performance testing or subset for a smaller, but referentially correct dataset.
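
To make "referentially correct subset" concrete, here is a minimal sketch of the idea on an already existing dataset: sample parent records first, then keep only the child records that belong to them. This is plain subsetting, not how a synthesizer resizes data, and the record layout and function name are assumptions for illustration.

```python
import random


def subset_with_integrity(customers, transactions, n, seed=0):
    """Sample n customers and keep only the transactions that reference them."""
    rng = random.Random(seed)
    kept_customers = rng.sample(customers, n)
    kept_ids = {customer["id"] for customer in kept_customers}
    kept_transactions = [
        tx for tx in transactions if tx["customer_id"] in kept_ids
    ]
    return kept_customers, kept_transactions


# Toy data: five customers with two transactions each.
customers = [{"id": i} for i in range(1, 6)]
transactions = [{"id": 100 + i, "customer_id": (i % 5) + 1} for i in range(10)]

small_customers, small_transactions = subset_with_integrity(
    customers, transactions, n=2
)
```

Sampling the child table directly would instead leave transactions whose customers are missing from the subset.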

Synthetic data is low-touch

Data provisioning can be easily automated by using MOSTLY AI’s Data Catalog function. You can save your settings and reuse them, allowing your team to generate data on-demand.

What’s wrong with how test data has been generated so far?

A lot of things. Test data management is riddled with costly bad habits. Quality, speed and productivity suffer unnecessarily if your team does any or all of the following:

1.) Using production data in testing

Just take a copy of production and pray that the insecure test environment won’t leak any of it. It’s more common than you’d think, but that doesn’t make it ok. It’s only a matter of time before something goes wrong and your company finds itself punished by customers and regulators for mishandling data. What’s more, actual data doesn’t cover all possible test cases and it’s difficult to test new features with data that doesn’t yet exist.

2.) Using legacy anonymization techniques

Contrary to popular belief, adding noise to the data, masking data or scrambling it doesn’t make it anonymous. These legacy anonymization techniques have been shown time and again to endanger privacy and destroy data utility at the same time. Anonymizing time-series, behavioral datasets, like transaction data, is notoriously difficult. Pro-tip: don’t even try, synthesize your data instead. Devs often have unrestricted access to production data in smaller companies, which is extremely dangerous. According to Gartner, 59% of privacy incidents originate with an organization’s own employees. It may not be malicious, but the result is just as bad.
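
To see why masking alone falls short, consider a linkage attack: names are removed, but the remaining attributes are matched against another dataset that still carries names. The sketch below uses entirely invented records and a hypothetical `link_records` helper. It illustrates the principle and is not a real attack tool.

```python
def link_records(masked, public, keys):
    """Re-identify masked rows whose quasi-identifiers match exactly one person."""
    index = {}
    for person in public:
        signature = tuple(person[k] for k in keys)
        index.setdefault(signature, []).append(person["name"])

    matches = {}
    for row in masked:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match means re-identification
            matches[row["record_id"]] = candidates[0]
    return matches


# "Masked" bank data: names removed, quasi-identifiers left intact.
masked = [
    {"record_id": "r1", "zip": "1010", "birth_year": 1980, "gender": "F", "balance": 5200},
    {"record_id": "r2", "zip": "1020", "birth_year": 1975, "gender": "M", "balance": 130},
    {"record_id": "r3", "zip": "1030", "birth_year": 1990, "gender": "F", "balance": 48000},
]
# Invented auxiliary dataset that an attacker might hold.
public = [
    {"name": "Alice Example", "zip": "1010", "birth_year": 1980, "gender": "F"},
    {"name": "Bob Example", "zip": "1020", "birth_year": 1975, "gender": "M"},
    {"name": "Carol Example", "zip": "1030", "birth_year": 1990, "gender": "F"},
    {"name": "Dana Example", "zip": "1030", "birth_year": 1990, "gender": "F"},
]

reidentified = link_records(masked, public, ["zip", "birth_year", "gender"])
```

Two of the three masked records are tied back to a name. The third is protected only because two people happen to share the same attributes.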

3.) Generating fake data

Another very common approach is to use fake data generators like Mockito or Mockaroo. While there are some test cases, like in the case of a brand new feature, when fake data is the only solution, it comes with serious limitations. Building datasets out of thin air costs companies a lot of time and money. Using scripts built in-house or mock data generation tools takes a lot of manual work and the result is far from sophisticated. It’s cumbersome to recreate all the business rules of production data by hand, while AI-powered synthetic data generators learn and retain them automatically. What’s more, individual data points might be semantically correct, but there is no real "information" coming with fake data. It's just random data after all. The biggest problem with generating fake data is the maintenance cost. You can start testing a new application with fake data, but updating it will be a challenge. Real data changes and evolves while your mock test data will become legacy quickly.
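
The point about missing business rules can be illustrated with a toy mock generator that draws every field independently. The record layout, the `mock_account` helper, and the rule that an account cannot close before it opens are all assumptions made for this sketch.

```python
import random


def mock_account(rng):
    """Build one mock record with each field drawn independently."""
    return {
        "opened_day": rng.randint(0, 364),
        "closed_day": rng.randint(0, 364),
        "balance": round(rng.uniform(-1000, 1000), 2),
    }


def violates_rule(account):
    """Assumed business rule: an account cannot close before it opens."""
    return account["closed_day"] < account["opened_day"]


rng = random.Random(42)
accounts = [mock_account(rng) for _ in range(1000)]
violations = sum(violates_rule(account) for account in accounts)
```

Every value is individually plausible, yet a large share of the records breaks the rule, because nothing in the generator knows the fields are related. Encoding each such rule by hand is the manual work described above.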

4.) Using fake customers or users to generate test data

If you have an army of testers, you could make them behave like end users and create production-like data through those interactions. It takes time and a lot of effort, but it could work if you are willing to throw enough resources in. Similarly, your employees could become these testers. However, test coverage will be limited and outside your control. If you need a quick solution for a small app, it could be worth a try, but protecting your employees’ privacy is still important.

5.) Canary releases for performance and regression tests

Some app developers push a release to a small subset of their users first and consider performance and regression testing done. While canary testing can save you time and money in the short run, long term your user base might not appreciate the bugs they encounter on your live app. What’s more, there is no guarantee that all issues will be detected.

It’s time to develop healthy test data habits! AI-generated synthetic test data is based on production data. As a result, the data structure is 100% correct and it’s really easy to generate on-demand. What’s more, you can create even more varied data than with production data covering unseen test cases. If you choose a mature synthetic data platform, like MOSTLY AI’s, built-in privacy mechanisms will guarantee safety. The only downside you have to keep in mind is that for new features you’ll still have to create mock data, since AI-generated synthetic data needs to be based on already existing data.

Test data management in different types of testing

FUNCTIONAL TESTING

Unit testing

Unit tests check the smallest units of code, typically a single function. Mock data generators are often used for unit tests. However, AI-generated synthetic data can also work if you take a small subset of big original production datasets.

Integration testing

The next step in development is integrating the smallest units. The goal is to expose defects. Integration testing typically takes place in environments populated with meaningful, production-like test data. AI-generated synthetic data is the best choice, since relationships of the original data are kept without privacy sensitive information.

Interface testing

The application’s UI needs to be tested through all possible customer interactions and customer data variations. Synthetic customers can provide the necessary realism for UI testing and expose issues dummy data couldn’t.

System testing

System testing examines the entire application with different sets of inputs. Connected systems are also tested in this phase. As a result, realistic data can be mission-critical to success. Since data leaks are the most likely to occur in this phase, synthetic data is highly recommended for system testing.

Regression testing

Adding a new component could break old ones. Automated regression tests are the way forward for those wanting to stay on top of issues. Maximum test data coverage is desirable and AI-generated synthetic data offers just that.

User acceptance testing

In this phase, end-users test the software in alpha and beta tests. Contract and regulatory testing also falls under this category. Here, suppliers and vendors or regulators test applications. Demo data is frequently used at this stage. Populating the app with hyper-realistic synthetic data can make the product come to life, increasing the likelihood of acceptance.

NON-FUNCTIONAL TESTING

Documentation testing

The documentation detailing how to use the product needs to match how the app works in reality. The documentation of data-intensive applications often contains dataset examples for which synthetic data is a great choice.

Installation testing

Installation testing is the last phase of testing before the end-user takes over. It tests the installation process itself to see if it works as expected.

Performance testing

Performance testing checks how a product behaves in terms of speed and reliability. The data used in performance testing must be very close to the original. That’s why a lot of test engineers use production data treated with legacy anonymization techniques. However, these old-school technologies, like data masking and generalization, both destroy insights and come with very weak privacy.

Security testing

Security testing’s goal is to find risks and vulnerabilities. The test data used in security testing needs to cover authentication, like usernames and passwords as well as databases and file structures. High-quality AI-generated synthetic data is especially important to use for security testing.

Test data checklist

- Adopt an engineering mindset to test data across your team.

- Automate test data generation as much as you can.

- Develop a test data as a service mindset and provide on-demand access to privacy safe synthetic data sandboxes.

- Use meaningful, AI-generated smart synthetic test data whenever you can.

- Get management buy-in for modern privacy enhancing technologies (PETs) like synthetic data.

TL;DR: Synthetic financial data is the fuel banks need to become AI-first and to create cutting-edge services. In this report, you can read about:

- banking technology trends in 2022 from superapps to personalized digital banking

- data privacy legislations affecting the banking industry in 2022

- the challenges in AI/ML development, testing and data sharing that synthetic data can solve

- the most valuable data science and synthetic data use cases in banking: customer acquisition and advanced analytics, mortgage analytics, credit decisioning and limit assessment, risk management and pricing, fraud and anomaly detection, cybersecurity, monitoring and collections, churn reduction, servicing and engagement, enterprise data sharing, and synthetic test data for digital banking product development

- synthetic data engineering: how to integrate synthetic data in financial data architectures

Table of Contents

- Banking technology trends

- The state of data privacy in banking in 2022

- The most valuable synthetic data use cases in banking

- Synthetic data for AI, advanced analytics, and machine learning

- Synthetic data for enterprise data sharing

- Synthetic test data for digital banking products

- How to integrate synthetic data generators into financial systems?

- The future of financial data

Banks and financial institutions are aware of their data and innovation gaps and AI-generated synthetic data is their best bet. According to Gartner:

By 2030, 80 percent of heritage financial services firms will go out of business, become commoditized or exist only formally but not competing effectively.

A pretty dire prophecy, but nonetheless realistic, with small neobanks and big tech companies eyeing their market. There is no way to run but forward.

The future of banking is all about becoming AI-first and creating cutting-edge digital services coupled with tight cybersecurity. In the race to a tech-forward future, most consultants and business prophets forget about step zero: customer data. In this blog post, we will give an overview of the data science use cases in banking and attempt to offer solutions throughout the data lifecycle. We'll concentrate on the easiest to deploy and highest value synthetic data use cases in banking. We'll cover three clusters of synthetic data use cases: data sharing; AI, advanced analytics, and machine learning; and software testing. But before we dive into the details, let's talk about the banking trends of today.

Banking technology trends

The pandemic accelerated digital transformation, and the new normal is here to stay. According to Deloitte, 44% of retail banking customers use their bank's mobile app more often. At Nubank, a Brazilian digital bank, the number of accounts rose by 50%, going up to 30 million. It is no longer the high-street branch that will decide the customer experience. Apps become the new high-touch, flagship branches of banks where the stakes are extremely high. If the app works seamlessly and offers personalized banking, customer lifetime value increases. If the app has bugs, frustration drives customers away. Service design is an excellent framework for creating distinctive personalized digital banking experiences. Designing the data is where it should all start.

A high-quality synthetic data generator is one mission-critical piece of the data design tech stack. Initially a privacy-enhancing technology, synthetic data generators can generate representative copies of datasets. Statistically the same, yet none of the synthetic data points match the original. Beyond privacy, synthetic data generators are fantastic data augmentation tools too. Synthetic data is the modeling clay that makes this data design process possible. Think moldable test data and training data for machine learning models based on real production data.
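
The claim that no synthetic data point matches the original suggests a basic sanity check: count how many synthetic rows are exact copies of an original row. The sketch below uses invented records and a hypothetical `exact_match_share` helper. An exact-match check is only a first filter and is not a privacy guarantee on its own.

```python
def exact_match_share(original, synthetic):
    """Return the fraction of synthetic rows that exactly copy an original row."""
    original_rows = {tuple(sorted(row.items())) for row in original}
    copies = sum(
        tuple(sorted(row.items())) in original_rows for row in synthetic
    )
    return copies / len(synthetic)


original = [
    {"age": 34, "city": "Vienna"},
    {"age": 51, "city": "Graz"},
]
# The second synthetic row is a deliberate copy, to show what the check catches.
synthetic = [
    {"age": 36, "city": "Vienna"},
    {"age": 51, "city": "Graz"},
    {"age": 29, "city": "Linz"},
    {"age": 47, "city": "Graz"},
]

share = exact_match_share(original, synthetic)
```

A share of zero is what the description above would lead you to expect. Any value above zero, as in this deliberately flawed example, deserves a closer look.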

The rise of superapps is another major trend financial institutions should watch out for. Building or joining such ecosystems makes absolute sense if banks think of them as data sources. Data ecosystems are also potential spaces for customer acquisition. With tech giants entering the market with payment and retail banking products, data protectionism is rising. However, locking up data assets is counterproductive, limiting collaboration and innovation. Sharing data is the only way to unlock new insights. Especially for banks, whose presence in their customers' lives is not easy to scale unless via collaborations and new generation digital services. Insurance providers and telecommunications companies are the first obvious candidates. Other beyond-banking service providers could also be great partners, from car rental companies to real estate services, legal support, and utility providers. Imagine a mortgage product that comes with a full suite of services needed throughout a property purchase. Banks need to create a frictionless, hyper-personalized customer experience to harness all the data that comes with it.

Another vital part of this digital transformation story is AI adoption. In banking, it's already happening. According to McKinsey,

"The most commonly used AI technologies (in banking) are: robotic process automation (36 percent) for structured operational tasks; virtual assistants or conversational interfaces (32 percent) for customer service divisions; and machine learning techniques (25 percent) to detect fraud and support underwriting and risk management."

It sounds like banks are running full speed ahead into an AI future, but the reality is more complicated than that. Due to the legacy infrastructures of financial institutions, the challenges are numerous. Usually, there is either no clear strategy or only fragmented ones with no enterprise-wide scale. Different business units operate almost completely cut off with limited collaboration and practically no data sharing. These fragmented data assets are the single biggest obstacle to AI adoption. McKinsey estimates that AI technologies could potentially deliver up to $1 trillion of additional value in banking each year. It is well worth the effort to unlock the data AI and machine learning models so desperately need. Let's take a look at the number one reason, or rather excuse, banks and financial institutions hide behind when it comes to AI/AA/ML innovation: data privacy.

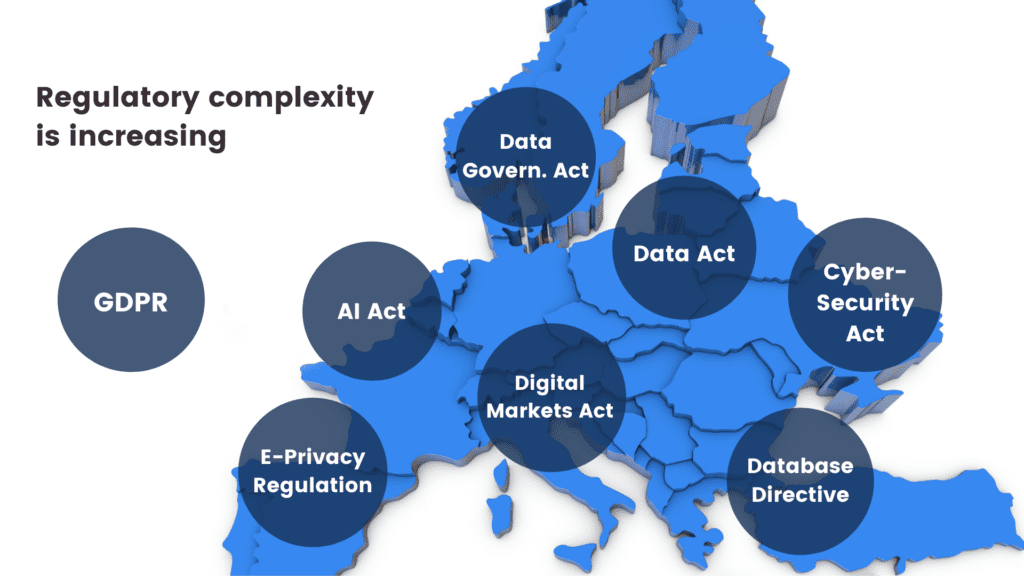

The state of data privacy in banking in 2022

Banks have always been the trustees of customer privacy. Keeping data and insights tightly secured has prevented banks from becoming data-centric institutions. What's more, an increasingly complex and restrictive legislative landscape makes it difficult to comply globally.

Let’s be clear. The ambition to secure customer data is the right one. Banks must take security seriously, especially in an increasingly volatile cybersecurity environment. However, this cannot take place at the expense of innovation. The good news is that there are tools to help. Privacy-enhancing technologies (PETs) are crucial ingredients of a tech-forward banking capability stack. It's high time for banking executives, CIOs, and CDOs to get rid of their digital banking blindspots. Banks must stop using legacy data anonymization tools that endanger privacy and hinder innovation. Data anonymization methods, like randomization, permutation, and generalization, carry a high risk of re-identification or destroy data utility.

Maurizio Poletto, Chief Platform Officer at Erste Group Bank AG, said in The Executive's Guide to Accelerating Artificial Intelligence and Data Innovation with Synthetic Data:

"In theory, in banking, you could take real account data, scramble it, and then put it into your system with real numbers, so it's not traceable. The problem is that obfuscation is nice, and anonymization is nice, but you can always find a way to get the original data back. We need to be thorough and cautious as a bank because it is sensitive data. Synthetic data is a good way to continue to create value and experiment without having to worry about privacy, particularly because society is moving toward better privacy. This is just the beginning, but the direction is clear."

Modern PETs include AI-generated synthetic data, homomorphic encryption, and federated learning. They offer the way out of the data dilemma in banking. Data innovators in banking should choose the appropriate PET for the appropriate use case. Encryption solutions should be considered when it is necessary to decrypt the original data. Anonymized computation, such as federated learning, is a great choice when models can get trained on users' mobile phones. AI-generated synthetic data is the most versatile privacy-enhancing technology with just one limitation: synthetic datasets generated by AI models trained on original data cannot be reverted to the original. Synthetic datasets are statistically identical to the original datasets they were modeled on. However, there is no 1:1 relationship between the original and the synthetic data points. This is the very definition of privacy. As a result, AI-generated synthetic data is great for specific use cases: advanced analytics, AI and machine learning training, software testing, and sharing realistic but unencryptable datasets. Synthetic data is not a good choice for use cases where the data needs to be reverted to the original, such as information sharing for anti-money laundering purposes, where perpetrators need to be re-identified. Let's look at a comprehensive overview of the most valuable synthetic data use cases in banking!

The most valuable synthetic data use cases in banking

Synthetic data generators come in many shapes and forms. In the following, we will be referring to MOSTLY AI's synthetic data generator. It is the market-leading synthetic data solution able to generate synthetic data with high accuracy. MOSTLY AI's synthetic data platform comes with advanced features, such as direct database connection and the ability to synthesize complex data structures with referential integrity. As a result, MOSTLY AI can serve the broadest range of use cases with suitably generated synthetic data. In the following, we will detail the lowest hanging synthetic data fruits in banking. These are the use cases we have seen to work well in practice and generate a high ROI.

| | CHALLENGES | HOW CAN SYNTHETIC DATA HELP? |
|---|---|---|
| AI/AA/ML | | |
| TESTING | | |
| DATA SHARING | | |

Synthetic data for AI, advanced analytics, and machine learning

Synthetic data for AI/AA/ML is one of the richest use case categories with many high-value applications. According to Gartner, by 2024, 60% of the data used for the development of AI and analytics projects will be synthetically generated. Machine learning and AI unlock a range of business benefits for retail banks.

- Advanced analytics improves customer acquisition by optimizing the marketing engine with hyper-personalized messages and precise next best actions.

- Intelligence from the very first point of contact increases customer lifetime value.

- Operating costs will be lower if decision-making in acquisition and servicing is supported with well-trained machine learning algorithms. Lower credit risk is also a benefit that comes from early detection of behaviors that signal a higher risk of default.

Automated, personalized decisions across the entire enterprise can increase competitiveness. The data backbone, the appropriate tools, and talent need to be in place to make this happen. Synthetic data generation is one of those capabilities essential for an AI-first bank to develop. The reliability and trustworthiness of AI is a neglected issue. According to Gartner:

65% of companies can't explain how specific AI model decisions or predictions are made. This blindness is costly. AI TRiSM tools, such as MOSTLY AI's synthetic data platform, provide the Trust, Risk and Security Management needed for effective explainability, ModelOps, anomaly detection, adversarial attack resistance and data protection. Companies need to develop these new capabilities to serve new needs arising from AI adoption.

From explainability to performance improvement, synthetic data generators are one of the most valuable building tools. Data science teams need synthetic data to succeed with AI and machine learning use cases. Here is how to use synthetic data in the most common AI banking applications.

CUSTOMER ACQUISITION AND ADVANCED ANALYTICS

CRM data is the single most valuable data asset for customer acquisition and retention. It is a wonderful, rich asset that holds personal data and behavioral data of the bank's future prospects. However, due to privacy legislation, up to 80% of CRM data tends to be locked away. Compliant CRM data for advanced analytics and machine learning applications is hard to come by. Banks either comply with regulations and refrain from developing a modern martech platform altogether or break the rules and hope to get away with it. There is a third option. Synthetic customer data is as good as real when it comes to training machine learning models. Insights from this type of analytics can help identify new prospects and improve sign-up rates significantly.

MORTGAGE ANALYTICS, CREDIT DECISIONING AND LIMIT ASSESSMENT

AI in lending is a hot topic in finance. Banks want to reach out to the right people with the right mortgage and credit products. In order to increase precision in targeting, a lot of personal data is needed. The more complete the customer data profile, the more intelligent mortgage analytics becomes. Better models bring lower risk both for the bank and for the customer. Rule-based or logistic regression models rely on a narrow set of criteria for credit decision-making. Banks without advanced behavioral analytics and models underserve a large segment of customers. People lacking formal credit histories or deviating from typical earning patterns are excluded. AI-first banks utilize huge troves of alternative data sources. Modern data sources include social media, browsing history, telecommunications usage data, and more. However, using these highly personal data sources in their original form for training AI models is often a challenge. Legacy data anonymization techniques destroy the very insights the model needs. Synthetic data versions retain all of these insights. Thanks to the granular, feature-rich nature of synthetic data, lending solutions can use all the intelligence.

RISK MANAGEMENT AND PRICING

Pricing and risk prediction models are some of the most important models to get right. Even a small improvement in their performance can lead to significant savings and/or higher revenues. Injecting additional domain knowledge into these models, such as synthetic geolocation data or synthetic text from customer conversations, significantly improves the model's ability to quantify a customer's propensity to default. MOSTLY AI's ability to provide the accuracy needed to generate synthetic geolocation data has been proven already. Synthetic text data can be used for training machine learning models in a compliant way on transcripts of customer service interactions. Virtual loan officers can automate the approval of low-risk loans reliably.

It is also mission-critical to be able to provide insight into the behavior of these models. Local interpretability is the best approach for explainable AI today, and synthetic data is a crucial ingredient of this transparency.

FRAUD AND ANOMALY DETECTION

Fraud is one of the most interesting AI/ML use cases. Fraud and money laundering operations are incredibly versatile, getting more and more sophisticated every day. Adversaries also rely heavily on automation to find weaknesses in financial systems. It's impossible to keep up using only rule-based systems and manual follow-ups. False positives cost a lot of money to investigate, so it's imperative to continuously improve precision with the help of machine learning models. To make matters even more challenging, fraud profiles vary widely between banks. The same recipe for catching fraudulent transactions might not work for every financial institution. Using machine learning to detect fraud and anomaly patterns for cybersecurity is one of the first synthetic data use cases banks usually explore. The fraud detection use case goes way beyond privacy and takes advantage of the data augmentation possibility during synthesization. Maurizio Poletto, CPO at Erste Group Bank, recommends synthetic data upsampling to improve model performance:

Synthetic data can be used to train AI models for scenarios for which limited data is available—such as fraud cases. We could take a fraud case using synthetic data to exaggerate the cluster, exaggerate the amount of people, and so on, so the model can be trained with much more accuracy. The more cases you have, the more detailed the model can be.

Training and retraining models with synthetic data can improve fraud detection model performance, leading to valuable savings on investigating false positives.
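To make the upsampling idea concrete, here is a minimal sketch in Python. It is not how a synthetic data generator works internally: the function `upsample_minority`, the field names `amount` and `is_fraud`, and the jittered-copy approach are all assumptions made for illustration. A trained generator would create new fraud cases from learned patterns, whereas this stand-in only adds slightly perturbed copies of existing ones until fraud reaches a chosen share of the dataset.

```python
import random


def upsample_minority(rows, label_key, minority_label, numeric_key, target_share, seed=0):
    """Append jittered copies of minority rows until they reach target_share.

    Crude stand-in for a synthetic data generator: new rows are noisy copies
    of existing minority rows, not samples from a learned distribution.
    """
    if not 0 < target_share < 1:
        raise ValueError("target_share must be between 0 and 1")
    rng = random.Random(seed)
    minority = [r for r in rows if r[label_key] == minority_label]
    if not minority:
        raise ValueError("no minority rows to upsample")

    result = list(rows)  # original rows are kept untouched
    minority_count = len(minority)
    while minority_count / len(result) < target_share:
        base = rng.choice(minority)
        new_row = dict(base)
        # Perturb the numeric field by up to +/-10% so copies are not identical.
        new_row[numeric_key] = round(base[numeric_key] * rng.uniform(0.9, 1.1), 2)
        result.append(new_row)
        minority_count += 1
    return result


# Hypothetical toy dataset: 98 legitimate transactions and 2 fraud cases.
transactions = (
    [{"amount": 20.0 + i, "is_fraud": 0} for i in range(98)]
    + [{"amount": 950.0, "is_fraud": 1}, {"amount": 1200.0, "is_fraud": 1}]
)
balanced = upsample_minority(transactions, "is_fraud", 1, "amount", target_share=0.25)
fraud_share = sum(r["is_fraud"] for r in balanced) / len(balanced)
print(f"{len(balanced)} rows, fraud share {fraud_share:.2f}")
```

Only the training set should be rebalanced this way. Evaluation data should keep the original class distribution, otherwise precision and false-positive estimates will be misleading.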

MONITORING AND COLLECTIONS

Transaction analysis for risk monitoring is one of the most privacy-sensitive AI use cases banks need to be able to handle. Apart from traditional monitoring data, like repayment history and credit bureau reports, banks should be looking to utilize new data sources, such as time-series bank data, complete transaction history, and location data. Machine learning models trained with these extremely sensitive datasets can reliably microsegment customers according to value at risk and introduce targeted interventions to prevent defaults. These highly sensitive and valuable datasets cannot be used for AI/ML training without effective anonymization. MOSTLY AI's synthetic data generator is one of the best on the market when it comes to synthesizing complex time-series and behavioral data, such as transactions, with high accuracy. Behavioral synthetic data is one of the most difficult synthetic data categories to get right, and without a sophisticated AI engine, like MOSTLY AI's, results won't be accurate enough for such use cases.

CHURN REDUCTION, SERVICING, AND ENGAGEMENT

Another high-value use case for synthetic behavioral data is customer retention. A wide range of tools can be put to good use throughout a customer's lifetime, from identifying less engaged customers to crafting personalized messages and product offerings. The success of those tools hinges on the level of personalization and accuracy the initial training data allows. Pattern recognition is where machine learning models are most powerful. ML's ability to identify microsegments no analyst would ever recognize is astonishing, especially when fed with synthetic transaction data. Synthetic data can also serve as a bridge of intelligence between different lines of business: private banking and business banking data can be a powerful combination to provide further intelligence, but strictly in synthetic form. The same applies to national or legislative borders: analytics projects with global scope can be a reality when the foundation is 100% GDPR-compliant synthetic data.

ALGORITHMIC TRADING

Financial institutions can use synthetic data to generate realistic market data for training and validating algorithmic trading models, reducing the reliance on historical data that may not always represent future market conditions. This can lead to improved trading strategies and increased profitability.

STRESS TESTING

Banks can use synthetic data to create realistic scenarios for stress testing, allowing them to evaluate their resilience to various economic and financial shocks. This helps ensure the stability of the financial system and boosts customer confidence in the institution's ability to withstand adverse conditions.

Synthetic data for enterprise data sharing

Open financial data is the ultimate form of data sharing. According to McKinsey, economies embracing financial data sharing could see GDP gains of between 1 and 5 percent by 2030, with benefits flowing to consumers and financial institutions. More data means better operational performance, better AI models, more powerful analytics, and customer-centric digital banking products facilitating omnichannel experiences. The idea of open data cannot become a reality without a robust, accurate, and safe data privacy standard shared by all industry players in finance and beyond. This is a vision shared by Erste Group Bank's Chief Platform Officer:

Imagine if we in banking use synthetic data to generate realistic and comparable data from our customers, and the same thing is done by the transportation industry, the city, the insurance company, and the pharmaceutical company, and then you give all this data to someone to analyze the correlation between them. Because the relationship between well-being, psychological health, and financial health is so strong, I think there is a fantastic opportunity around the combination of mobility, health, and finance data.

It's an ambitious plan, and like all grand designs, it's best to start building the elements early. At this point, most banks are still struggling with internal data sharing, with distinct business lines acting as separate entities and being data protectionist when open data is the way forward. Banks and financial institutions share little intelligence, citing data privacy and legislation as their main concern. However, data sharing might just become an obligation very soon with the EU putting data altruism on the map in the upcoming Data Governance Act. While sharing personal data will remain strictly forbidden and increasingly so, anonymized data sharing will be expected of companies in the near future. In the U.S., healthcare insurance companies and service providers are already legally bound to share their data with other healthcare providers. The same requirement makes a lot of sense in banking too, where so much depends on credit history and risk prediction. While some data is shared, intelligence is still withheld. Cross-border data sharing is also a major challenge in banking. Subsidiaries either operate in a completely siloed way or share data illegally. According to Axel von dem Bussche, Partner at Taylor Wessing and IT lawyer, as much as 95% of international data sharing is illegal due to the invalidation of the EU-US Privacy Shield by the Schrems II decision.

Some organizations fly analysts and data scientists to the off-shore data to avoid risky and forbidden cross-border data sharing. It doesn't have to be this complicated. Synthetic data sharing is compliant with all privacy laws across the globe. Setting up synthetic data sandboxes and repositories can solve enterprise-wide data sharing across borders since synthetic data does not qualify as personal data. As a result, it is out of scope of GDPR and the infamous Schrems II ruling, which effectively prohibited all sharing of personal data outside the EU.

Third-party data sharing within the same legislative domain is also problematic. Banks buy many third-party AI solutions from vendors without adequately testing the solutions on their own data. The data used in procurement processes is hard to get, which causes costly delays, and it is heavily masked to prevent sensitive data leaks through third parties. The result is often bad business decisions and out-of-the-box AI solutions that fail to deliver the expected performance. Synthetic data sandboxes are great tools for speeding up and optimizing POC processes, saving 80% of the cost.

Synthetic test data for digital banking products

One of the most common data sharing use cases is connected to developing and testing digital banking apps and products. Banks accumulate tons of apps, continuously developing them, onboarding new systems, and adding new components. Manually generating test data for such complex systems is a hopeless task, and many revert to the old dangerous habit of using production data for testing systems. Banks and financial institutions tend to be more privacy-conscious, but their solutions to this conundrum are still suboptimal. Time and time again, we see reputable banks and financial institutions roll out apps and digital banking services after only testing them with heavily masked or manually generated data. One-cent transactions and mock data generators won't get you far when customer expectations for seamless digital experiences are sky-high.

To complicate things further, complex application development is rarely done in-house. Data owners and data consumers are not the same people, nor do they have the full picture of test scenarios and business rules. Labs and third-party dev teams rely on the bank to share meaningful test data with them, which simply does not happen. Even if testing is kept in-house, data access is still problematic. While in other, less privacy-conscious industries, developers and test engineers use radioactive test data in non-production environments, banks leave testing teams to their own devices. Manual test data generation with tools like Mockaroo and the now infamous Faker library misses most of the business rules and edge cases so vital for robust testing practices. Dynamic test users for notification and trigger testing are also hard to come by. To put it simply, it's impossible to develop intelligent banking products without intelligent test data. The same goes for the testing of AI and machine learning models. Testing those models with synthetically simulated edge cases is extremely important, both when developing from scratch and when recalibrating models to avoid drift. Models are as good as the training data, and testing is as good as test data. Payment applications with or without personalized money management solutions need the synthetic approach: realistic synthetic test data and edge case simulations with dynamic synthetic test users. Synthetic test data is fast to generate and can create smaller or larger versions of the same dataset as needed throughout the testing pyramid, from unit testing through integration and UI testing to end-to-end testing.
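The point about missed business rules can be illustrated with a small Python sketch. Everything in it is hypothetical: the account fields, the two rules in `RULES`, and the generator `naive_mock_account`, which stands for any simple mock-data script that samples each field independently. It does not reproduce the behavior of a specific library.

```python
import random
from datetime import date, timedelta


def naive_mock_account(rng):
    """Sample every field independently, ignoring relationships between fields."""
    start = date(2015, 1, 1)
    return {
        "account_type": rng.choice(["basic", "premium"]),
        "overdraft_limit": rng.choice([0, 500, 2000]),
        "opened": start + timedelta(days=rng.randrange(3000)),
        "closed": start + timedelta(days=rng.randrange(3000)),
    }


# Hypothetical business rules a real banking system might enforce.
RULES = {
    "closed_after_opened": lambda a: a["closed"] >= a["opened"],
    "overdraft_only_for_premium": lambda a: (
        a["account_type"] == "premium" or a["overdraft_limit"] == 0
    ),
}


def count_violations(accounts):
    """Return, per rule, how many accounts break it."""
    return {
        name: sum(1 for a in accounts if not rule(a))
        for name, rule in RULES.items()
    }


rng = random.Random(42)
accounts = [naive_mock_account(rng) for _ in range(1000)]
violations = count_violations(accounts)
print(violations)
```

Because the fields are drawn independently, a large share of the mock accounts break rules that production records always satisfy. Data generated from a model trained on real records would be expected to carry such cross-field relationships with it, which is the gap the paragraph above describes.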

Erste Bank's main synthetic data use case is test data management. The bank is creating synthetic segments and communities, building new features, and testing how certain types of customers would react to these features.

Normally, the data we use is static. We see everything from the past. But features like notifications and triggers—like receiving a notification when your salary comes in—can only be tested with dynamic test users. With synthetic data, you push a button to generate that user with an unlimited number of transactions in the past and a limited number of transactions in the future, and then you can put into your system a user which is alive.

These live, synthetic users can stand in for production data and provide a level of realism unheard of before while protecting customers' privacy. The Norwegian Data Protection Authority issued a fine for using production data in testing, adding that using synthetic data instead would have been the right course to take.
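As a rough illustration of the dynamic test user described in the quote, the Python sketch below builds a user whose transaction history extends past "today", so that a salary notification can be triggered in a test. The function names, field names, amounts, and the rule that salary arrives on the first of the month are assumptions for this example only. A real synthetic data generator would learn such patterns from data instead of hard-coding them.

```python
import random
from datetime import date, timedelta


def build_dynamic_user(user_id, today, days_back=90, days_ahead=30, seed=0):
    """Create a test user with past transactions and a limited number of future ones."""
    rng = random.Random(seed)
    transactions = []
    for offset in range(-days_back, days_ahead + 1):
        day = today + timedelta(days=offset)
        if day.day == 1:
            # Assumed rule: salary is credited on the first of each month.
            transactions.append({"date": day, "type": "salary", "amount": 3000.00})
        elif rng.random() < 0.5:
            amount = -round(rng.uniform(5, 120), 2)
            transactions.append({"date": day, "type": "card_payment", "amount": amount})
    return {"user_id": user_id, "transactions": transactions}


def salary_events_between(user, start, end):
    """Salary credits dated after start and up to and including end."""
    return [
        t for t in user["transactions"]
        if t["type"] == "salary" and start < t["date"] <= end
    ]


today = date(2024, 5, 20)
user = build_dynamic_user("test-user-001", today)
upcoming = salary_events_between(user, today, today + timedelta(days=30))
print([t["date"].isoformat() for t in upcoming])
```

With these settings the only upcoming salary credit falls on 2024-06-01. A notification test can advance its clock to that date and check that the alert fires, something a static snapshot of past data cannot support.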

Testing is becoming a continuous process. Deploying fast and iterating early is the new mantra of DevOps teams. Setting up CI/CD (continuous integration and delivery) pipelines for continuous testing cannot happen without a stable flow of high-quality test data. Synthetic data generators trained on real data samples can provide just that – up-to-date, realistic, and flexible data generation on-demand.

How to integrate synthetic data generators into financial systems?

First and foremost, it's important to understand that not all synthetic data generators are created equal. It's particularly important to select the right synthetic data vendor who can match the financial institution's needs. If a synthetic data generator is inaccurate, the resulting synthetic datasets can lead your data science team astray. If it's too accurate, the generator overfits or learns the training data too well and could accidentally reproduce some of the original information from the training data. Open-source options are also available. However, the control over quality is fairly low. Until a global standard for synthetic data arrives, it's important to proceed with caution when selecting vendors. Opt for synthetic data companies that already have extensive experience with sensitive financial data and know how to integrate synthetic data successfully with existing infrastructures.
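One simple sanity check for the overfitting risk mentioned above is to measure how many synthetic records are exact copies of training records. The Python sketch below is a minimal version of that idea. The function name `share_of_copied_rows` and the sample records are hypothetical, and an exact-match test is only a first line of defense: more thorough evaluations typically also compare distances between synthetic and real records, and in low-cardinality data some exact matches can occur by chance.

```python
def share_of_copied_rows(training_rows, synthetic_rows):
    """Fraction of synthetic rows that are identical to some training row."""
    if not synthetic_rows:
        raise ValueError("synthetic_rows must not be empty")
    # Convert each record to a hashable, order-independent key.
    seen = {tuple(sorted(row.items())) for row in training_rows}
    copied = sum(1 for row in synthetic_rows if tuple(sorted(row.items())) in seen)
    return copied / len(synthetic_rows)


# Hypothetical records for illustration only.
training = [
    {"age": 34, "balance": 1200},
    {"age": 51, "balance": 560},
    {"age": 29, "balance": 80},
]
synthetic = [
    {"age": 33, "balance": 1185},
    {"age": 51, "balance": 560},  # identical to a training record
    {"age": 47, "balance": 910},
    {"age": 30, "balance": 95},
]
copied_share = share_of_copied_rows(training, synthetic)
print(f"share of copied rows: {copied_share:.2f}")
```

A share well above what chance would explain suggests the generator has memorized training data, and the dataset should not be treated as privacy-safe without further review.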

The future of financial data is synthetic

Our team at MOSTLY AI is working with large banks and financial organizations very closely. We know that synthetic data will be the data transformation tool that will change the financial data landscape forever, enabling the flow and agility necessary for creating competitive digital services. While we know that the direction is towards synthetic data across the enterprise, we know full well how difficult it is to introduce new technologies and disrupt the status quo in enterprises, even if everyone can see the benefits. One of the most important tasks of anyone looking to make a difference with synthetic data is to prioritize use cases in accordance with the needs and possibilities of the organization. Analytics use cases with the biggest impact can serve as flagship projects, establishing the foundations of synthetic data adoption. In most organizations, mortgage analytics, pricing, and risk prediction use cases can generate the highest immediate monetary value, while synthetic test data can massively accelerate the improvement of customer experience and reduce compliance and cybersecurity risk. It's good practice to establish semi-independent labs for experimentation and prototyping: Erste Bank's George Lab is a prime example of how successful digital banking products can be born of such ventures. The right talent is also a crucial ingredient of success. According to Erste Bank's CPO, Maurizio Poletto:

Talented data engineers want to spend 100% of their time in data exploration and value creation from data. They don't want to spend 50% of their time on bureaucracy. If we can eliminate that, we are better able to attract talent. At the moment, we may lose some, or they are not even coming to the banking industry because they know it's a super-regulated industry, and they won't have the same freedom they would have in a different industry.

Once you have the attraction of a state-of-the-art tech stack enabling agile data practices, you can start building cross-functional teams and capabilities across the organization. The data management status quo needs to be disrupted, and privacy, security, and data agility champions will do the groundwork. Legacy data architectures keeping banks and financial institutions back from innovating and endangering customers' privacy need to be dealt with soon. The future of data-driven banking is bright, and that future is synthetic.

We talked to test engineers, QA leads, test automation experts and CTOs to figure out what their most common test data generation issues were. There are some common themes, and it's clear that the test data space is ready for some AI help.

The biggest day-to-day test data challenges

Enterprise architectures are not prepared to provide useful test data for software development. From an organizational point of view, test data tends to be the proverbial hot potato no one is willing to handle. The lack of quality test data results in longer development times and suboptimal product quality. But what makes this potato too hot to touch? The answer lies in the complexity of test data provisioning. To find out what the most common blockers are for test architects, we first mapped out how test data is generated today.

1. Copy production data and pray for forgiveness

Let's face it. Lots of dev teams do this. With their eyes half-closed, they copy the production data to staging and hope against the odds that all will be fine. It never is, though. Even if you are lucky enough to dodge a cyberattack, 59% of privacy incidents originate in-house, and most often, they are not even intentional.

Our advice for these copy-pasting daredevils is simple: do not do that. Ever. Take your production data out of non-production environments and do it fast.

2. Use legacy data anonymization, like data masking or obfuscation, and destroy the data in the process

Others in more privacy-conscious industries, like insurance and banking, use legacy data anonymization techniques on production data. As a direct consequence of data masking, obfuscation, and the like, they struggle with data quality issues. They have neither the amount nor the breadth of data they need to meaningfully test systems. Not to mention the privacy risk these seemingly safe and arguably widespread practices bring. Test engineers are not supposed to be data scientists well versed in the nuances of data anonymization. Nor are they necessarily aware of the internal and external regulations regarding data privacy. In reality, lots of test engineers just delete some columns they flagged as personally identifiable information (PII) and call it anonymized. Many test data creation tools out there do pretty much the same automatically, conveniently forgetting that simply masking PII does not make data privacy-safe.
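Why deleting PII columns is not enough can be shown with a short Python sketch. The customer records, the choice of quasi-identifiers (`zip_code`, `birth_year`, `gender`), and the helper `count_unique_on` are assumptions for illustration. The idea is that the remaining columns, taken together, can still single out an individual even after names and emails are gone.

```python
from collections import Counter


def count_unique_on(rows, quasi_identifiers):
    """Count rows whose combination of quasi-identifier values appears only once."""
    keys = [tuple(row[q] for q in quasi_identifiers) for row in rows]
    counts = Counter(keys)
    return sum(1 for key in keys if counts[key] == 1)


# Hypothetical production-like records.
customers = [
    {"name": "Ana", "email": "ana@example.com", "zip_code": "1010", "birth_year": 1985, "gender": "F"},
    {"name": "Ben", "email": "ben@example.com", "zip_code": "1010", "birth_year": 1985, "gender": "M"},
    {"name": "Cleo", "email": "cleo@example.com", "zip_code": "1020", "birth_year": 1990, "gender": "F"},
    {"name": "Dan", "email": "dan@example.com", "zip_code": "1020", "birth_year": 1990, "gender": "F"},
]

# "Anonymize" the way the paragraph describes: drop the columns flagged as PII.
pii_columns = {"name", "email"}
masked = [{k: v for k, v in row.items() if k not in pii_columns} for row in customers]

still_unique = count_unique_on(masked, ["zip_code", "birth_year", "gender"])
print(f"{still_unique} of {len(masked)} masked records are still unique")
```

In this toy dataset, two of the four masked records remain unique on the combination of the three remaining fields, so anyone who knows those attributes about a person can re-identify their row. Real datasets with many more columns tend to make this easier, not harder.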

3. Manually create test data

Manual test data creation has its place in projects where entirely new applications with no data history need to be tested. However, as most testers can attest, it is a cumbersome method with lots of limitations. Mock data libraries are handy tools, but can’t solve everything. Highly differentiated test data, for example, is impossible to construct by hand. Oftentimes, offshore development teams have no other choice but to generate random data themselves. The resulting test data doesn't represent production and lacks a balance of positive cases, negative cases, and unlikely edge cases. A successful and stress-free go-live is out of reach both for these offshore teams and their home crew. Even when QA engineers crack the test data issues perfectly at first, keeping the test data consistent and up-to-date is a constant struggle. Things change, often. Test data generation needs to be flexible and dynamic enough to keep up with the constantly moving goalposts. Application updates introduce new or changed inputs and outputs, and test data often fails to capture these changes.

The tragic heroes of software testing and development

It's clear that data issues permeate the day-to-day work of test engineers. They deal with these as best as they can, but it does look like they are often set up for unsolvable challenges and sleepless nights. In order to generate good quality test data, they need to understand both the product and its customers. Their attention to detail needs to border on unhealthy perfectionism. Strong coding skills need to be paired with exceptional analytical and advanced data science knowledge with a generous hint of privacy awareness. It looks like the good people of testing could use some sophisticated AI help.

What does the future of AI-generated test data look like?

Good test data can be generated without thinking about it and on the fly. Good test data is available in abundance, covering real-life scenarios as well as highly unlikely edge cases. Good test data leads to quantifiable, meaningful outcomes. Good test data is readily available when using platforms for test automation. AI to the rescue! Instead of figuring out the nuances of logic and painstakingly crafting datasets by hand, test engineers can use AI-generated synthetic data to increase product quality without spending more time on solving data issues. AI-generated synthetic data will become an important piece of the testing toolbox. Just like mock data libraries, synthetic data generators will be a natural part of the test data generation process.

As one of our QA friends put it, he would like AI "to impersonate an array of different people and situations, creating consistent feedback on system reliability as well as finding circumstantial errors." We might just be able to make his dreams come true.

From where we stand, the test data of the future looks and feels like production data but is actually synthetic. Read more about the synthetic data use case for testing and software development!