In this tutorial, you will learn the key concepts behind MOSTLY AI’s synthetic data Quality Assurance (QA) framework. This will enable you to efficiently and reliably assess the quality of your generated synthetic datasets. It will also give you the skills to confidently explain the quality metrics to any interested stakeholders.

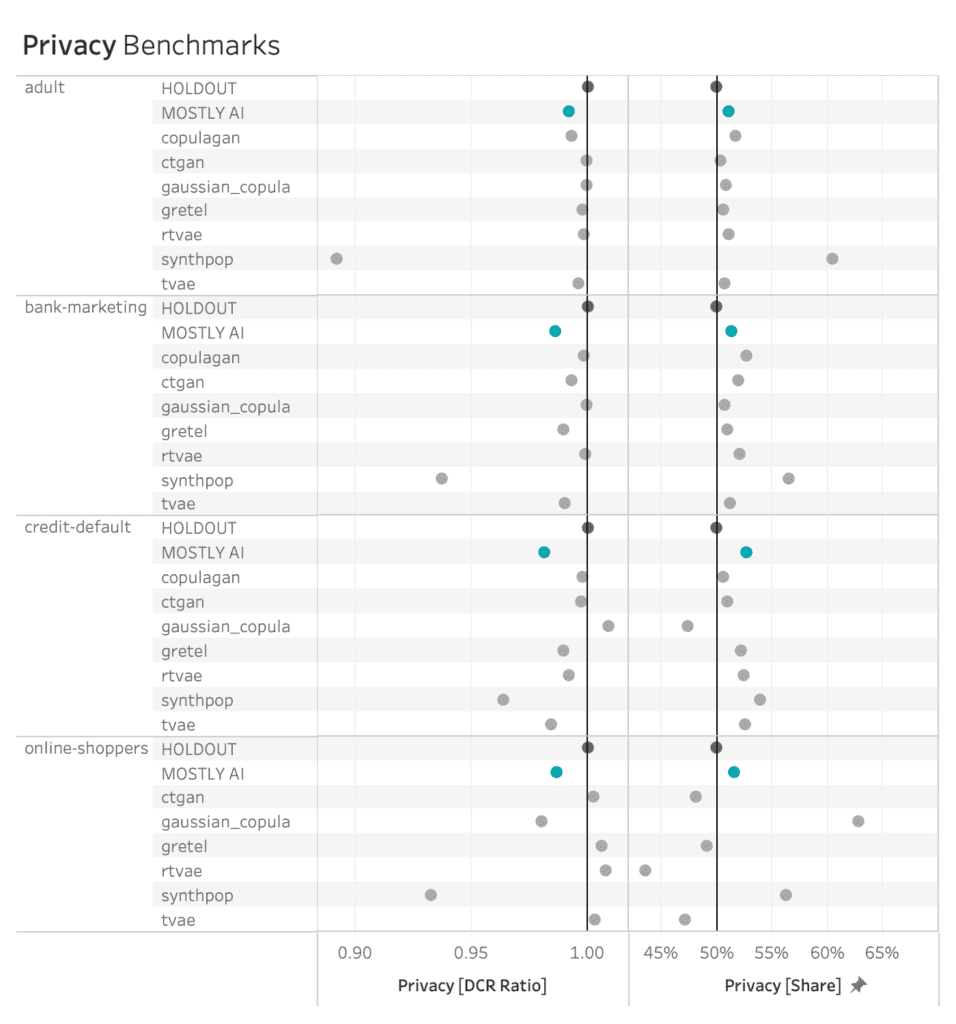

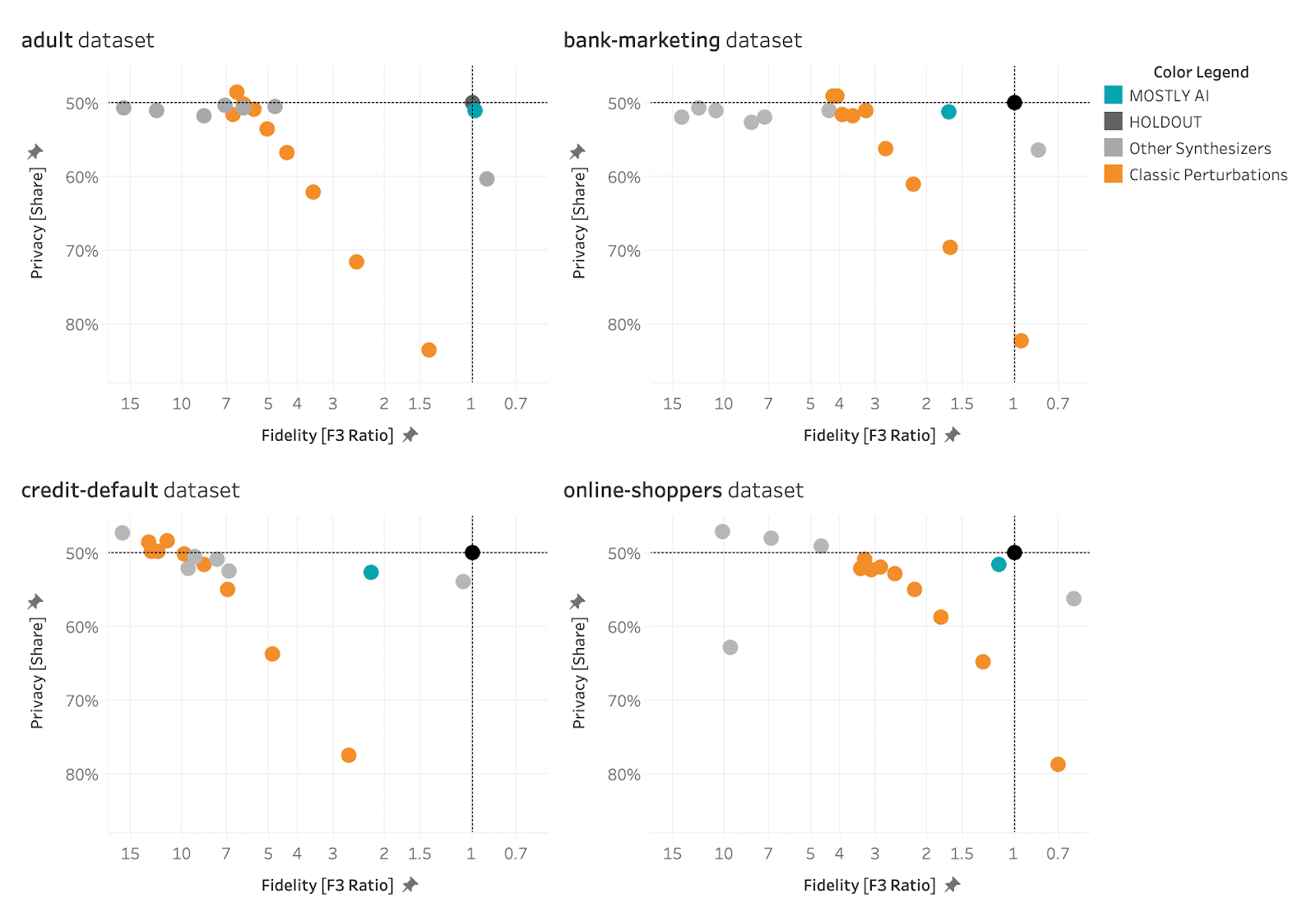

Using the code in this tutorial, you will replicate key parts of both the accuracy and privacy metrics that you will find in any MOSTLY AI QA Report. For a full-fledged exploration of the topic including a detailed mathematical explanation, see our peer-reviewed journal paper as well as the accompanying benchmarking study.

The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

QA reports for synthetic data sets

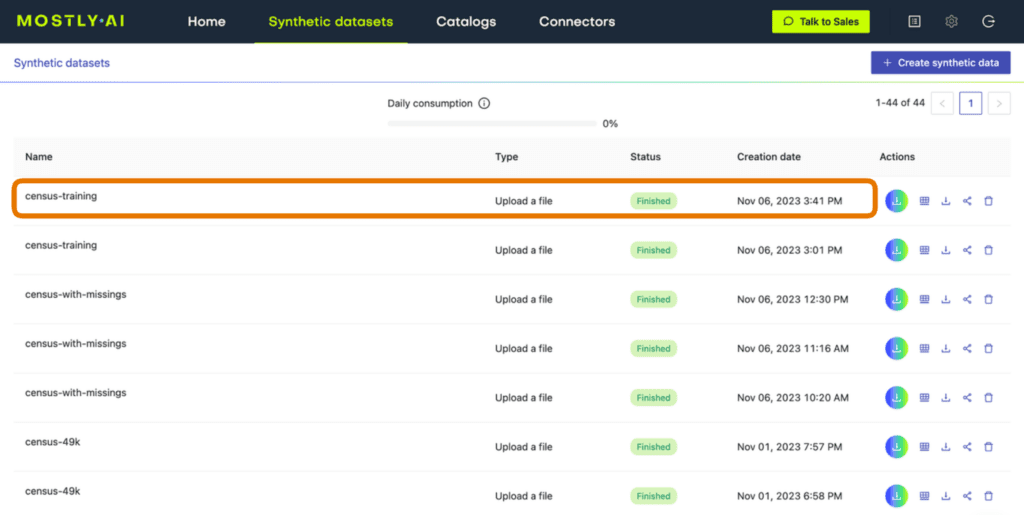

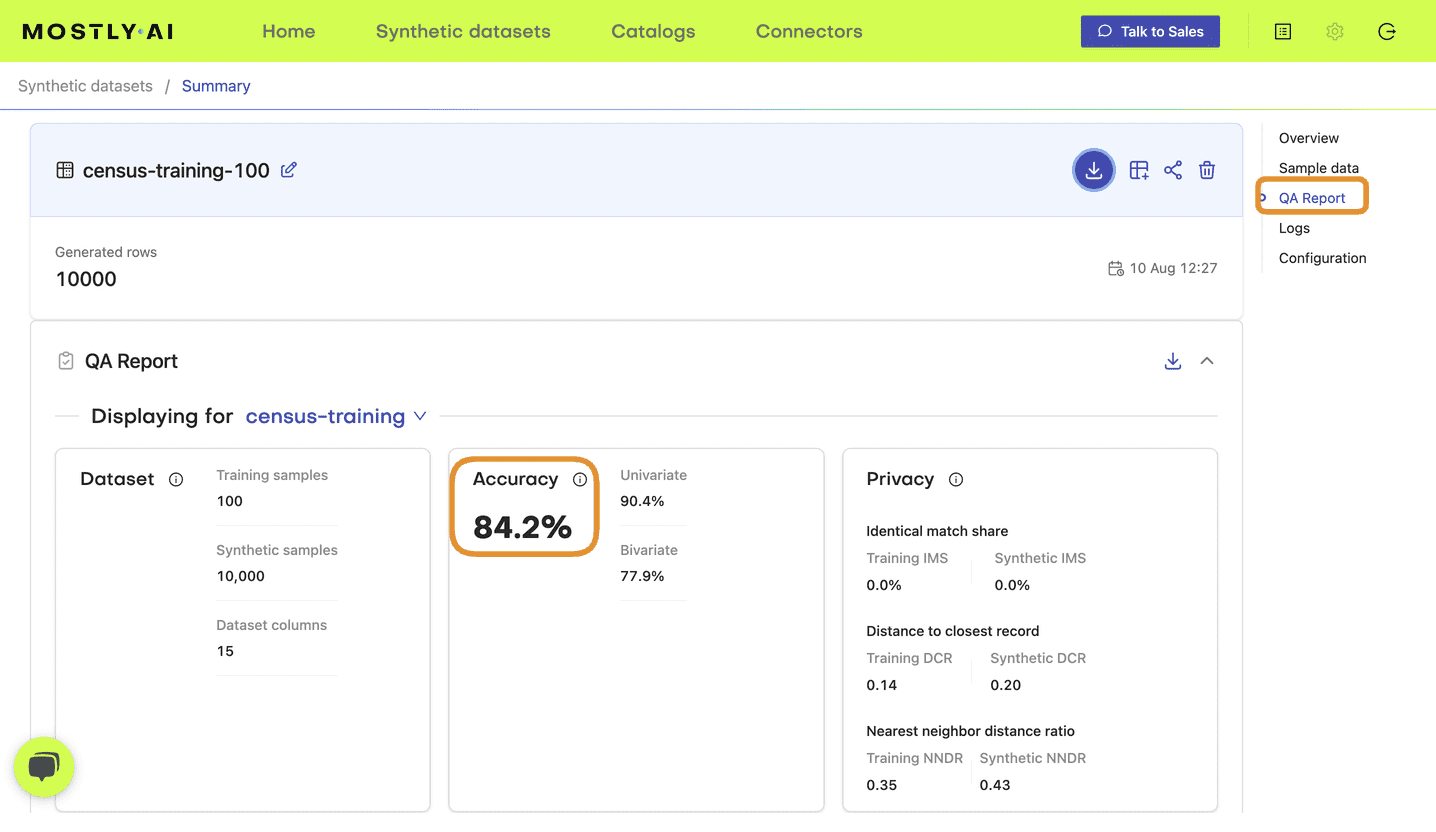

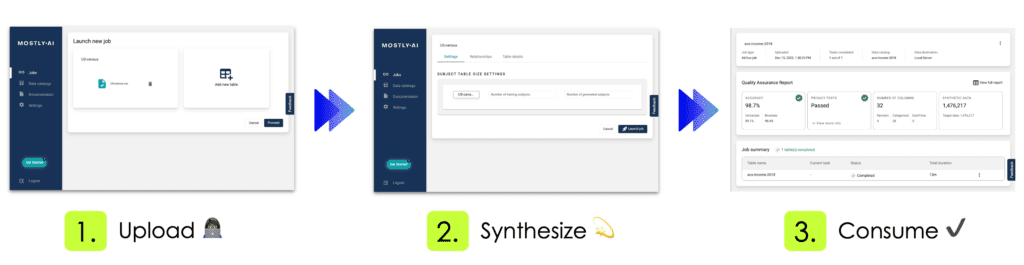

If you have run any synthetic data generation jobs with MOSTLY AI, chances are high that you’ve already encountered the QA Report. To access it, click on any completed synthesization job and select the “QA Report” tab:

Fig 1 - Click on a completed synthesization job.

Fig 2 - Select the “QA Report” tab.

At the top of the QA Report you will find some summary statistics about the dataset as well as the average metrics for accuracy and privacy of the generated dataset. Further down, you can toggle between the Model QA Report and the Data QA Report. The Model QA reports on the accuracy and privacy of the trained Generative AI model. The Data QA, on the other hand, visualizes the distributions not of the underlying model but of the outputted synthetic dataset. If you generate a synthetic dataset with all the default settings enabled, the Model and Data QA Reports should look the same.

Exploring either of the QA reports you will discover various performance metrics, such as univariate and bivariate distributions for each of the columns and well as more detailed privacy metrics. You can use these metrics to precisely evaluate the quality of your synthetic dataset.

So how does MOSTLY AI calculate these quality assurance metrics?

In the following sections you will replicate the accuracy and privacy metrics. The code is almost exactly the code that MOSTLY AI runs under the hood to generate the QA Reports – it has been tweaked only slightly to improve legibility and usability. Working through this code will give you a hands-on insight into how MOSTLY AI evaluates synthetic data quality.

Preprocessing the data

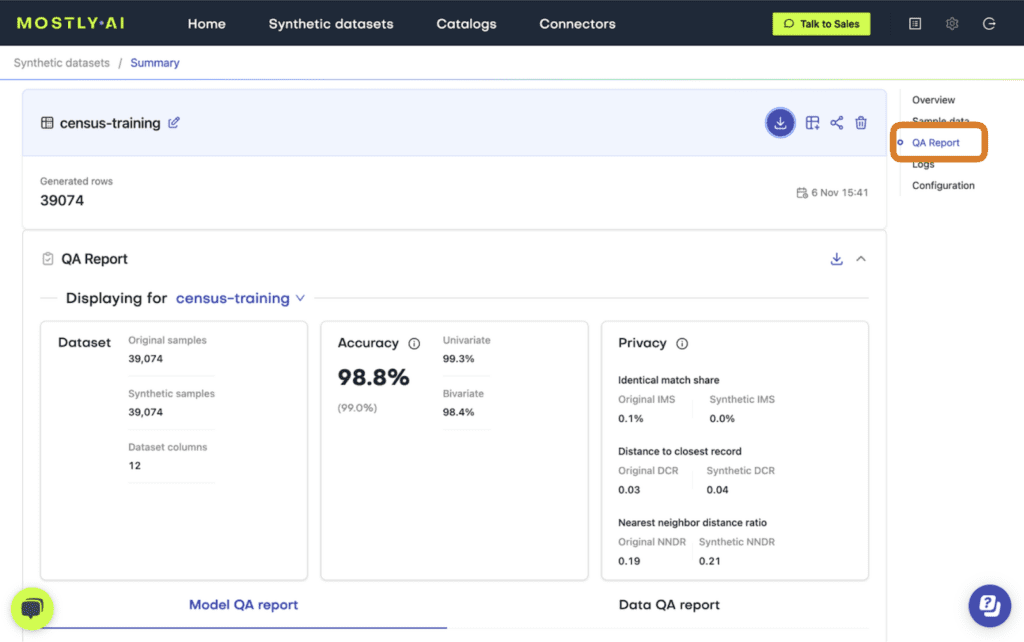

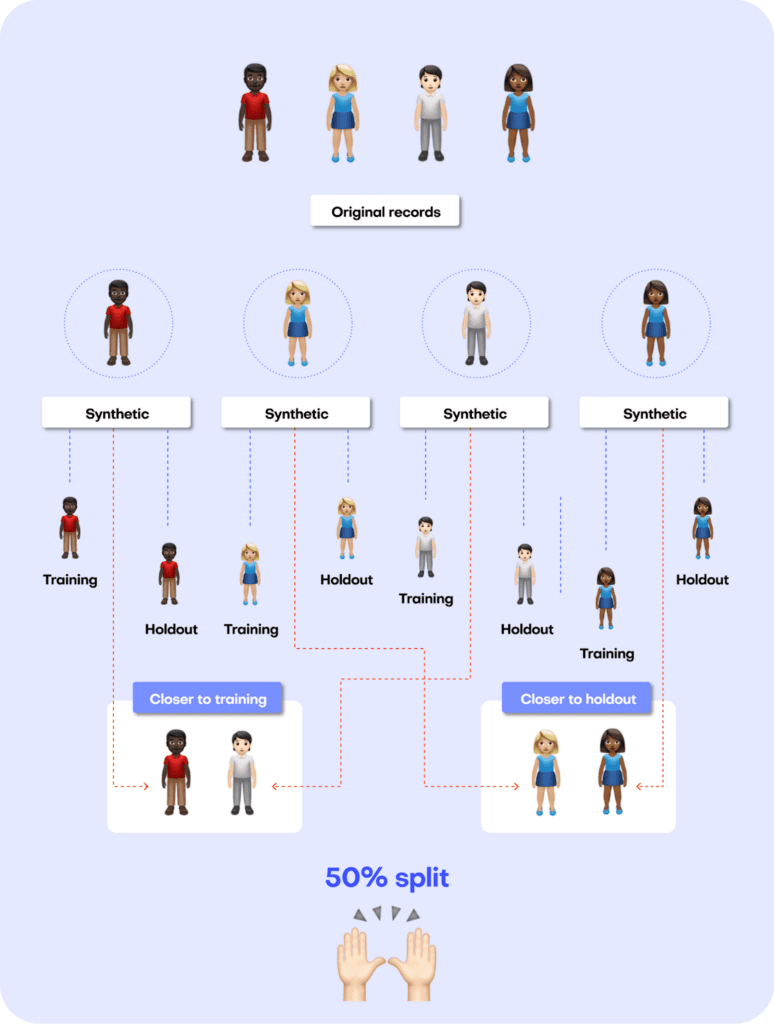

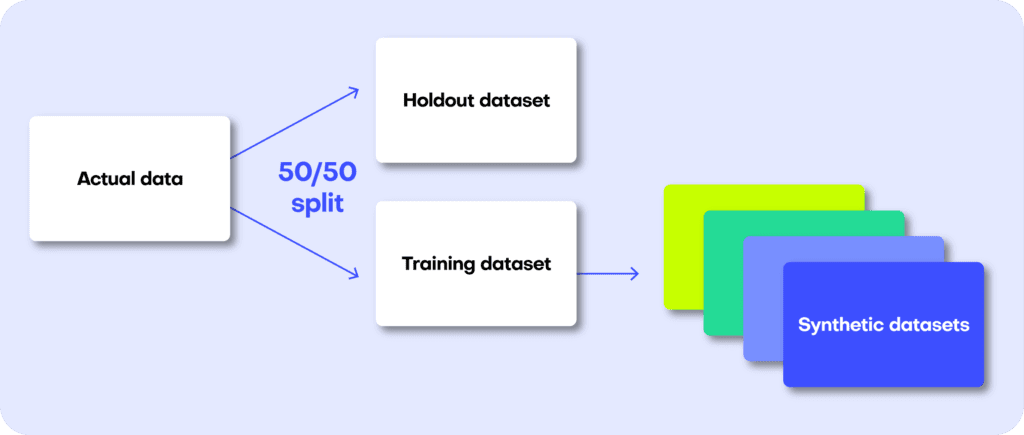

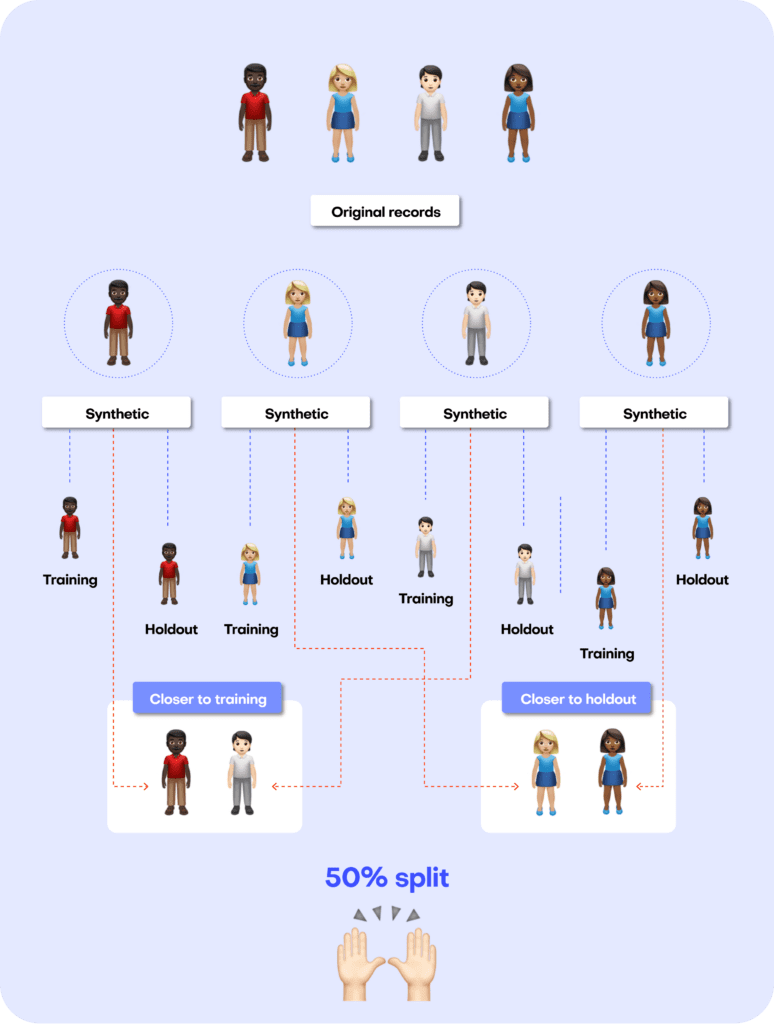

The first step in MOSTLY AI’s synthetic data quality evaluation methodology is to take the original dataset and split it in half to yield two subsets: a training dataset and a holdout dataset. We then use only the training samples (so only 50% of the original dataset) to train our synthesizer and generate synthetic data samples. The holdout samples are never exposed to the synthesis process but are kept aside for evaluation.

Fig 3 - The first step is to split the original dataset in two equal parts and train the synthesizer on only one of the halves.

Distance-based quality metrics for synthetic data generation

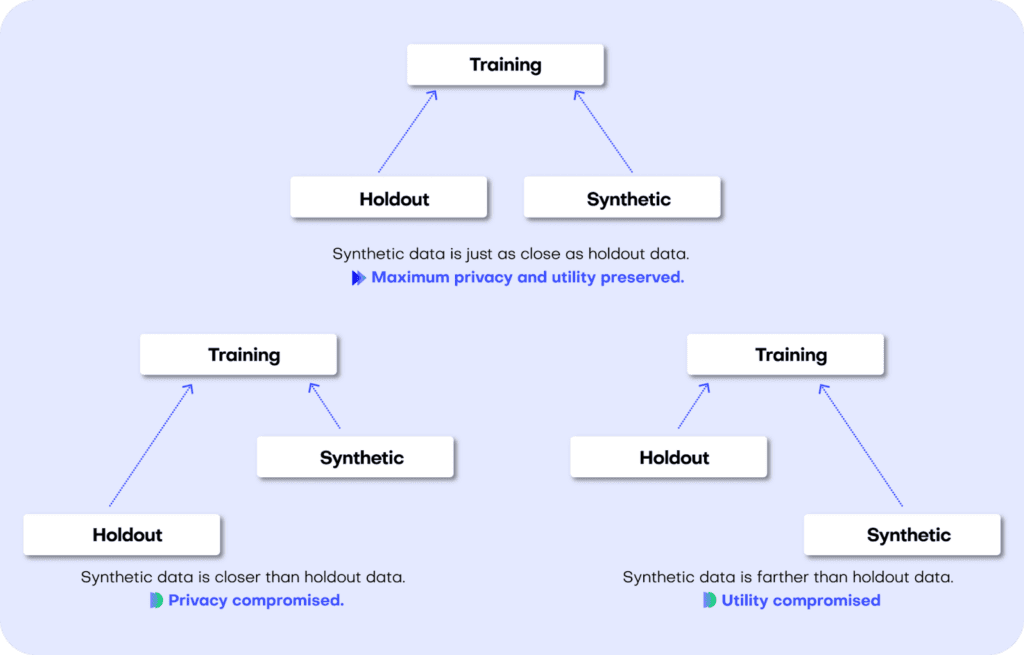

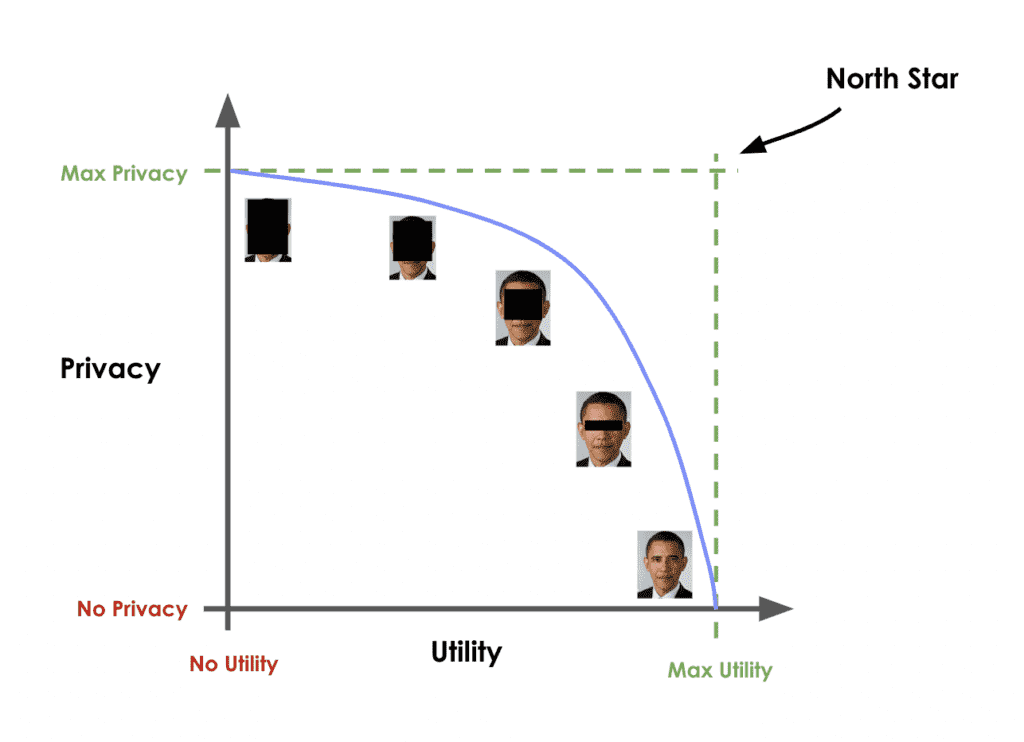

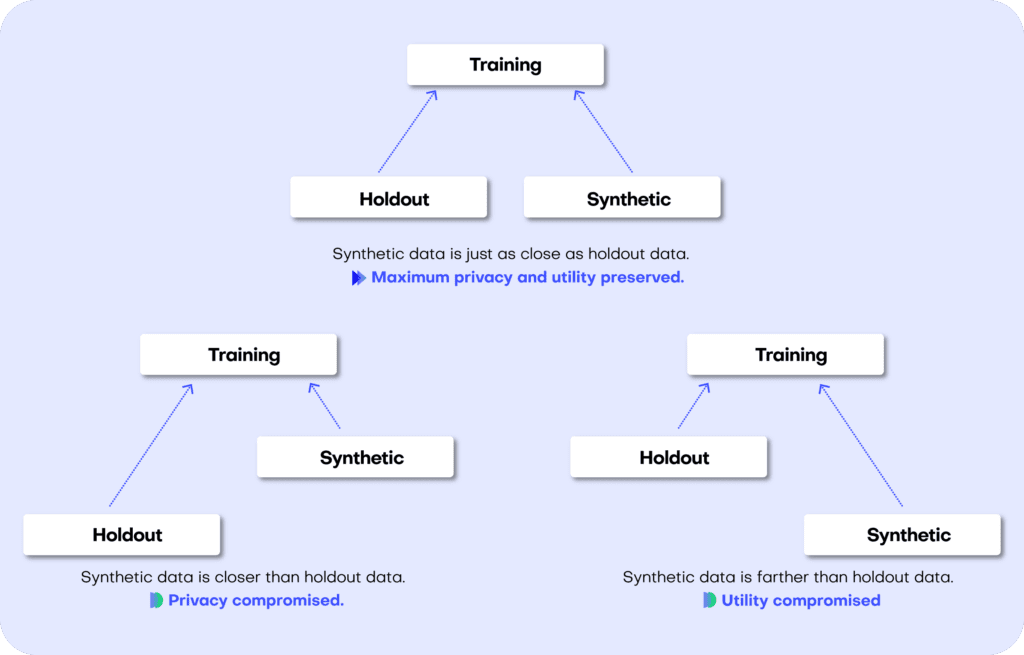

Both the accuracy and privacy metrics are measured in terms of distance. Remember that we split the original dataset into two subsets: a training and a holdout set. Since these are all samples from the same dataset, these two sets will exhibit the same statistics and the same distributions. However, as the split was made at random we can expect a slight difference in the statistical properties of these two datasets. This difference is normal and is due to sampling variance.

The difference (or, to put it mathematically: the distance) between the training and holdout samples will serve us as a reference point: in an ideal scenario, the synthetic data we generate should be no different from the training dataset than the holdout dataset is. Or to put it differently: the distance between the synthetic samples and the training samples should approximate the distance we would expect to occur naturally within the training samples due to sampling variance.

If the synthetic data is significantly closer to the training data than the holdout data, this means that some information specific to the training data has leaked into the synthetic dataset. If the synthetic data is significantly farther from the training data than the holdout data, this means that we have lost information in terms of accuracy or fidelity.

For more context on this distance-based quality evaluation approach, check out our benchmarking study which dives into more detail.

Fig 4 - A perfect synthetic data generator creates data samples that are just as different from the training data as the holdout data. If this is not the case, we are compromising on either privacy or utility.

Let’s jump into replicating the metrics for both accuracy and privacy 👇

Synthetic data accuracy

The accuracy of MOSTLY AI’s synthetic datasets is measured as the total variational distance between the empirical marginal distributions. It is calculated by treating all the variables in the dataset as categoricals (by binning any numerical features) and then measuring the sum of all deviations between the empirical marginal distributions.

The code below performs the calculation for all univariate and bivariate distributions and then averages across to determine the simple summary statistics you see in the QA Report.

First things first: let’s access the data. You can fetch both the original and the synthetic datasets directly from the Github repo:

repo = (

"https://github.com/mostly-ai/mostly-tutorials/raw/dev/quality-assurance"

)

tgt = pd.read_parquet(f"{repo}/census-training.parquet")

print(

f"fetched original data with {tgt.shape[0]:,} records and {tgt.shape[1]} attributes"

)

syn = pd.read_parquet(f"{repo}/census-synthetic.parquet")

print(

f"fetched synthetic data with {syn.shape[0]:,} records and {syn.shape[1]} attributes"

)fetched original data with 39,074 records and 12 attributes

fetched synthetic data with 39,074 records and 12 attributes

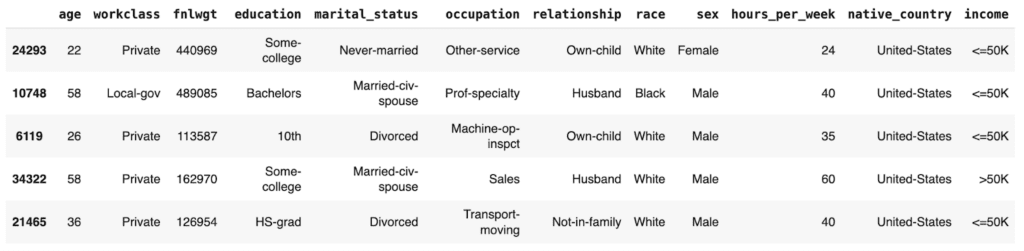

We are working with a version of the UCI Adult Income dataset. This dataset has just over 39K records and 12 columns. Go ahead and sample 5 random records to get a sense of what the data looks like:

tgt.sample(n=5)

Let’s define a helper function to bin the data in order treat any numerical features as categoricals:

def bin_data(dt1, dt2, bins=10):

dt1 = dt1.copy()

dt2 = dt2.copy()

# quantile binning of numerics

num_cols = dt1.select_dtypes(include="number").columns

cat_cols = dt1.select_dtypes(

include=["object", "category", "string", "bool"]

).columns

for col in num_cols:

# determine breaks based on `dt1`

breaks = dt1[col].quantile(np.linspace(0, 1, bins + 1)).unique()

dt1[col] = pd.cut(dt1[col], bins=breaks, include_lowest=True)

dt2_vals = pd.to_numeric(dt2[col], "coerce")

dt2_bins = pd.cut(dt2_vals, bins=breaks, include_lowest=True)

dt2_bins[dt2_vals < min(breaks)] = "_other_"

dt2_bins[dt2_vals > max(breaks)] = "_other_"

dt2[col] = dt2_bins

# top-C binning of categoricals

for col in cat_cols:

dt1[col] = dt1[col].astype("str")

dt2[col] = dt2[col].astype("str")

# determine top values based on `dt1`

top_vals = dt1[col].value_counts().head(bins).index.tolist()

dt1[col].replace(

np.setdiff1d(dt1[col].unique().tolist(), top_vals),

"_other_",

inplace=True,

)

dt2[col].replace(

np.setdiff1d(dt2[col].unique().tolist(), top_vals),

"_other_",

inplace=True,

)

return dt1, dt2And a second helper function to calculate the univariate and bivariate accuracies:

def calculate_accuracies(dt1_bin, dt2_bin, k=1):

# build grid of all cross-combinations

cols = dt1_bin.columns

interactions = pd.DataFrame(

np.array(np.meshgrid(cols, cols)).reshape(2, len(cols) ** 2).T

)

interactions.columns = ["col1", "col2"]

if k == 1:

interactions = interactions.loc[

(interactions["col1"] == interactions["col2"])

]

elif k == 2:

interactions = interactions.loc[

(interactions["col1"] < interactions["col2"])

]

else:

raise ("k>2 not supported")

results = []

for idx in range(interactions.shape[0]):

row = interactions.iloc[idx]

val1 = (

dt1_bin[row.col1].astype(str) + "|" + dt1_bin[row.col2].astype(str)

)

val2 = (

dt2_bin[row.col1].astype(str) + "|" + dt2_bin[row.col2].astype(str)

)

# calculate empirical marginal distributions (=relative frequencies)

freq1 = val1.value_counts(normalize=True, dropna=False).to_frame(

name="p1"

)

freq2 = val2.value_counts(normalize=True, dropna=False).to_frame(

name="p2"

)

freq = freq1.join(freq2, how="outer").fillna(0.0)

# calculate Total Variation Distance between relative frequencies

tvd = np.sum(np.abs(freq["p1"] - freq["p2"])) / 2

# calculate Accuracy as (100% - TVD)

acc = 1 - tvd

out = pd.DataFrame(

{

"Column": [row.col1],

"Column 2": [row.col2],

"TVD": [tvd],

"Accuracy": [acc],

}

)

results.append(out)

return pd.concat(results)Then go ahead and bin the data. We restrict ourselves to 100K records for efficiency.

# restrict to max 100k records

tgt = tgt.sample(frac=1).head(n=100_000)

syn = syn.sample(frac=1).head(n=100_000)

# bin data

tgt_bin, syn_bin = bin_data(tgt, syn, bins=10)Now you can go ahead and calculate the univariate accuracies for all the columns in the dataset:

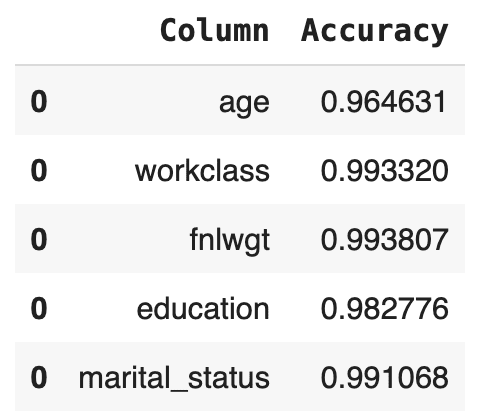

# calculate univariate accuracies

acc_uni = calculate_accuracies(tgt_bin, syn_bin, k=1)[['Column', 'Accuracy']]Go ahead and inspect the first 5 columns:

acc_uni.head()

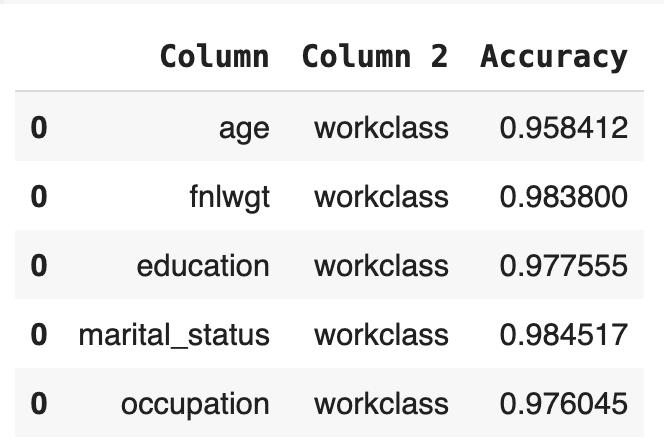

Now let’s calculate the bivariate accuracies as well. This measures how well the relationships between all the sets of two columns are maintained.

# calculate bivariate accuracies

acc_biv = calculate_accuracies(tgt_bin, syn_bin, k=2)[

["Column", "Column 2", "Accuracy"]

]

acc_biv = pd.concat(

[

acc_biv,

acc_biv.rename(columns={"Column": "Column 2", "Column 2": "Column"}),

]

)

acc_biv.head()

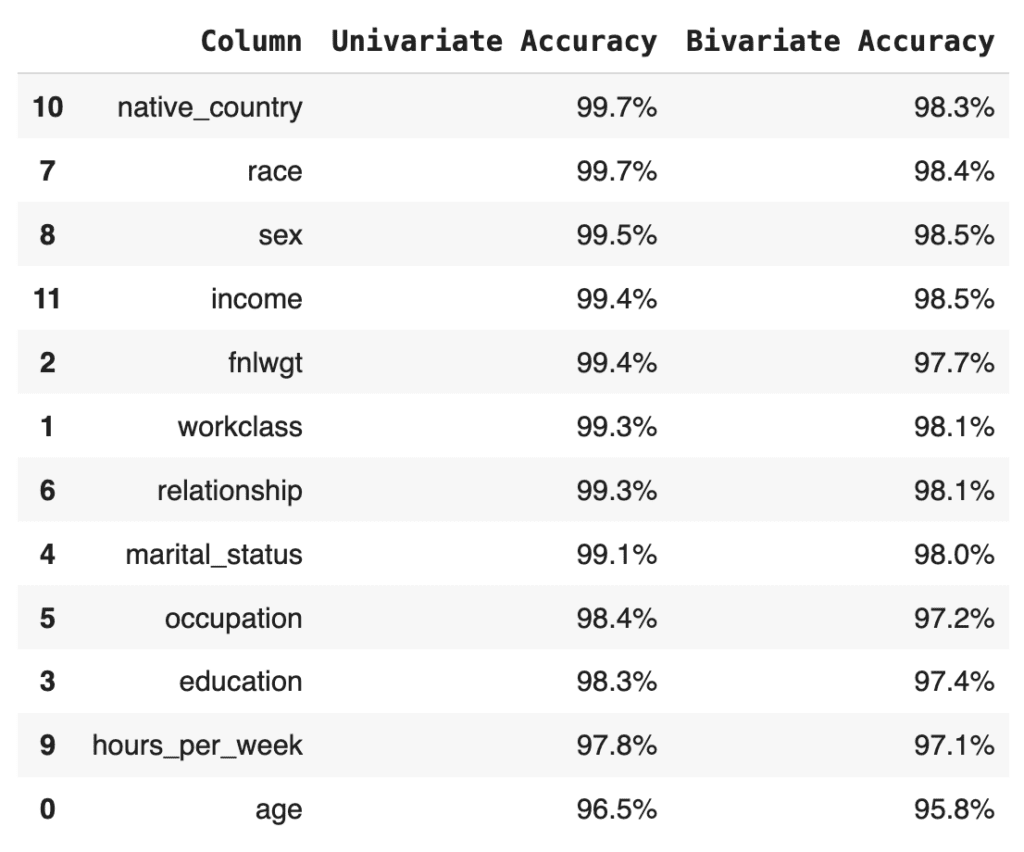

The bivariate accuracy that is reported for each column in the MOSTLY AI QA Report is an average over all of the bivariate accuracies for that column with respect to all the other columns in the dataset. Let’s calculate that value for each column and then create an overview table with the univariate and average bivariate accuracies for all columns:

# calculate the average bivariate accuracy

acc_biv_avg = (

acc_biv.groupby("Column")["Accuracy"]

.mean()

.to_frame("Bivariate Accuracy")

.reset_index()

)

# merge to univariate and avg. bivariate accuracy to single overview table

acc = pd.merge(

acc_uni.rename(columns={"Accuracy": "Univariate Accuracy"}),

acc_biv_avg,

on="Column",

).sort_values("Univariate Accuracy", ascending=False)

# report accuracy as percentage

acc["Univariate Accuracy"] = acc["Univariate Accuracy"].apply(

lambda x: f"{x:.1%}"

)

acc["Bivariate Accuracy"] = acc["Bivariate Accuracy"].apply(

lambda x: f"{x:.1%}"

)

acc

Finally, let’s calculate the summary statistic values that you normally see at the top of any MOSTLY AI QA Report: the overall accuracy as well as the average univariate and bivariate accuracies. We take the mean of the univariate and bivariate accuracies for all the columns and then take the mean of the result to arrive at the overall accuracy score:

print(f"Avg. Univariate Accuracy: {acc_uni['Accuracy'].mean():.1%}")

print(f"Avg. Bivariate Accuracy: {acc_biv['Accuracy'].mean():.1%}")

print(f"-------------------------------")

acc_avg = (acc_uni["Accuracy"].mean() + acc_biv["Accuracy"].mean()) / 2

print(f"Avg. Overall Accuracy: {acc_avg:.1%}")Avg. Univariate Accuracy: 98.9%

Avg. Bivariate Accuracy: 97.7%

------------------------------

Avg. Overall Accuracy: 98.3%

If you’re curious how this compares to the values in the MOSTLY AI QA Report, go ahead and download the tgt dataset and synthesize it using the default settings. The overall accuracy reported will be close to 98%.

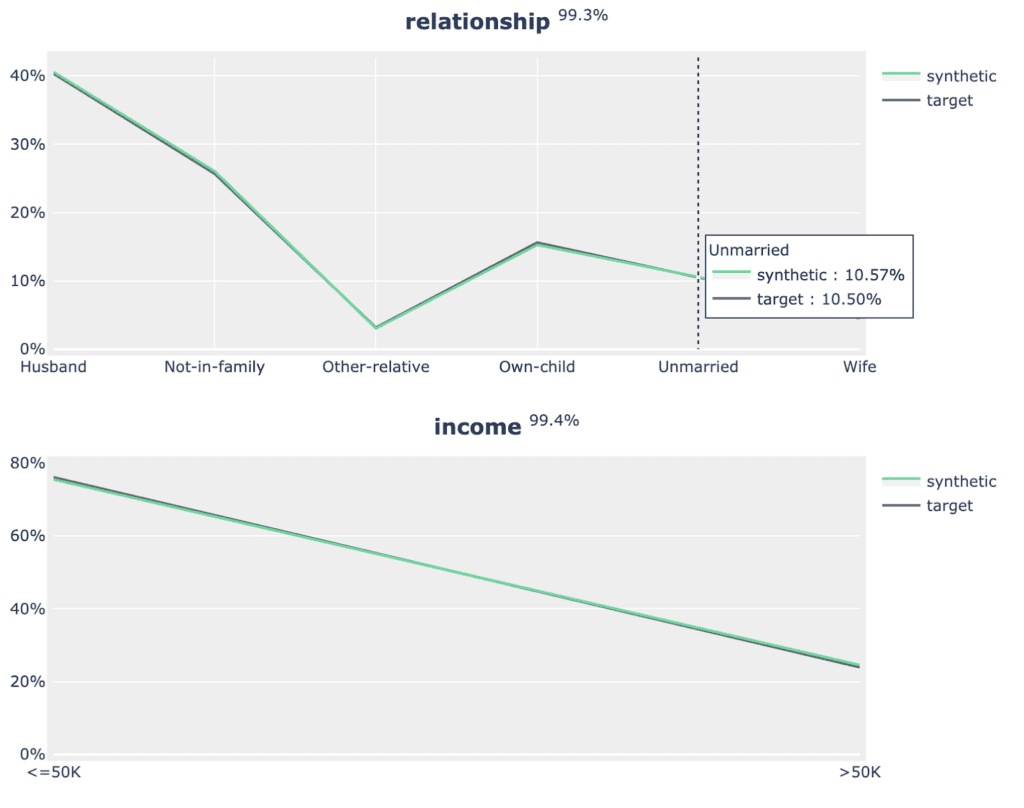

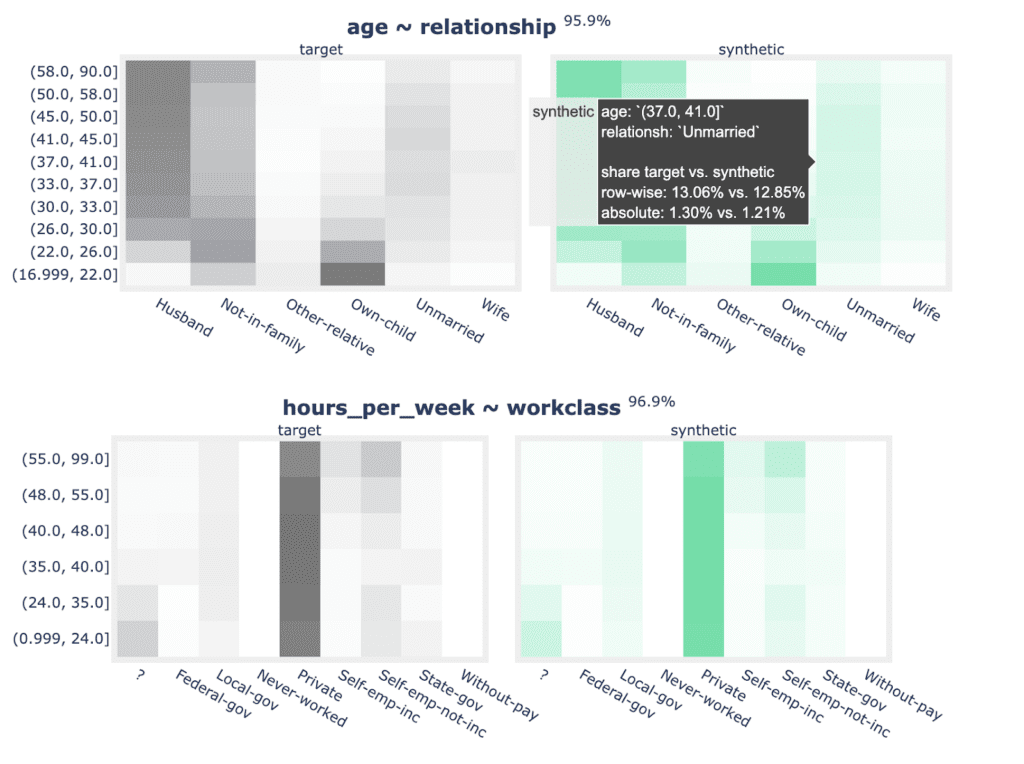

Next, let’s see how MOSTLY AI generates the visualization segments of the accuracy report. The code below defines two helper functions: one for the univariate and one for the bivariate plots. Getting the plots right for all possible edge cases is actually rather complicated, so while the code block below is lengthy, this is in fact the trimmed-down version of what MOSTLY AI uses under the hood. You do not need to worry about the exact details of the implementation here; just getting an overall sense of how it works is enough:

import plotly.graph_objects as go

def plot_univariate(tgt_bin, syn_bin, col, accuracy):

freq1 = (

tgt_bin[col].value_counts(normalize=True, dropna=False).to_frame("tgt")

)

freq2 = (

syn_bin[col].value_counts(normalize=True, dropna=False).to_frame("syn")

)

freq = freq1.join(freq2, how="outer").fillna(0.0).reset_index()

freq = freq.sort_values(col)

freq[col] = freq[col].astype(str)

layout = go.Layout(

title=dict(

text=f"<b>{col}</b> <sup>{accuracy:.1%}</sup>", x=0.5, y=0.98

),

autosize=True,

height=300,

width=800,

margin=dict(l=10, r=10, b=10, t=40, pad=5),

plot_bgcolor="#eeeeee",

hovermode="x unified",

yaxis=dict(

zerolinecolor="white",

rangemode="tozero",

tickformat=".0%",

),

)

fig = go.Figure(layout=layout)

trn_line = go.Scatter(

mode="lines",

x=freq[col],

y=freq["tgt"],

name="target",

line_color="#666666",

yhoverformat=".2%",

)

syn_line = go.Scatter(

mode="lines",

x=freq[col],

y=freq["syn"],

name="synthetic",

line_color="#24db96",

yhoverformat=".2%",

fill="tonexty",

fillcolor="#ffeded",

)

fig.add_trace(trn_line)

fig.add_trace(syn_line)

fig.show(config=dict(displayModeBar=False))

def plot_bivariate(tgt_bin, syn_bin, col1, col2, accuracy):

x = (

pd.concat([tgt_bin[col1], syn_bin[col1]])

.drop_duplicates()

.to_frame(col1)

)

y = (

pd.concat([tgt_bin[col2], syn_bin[col2]])

.drop_duplicates()

.to_frame(col2)

)

df = pd.merge(x, y, how="cross")

df = pd.merge(

df,

pd.concat([tgt_bin[col1], tgt_bin[col2]], axis=1)

.value_counts()

.to_frame("target")

.reset_index(),

how="left",

)

df = pd.merge(

df,

pd.concat([syn_bin[col1], syn_bin[col2]], axis=1)

.value_counts()

.to_frame("synthetic")

.reset_index(),

how="left",

)

df = df.sort_values([col1, col2], ascending=[True, True]).reset_index(

drop=True

)

df["target"] = df["target"].fillna(0.0)

df["synthetic"] = df["synthetic"].fillna(0.0)

# normalize values row-wise (used for visualization)

df["target_by_row"] = df["target"] / df.groupby(col1)["target"].transform(

"sum"

)

df["synthetic_by_row"] = df["synthetic"] / df.groupby(col1)[

"synthetic"

].transform("sum")

# normalize values across table (used for accuracy)

df["target_by_all"] = df["target"] / df["target"].sum()

df["synthetic_by_all"] = df["synthetic"] / df["synthetic"].sum()

df["y"] = df[col1].astype("str")

df["x"] = df[col2].astype("str")

layout = go.Layout(

title=dict(

text=f"<b>{col1} ~ {col2}</b> <sup>{accuracy:.1%}</sup>",

x=0.5,

y=0.98,

),

autosize=True,

height=300,

width=800,

margin=dict(l=10, r=10, b=10, t=40, pad=5),

plot_bgcolor="#eeeeee",

showlegend=True,

# prevent Plotly from trying to convert strings to dates

xaxis=dict(type="category"),

xaxis2=dict(type="category"),

yaxis=dict(type="category"),

yaxis2=dict(type="category"),

)

fig = go.Figure(layout=layout).set_subplots(

rows=1,

cols=2,

horizontal_spacing=0.05,

shared_yaxes=True,

subplot_titles=("target", "synthetic"),

)

fig.update_annotations(font_size=12)

# plot content

hovertemplate = (

col1[:10] + ": `%{y}`<br />" + col2[:10] + ": `%{x}`<br /><br />"

)

hovertemplate += "share target vs. synthetic<br />"

hovertemplate += "row-wise: %{customdata[0]} vs. %{customdata[1]}<br />"

hovertemplate += "absolute: %{customdata[2]} vs. %{customdata[3]}<br />"

customdata = df[

[

"target_by_row",

"synthetic_by_row",

"target_by_all",

"synthetic_by_all",

]

].apply(lambda x: x.map("{:.2%}".format))

heat1 = go.Heatmap(

x=df["x"],

y=df["y"],

z=df["target_by_row"],

name="target",

zmin=0,

zmax=1,

autocolorscale=False,

colorscale=["white", "#A7A7A7", "#7B7B7B", "#666666"],

showscale=False,

customdata=customdata,

hovertemplate=hovertemplate,

)

heat2 = go.Heatmap(

x=df["x"],

y=df["y"],

z=df["synthetic_by_row"],

name="synthetic",

zmin=0,

zmax=1,

autocolorscale=False,

colorscale=["white", "#81EAC3", "#43E0A5", "#24DB96"],

showscale=False,

customdata=customdata,

hovertemplate=hovertemplate,

)

fig.add_trace(heat1, row=1, col=1)

fig.add_trace(heat2, row=1, col=2)

fig.show(config=dict(displayModeBar=False))Now you can create the plots for the univariate distributions:

for idx, row in acc_uni.sample(n=5, random_state=0).iterrows():

plot_univariate(tgt_bin, syn_bin, row["Column"], row["Accuracy"])

print("")

Fig 5 - Sample of 2 univariate distribution plots.

As well as the bivariate distribution plots:

for idx, row in acc_biv.sample(n=5, random_state=0).iterrows():

plot_bivariate(

tgt_bin, syn_bin, row["Column"], row["Column 2"], row["Accuracy"]

)

print("")

Fig 6 - Sample of 2 bivariate distribution plots.

Now that you have replicated the accuracy component of the QA Report in sufficient detail, let’s move on to the privacy section.

Synthetic data privacy

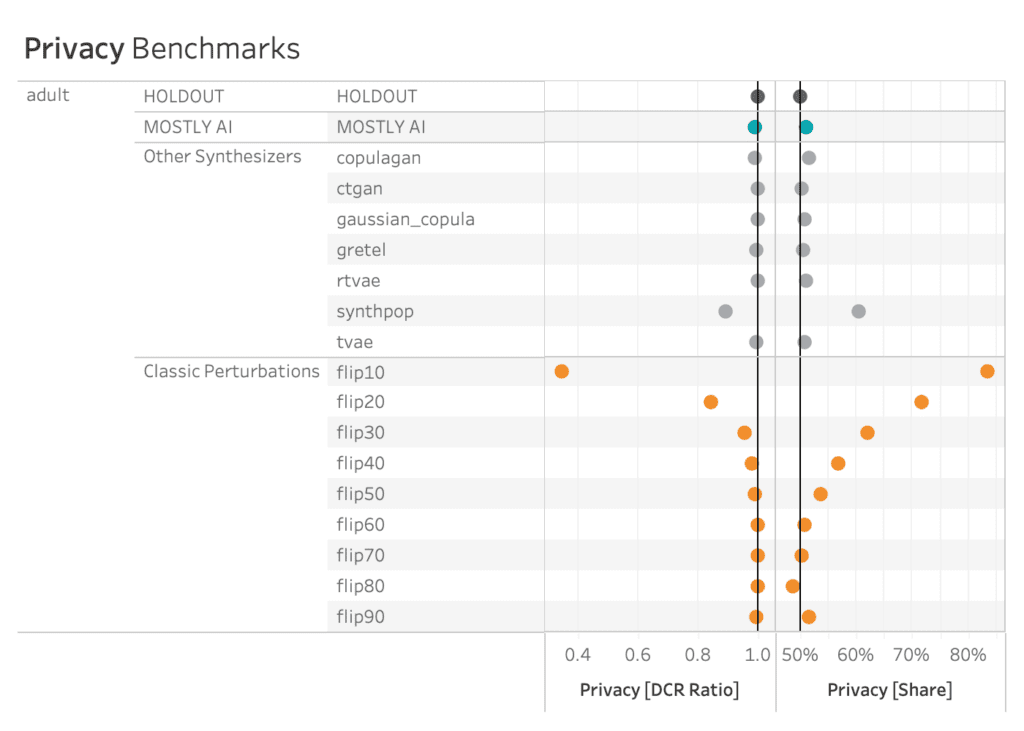

Just like accuracy, the privacy metric is also calculated as a distance-based value. To gauge the privacy risk of the generated synthetic data, we calculate the distances between the synthetic samples and their "nearest neighbor" (i.e., their most similar record) from the original dataset. This nearest neighbor could be either in the training split or in the holdout split. We then tally the ratio of synthetic samples that are closer to the holdout and the training set. Ideally, we will see an even split, which would mean that the synthetic samples are not systematically any closer to the original dataset than the original samples are to each other.

Fig 7 - A perfect synthetic data generator creates synthetic records that are just as different from the training data as from the holdout data.

The code block below uses the scikit-learn library to perform a nearest-neighbor search across the synthetic and original datasets. We then use the results from this search to calculate two different distance metrics: the Distance to the Closest Record (DCR) and the Nearest Neighbor Distance Ratio (NNDR), both at the 5-th percentile.

from sklearn.compose import make_column_transformer

from sklearn.neighbors import NearestNeighbors

from sklearn.preprocessing import OneHotEncoder

from sklearn.impute import SimpleImputer

no_of_records = min(tgt.shape[0] // 2, syn.shape[0], 10_000)

tgt = tgt.sample(n=2 * no_of_records)

trn = tgt.head(no_of_records)

hol = tgt.tail(no_of_records)

syn = syn.sample(n=no_of_records)

string_cols = trn.select_dtypes(exclude=np.number).columns

numeric_cols = trn.select_dtypes(include=np.number).columns

transformer = make_column_transformer(

(SimpleImputer(missing_values=np.nan, strategy="mean"), numeric_cols),

(OneHotEncoder(), string_cols),

remainder="passthrough",

)

transformer.fit(pd.concat([trn, hol, syn], axis=0))

trn_hot = transformer.transform(trn)

hol_hot = transformer.transform(hol)

syn_hot = transformer.transform(syn)

# calculcate distances to nearest neighbors

index = NearestNeighbors(

n_neighbors=2, algorithm="brute", metric="l2", n_jobs=-1

)

index.fit(trn_hot)

# k-nearest-neighbor search for both training and synthetic data, k=2 to calculate DCR + NNDR

dcrs_hol, _ = index.kneighbors(hol_hot)

dcrs_syn, _ = index.kneighbors(syn_hot)

dcrs_hol = np.square(dcrs_hol)

dcrs_syn = np.square(dcrs_syn)Now calculate the DCR for both datasets:

dcr_bound = np.maximum(np.quantile(dcrs_hol[:, 0], 0.95), 1e-8)

ndcr_hol = dcrs_hol[:, 0] / dcr_bound

ndcr_syn = dcrs_syn[:, 0] / dcr_bound

print(

f"Normalized DCR 5-th percentile original {np.percentile(ndcr_hol, 5):.3f}"

)

print(

f"Normalized DCR 5-th percentile synthetic {np.percentile(ndcr_syn, 5):.3f}"

)Normalized DCR 5-th percentile original 0.001

Normalized DCR 5-th percentile synthetic 0.009

As well as the NNDR:

print(

f"NNDR 5-th percentile original {np.percentile(dcrs_hol[:,0]/dcrs_hol[:,1], 5):.3f}"

)

print(

f"NNDR 5-th percentile synthetic {np.percentile(dcrs_syn[:,0]/dcrs_syn[:,1], 5):.3f}"

)NNDR 5-th percentile original 0.019

NNDR 5-th percentile synthetic 0.058

For both privacy metrics, the distance value for the synthetic dataset should be similar but not smaller. This gives us confidence that our synthetic record has not learned privacy-revealing information from the training data.

Quality assurance for synthetic data with MOSTLY AI

In this tutorial, you have learned the key concepts behind MOSTLY AI’s Quality Assurance framework. You have gained insight into the preprocessing steps that are required as well as a close look into exactly how the accuracy and privacy metrics are calculated. With these newly acquired skills, you can now confidently and efficiently interpret any MOSTLY AI QA Report and explain it thoroughly to any interested stakeholders.

For a more in-depth exploration of these concepts and the mathematical principles behind them, check out the benchmarking study or the peer-reviewed academic research paper to dive deeper.

You can also check out the other Synthetic Data Tutorials:

- Tackle missing data with smart data imputation

- Generate synthetic text data

- Explainable AI with synthetic data

- Perform conditional data generation

- Rebalancing your data for ML classification problems

- Optimize your training sample size for synthetic data accuracy

- Build a “fake vs real” ML classifier

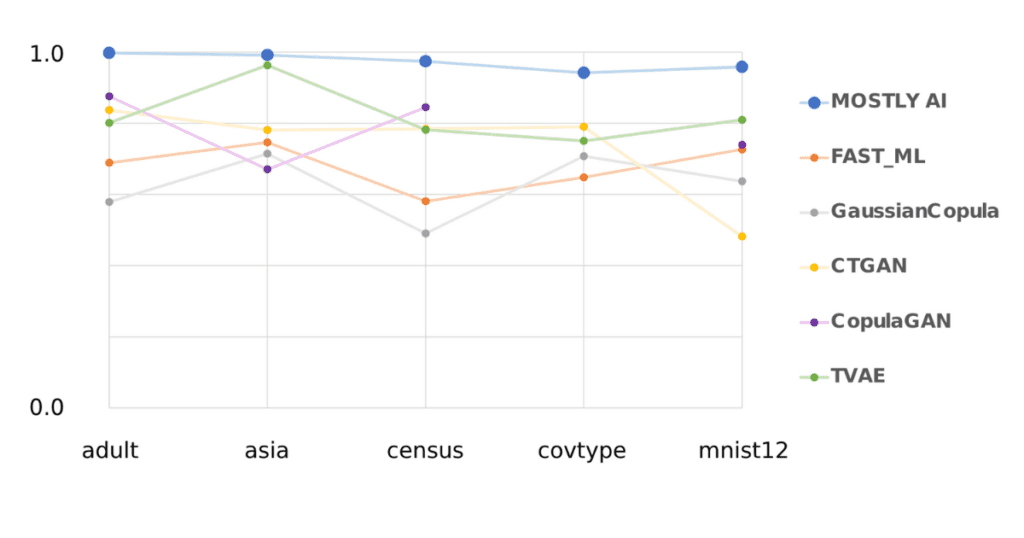

Synthetic data holds the promise of addressing the underrepresentation of minority classes in tabular data sets by adding new, diverse, and highly realistic synthetic samples. In this post, we'll benchmark AI-generated synthetic data for upsampling highly unbalanced tabular data sets. Specifically, we compare the performance of predictive models trained on data sets upsampled with synthetic records to that of well-known upsampling methods, such as naive oversampling or SMOTE-NC.

Our experiments are conducted on multiple data sets and different predictive models. We demonstrate that synthetic data can improve predictive accuracy for minority groups as it creates diverse data points that fill gaps in sparse regions in feature space.

Our results highlight the potential of synthetic data upsampling as a viable method for improving predictive accuracy on highly unbalanced data sets. We show that upsampled synthetic training data consistently results in top-performing predictive models, in particular for mixed-type data sets containing a very low number of minority samples, where it outperforms all other upsampling techniques.

Try upsampling on MOSTLY AI's synthetic data platform!

The definition of synthetic data

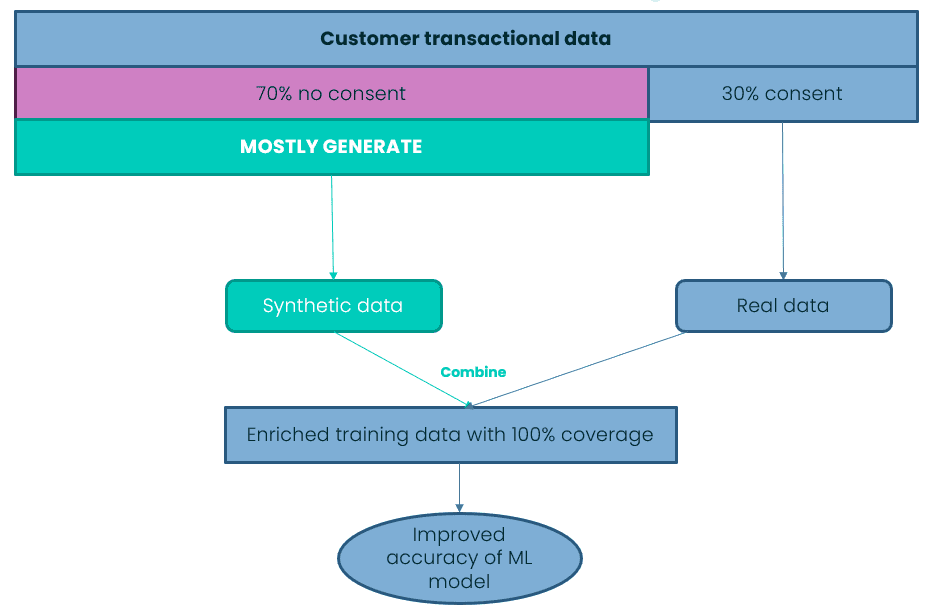

AI-generated synthetic data, which we refer to as synthetic data throughout, is created by training a generative model on the original data set. In the inference phase, the generative model creates statistically representative, synthetic records from scratch.

The use of synthetic data has gained increasing importance in various industries, particularly due to its primary use case of enhancing data privacy. Beyond privacy, synthetic data offers the possibility to modify and tailor data sets to our specific needs. In this blog post, we investigate the potential of synthetic data to improve the performance of machine learning algorithms on data sets with unbalanced class distributions, specifically through the synthetic upsampling of minority classes.

Upsampling for class imbalance

Class imbalance is a common problem in many real-world tabular data sets where the number of samples in one or more classes is significantly lower than the others. Such imbalances can lead to poor prediction performance for the minority classes, often of greatest interest in many applications, such as detecting fraud or extreme insurance claims.

Traditional upsampling methods, such as naive oversampling or SMOTE, have shown some success in mitigating this issue. However, the effectiveness of these methods is often limited, and they may introduce biases in the data, leading to poor model performance. In recent years, synthetic data has emerged as a promising alternative to traditional upsampling methods. By creating highly realistic samples for minority classes, synthetic data can significantly improve the accuracy of predictive models.

While upsampling methods like naive oversampling and SMOTE are effective in addressing unbalanced data sets, they also have their limitations. Naive oversampling mitigates class imbalance effects by simply duplicating minority class examples. Due to this strategy, they bear the risk of overfitting the model to the training data, resulting in poor generalization in the inference phase.

SMOTE, on the other hand, generates new records by interpolating between existing minority-class samples, leading to higher diversity. However, SMOTE’s ability to increase diversity is limited when the absolute number of minority records is very low. This is especially true when generating samples for mixed-type data sets containing categorical columns. For mixed-type data sets, SMOTE-NC is commonly used as an extension for handling categorical columns.

SMOTE-NC may not work well with non-linear decision boundaries, as it only linearly interpolates between minority records. This can lead to SMOTE-NC examples being generated in an “unfavorable” region of feature space, far from where additional samples would help the predictive model place a decision boundary.

All these limitations highlight the need for exploring alternative upsampling methods, such as synthetic data upsampling, that can overcome these challenges and improve the accuracy of minority group predictions.

The strength of upsampling minority classes with AI-generated synthetic data is that the generative model is not limited to upsampling or interpolating between existing minority classes. Most AI-based generators can create realistic synthetic data examples in any region of feature space and, thus, considerably increase diversity. Because they are not tied to existing minority samples, AI-based generators can also leverage and learn from the properties of (parts of) the majority samples that are transferable to minority examples.

An additional strength of using AI-based upsampling is that it can be easily extended to more complex data structures, such as sequential data, where not only one but many rows in a data set belong to a single data subject. This aspect of synthetic data upsampling is, however, out of the scope of this study.

In this post, we present a comprehensive benchmark study comparing the performance of predictive models trained on unbalanced data upsampled with AI-generated synthetic data, naive upsampling, and SMOTE-NC upsampling. Our experiments are carried out on various data sets and using different predictive models.

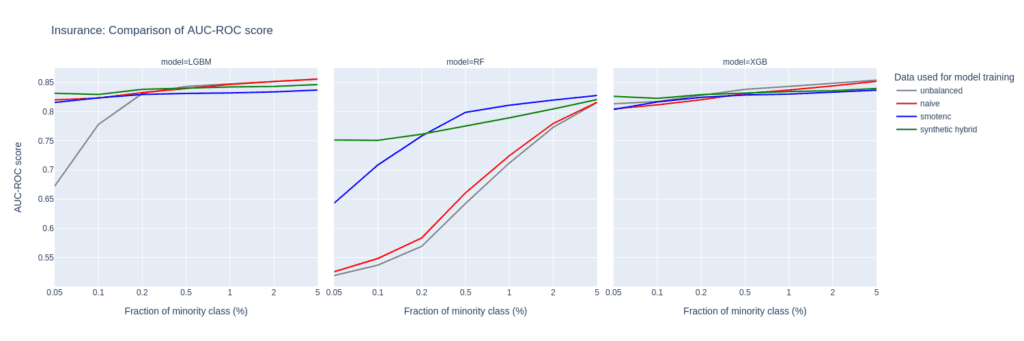

The upsampling experiment

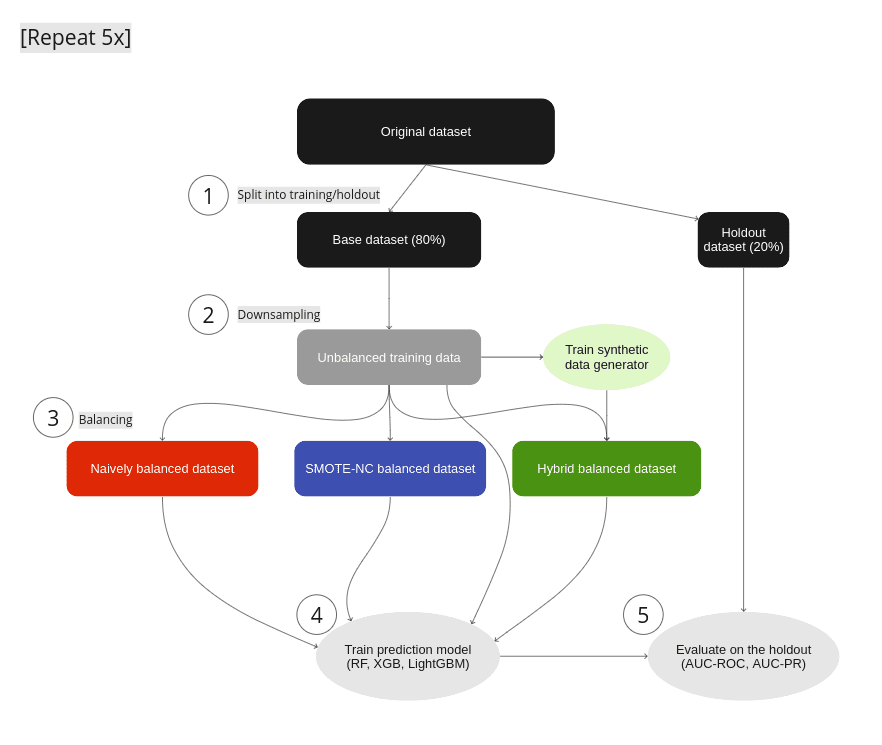

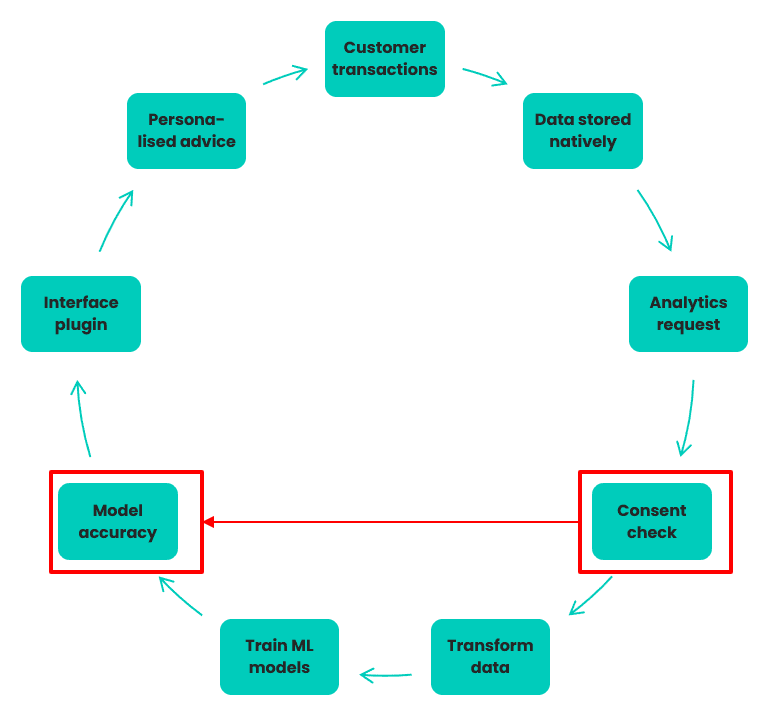

Figure 1: Experimental Setup: (1) We split the original data set into a base data set and a holdout. (2) Strong imbalances are introduced in the base data set by downsampling the minority classes to fractions as low as 0.05% to yield the unbalanced training data. (3) We test different mitigation strategies: balancing through naive upsampling, SMOTE-NC upsampling, and upsampling with AI-generated synthetic records (the hybrid data set). (4) We train LGBM, RandomForest, and XGB classifiers on the balanced and unbalanced training data. (5) We evaluate the properties of the upsampling techniques by measuring the performance of the trained classifier on the holdout set. Steps 1–5 are repeated five times, and we report the mean AUC-ROC as well as the AUC-PR.

For every data set we use in our experiments, we run through the following steps (see Fig. 1):

- We split the original data set into a base and a holdout set by using a five-fold stratified sampling approach to ensure that each class is represented proportionally.

- All of the original data sets have a binary target column and only a rather moderate imbalance with the fraction of the minority class ranging from 6% to 24% (see table 1 for data set details). We artificially induce different levels of strong imbalances to the base set by randomly down-sampling the minority class, resulting in unbalanced training data sets with minority fractions of 0.05%, 0.1%, 0.2%, 0.5%, 1%, 2%, and 5%.

- To mitigate the strong imbalances in the training data sets, we apply three different upsampling techniques:

- naive oversampling (red box in fig. 1) duplicating existing examples of the minority classes (scikit-learn, RandomOverSampler)

- SMOTE-NC (blue box in fig. 1): applying the SMOTE-NC upsampling technique (scikit-learn, SMOTENC)

- Hybrid (green box in fig. 1): The hybrid data set represents the concept of enriching unbalanced training data with AI-generated synthetic data. It is composed of the training data (including majority samples and a limited number of minority samples) along with additional synthetic minority samples that are created using an AI-based synthetic data generator. This generator is trained on the highly unbalanced training data set. In this study, we use the MOSTLY AI synthetic data platform. It is freely accessible for generating highly realistic AI-based synthetic data.

In all cases, we upsample the minority class to achieve a 50:50 balance between the majority and minority classes, resulting in the naively balanced, the SMOTE-NC balanced, and the balanced hybrid data set.

- We assess the benefits of the different upsampling techniques by training three popular classifiers: RandomForest, XGB, and LightGBM, on the balanced data sets. Additionally, we train the classifiers on the heavily unbalanced training data sets as a baseline in the evaluation of the predictive model performance.

- The classifiers are scored on the holdout set, and we calculate the AUC-ROC score and AUC-PR score across all upsampling techniques and initial imbalance ratios for all 5 folds. We report the average scores over five different samplings and model predictions. We opt for AUC metrics to eliminate dependencies on thresholds, as seen in, e.g., F1 scores.

The results of upsampling

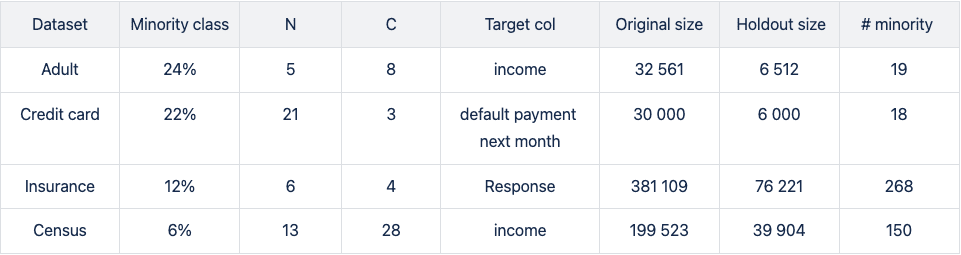

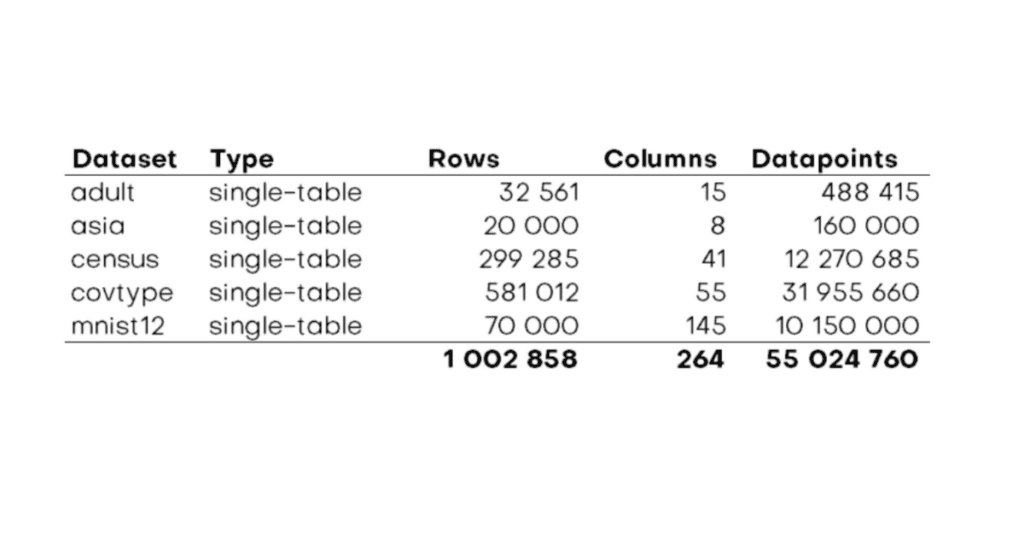

We run four publicly available data sets (Figure 1) of varying sizes through steps 1–5: Adult, Credit Card, Insurance, and Census (Kohavi and Becker). All data sets tested are of mixed type (categorical and numerical features) with a binary, that is, a categorical target column.

In step 2 (Fig. 1), we downsample minority classes to induce strong imbalances. For the smaller data sets with ~30k records, downsampling to minority-class fractions of 0.1% results in extremely low numbers of minority records.

The downsampled Adult and Credit Card unbalanced training data sets contain as little as 19 and 18 minority records, respectively. This scenario mimics situations where data is limited and extreme cases occur rarely. Such setups create significant challenges for predictive models, as they may encounter difficulty making accurate predictions and generalizing well on unseen data.

Please note that the holdout sets on which the trained predictive models are scored are not subject to extreme imbalances as they are sampled from the original data before downsampling is applied. The imbalance ratios of the holdout set are moderate and vary from 6 to 24%.

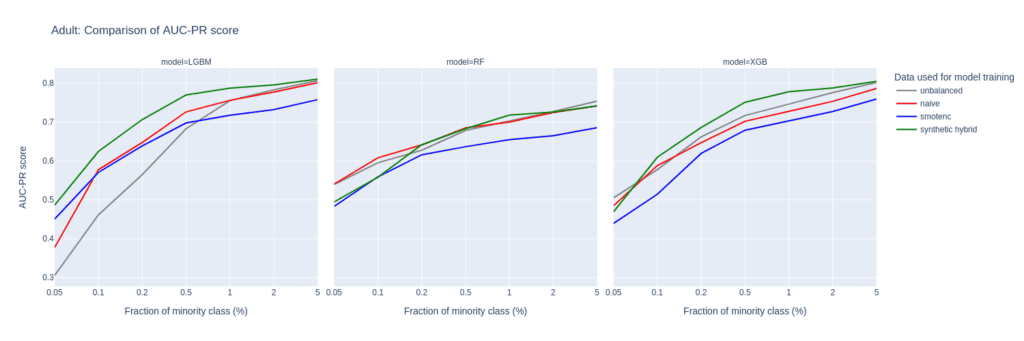

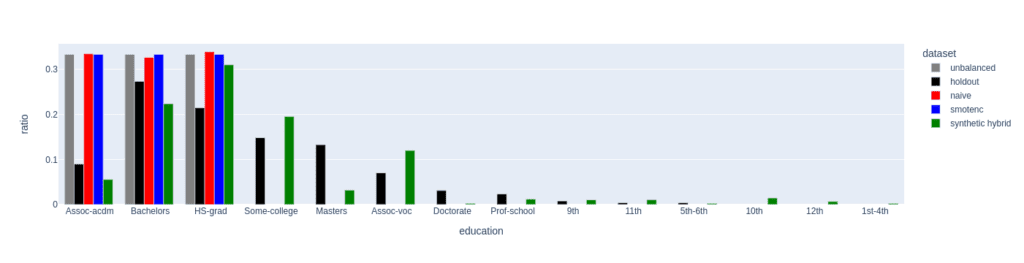

In the evaluation, we report both the AUC-ROC and the AUC-PR due to the moderate but inhomogeneous distribution of minority fractions in the holdout set. The AUC-ROC is a very popular and expressive metric, but it is known to be overly optimistic on unbalanced optimization problems. While the AUC-ROC considers both classes, making it susceptible to neglecting the minority class, the AUC-PR focuses on the minority class as it is built up by precision and recall.

Upsampling the Adult income dataset

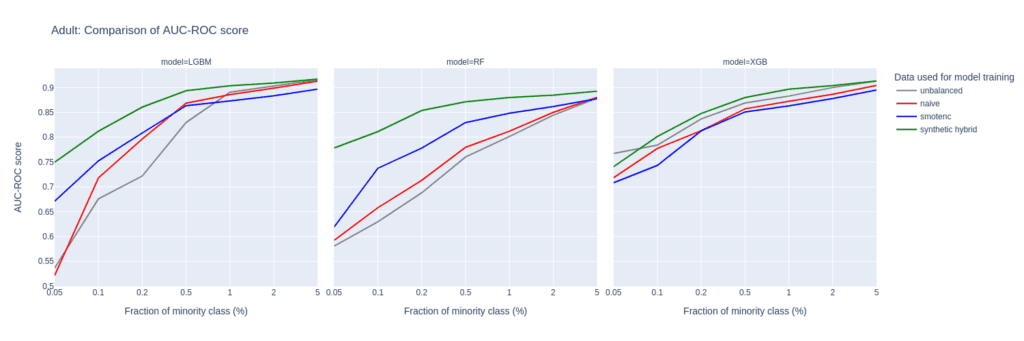

The largest differences between upsampling techniques are observed in the AUC-ROC when balancing training sets with a substantial class imbalance of 0.05% to 0.5%. This scenario involves a very limited number of minority samples, down to 19 for the Adult unbalanced training data set.

For the RF and the LGBM classifiers trained on the balanced hybrid data set, the AUC-ROC is larger than the ones obtained with other upsampling techniques. Differences can go up to 0.2 (RF classifier, minority fraction of 0.05%) between the AI-based synthetic upsampling and the second-best method.

The AUC-PR shows similar yet less pronounced differences. LGBM and XGB classifiers trained on the balanced hybrid data set perform best throughout almost all minority fractions. Interestingly, results for the RF classifier are mixed. Upsampling with synthetic data does not always lead to better performance, but it is always among the best-performing methods.

While synthetic data upsampling improves results through most of the minority fractions for the XGB classifier, too, the differences in performance are less pronounced. Especially the XGB classifier trained on the highly unbalanced training data performs surprisingly well. This suggests that the XGB classifier is better suited for handling unbalanced data.

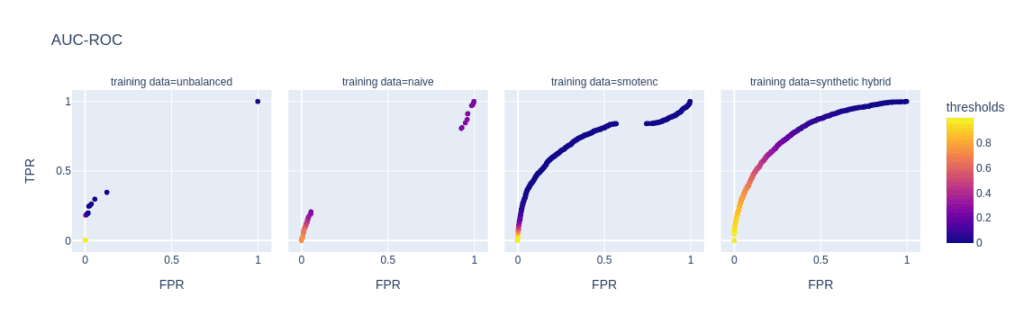

The reason for the performance differences in the AUC-ROC and AUC-PR is due to the low diversity and, consequently, overfitting when using naive or SMOTE-NC upsampling. These effects are visible in, e.g., the ROC and PR curves of the LGBM classifier for a minority fraction of 0.1% (fig. 3).

Every point on these curves corresponds to a specific prediction threshold for the classifier. The set of threshold values is defined by the variance of probabilities predicted by the models when scored on the holdout set. For both the highly unbalanced training data and the naively upsampled one, we observe very low diversity, with more than 80% of the holdout samples predicted to have an identical, very low probability of belonging to the minority class.

In the plot of the PR curve, this leads to an accumulation of points in the area with high precision and low recall, which means that the model is very conservative in making positive predictions and only makes a positive prediction when it is very confident that the data point belongs to the positive, that is, the minority class. This demonstrates the effect of overfitting on a few samples in the minority group.

SMOTE-NC has a much higher but still limited diversity, resulting in a smoother PR curve which, however, still contains discontinuities and has a large segment where precision and recall change rapidly with small changes in the prediction threshold.

The hybrid data set offers high diversity during model training, resulting in almost every holdout sample being assigned an unique probability of belonging to the minority class. Both ROC and PR curves are smooth and have a threshold of ~0.5 at the center, the point that is closest to the perfect classifier.

Figure 2: AUC-ROC (top) and AUC-PR (bottom) of classifiers LGBM, RandomForest (RF), and XGB trained on the Adult data set to predict the target feature income. The classifiers are trained on unbalanced data sets (grey) and data sets that are upsampled naively (red), with the SMOTE-NC algorithm (blue), and with AI-generated synthetic records (green). AUC values are reported for different fractions of the minority class in the unbalanced training data (x-axis).

Figure 3: ROC (top) and PR (bottom) curves of the Light GBM classifier trained on different versions of the adult data set (from left to right): unbalanced training data (minority class fraction 0.1%), naively upsampled training data, training data upsampled with SMOTE-NC, and training data enriched with AI-generated synthetic records (synthetic hybrid). Every point on the plots corresponds to a specific prediction threshold for the classifier.

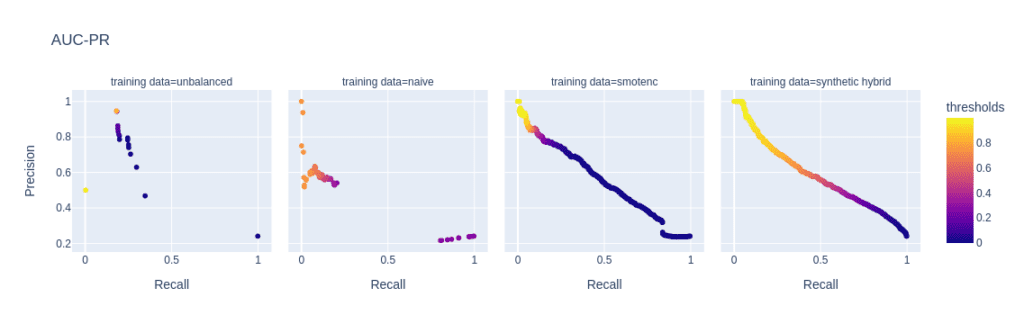

Figure 4: Distribution of the feature education for the female subgroup (sex equals female) of the minority class (income equals high). The distributions of the unbalanced (grey, minority class fraction of 0.1%), the naively upsampled (red), and the SMOTE-NC upsampled (blue) considerably differ from the holdout distribution. Only the data set upsampled with AI-generated synthetic records (synthetic hybrid) recovers the holdout distribution to a satisfactory degree and captures its diversity.

The limited power in creating diverse samples in situations where the minority class is severely underrepresented stems from naive upsampling and SMOTE-NC being limited to duplicating and interpolating between existing minority samples. Both methods are bound to a limited region in feature space.

Upsampling with AI-based synthetic minority samples, on the other hand, can, in principle, populate any region in feature space and can leverage and learn from properties of the majority samples which are transferable to minority examples, resulting in more diverse and realistic synthetic minority samples.

We analyze the difference in diversity by further “drilling down” the minority class (feature “income” equals “high”) and comparing the distribution of the feature “education” for the female subgroup (feature “sex” equals “female”) in the upsampled data sets (fig. 4).

For a minority fraction of 0.1%, this results in only three female minority records. Naive upsampling and SMOTE-NC have a very hard time generating diversity in such settings. Both just duplicate the existing categories “Bachelors”, “HS-grade”, and "Assoc-acdm,” resulting in a strong distortion of the distribution of the “education” feature as compared to the distribution in the holdout data set.

The distribution of the hybrid data has some imperfections, too, but it recovers the holdout distribution to a much better degree. Many more “education” categories are populated, and, with a few exceptions, the frequencies of the holdout data set are recovered to a satisfactory level. This ultimately leads to a larger diversity in the hybrid data set than in the naively balanced or SMOTE-NC balanced one.

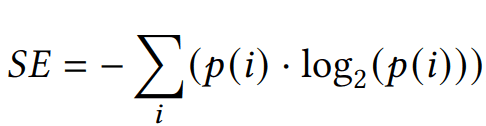

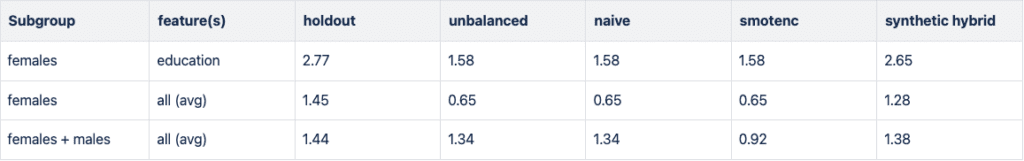

Diversity assessment with the Shannon entropy

We quantitatively assess diversity with the Shannon entropy, which measures the variability within a data set particularly for categorical data. It provides a measure of how uniformly the different categories of a specific feature are distributed within the data set.

The Shannon Entropy (SE) of a specific feature is defined as

where p(i) represents the probability of occurrence, i.e. the relative frequency of category i. SE ranges from 0 to log2(N), where N is the total number of categories. A value of 0 indicates maximum certainty with only one category, while higher entropy implies greater diversity and uncertainty, indicating comparable probabilities p(i) across categories.

In Figure 5, we report the Shannon entropy for different features and subgroups of the high-income population. In all cases, data diversity is the largest for the holdout data set. The downsampled training data set (unbalanced) has a strongly reduced SE, especially when focusing on the small group of high-income women. Naive and SMOTE-NC upsampling cannot recover any of the diversity in the holdout as both are limited to the categories present in the minority class. In line with the results presented in the paragraph above, synthetic data recovers the SE, i.e., the diversity of the holdout data set, to a large degree.

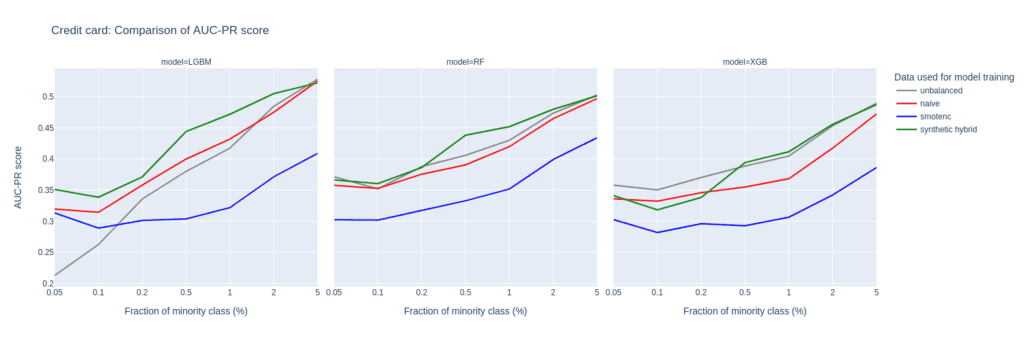

Upsampling the Credit Card data set

The Credit Card data set has similar properties as the Adult data set. The number of records, features, and the original, moderate imbalance are comparable. This again results in a very small number of minority records (18) after downsampling to a 0.1% minority fraction.

The main difference between them is the fact that Credit Card consists of more numeric features. The performance of different upsampling techniques on the unbalanced Credit Card training data set shows similar results to the Adult Data set, too. AUC-ROC and AUC-PR for both LGBM and RF classifiers improve over naive upsampling and SMOTE-NC when using the hybrid data set.

Again, the performance of the XGB model is more comparable between the different balanced data sets and we find very good performance for the highly-unbalanced training data set. Here, too, the hybrid data set is always among the best-performing upsampling techniques.

Interestingly, SMOTE-NC performs worst almost throughout all the metrics. This is surprising because we expect this data set, consisting mainly of numerical features, to be favorable for the SMOTE-NC upsampling technique.

Figure 6: AUC-ROC (top) and AUC-PR (bottom) of classifiers LGBM, RandomForest (RF), and XGB trained on the Credit Card data set to predict the target feature default payment. The classifiers are trained on unbalanced data sets (grey) and data sets that are upsampled naively (red), with the SMOTE-NC algorithm (blue), and with AI-generated synthetic records (green). AUC values are reported for different fractions of the minority class in the unbalanced training data (x-axis).

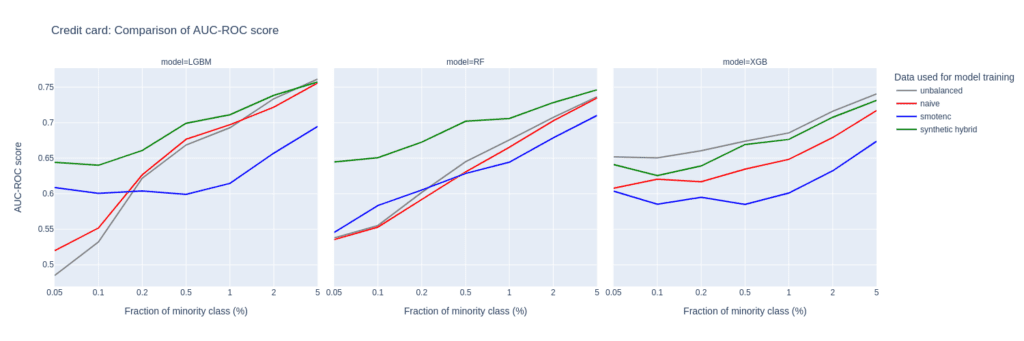

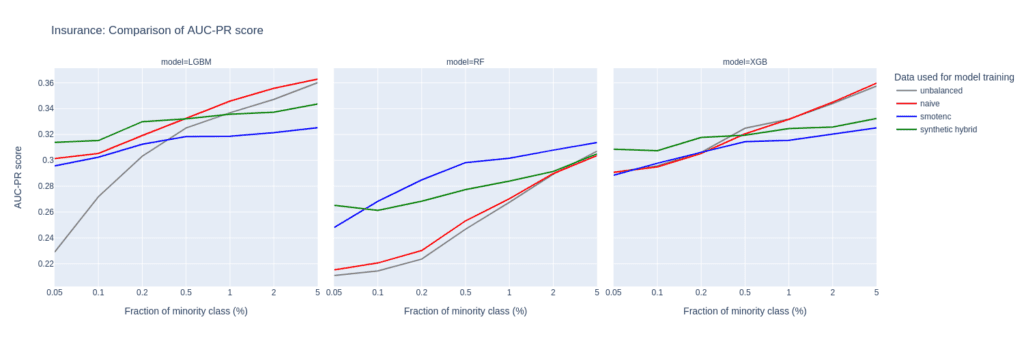

Upsampling the Insurance data set

The Insurance data set is larger than Adult and Census resulting in a larger number of minority records (268) when downsampling to the 0.1% minority fraction. This leads to a much more balanced performance between different upsampling techniques.

A notable difference in performance only appears for very small minority fractions. For minority fractions below 0.5%, both the AUC-ROC and AUC-PR of LGBM and XGB classifiers trained on the hybrid data set are consistently larger than for classifiers trained on other balanced data sets. The maximum performance gains, however, are smaller than those observed for “Adult” and “Credit Card”.

Figure 7: AUC-ROC (top) and AUC-PR (bottom) of classifiers LGBM, RandomForest (RF), and XGB trained on the Insurance data set to predict the target feature Response. The classifiers are trained on unbalanced data sets (grey) and data sets that are upsampled naively (red), with the SMOTE-NC algorithm (blue), and with AI-generated synthetic records (green). AUC values are reported for different fractions of the minority class in the unbalanced training data (x-axis).

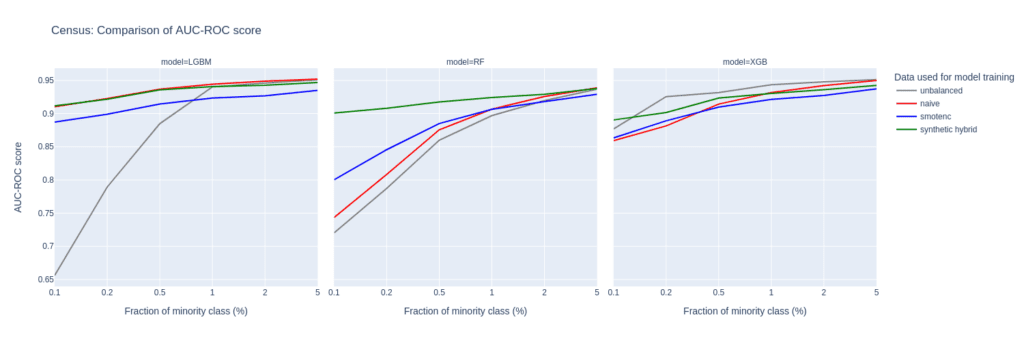

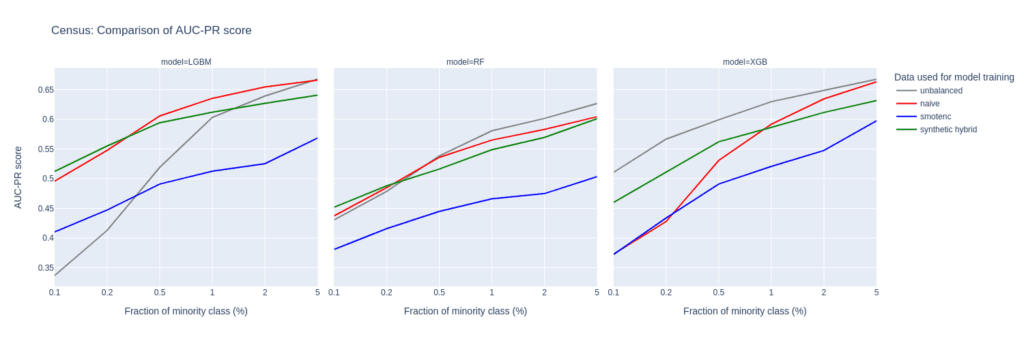

Upsampling the Census data set

The Census data set has the largest number of features of all the data sets tested in this study. Especially, the 28 categorical features pose a challenge for SMOTE-NC, leading to poor performance in terms of AUC-PR.

Comparably to the Insurance data set, the performance of the LGBM classifier severely deteriorates when trained on highly unbalanced data sets. On the other hand, the XGB model excels and performs very well even on unbalanced training sets.

The Census data set highlights the importance of carefully selecting the appropriate model and upsampling technique when working with data sets that have high dimensionality and unbalanced class distributions, as performances can vary a lot.

Upsampling with synthetic data mitigates this variance, as all models trained on the hybrid data set are among the best performers across all classifiers and ranges of minority fractions.

Figure 8: AUC-ROC (top) and AUC-PR (bottom) of classifiers LGBM, RandomForest (RF), and XGB trained on the Census data set to predict the target feature income. The classifiers are trained on unbalanced data sets (grey) and data sets that are upsampled naively (red), with the SMOTE-NC algorithm (blue), and with AI-generated synthetic records (green). AUC values are reported for different fractions of the minority class in the unbalanced training data (x-axis).

Synthetic data for upsampling

AI-based synthetic data generation can provide an effective solution to the problem of highly unbalanced data sets in machine learning. By creating diverse and realistic samples, upsampling with synthetic data generation can improve the performance of predictive models. This is especially true for cases where not only the minority fraction is low but also the absolute number of minority records is at a bare minimum. In such extreme settings, training on data upsampled with AI-generated synthetic records leads to better performance of prediction models than upsampling with SMOTE-NC or naive upsampling. Across all parameter settings explored in this study, synthetic upsampling resulted in predictive models which rank among the top-performing ones.

In this tutorial, you will learn how to use synthetic rebalancing to improve the performance of machine-learning (ML) models on imbalanced classification problems. Rebalancing can be useful when you want to learn more of an otherwise small or underrepresented population segment by generating more examples of it. Specifically, we will look at classification ML applications in which the minority class accounts for less than 0.1% of the data.

We will start with a heavily imbalanced dataset. We will use synthetic rebalancing to create more high-quality, statistically representative instances of the minority class. We will compare this method against 2 other types of rebalancing to explore their advantages and pitfalls. We will then train a downstream machine learning model on each of the rebalanced datasets and evaluate their relative predictive performance. The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

Fig 1 - Synthetic rebalancing creates more statistically representative instances of the minority class

Why should I rebalance my dataset?

In heavily imbalanced classification projects, a machine learning model has very little data to effectively learn patterns about the minority class. This will affect its ability to correctly class instances of this minority class in the real (non-training) data when the model is put into production. A common real-world example is credit card fraud detection: the overwhelming majority of credit card transactions are perfectly legitimate, but it is precisely the rare occurrences of illegitimate use that we would be interested in capturing.

Let’s say we have a training dataset with 100,000 credit card transactions which contains 999,900 legitimate transactions and 100 fraudulent ones. A machine-learning model trained on this dataset would have ample opportunity to learn about all the different kinds of legitimate transactions, but only a small sample of 100 records in which to learn everything it can about fraudulent behavior. Once this model is put into production, the probability is high that fraudulent transactions will occur that do not follow any of the patterns seen in the small training sample of 100 fraudulent records. The machine learning model is unlikely to classify these fraudulent transactions.

So how can we address this problem? We need to give our machine learning model more examples of fraudulent transactions in order to ensure optimal predictive performance in production. This can be achieved through rebalancing.

Rebalancing Methods

We will explore three types of rebalancing:

- Random (or “naive”) oversampling

- SMOTE upsampling

- Synthetic rebalancing

The tutorial will give you hands-on experience with each type of rebalancing and provide you with in-depth understanding of the differences between them so you can choose the right method for your use case. We’ll start by generating an imbalanced dataset and showing you how to perform synthetic rebalancing using MOSTLY AI's synthetic data generator. We will then compare performance metrics of each rebalancing method on a downstream ML task.

But first things first: we need some data.

Generate an Imbalanced Dataset

For this tutorial, we will be using the UCI Adult Income dataset, as well as the same training and validation split, that was used in the Train-Synthetic-Test-Real tutorial. However, for this tutorial we will work with an artificially imbalanced version of the dataset containing only 0.1% of high-income (>50K) records in the training data, by downsampling the minority class. The downsampling has already been done for you, but if you want to reproduce it yourself you can use the code block below:

def create_imbalance(df, target, ratio):

val_min, val_maj = df[target].value_counts().sort_values().index

df_maj = df.loc[df[target]==val_maj]

n_min = int(df_maj.shape[0]/(1-ratio)*ratio)

df_min = df.loc[df[target]==val_min].sample(n=n_min, random_state=1)

df_maj = df.loc[df[target]==val_maj]

df_imb = pd.concat([df_min, df_maj]).sample(frac=1, random_state=1)

return df_imb

df_trn = pd.read_csv(f'{repo}/census-training.csv')

df_trn_imb = create_imbalance(df_trn, 'income', 1/1000)

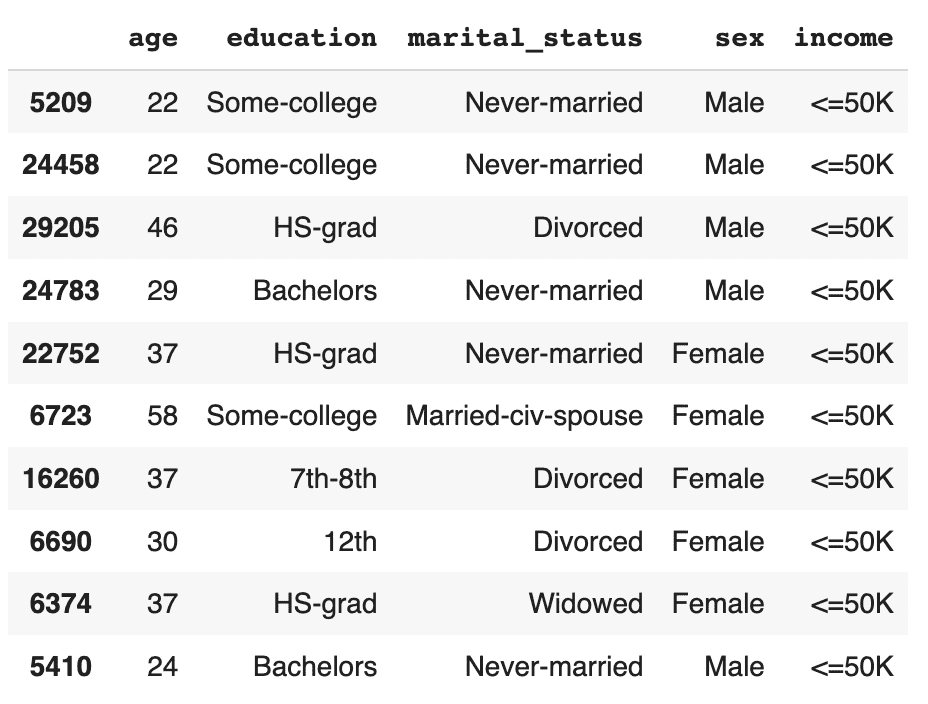

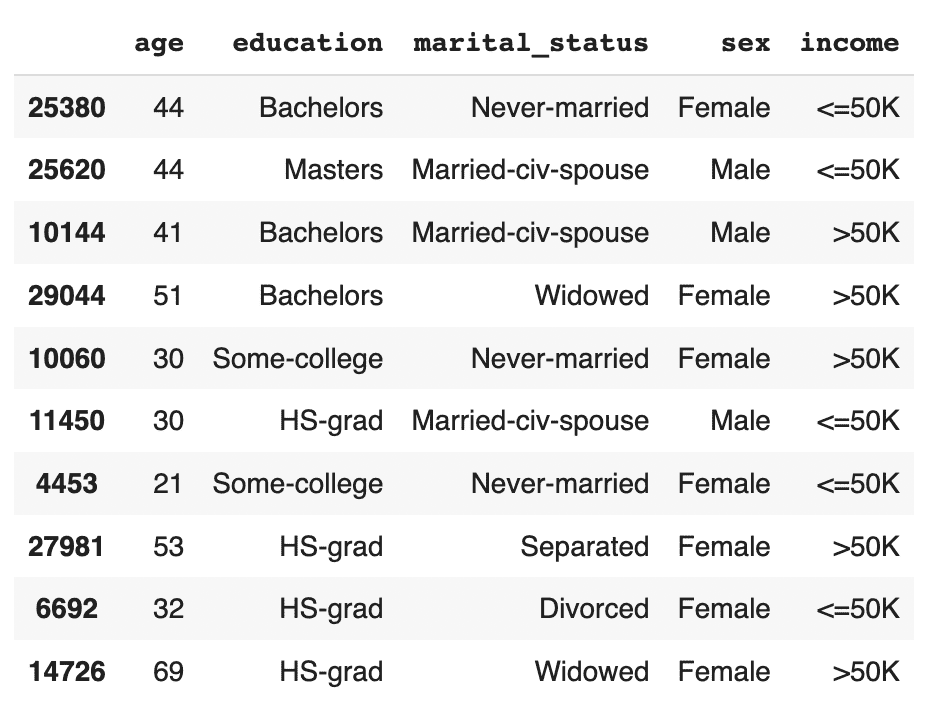

df_trn_imb.to_csv('census-training-imbalanced.csv', index=False)Let’s take a quick look at this imbalanced dataset by randomly sampling 10 rows. For legibility let’s select only a few columns, including the income column as our imbalanced feature of interest:

trn = pd.read_csv(f'{repo}/census-training-imbalanced.csv')

trn.sample(n=10)

You can try executing the line above multiple times to see different samples. Still, due to the strong class imbalance, the chance of finding a record with high income in a random sample of 10 is minimal. This would be problematic if you were interested in creating a machine learning model that could accurately classify high-income records (which is precisely what we’ll be doing in just a few minutes).

The problem becomes even more clear when we try to sample a specific sub-group in the population. Let’s sample all the female doctorates with a high income in the dataset. Remember, the dataset contains almost 30 thousand records.

trn[

(trn['income']=='>50K')

& (trn.sex=='Female')

& (trn.education=='Doctorate')

]

It turns out there are actually no records of this type in the training data. Of course, we know that these kinds of individuals exist in the real world and so our machine learning model is likely to encounter them when put in production. But having had no instances of this record type in the training data, it is likely that the ML model will fail to classify this kind of record correctly. We need to provide the ML model with a higher quantity and more varied range of training samples of the minority class to remedy this problem.

Synthetic rebalancing with MOSTLY AI

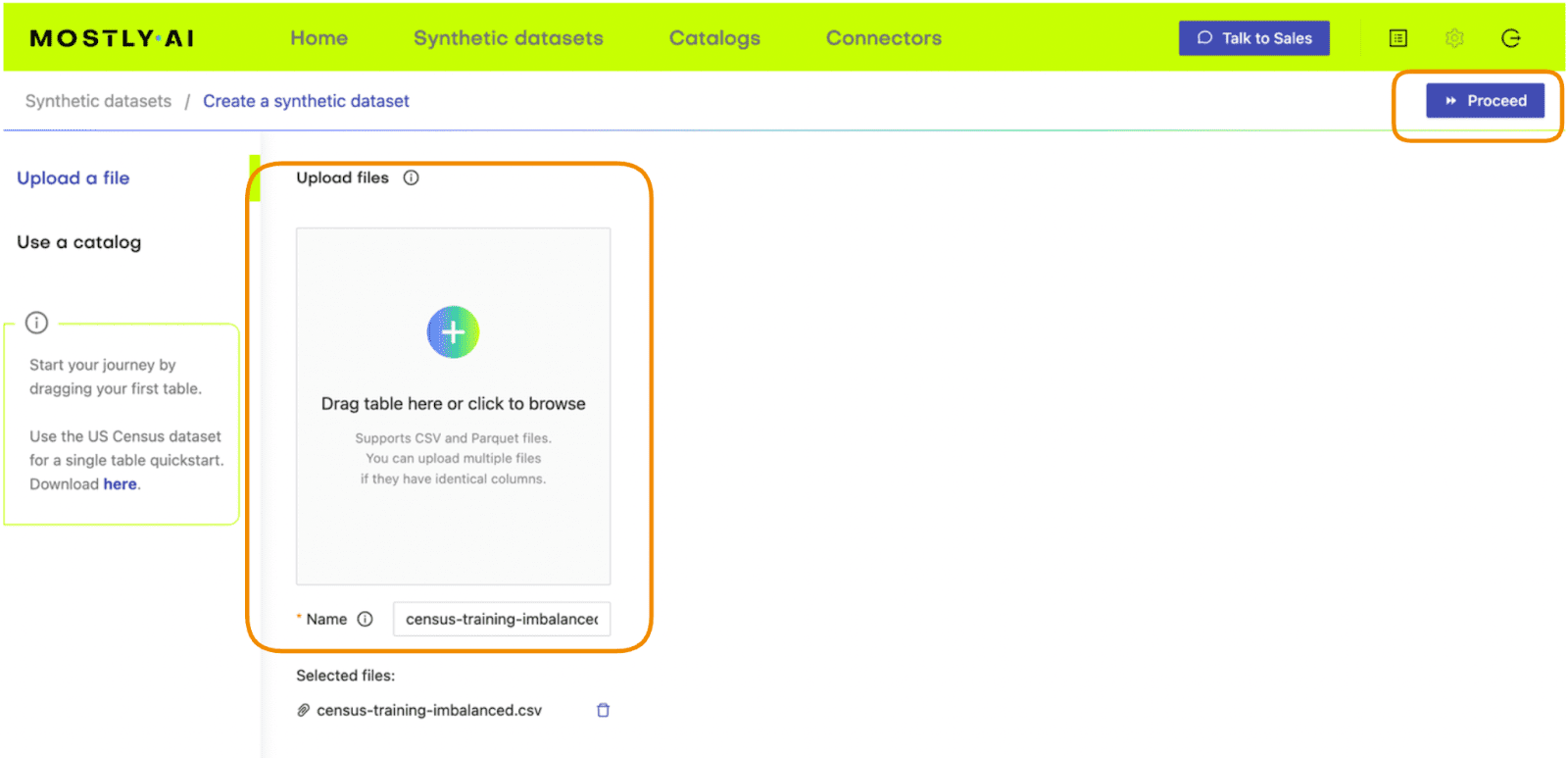

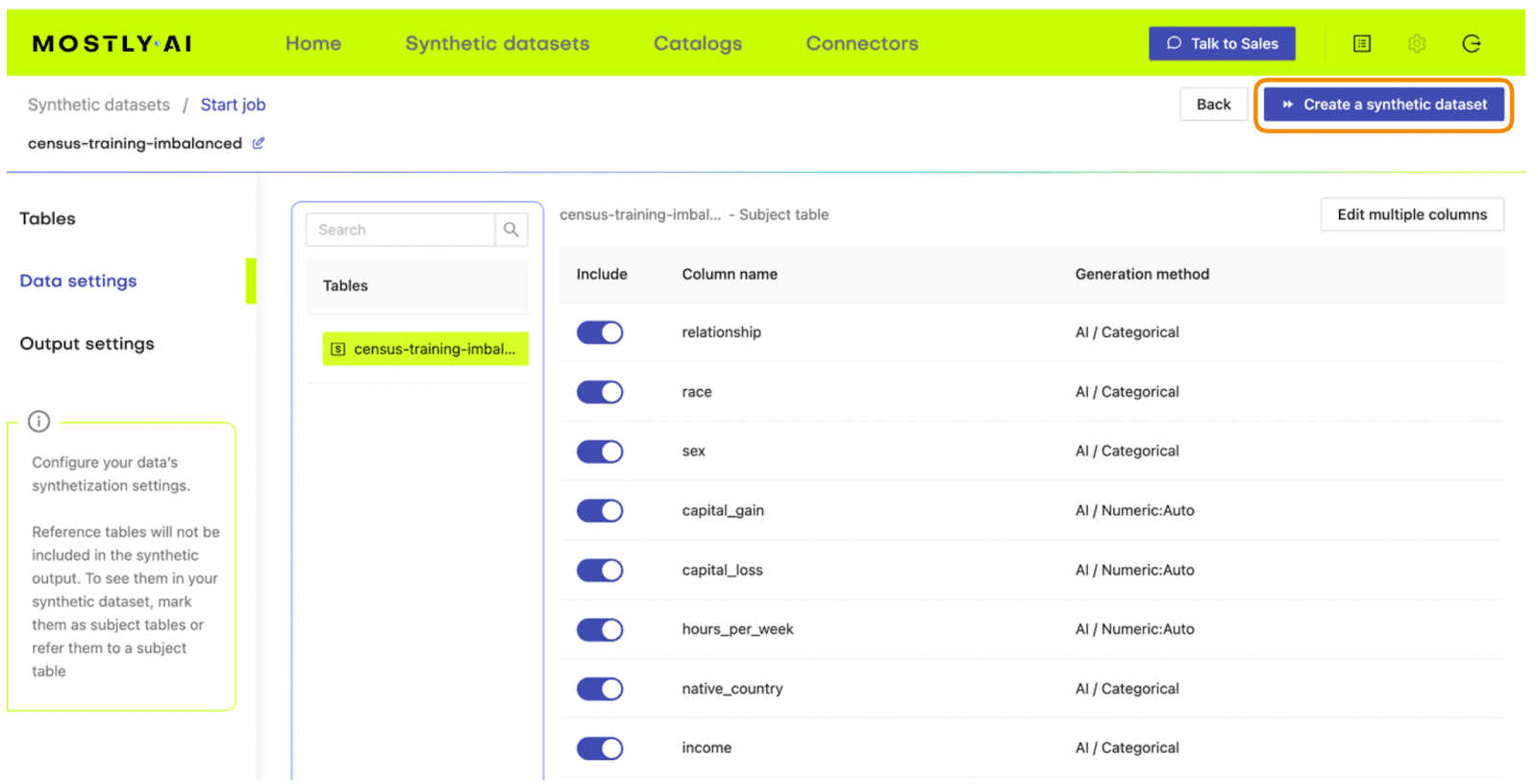

MOSTLY AI offers a synthetic rebalancing feature that can be used with any categorical column. Let’s walk through how this works:

- Download the imbalanced dataset here if you haven’t generated it yourself already. Use Ctrl+S or Cmd+S to save the file locally.

- Go to your MOSTLY AI account and navigate to “Synthetic Datasets”. Upload

census-training-imbalanced.csvand click “Proceed”.

Fig 2 - Upload the original dataset to MOSTLY AI’s synthetic data generator.

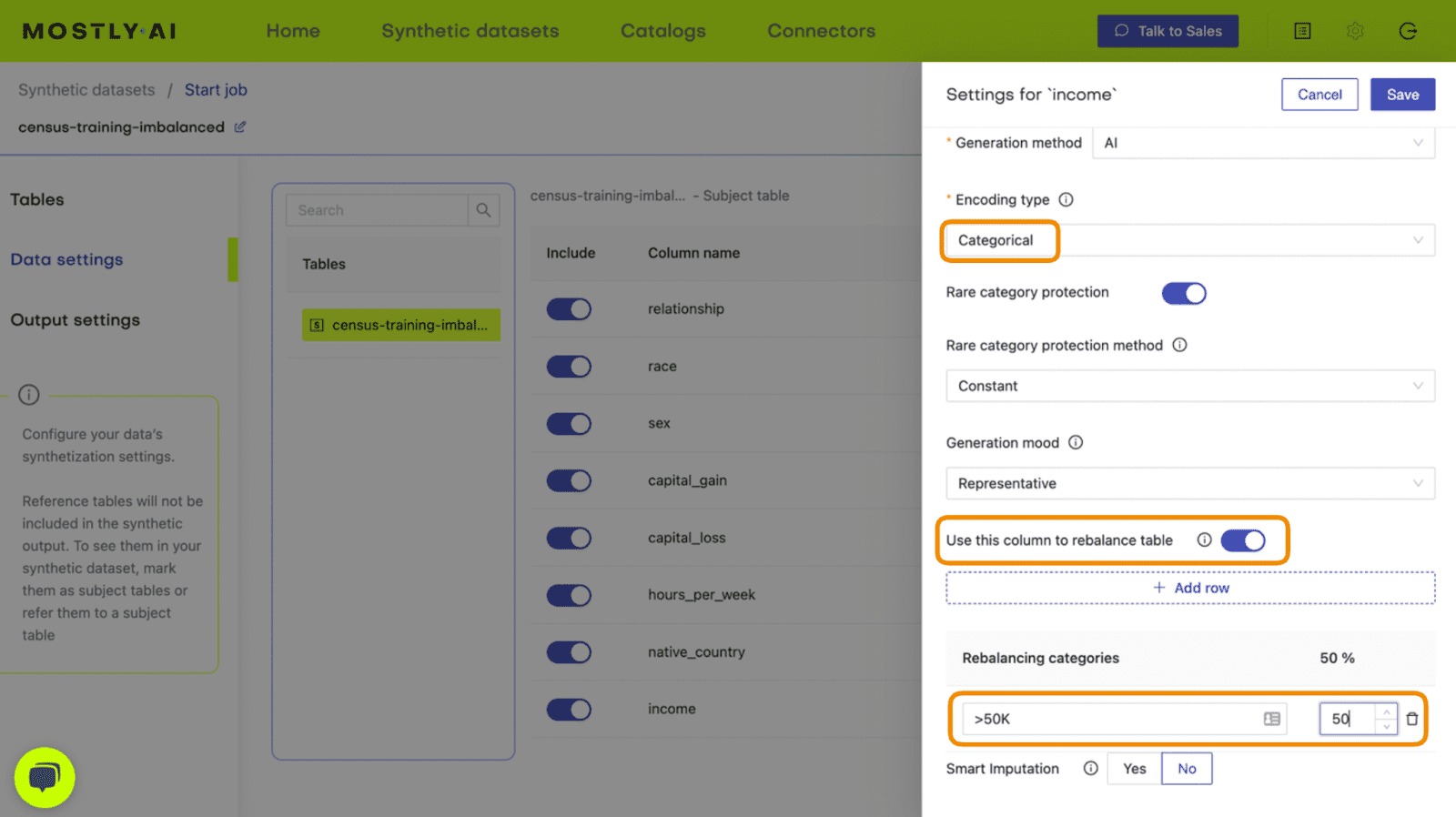

- On the next page, click “Data Settings” and then click on the “Income” column

Fig 3 - Navigate to the Data Settings of the Income column.

- Set the Encoding Type to “Categorical” and select the option to “Use this column to rebalance the table”. Then add a new row and rebalance the “>50K” column to be “50%” of the dataset. This will synthetically upsample the minority class to create an even split between high-income and low-income records.

Fig 4 - Set the relevant settings to rebalance the income column.

- Click “Save” and on the next page click “Create a synthetic dataset” to launch the job.

Fig 5 - Launch the synthetic data generation

Once the synthesization is complete, you can download the synthetic dataset to disk. Then return to wherever you are running your code and use the following code block to create a DataFrame containing the synthetic data.

# upload synthetic dataset

import pandas as pd

try:

# check whether we are in Google colab

from google.colab import files

print("running in COLAB mode")

repo = 'https://github.com/mostly-ai/mostly-tutorials/raw/dev/rebalancing'

import io

uploaded = files.upload()

syn = pd.read_csv(io.BytesIO(list(uploaded.values())[0]))

print(f"uploaded synthetic data with {syn.shape[0]:,} records and {syn.shape[1]:,} attributes")

except:

print("running in LOCAL mode")

repo = '.'

print("adapt `syn_file_path` to point to your generated synthetic data file")

syn_file_path = './census-synthetic-balanced.csv'

syn = pd.read_csv(syn_file_path)

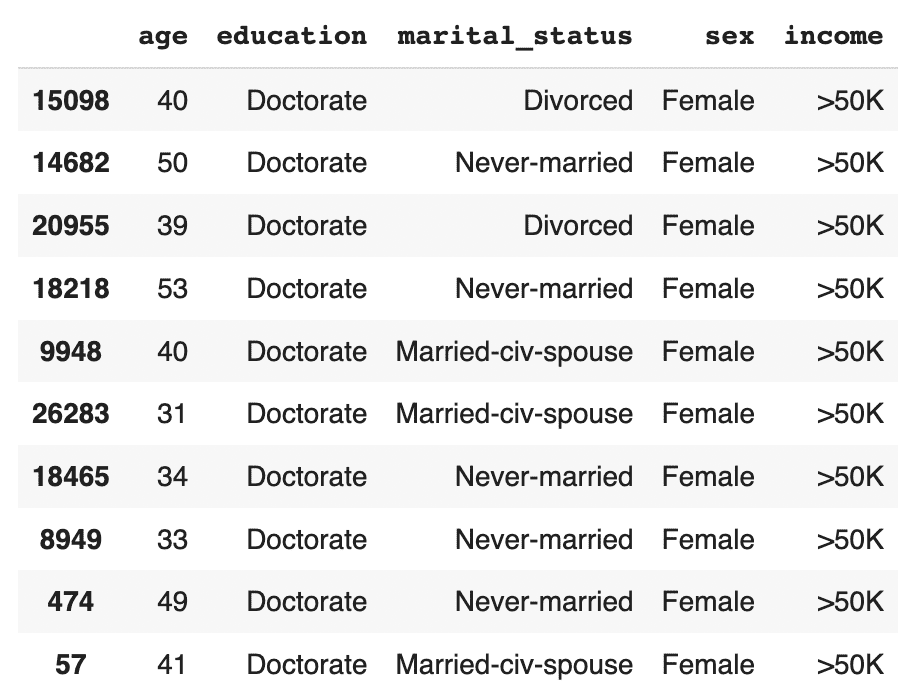

print(f"read synthetic data with {syn.shape[0]:,} records and {syn.shape[1]:,} attributes")Let's now repeat the data exploration steps we performed above with the original, imbalanced dataset. First, let’s display 10 randomly sampled synthetic records. We'll subset again for legibility. You can run this line multiple times to get different samples.

# sample 10 random records

syn_sub = syn[['age','education','marital_status','sex','income']]

syn_sub.sample(n=10)

This time, you should see that the records are evenly distributed across the two income classes.

Let's now investigate all female doctorates with a high income in the synthetic, rebalanced dataset:

syn_sub[

(syn_sub['income']=='>50K')

& (syn_sub.sex=='Female')

& (syn_sub.education=='Doctorate')

].sample(n=10)

The synthetic data contains a list of realistic, statistically sound female doctorates with a high income. This is great news for our machine learning use case because it means that our ML model will have plenty of data to learn about this particular important subsegment.

Evaluate ML performance using TSTR

Let’s now compare the quality of different rebalancing methods by training a machine learning model on the rebalanced data and evaluating the predictive performance of the resulting models.

We will investigate and compare 3 types of rebalancing:

- Random (or “naive”) oversampling

- SMOTE upsampling

- Synthetic rebalancing

The code block below defines the functions that will preprocess your data, train a LightGBM model and evaluate its performance using a holdout dataset. For more detailed descriptions of this code, take a look at the Train-Synthetic-Test-Real tutorial.

# import necessary libraries

import lightgbm as lgb

from lightgbm import early_stopping

from sklearn.model_selection import train_test_split

from sklearn.metrics import roc_auc_score, f1_score

import seaborn as sns

import matplotlib.pyplot as plt

# define target column and value

target_col = 'income'

target_val = '>50K'

# define preprocessing function

def prepare_xy(df: pd.DataFrame):

y = (df[target_col]==target_val).astype(int)

str_cols = [

col for col in df.select_dtypes(['object', 'string']).columns if col != target_col

]

for col in str_cols:

df[col] = pd.Categorical(df[col])

cat_cols = [

col for col in df.select_dtypes('category').columns if col != target_col

]

num_cols = [

col for col in df.select_dtypes('number').columns if col != target_col

]

for col in num_cols:

df[col] = df[col].astype('float')

X = df[cat_cols + num_cols]

return X, y

# define training function

def train_model(X, y):

cat_cols = list(X.select_dtypes('category').columns)

X_trn, X_val, y_trn, y_val = train_test_split(X, y, test_size=0.2, random_state=1)

ds_trn = lgb.Dataset(

X_trn,

label=y_trn,

categorical_feature=cat_cols,

free_raw_data=False

)

ds_val = lgb.Dataset(

X_val,

label=y_val,

categorical_feature=cat_cols,

free_raw_data=False

)

model = lgb.train(

params={

'verbose': -1,

'metric': 'auc',

'objective': 'binary'

},

train_set=ds_trn,

valid_sets=[ds_val],

callbacks=[early_stopping(5)],

)

return model

# define evaluation function

def evaluate_model(model, hol):

X_hol, y_hol = prepare_xy(hol)

probs = model.predict(X_hol)

preds = (probs >= 0.5).astype(int)

auc = roc_auc_score(y_hol, probs)

f1 = f1_score(y_hol, probs>0.5, average='macro')

probs_df = pd.concat([

pd.Series(probs, name='probability').reset_index(drop=True),

pd.Series(y_hol, name=target_col).reset_index(drop=True)

], axis=1)

sns.displot(

data=probs_df,

x='probability',

hue=target_col,

bins=20,

multiple="stack"

)

plt.title(f"AUC: {auc:.1%}, F1 Score: {f1:.2f}", fontsize = 20)

plt.show()

return auc

# create holdout dataset

df_hol = pd.read_csv(f'{repo}/census-holdout.csv')

df_hol_min = df_hol.loc[df_hol['income']=='>50K']

print(f"Holdout data consists of {df_hol.shape[0]:,} records",

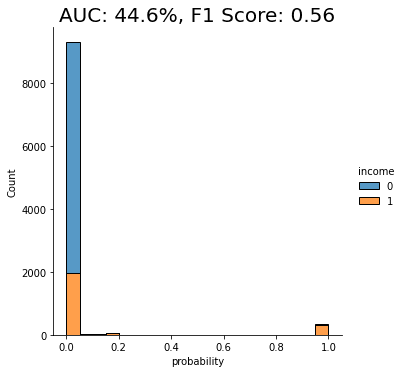

f"with {df_hol_min.shape[0]:,} samples from the minority class")ML performance of imbalanced dataset

Let’s now train a LightGBM model on the original, heavily imbalanced dataset and evaluate its predictive performance. This will give us a baseline against which we can compare the performance of the different rebalanced datasets.

X_trn, y_trn = prepare_xy(trn)

model_trn = train_model(X_trn, y_trn)

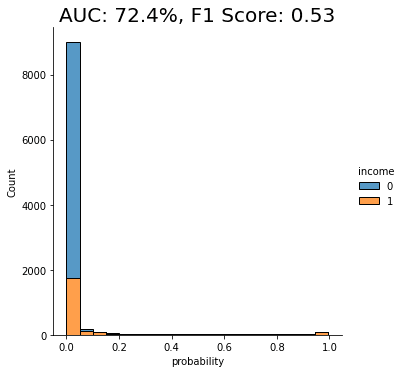

auc_trn = evaluate_model(model_trn, df_hol)

With an AUC of about 50%, the model trained on the imbalanced dataset is just as good as a flip of a coin, or, in other words, not worth very much at all. The downstream LightGBM model is not able to learn any signal due to the low number of minority-class samples.

Let’s see if we can improve this using rebalancing.

Naive rebalancing

First, let’s rebalance the dataset using the random oversampling method, also known as “naive rebalancing”. This method simply takes the minority class records and copies them to increase their quantity. This increases the number of records of the minority class but does not increase the statistical diversity. We will use the imblearn library to perform this step, feel free to check out their documentation for more context.

The code block performs the naive rebalancing, trains a LightGBM model using the rebalanced dataset and evaluates its predictive performance:

from imblearn.over_sampling import RandomOverSampler

X_trn, y_trn = prepare_xy(trn)

sm = RandomOverSampler(random_state=1)

X_trn_up, y_trn_up = sm.fit_resample(X_trn, y_trn)

model_trn_up = train_model(X_trn_up, y_trn_up)

auc_trn_up = evaluate_model(model_trn_up, df_hol)

We see a clear improvement in predictive performance, with an AUC score of around 70%. This is better than the baseline model trained on the imbalanced dataset, but still not great. We see that a significant portion of the “0” class (low-income) is being incorrectly classified as “1” (high-income).

This is not surprising because, as stated above, this rebalancing method just copies the existing minority class records. This increases their quantity but does not add any new statistical information into the model and therefore does not offer the model much data that it can use to learn about minority-class instances that are not present in the training data.

Let’s see if we can improve on this using another rebalancing method.

SMOTE rebalancing

SMOTE upsampling is a state-of-the art upsampling method which, unlike the random oversampling seen above, does create novel, statistically representative samples. It does so by interpolating between neighboring samples. It’s important to note, however, that SMOTE upsampling is non-privacy-preserving.

The following code block performs the rebalancing using SMOTE upsampling, trains a LightGBM model on the rebalanced dataset, and evaluates its performance:

from imblearn.over_sampling import SMOTENC

X_trn, y_trn = prepare_xy(trn)

sm = SMOTENC(

categorical_features=X_trn.dtypes=='category',

random_state=1

)

X_trn_smote, y_trn_smote = sm.fit_resample(X_trn, y_trn)

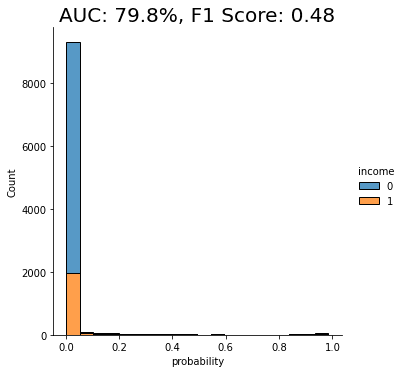

model_trn_smote = train_model(X_trn_smote, y_trn_smote)

auc_trn_smote = evaluate_model(model_trn_smote, df_hol)

We see another clear jump in performance: the SMOTE upsampling boosts the performance of the downstream model to close to 80%. This is clearly an improvement from the random oversampling we saw above, and for this reason, SMOTE is quite commonly used.

Let’s see if we can do even better.

Synthetic rebalancing with MOSTLY AI

In this final step, let’s take the synthetically rebalanced dataset that we generated earlier using MOSTLY AI to train a LightGBM model. We’ll then evaluate the performance of this downstream ML model and compare it against those we saw above.

The code block below prepares the synthetically rebalanced data, trains the LightGBM model, and evaluates it:

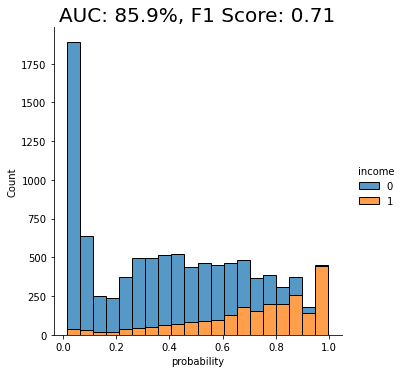

X_syn, y_syn = prepare_xy(syn)

model_syn = train_model(X_syn, y_syn)

auc_syn = evaluate_model(model_syn, df_hol)

Both performance measures, the AUC as well as the macro-averaged F1 score, are significantly better for the model that was trained on synthetic data than if it were trained on any of the other methods. We can also see that the portion of “0”s incorrectly classified as “1”s has dropped significantly.

The synthetically rebalanced dataset has enabled the model to make fine-grained distinctions between the high-income and low-income records. This is strong proof of the value of synthetic rebalancing for learning more about a small sub-group within the population.

The value of synthetic rebalancing

In this tutorial, you have seen firsthand the value of synthetic rebalancing for downstream ML classification problems. You have gained an understanding of the necessity of rebalancing when working with imbalanced datasets in order to provide the machine learning model with more samples of the minority class. You have learned how to perform synthetic rebalancing with MOSTLY AI and observed the superior performance of this rebalancing method when compared against other methods on the same dataset. Of course, the actual lift in performance may vary depending on the dataset, the predictive task, and the chosen ML model.

What’s next?

In addition to walking through the above instructions, we suggest experimenting with the following in order to get an even better grasp of synthetic rebalancing:

- repeat the experiments for different class imbalances,

- repeat the experiments for different datasets, ML models, and predictive tasks.

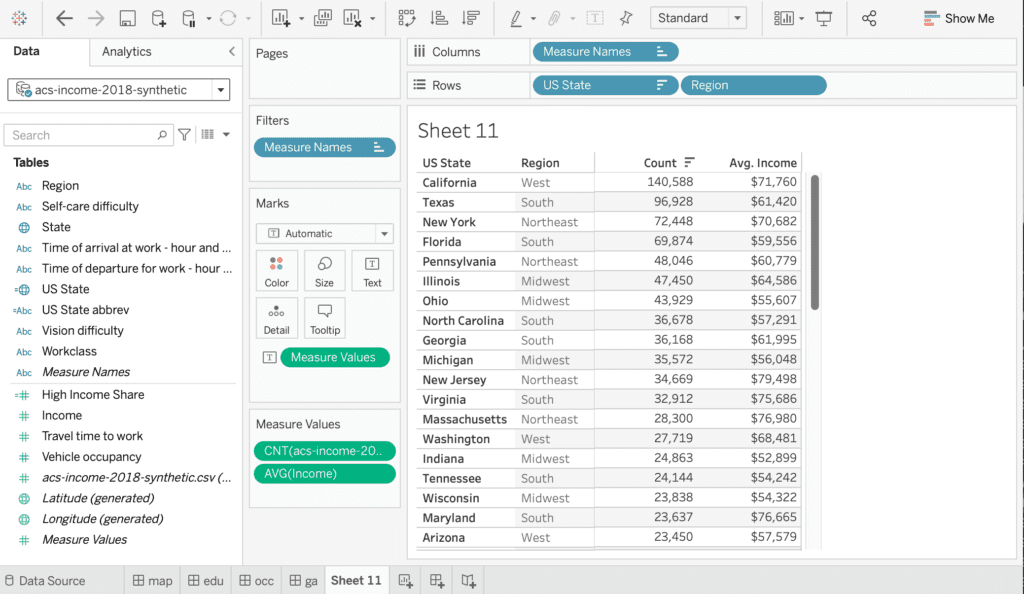

In this tutorial, you will explore the relationship between the size of your training sample and synthetic data accuracy. This is an important concept to master because it can help you significantly reduce the runtime and computational cost of your training runs while maintaining the optimal accuracy you require.

We will start with a single real dataset, which we will use to create 5 different synthetic datasets, each with a different training sample size. We will then evaluate the accuracy of the 5 resulting synthetic datasets by looking at individual variable distributions, by verifying rule-adherence and by evaluating their performance on a downstream machine-learning (ML) task. The Python code for this tutorial is runnable and publicly available in this Google Colab notebook.

Size vs synthetic data accuracy tradeoff

Our working hypothesis is that synthetic data accuracy will increase as the number of training samples increases: the more data the generative AI model has to learn from, the better it will perform.

Fig 1 - The Size vs Accuracy Tradeoff

But more training samples also means more data to crunch; i.e. more computational cost and a longer runtime. Our goal, then, will be to find the sweet spot at which we achieve optimal accuracy with the lowest number of training samples possible.

Note that we do not expect synthetic data to ever perfectly match the original data. This would only be satisfied by a copy of the data, which obviously would neither satisfy any privacy requirements nor would provide any novel samples. That being said, we shall expect that due to sampling variance the synthetic data can deviate. Ideally this deviation will be just as much, and not more, than the deviation that we would observe by analyzing an actual holdout dataset.

Synthesize your data

For this tutorial, we will be using the same UCI Adult Income dataset, as well as the same training and validation split, that was used in the Train-Synthetic-Test-Real tutorial. This means we have a total of 48,842 records across 15 attributes, and will be using up to 39,074 (=80%) of those records for the synthesis.

- Download the training data

census-training.csvby clicking here and pressing Ctrl+S or Cmd+S to save the file locally. This is an 80% sample of the full dataset. The remaining 20% sample (which we’ll use for evaluation later) can be fetched from here.

Fig 2 - Download the original training data and save it to disk.

- Synthesize census-training.csv via MOSTLY AI's synthetic data generator multiple times, each time with a different number of maximum training samples. We will use the following training sample sizes in this tutorial: 100, 400, 1600, 6400, 25600. Always generate a consistent number of subjects, e.g. 10,000. You can leave all other settings at their default.

- Download the generated datasets from MOSTLY AI as CSV files, and rename each CSV file with an appropriate name (eg. syn_00100.csv, syn_00400.csv, etc.)

- Now ensure you can access the synthetic datasets from wherever you are running the code for this tutorial. If you are working from the Colab notebook, you can upload the synthetic datasets by executing the code block below:

# upload synthetic dataset

import pandas as pd

try:

# check whether we are in Google colab

from google.colab import files

print("running in COLAB mode")

repo = 'https://github.com/mostly-ai/mostly-tutorials/raw/dev/size-vs-accuracy'

import io

uploaded = files.upload()

synthetic_datasets = {

file_name: pd.read_csv(io.BytesIO(uploaded[file_name]), skipinitialspace=True)

for file_name in uploaded

}

except:

print("running in LOCAL mode")

repo = '.'

print("upload your synthetic data files to this directory via Jupyter")

from pathlib import Path

syn_files = sorted(list(Path('.').glob('syn*csv')))

synthetic_datasets = {

file_name.name: pd.read_csv(file_name)

for file_name in syn_files

}

for k, df in synthetic_datasets.items():

print(f"Loaded Dataset `{k}` with {df.shape[0]:,} records and {df.shape[1]:,} attributes")

Evaluate synthetic data accuracy

Now that you have your 5 synthetic datasets (each trained on a different training sample size) let’s take a look at the high-level accuracy scores of these synthetic datasets.

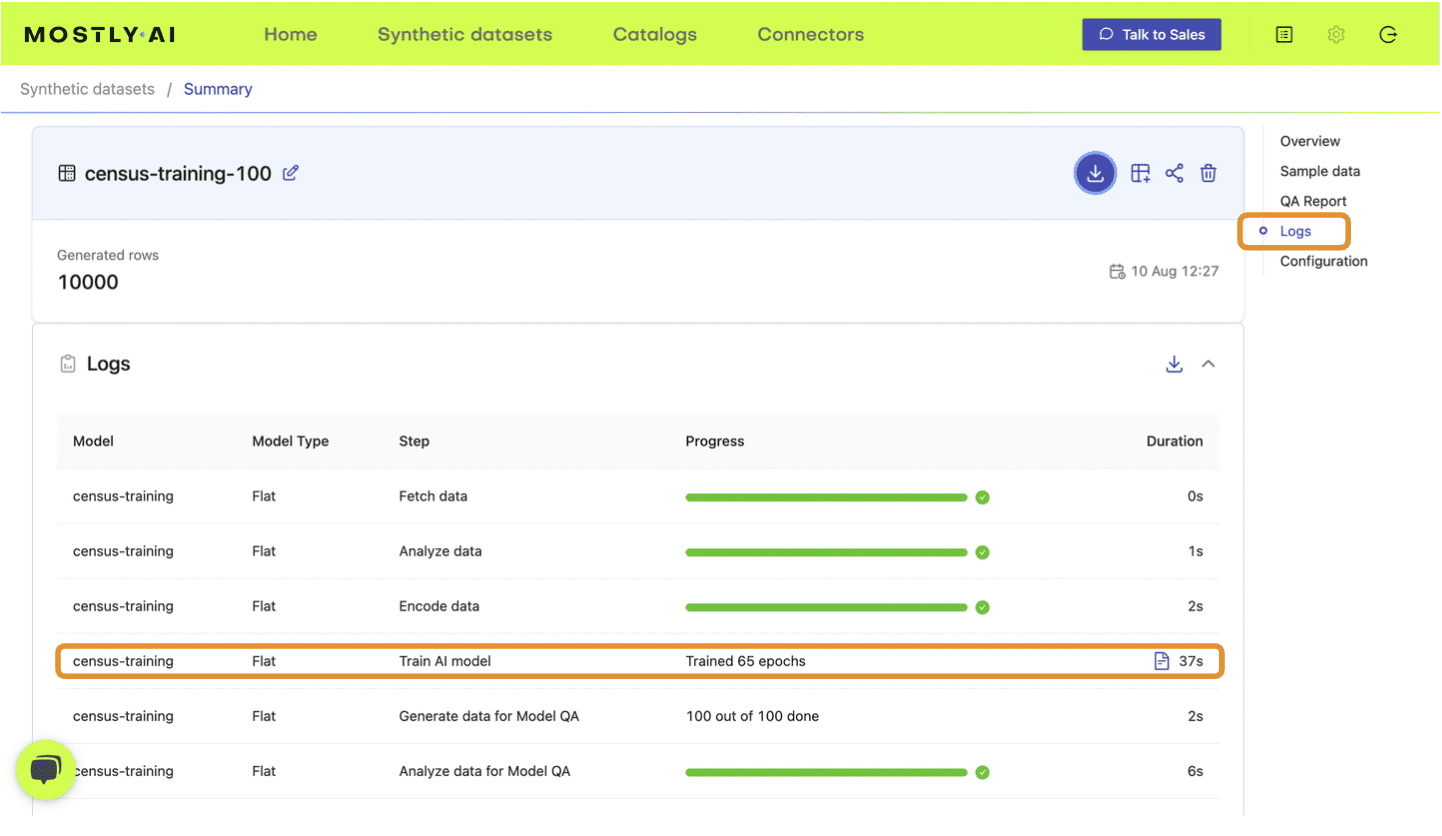

- Navigate to your MOSTLY AI account and note the reported overall synthetic data accuracy as well as the runtime of each job:

Fig 3 - Note the accuracy score in the QA Report tab of your completed synthetic dataset job.

Fig 4 - Note the training time from the Logs tab.

- Update the following DataFrame accordingly:

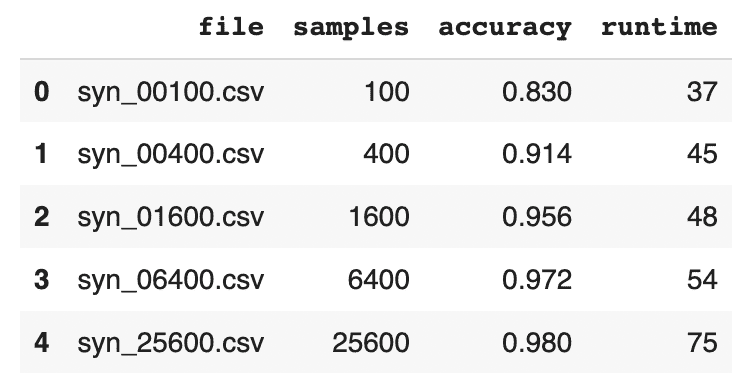

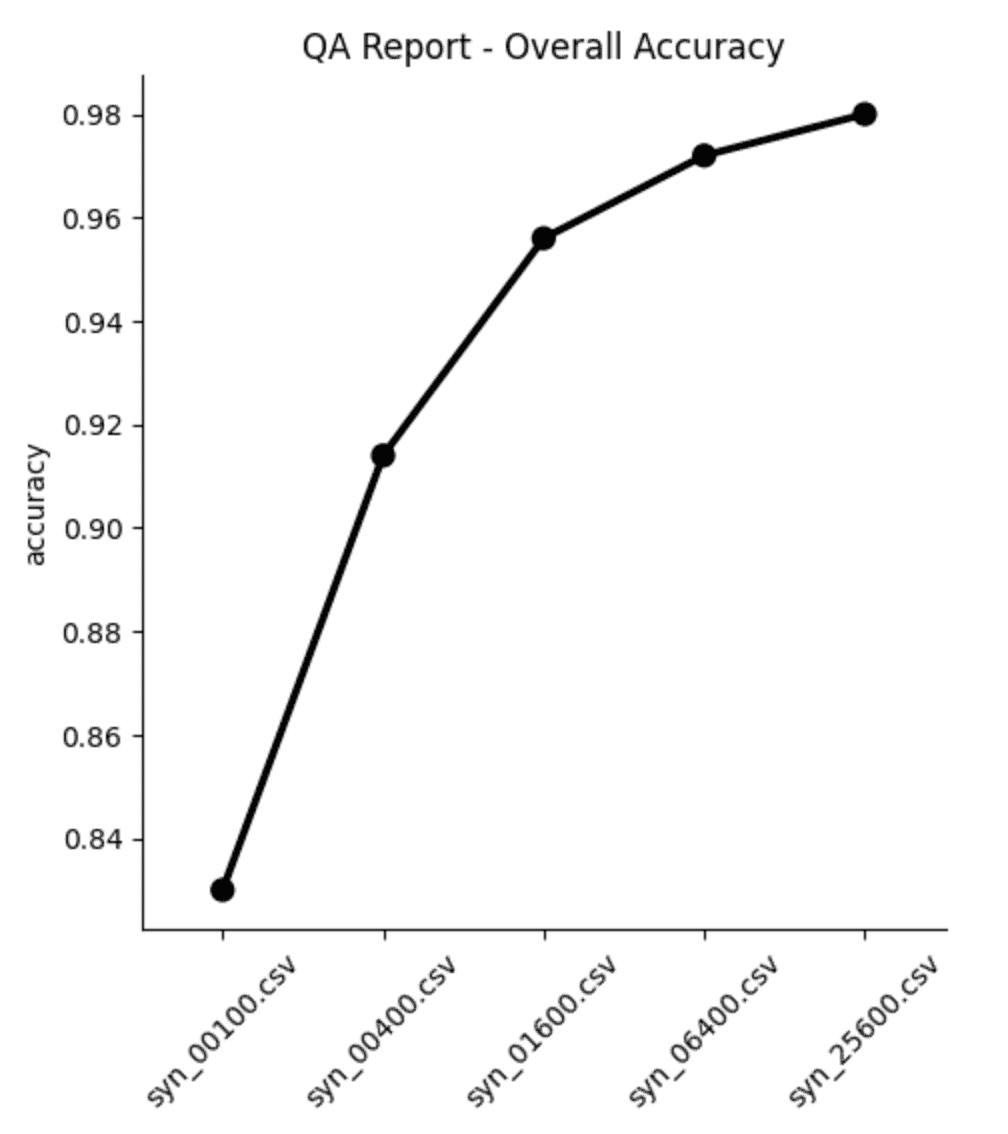

results = pd.DataFrame([

{'file': 'syn_00100.csv', 'samples': 100, 'accuracy': 0.830, 'runtime': 37},

{'file': 'syn_00400.csv', 'samples': 400, 'accuracy': 0.914, 'runtime': 45},

{'file': 'syn_01600.csv', 'samples': 1600, 'accuracy': 0.956, 'runtime': 48},

{'file': 'syn_06400.csv', 'samples': 6400, 'accuracy': 0.972, 'runtime': 54},

{'file': 'syn_25600.csv', 'samples': 25600, 'accuracy': 0.980, 'runtime': 75},

])

results

- Visualize the results using the code block below:

import seaborn as sns

import matplotlib.pyplot as plt

sns.catplot(data=results, y='accuracy', x='file', kind='point', color='black')

plt.xticks(rotation=45)

plt.xlabel('')

plt.title('QA Report - Overall Accuracy')

plt.show()

From both the table and the plot we can see that, as expected, the overall accuracy of the synthetic data improves as we increase the number of training samples. But notice that the increase is not strictly linear: while we see big jumps in accuracy performance between the first three datasets (100, 400 and 1,600 samples, respectively), the jumps get smaller as the training samples increase in size. Between the last two datasets (trained on 6,400 and 25,600 samples, respectively) the increase in accuracy is less than 0.1%, while the runtime increases by more than 35%.

Synthetic data quality deep-dive

The overall accuracy score is a great place to start when assessing the quality of your synthetic data, but let’s now dig a little deeper to see how the synthetic dataset compares to the original data from a few different angles. We’ll take a look at:

- Distributions of individual variables

- Rule adherence

- Performance on a downstream ML task

Before you jump into the next sections, run the code block below to concatenate all the 5 synthetic datasets together in order to facilitate comparison:

# combine synthetics

df = pd.concat([d.assign(split=k) for k, d in synthetic_datasets.items()], axis=0)

df['split'] = pd.Categorical(df['split'], categories=df["split"].unique())

df.insert(0, 'split', df.pop('split'))

# combine synthetics and original

df_trn = pd.read_csv(f'{repo}/census-training.csv')

df_hol = pd.read_csv(f'{repo}/census-holdout.csv')

dataset = synthetic_datasets | {'training': df_trn, 'holdout': df_hol}

df_all = pd.concat([d.assign(split=k) for k, d in dataset.items()], axis=0)

df_all['split'] = pd.Categorical(df_all['split'], categories=df_all["split"].unique())

df_all.insert(0, 'split', df_all.pop('split'))Single variable distributions

Let’s explore the distributions of some individual variables.

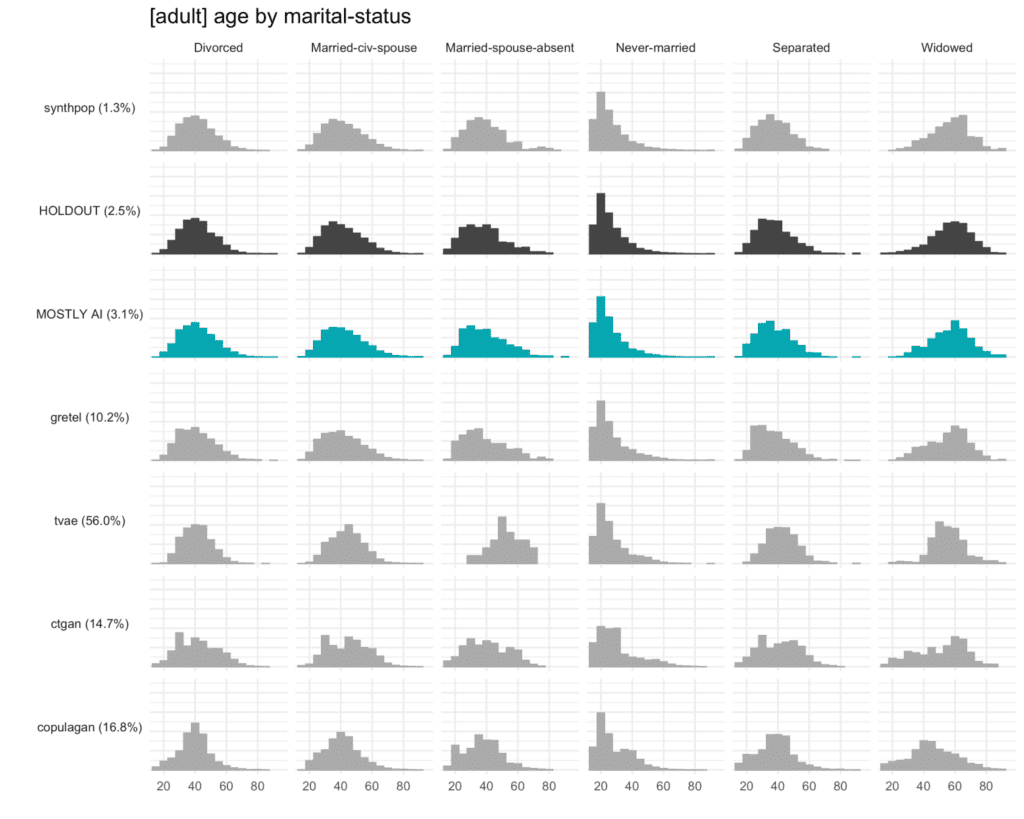

The more training samples have been used for the synthesis, the closer the synthetic distributions are expected to be to the original ones. Note that we can also see deviations within statistics between the target and the holdout data. This is expected due to the sampling variance. The smaller the dataset, the larger the sampling variance will be. The ideal synthetic dataset would deviate from the original dataset just as much as the holdout set does.

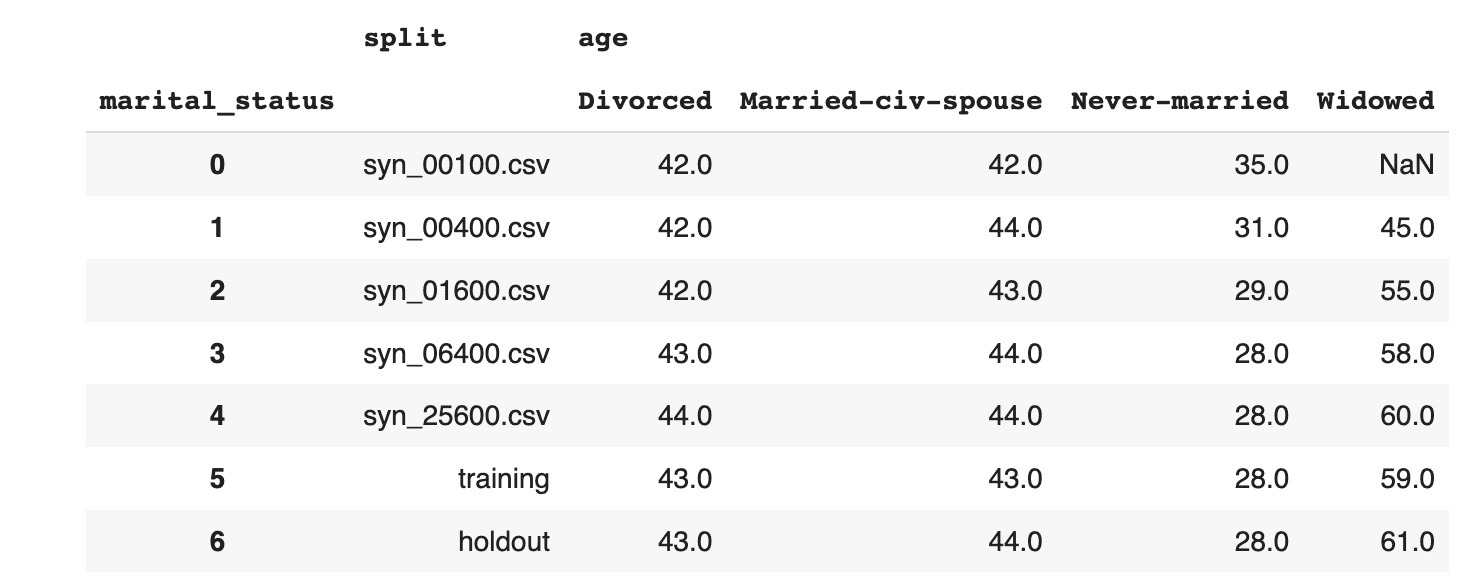

Start by taking a look at the average age, split by marital status:

stats = (

df_all.groupby(['split', 'marital_status'])['age']

.mean().round().to_frame().reset_index(drop=False)

)

stats = (

stats.loc[~stats['marital_status']

.isin(['_RARE_', 'Married-AF-spouse', 'Married-spouse-absent', 'Separated'])]

)

stats = (

stats.pivot_table(index='split', columns=['marital_status'])

.reset_index(drop=False)

)

stats

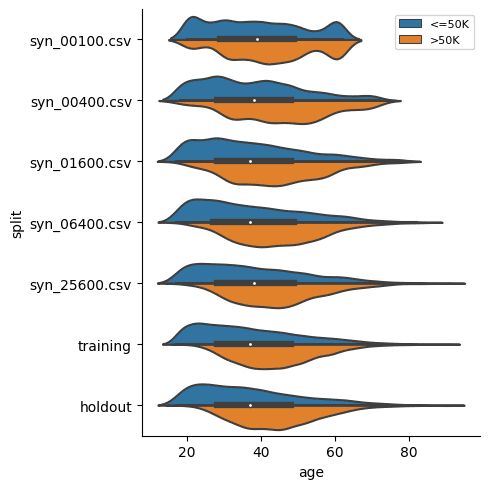

Then explore the age distribution, split by income:

sns.catplot(

data=df_all,

x='age',

y='split',

hue='income',

kind='violin',

split=True,

legend=None

)

plt.legend(loc='upper right', title='', prop={'size': 8})

plt.show()

In both of these cases we see, again, that the synthetic datasets trained on more training samples resemble the original dataset more closely. We also see that the difference between the dataset trained on 6,400 samples and that trained on 25,600 seems to be minimal. This means that if the accuracy of these specific individual variable distributions is most important to you, you could confidently train your synthetic data generation model using just 6,400 samples (rather than the full 39,074 records). This will save you significantly in computational costs and runtime.

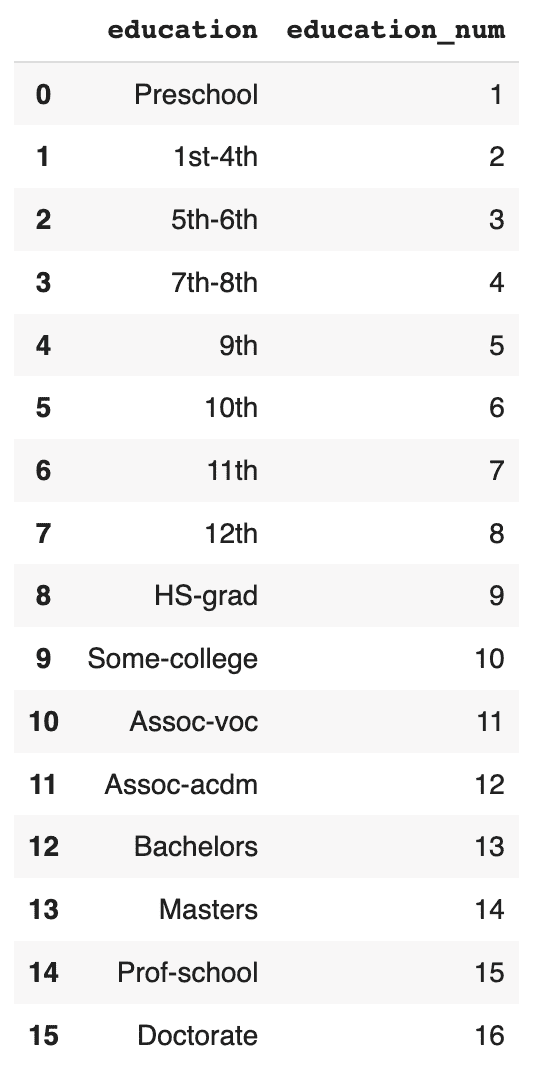

Rule Adherence

The original data has a 1:1 relationship between the education and education_num columns: each textual education level in the education column has a corresponding numerical value in the education_num column.

Let's check in how many cases the generated synthetic data has correctly retained that specific rule between these two columns.

First, display the matching columns in the original training data:

# display unique combinations of `education` and `education_num`

(df_trn[['education', 'education_num']]

.drop_duplicates()

.sort_values('education_num')

.reset_index(drop=True)

)

Now, convert the education column to Categorical dtype, sort and calculate the ratio of correct matches:

# convert `education` to Categorical with proper sort order

df['education'] = pd.Categorical(

df['education'],

categories=df_trn.sort_values('education_num')['education'].unique())

# calculate correct match

stats = (

df.groupby('split')

.apply(lambda x: (x['education'].cat.codes+1 == x['education_num']).mean())

)

stats = stats.to_frame('matches').reset_index()

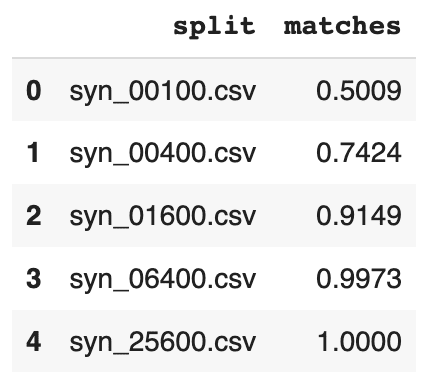

stats

Visualize the results:

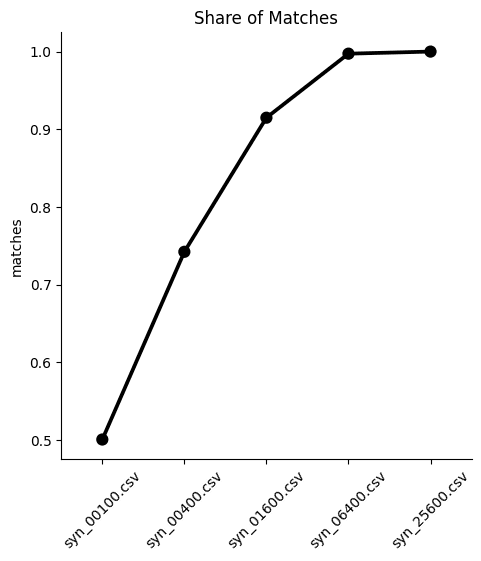

sns.catplot(

data=stats,

y='matches',

x='split',

kind='point',

color='black'

)

plt.xticks(rotation=45)

plt.xlabel('')

plt.title('Share of Matches')

plt.show()

We can see from both the table and the plot that the dataset trained on just 100 samples severely underperforms, matching the right values in the columns only half of the time. While performance improves as the training samples increase, only the synthetic dataset generated using 25,600 samples is able to reproduce this rule adherence 100%. This means that if rule adherence for these columns is crucial to the quality of your synthetic data, you should probably opt for a training size of 25,600.

Downstream ML task

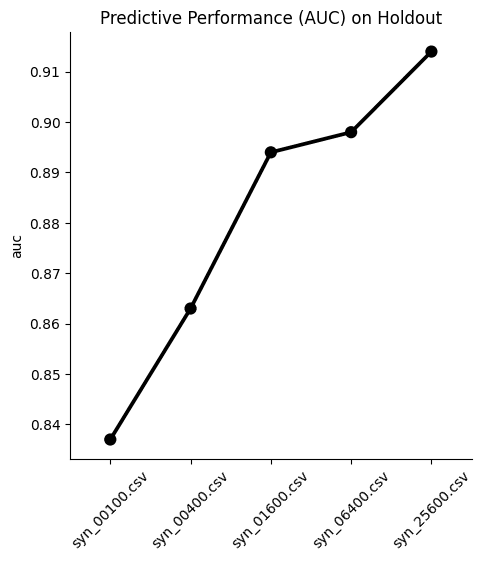

Finally, let’s evaluate the 5 synthetic datasets by evaluating their performance on a downstream machine learning task. This is also referred to as the Train-Synthetic-Test-Real evaluation methodology. You will train a ML model on each of the 5 synthetic datasets and then evaluate them on their performance against an actual holdout dataset containing real data which the ML model has never seen before (the remaining 20% of the dataset, which can be downloaded here).

The code block below defines the functions that will preprocess your data, train a LightGBM model and evaluate its performance. For more detailed descriptions of this code, take a look at the Train-Synthetic-Test-Real tutorial.

# import necessary libraries

import lightgbm as lgb

from lightgbm import early_stopping

from sklearn.model_selection import train_test_split

from sklearn.metrics import roc_auc_score

# define target column and value

target_col = 'income'

target_val = '>50K'

# prepare data, and split into features `X` and target `y`

def prepare_xy(df: pd.DataFrame):

y = (df[target_col]==target_val).astype(int)

str_cols = [

col for col in df.select_dtypes(['object', 'string']).columns if col != target_col

]

for col in str_cols:

df[col] = pd.Categorical(df[col])

cat_cols = [

col for col in df.select_dtypes('category').columns if col != target_col

]

num_cols = [

col for col in df.select_dtypes('number').columns if col != target_col

]

for col in num_cols:

df[col] = df[col].astype('float')

X = df[cat_cols + num_cols]

return X, y

# train ML model with early stopping

def train_model(X, y):

cat_cols = list(X.select_dtypes('category').columns)

X_trn, X_val, y_trn, y_val = train_test_split(X, y, test_size=0.2, random_state=1)

ds_trn = lgb.Dataset(

X_trn,

label=y_trn,

categorical_feature=cat_cols,

free_raw_data=False

)

ds_val = lgb.Dataset(

X_val,

label=y_val,

categorical_feature=cat_cols,

free_raw_data=False

)

model = lgb.train(

params={

'verbose': -1,

'metric': 'auc',

'objective': 'binary'

},

train_set=ds_trn,

valid_sets=[ds_val],

callbacks=[early_stopping(5)],

)

return model

# apply ML Model to some holdout data, report key metrics, and visualize scores

def evaluate_model(model, hol):

X_hol, y_hol = prepare_xy(hol)

probs = model.predict(X_hol)

preds = (probs >= 0.5).astype(int)

auc = roc_auc_score(y_hol, probs)

return auc

def train_and_evaluate(df):

X, y = prepare_xy(df)

model = train_model(X, y)

auc = evaluate_model(model, df_hol)

return aucNow calculate the performance metric for each of the 5 ML models:

aucs = {k: train_and_evaluate(df) for k, df in synthetic_datasets.items()}

aucs = pd.Series(aucs).round(3).to_frame('auc').reset_index()And visualize the results:

sns.catplot(

data=aucs,

y='auc',

x='index',

kind='point',

color='black'

)

plt.xticks(rotation=45)

plt.xlabel('')

plt.title('Predictive Performance (AUC) on Holdout')

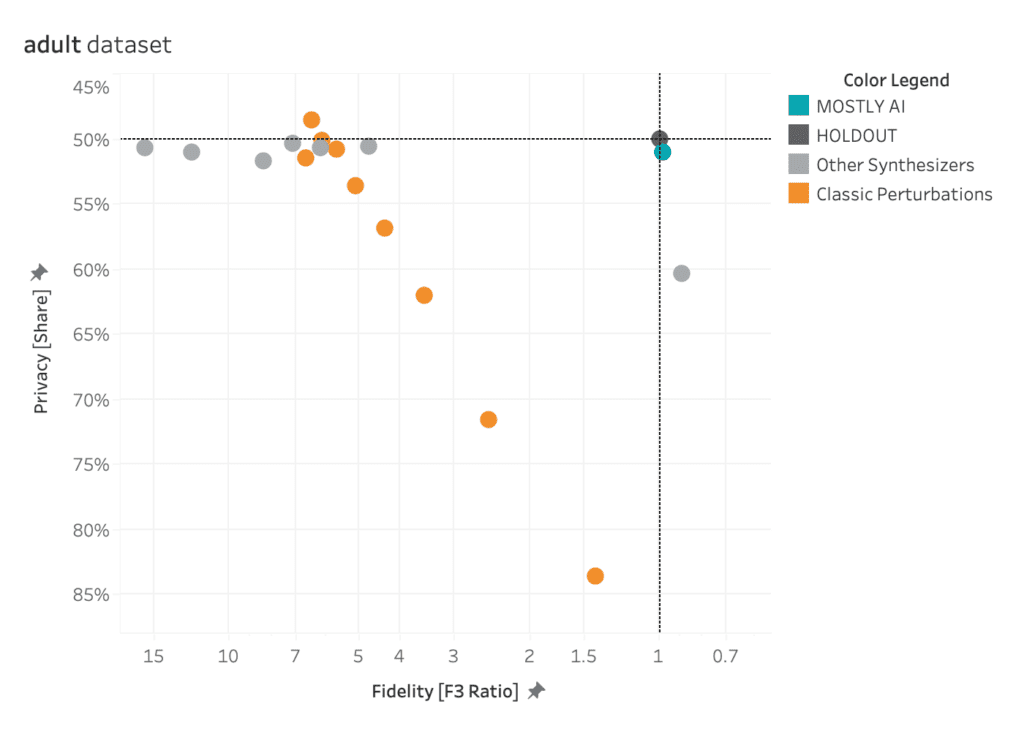

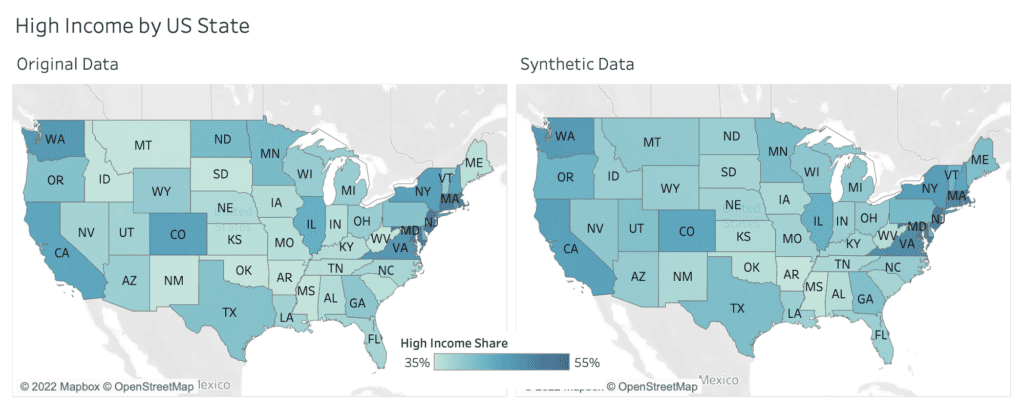

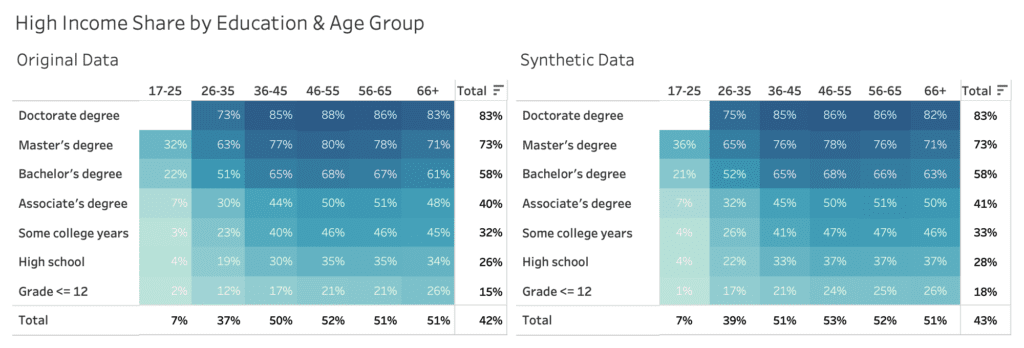

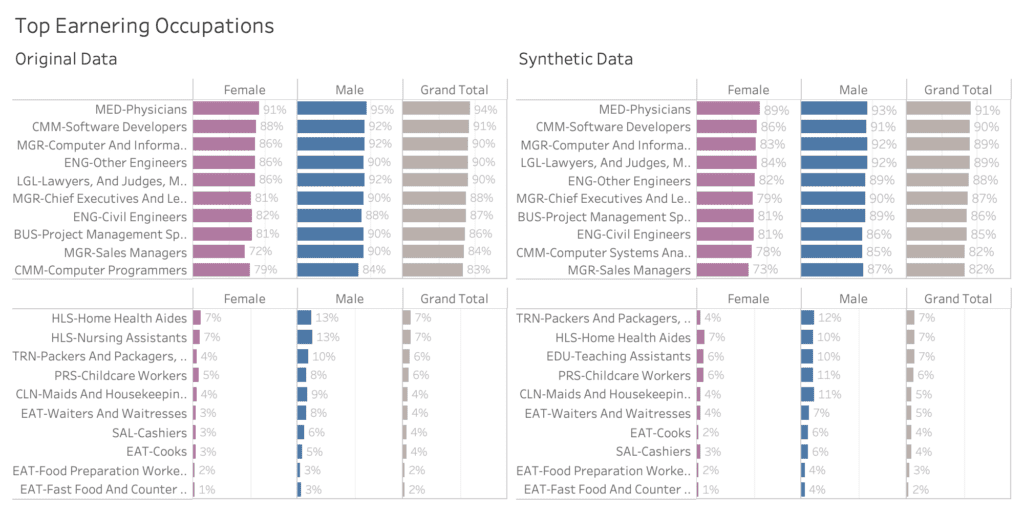

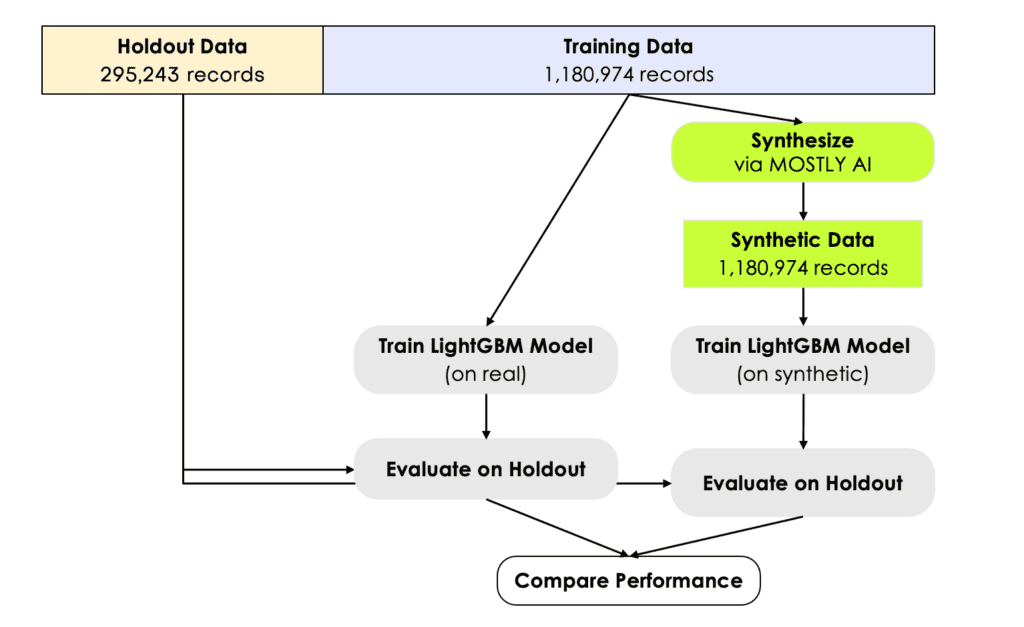

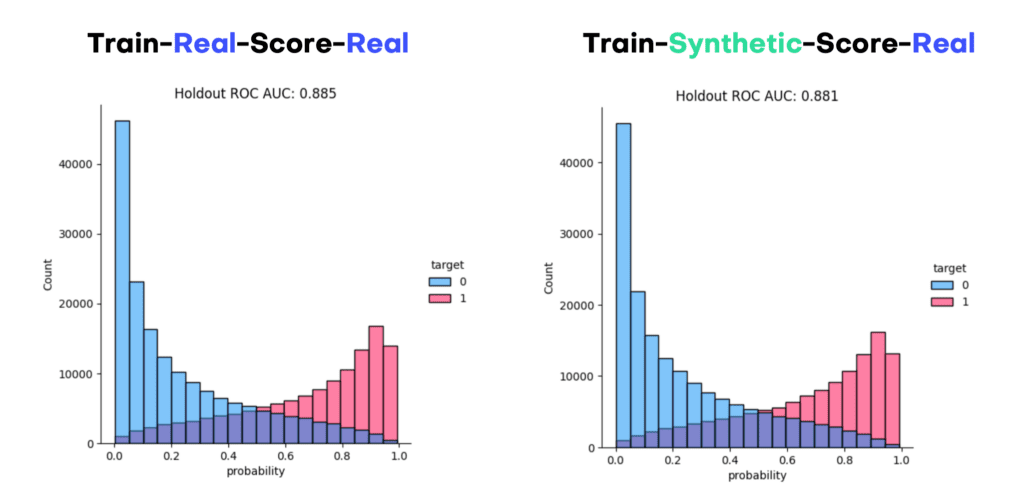

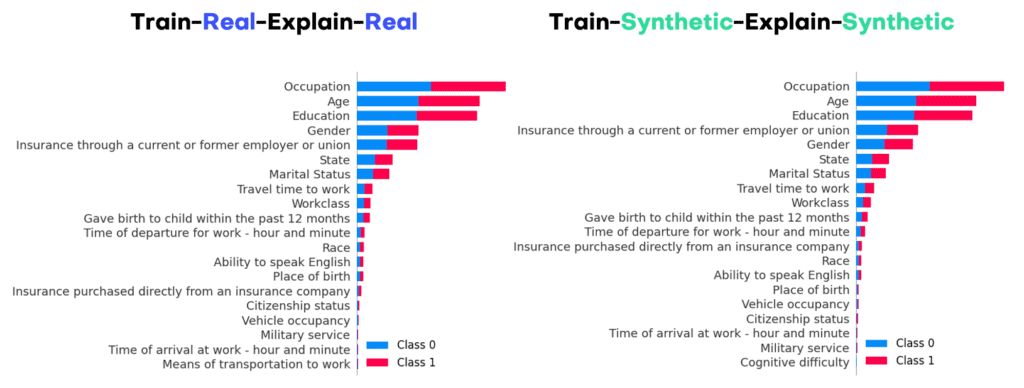

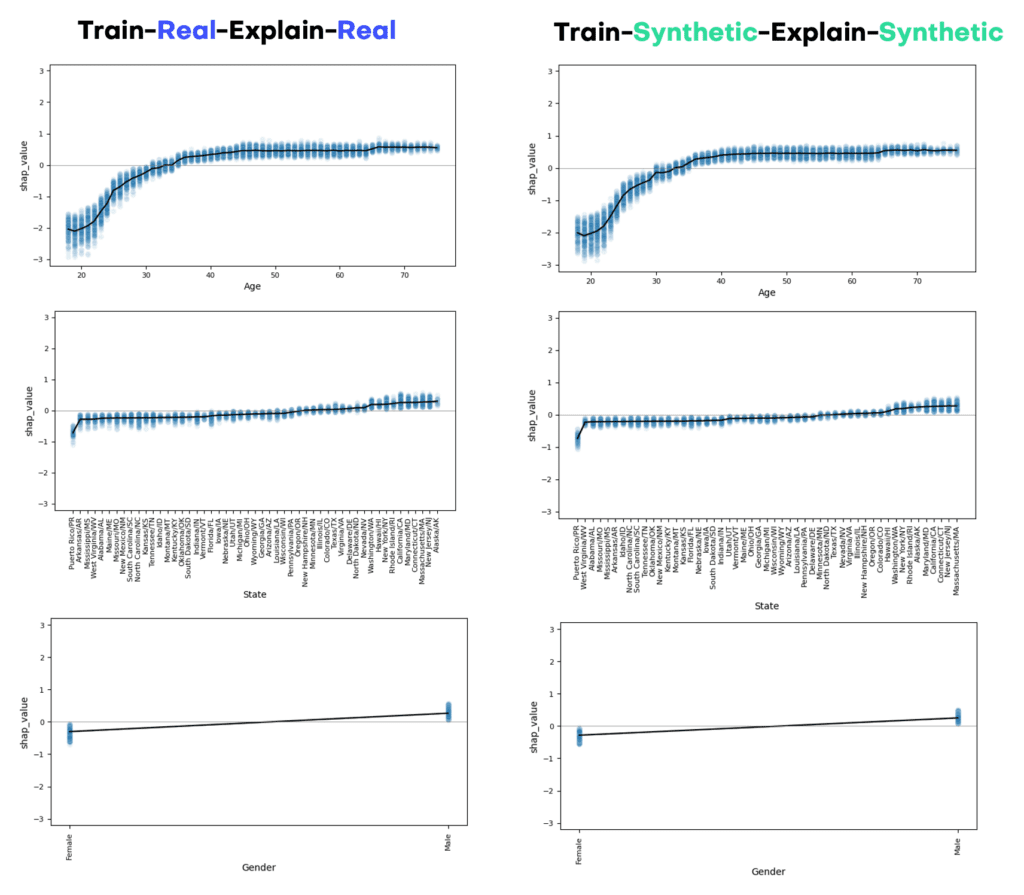

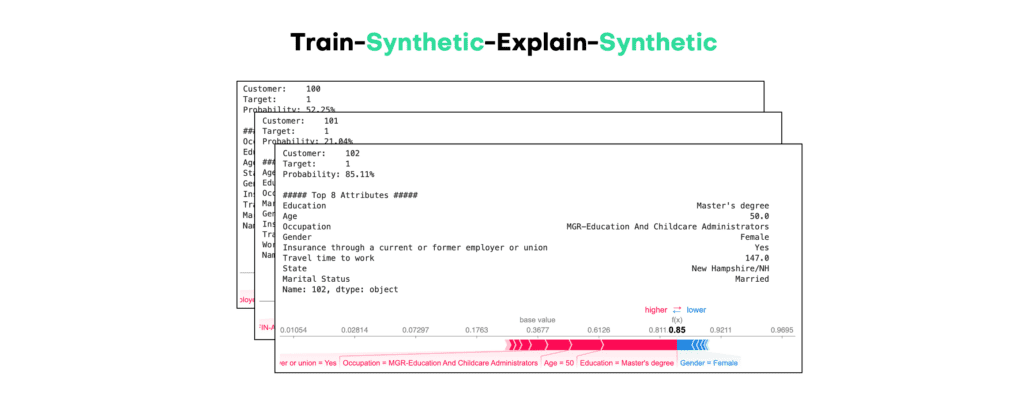

plt.show()