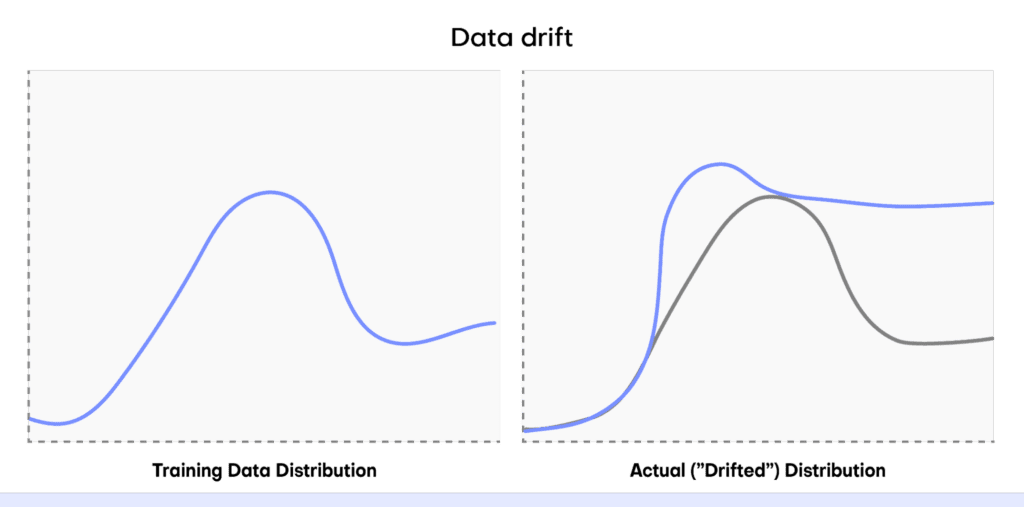

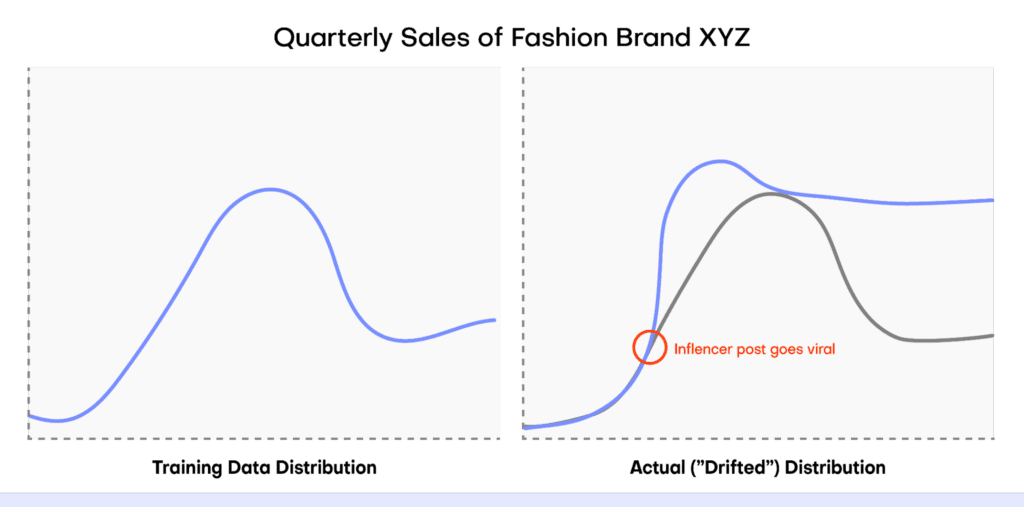

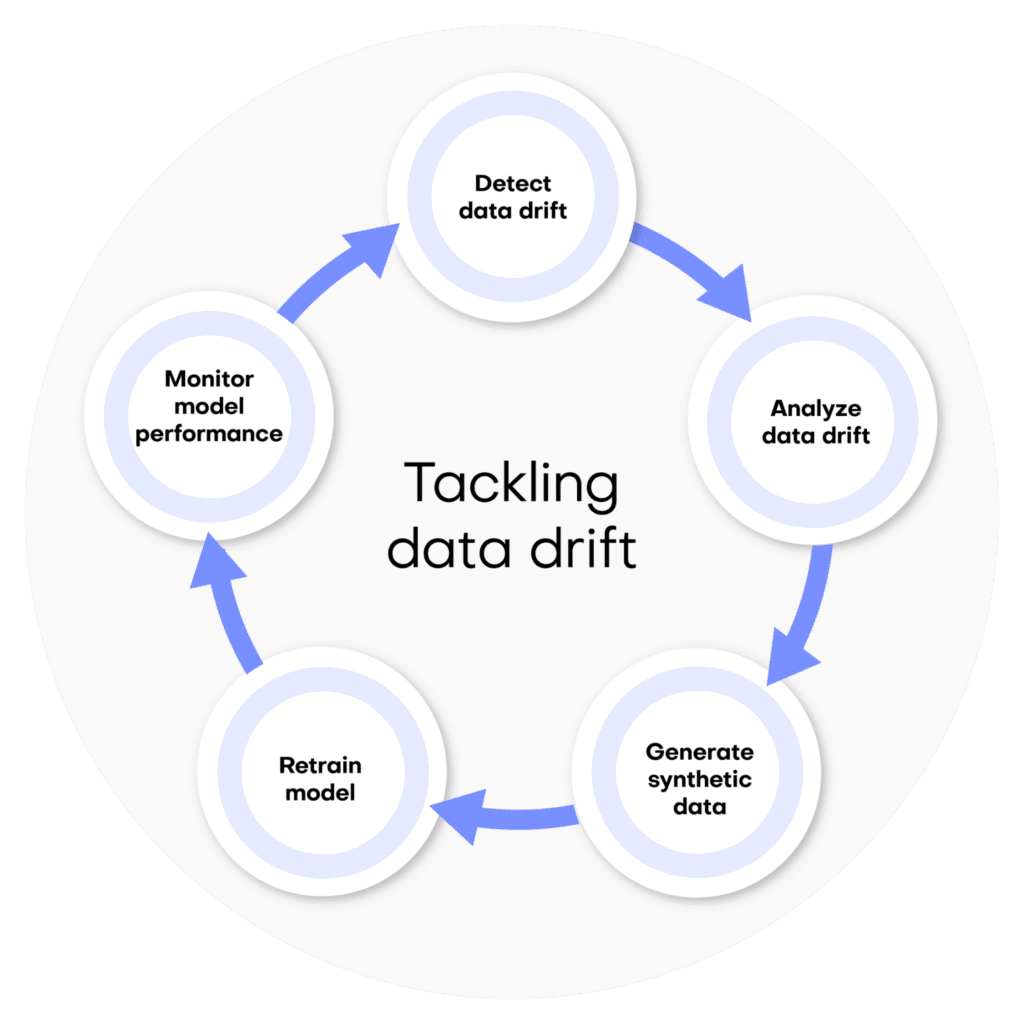

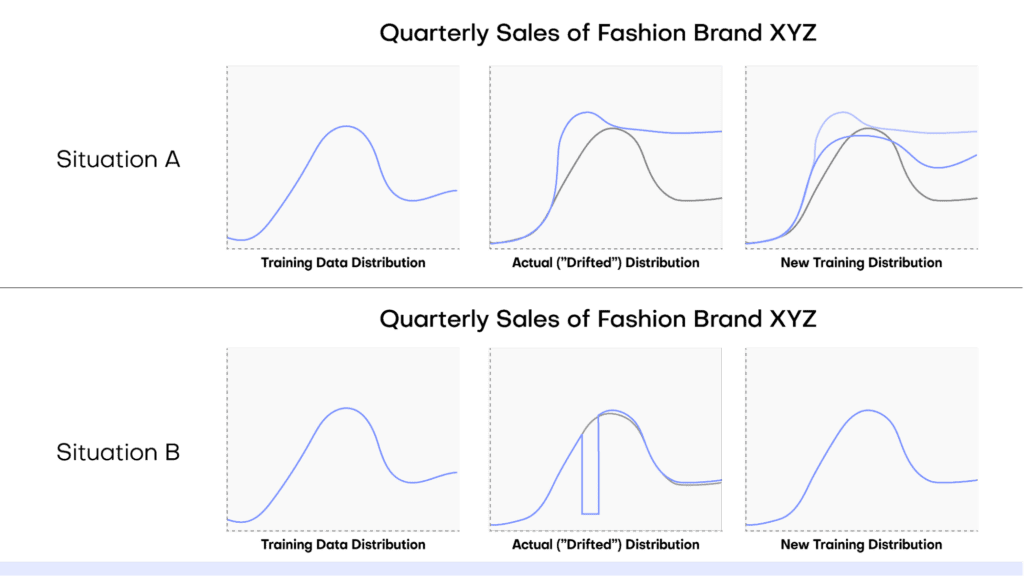

In this tutorial, you will learn how to perform conditional data generation. As the name suggests, this method of synthetic data generation is useful when you want to have more fine-grained control over the statistical distributions of your synthetic data by setting certain conditions in advance. This can be useful across a range of use cases, such as performing data simulation, tackling data drift, or when you want to retain certain columns as they are during synthesization.

You will work through two use cases in this tutorial. In the first use case, you will be working with the UCI Adult Income dataset in order to simulate what this dataset would look like if there was no gender income gap. In the second use case, you will be working with Airbnb accommodation data for Manhattan, which contains geolocation coordinates for each accommodation.

To gain useful insights from this dataset, this geolocation data will need to remain exactly as it is in the original dataset during synthesization. In both cases, you will end up with data that is partially pre-determined by the user (to either remain in its original form or follow a specific distribution) and partially synthesized.

It’s important to note that the synthetic data you generate using conditional data generation is still statistically representative within the conditional context that you’ve created. The degree of privacy preservation of the resulting synthetic dataset is largely dependent on the privacy of the provided fixed attributes.

The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

How does conditional data generation work?

We’ve broken down the process of conditional data generation with MOSTLY AI into 5 steps below. We’ll describe the steps here first, and in the following sections, you will get a chance to implement them yourself.

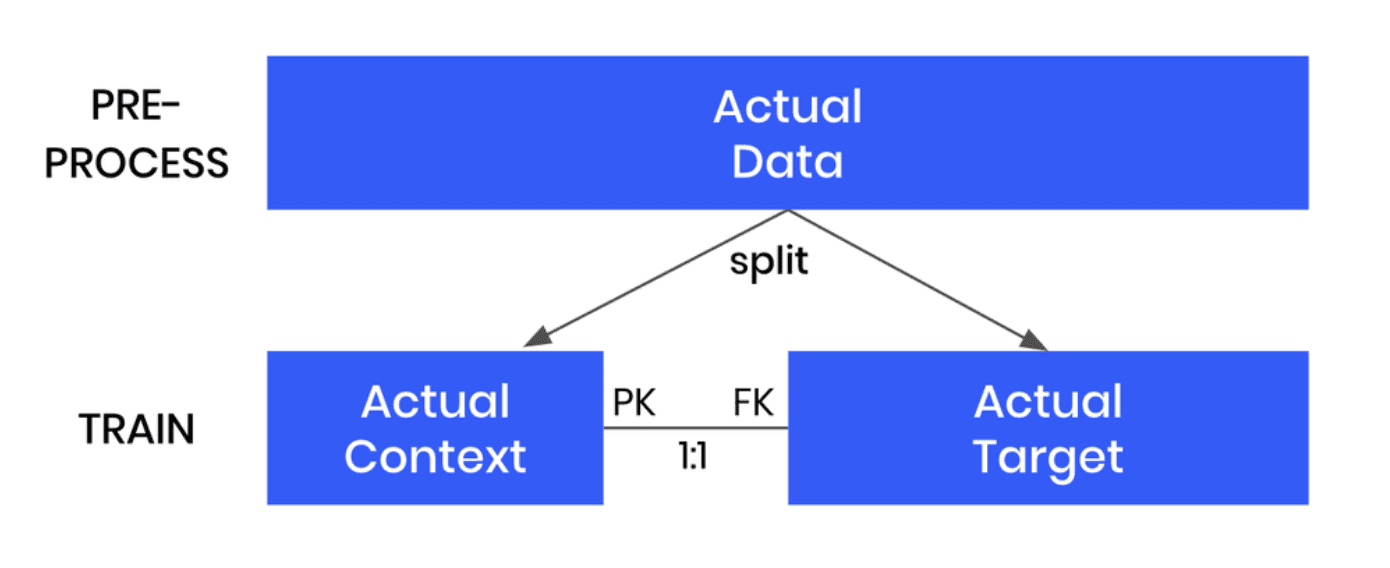

- We’ll start by splitting the original data table into two tables. The first table should contain all the columns that you want to hold fixed, the conditions based on which you want to generate your partially synthetic data. The second table will contain all the other columns, which will be synthesized within the context of the first table.

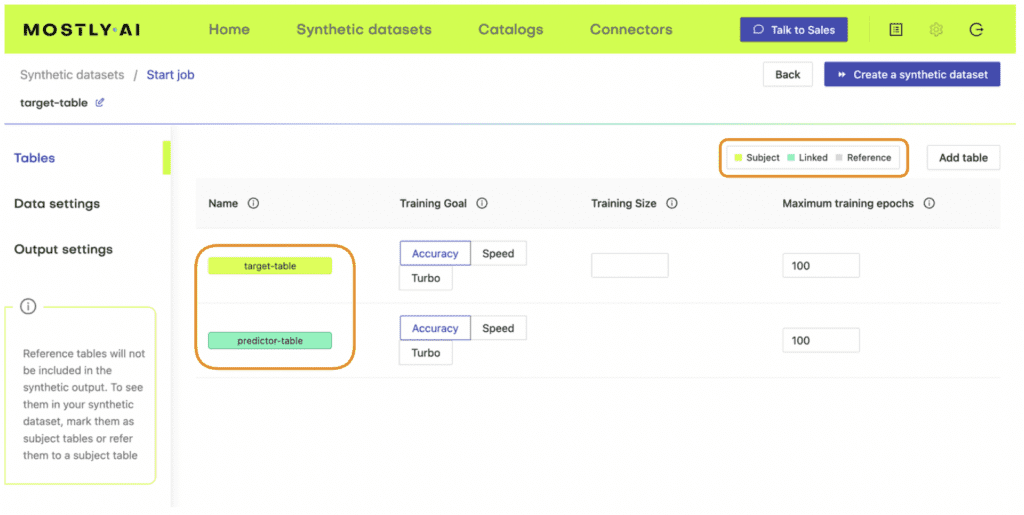

- We’ll then define the relationship between these two tables. The first table (containing the context data) should be set as a subject table, and the second table (containing the target data to be synthesized) as a linked table.

- Next, we will train a MOSTLY AI synthetic data generation model using this two-table setup. Note that this is just an intermediate step that will, in fact, create fully synthetic data since we are using the full original dataset (just split into two) and have not set any specific conditions yet.

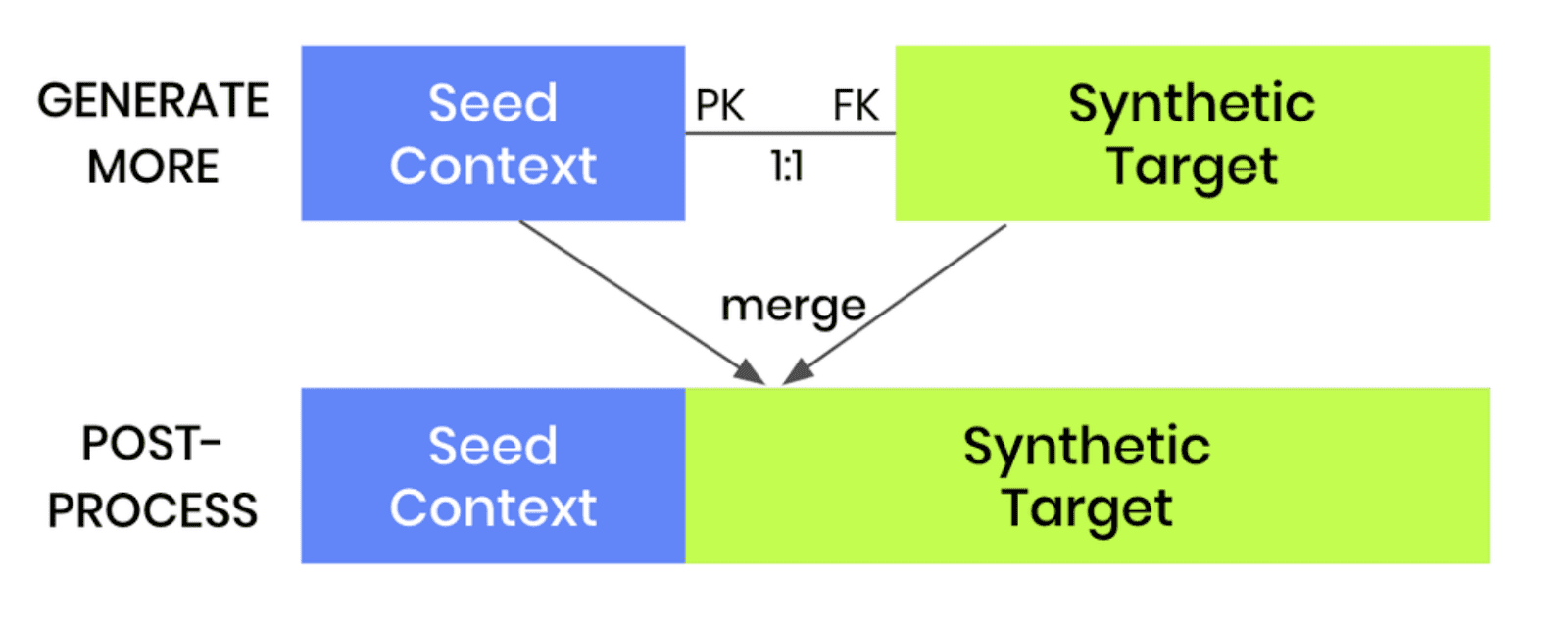

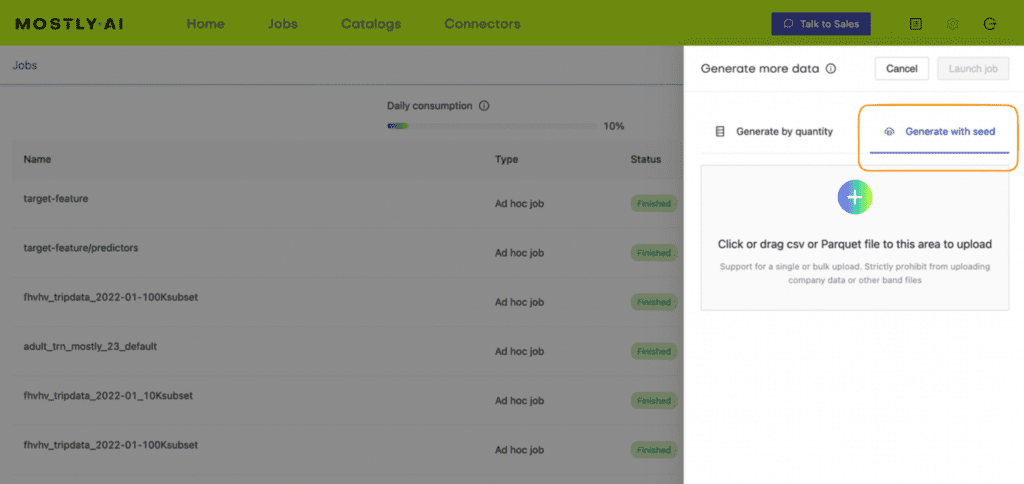

- Once the model is trained, we can then use it to generate more data by selecting the “Generate with seed” option. This option allows you to set conditions within which to create partially synthetic data. Any data you upload as the seed context will be used as fixed attributes that will appear as they are in the resulting synthetic dataset. Note that your seed context dataset must contain a matching ID column. The output of this step will be your synthetic target data.

- As a final post-processing step, we’ll merge the seed context with fixed attribute columns to your synthetic target data to get the complete, partially synthetic dataset created using conditional data generation.

Note that this same kind of conditional data generation can also be performed for two-table setups. The process is even easier in that case, as the pre-and post-processing steps are not required. Once a two-table model is trained, one can simply generate more data and provide a new subject table as the seed for the linked table.

Let’s see it in practice for our first use case: performing data simulation on the UCI Adult Income dataset to see what the data would look like if there was no gender income gap.

Conditional data generation for data simulation

For this use case, we will be using a subset of the UCI Adult Income dataset, consisting of 10k records and 10 attributes. Our aim here is to provide a specific distribution for the sex and income columns and see how the other columns will change based on these predetermined conditions.

Preprocess your data

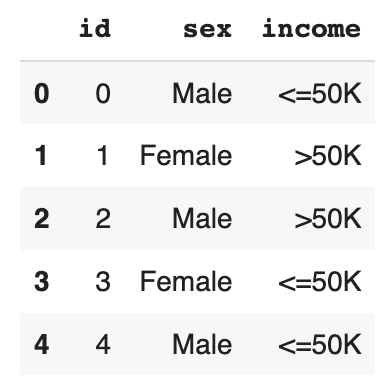

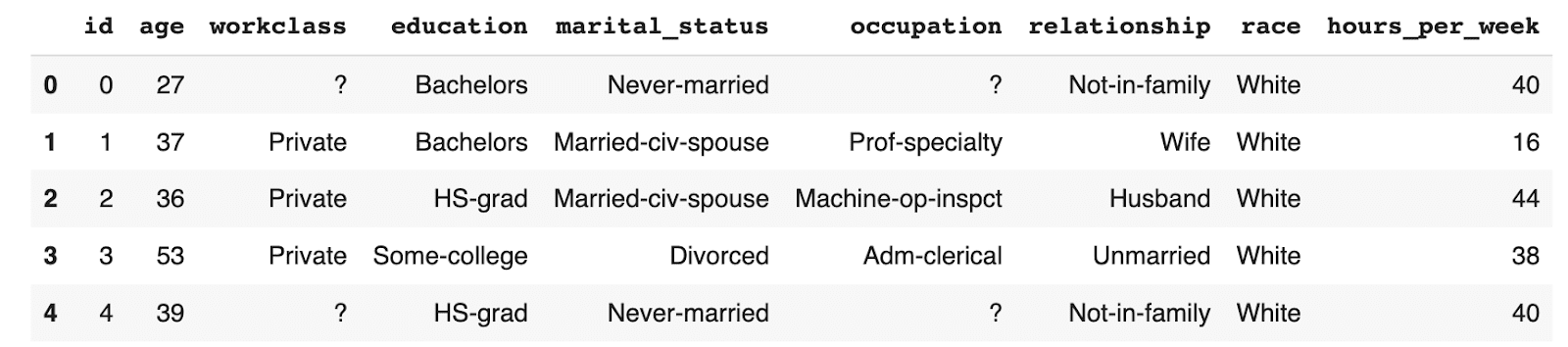

As described in the steps above, your first task will be to enrich the dataset with a unique ID column and then split the data into two tables, i.e. two CSV files. The first table should contain the columns you want to control, in this case, the sex and income columns. The second table should contain the columns you want to synthesize, in this case, all the other columns.

df = pd.read_csv(f'{repo}/census.csv')

# define list of columns, on which we want to condition on

ctx_cols = ['sex', 'income']

tgt_cols = [c for c in df.columns if c not in ctx_cols]

# insert unique ID column

df.insert(0, 'id', pd.Series(range(df.shape[0])))

# persist actual context, that will be used as subject table

df_ctx = df[['id'] + ctx_cols]

df_ctx.to_csv('census-context.csv', index=False)

display(df_ctx.head())

# persist actual target, that will be used as linked table

df_tgt = df[['id'] + tgt_cols]

df_tgt.to_csv('census-target.csv', index=False)

display(df_tgt.head())

Save the resulting tables to disk as CSV files in order to upload them to MOSTLY AI in the next step. If you are working in Colab this will require an extra step (provided in the notebook) in order to download the files from the Colab server to disk.

Train a generative model with MOSTLY AI

Use the CSV files you have just created to train a synthetic data generation model using MOSTLY AI.

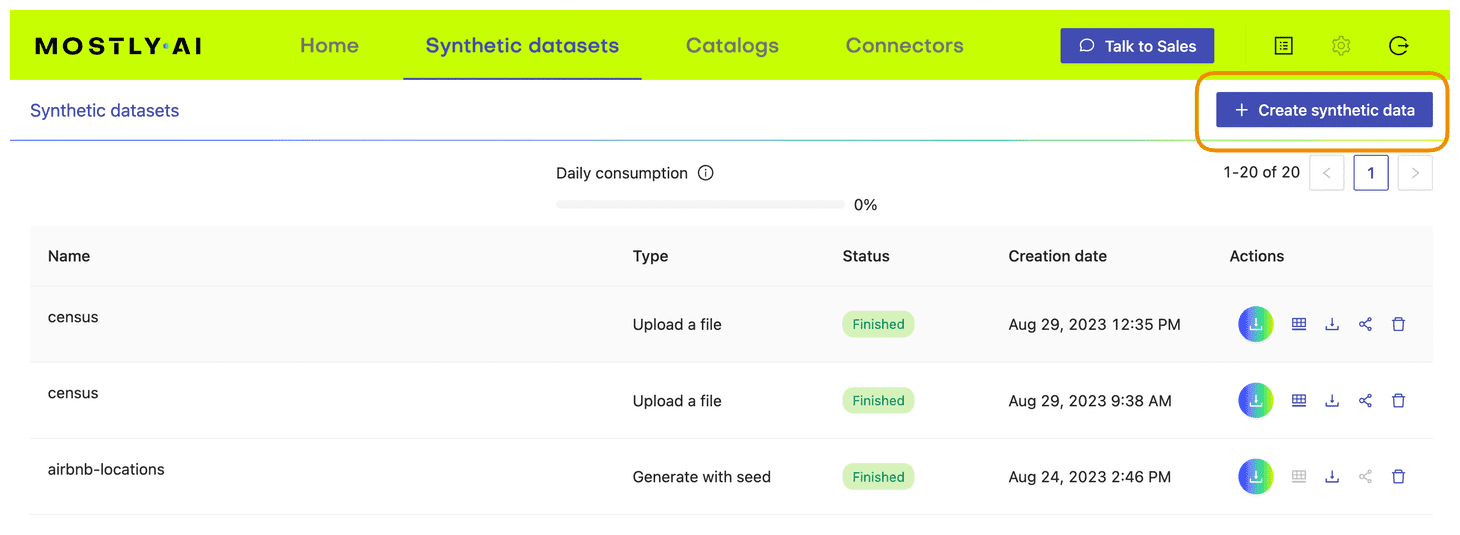

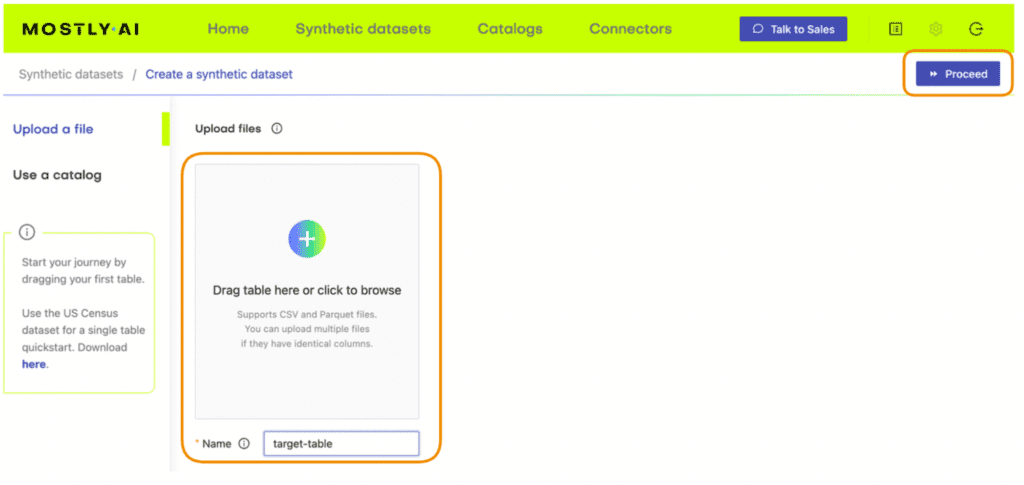

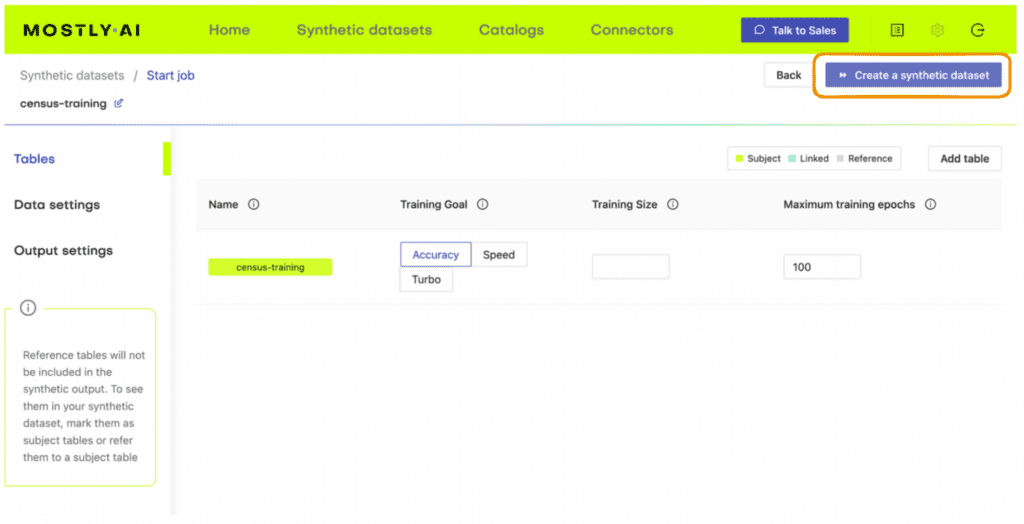

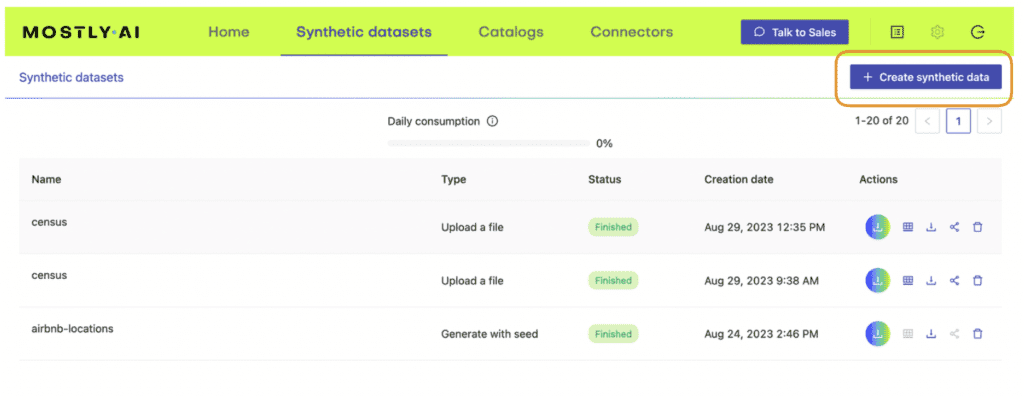

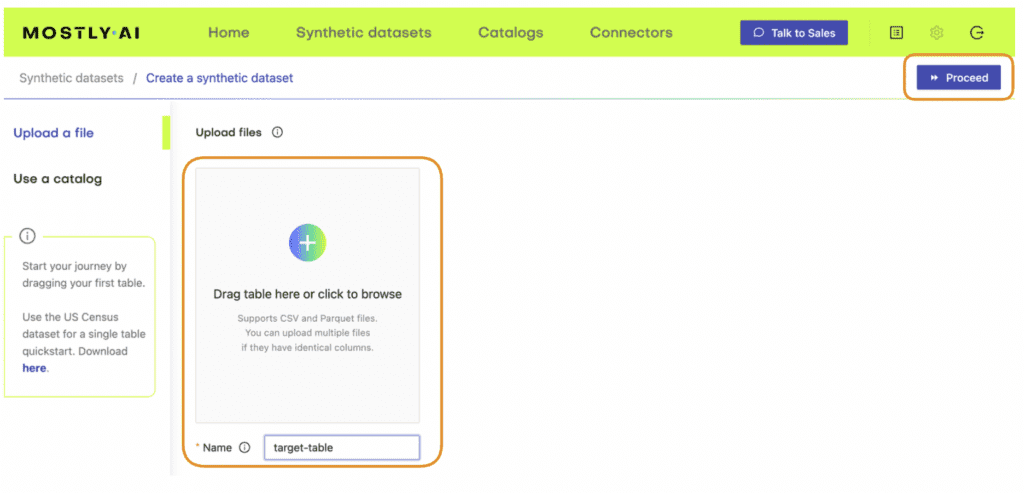

- Navigate to your MOSTLY AI account and go to the “Synthetic datasets” tab. Click on “Create synthetic data” to start a new job.

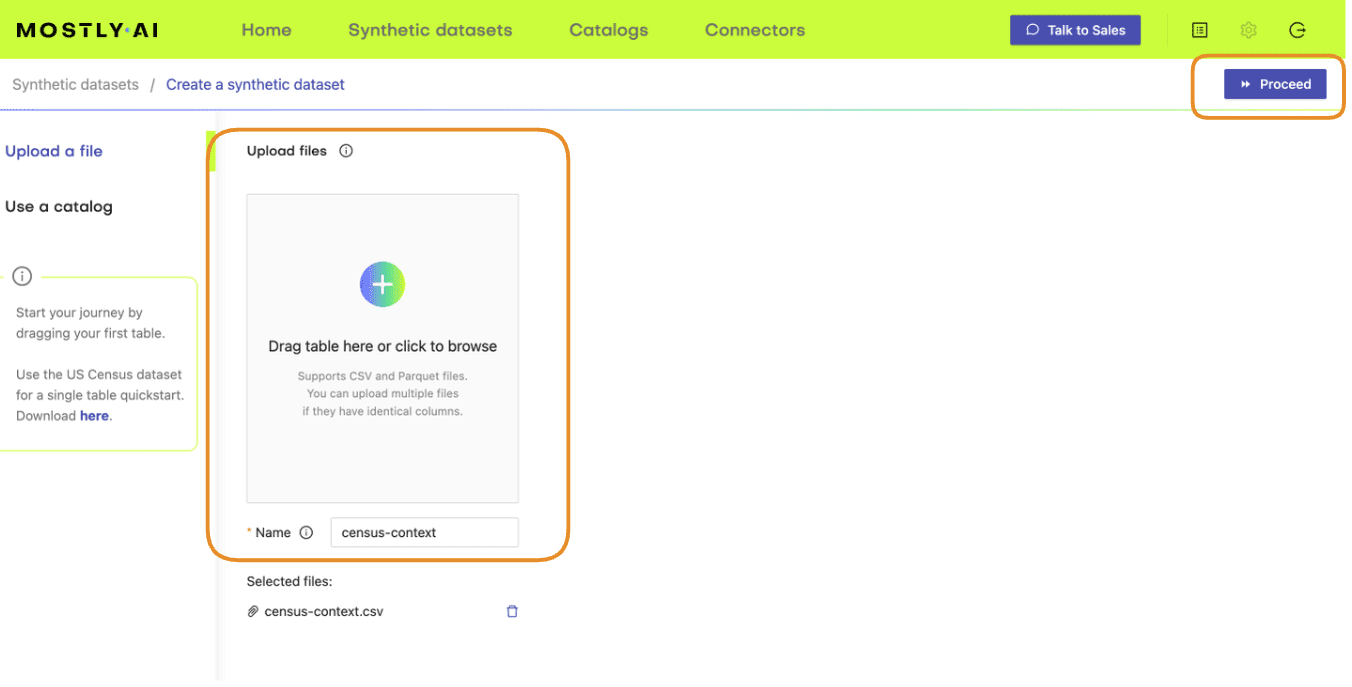

- Upload the census-context.csv (the file containing your context data).

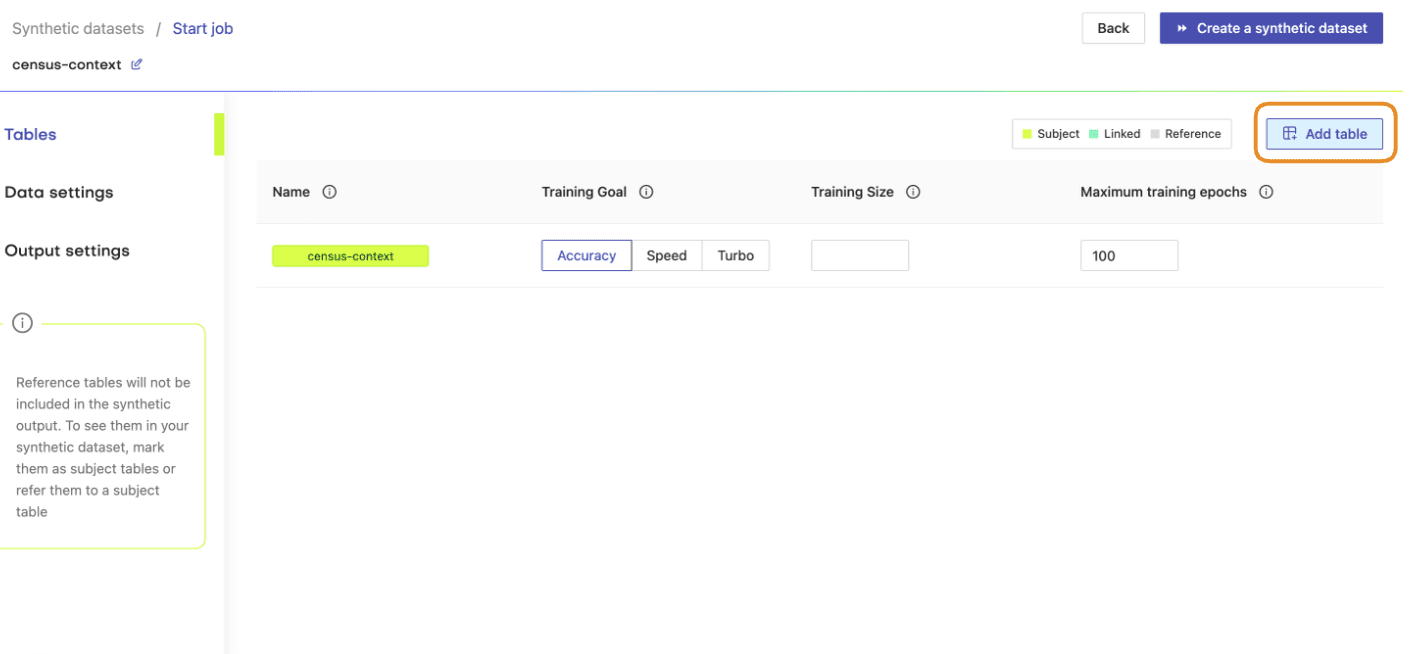

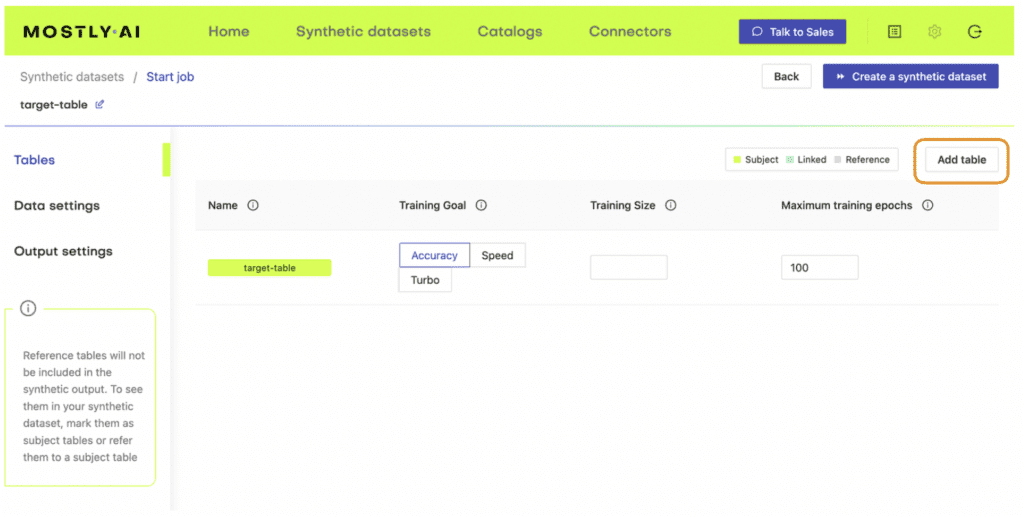

- Once the upload is complete, click on “Add table” and upload census-target.csv (the file containing your target data) here.

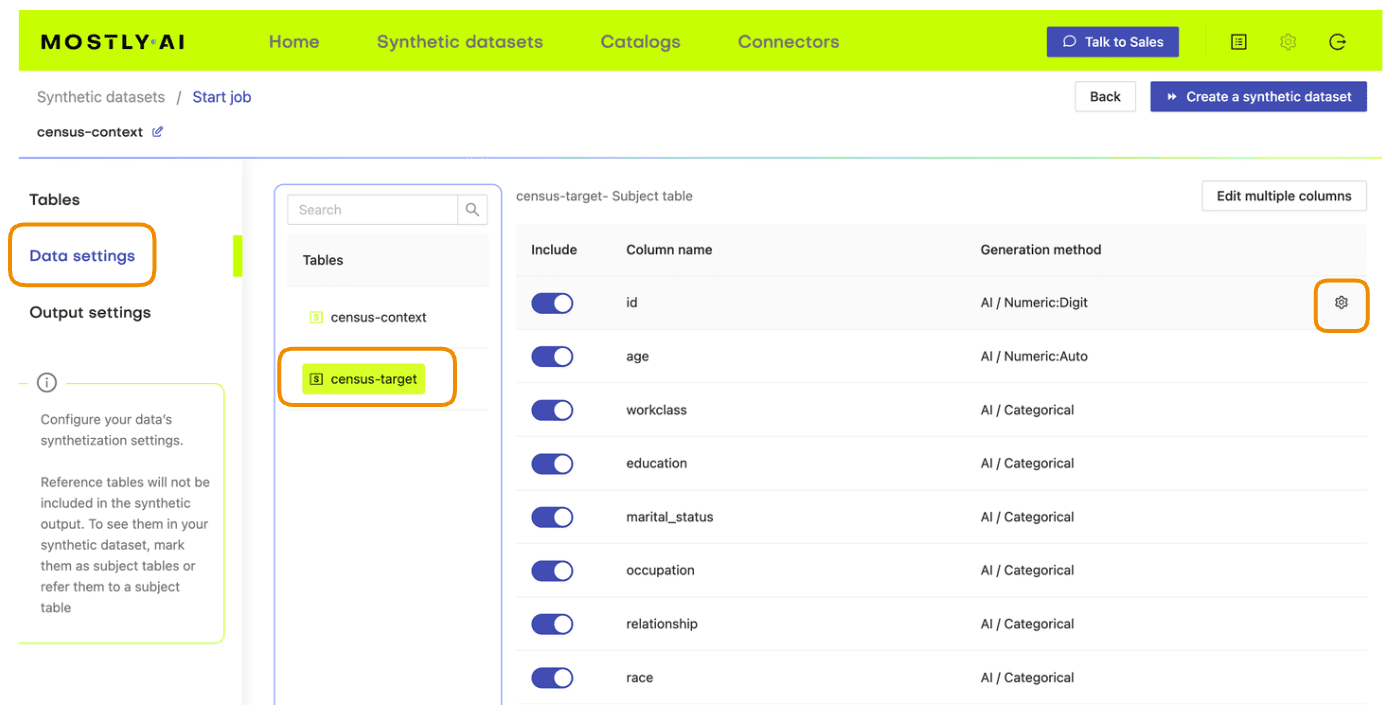

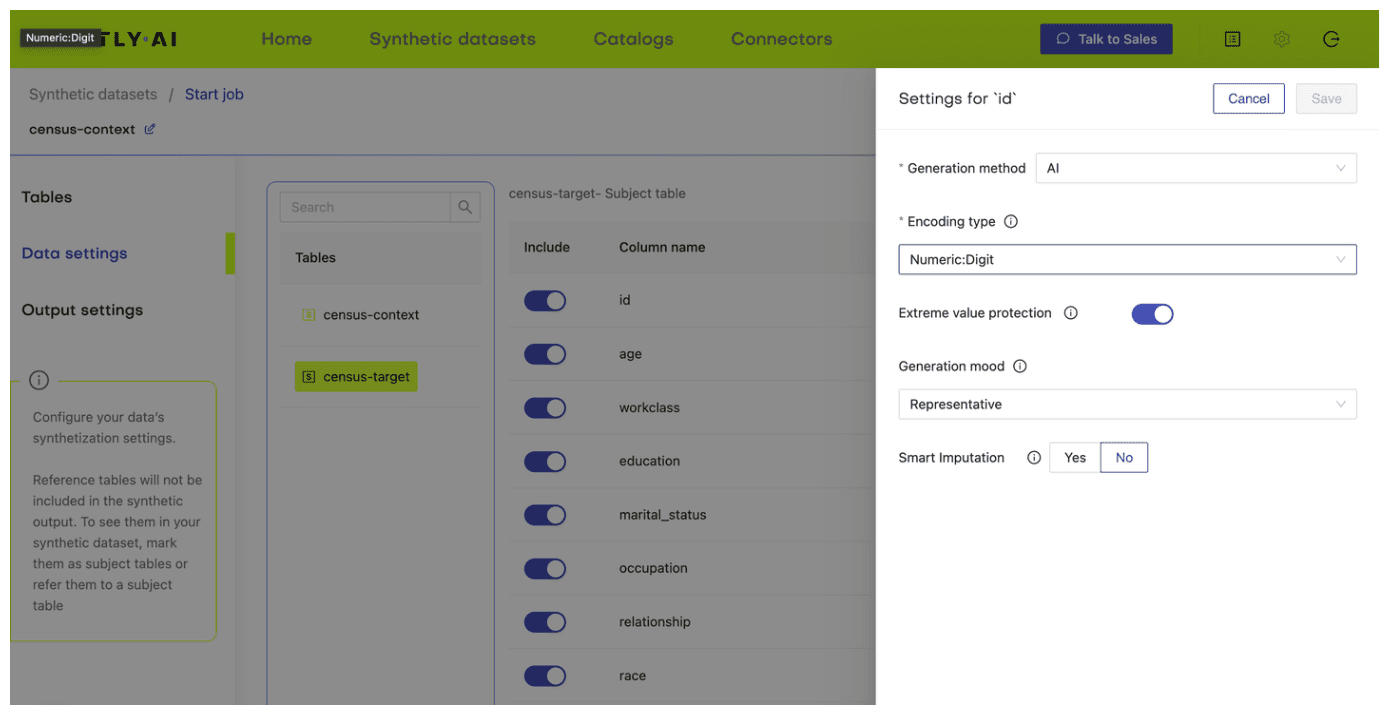

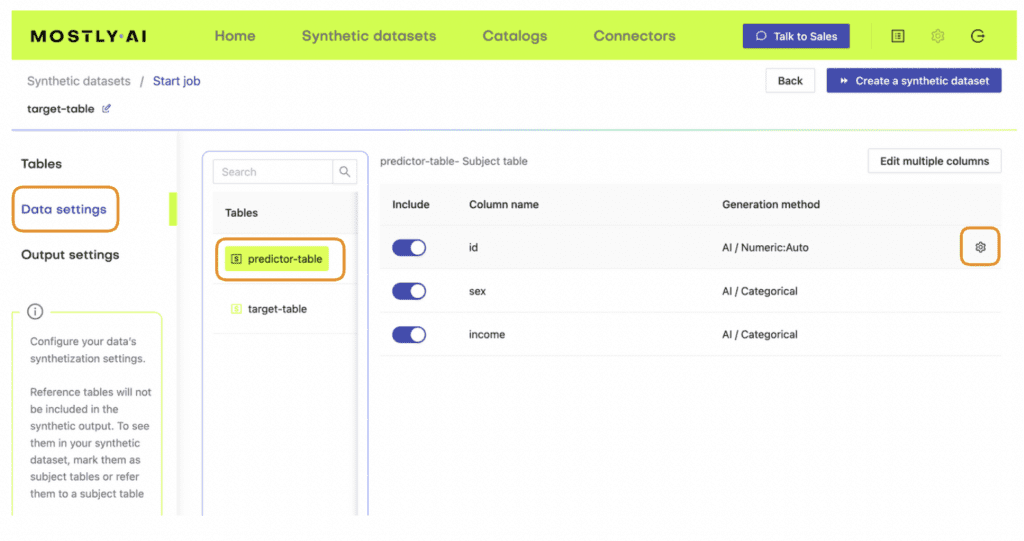

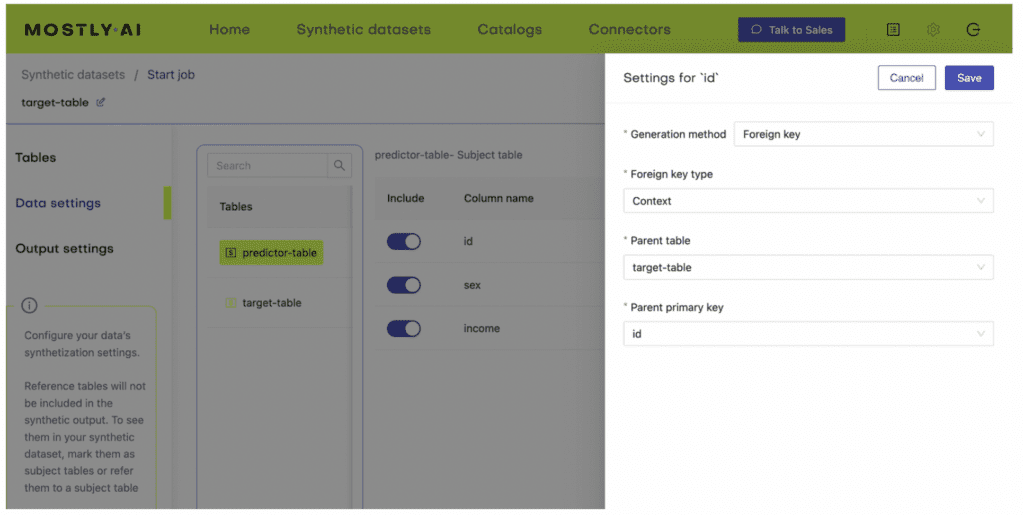

- Next, define the table relationship by navigating to “Data Settings,” selecting the ID column of the context table, and setting the following settings:

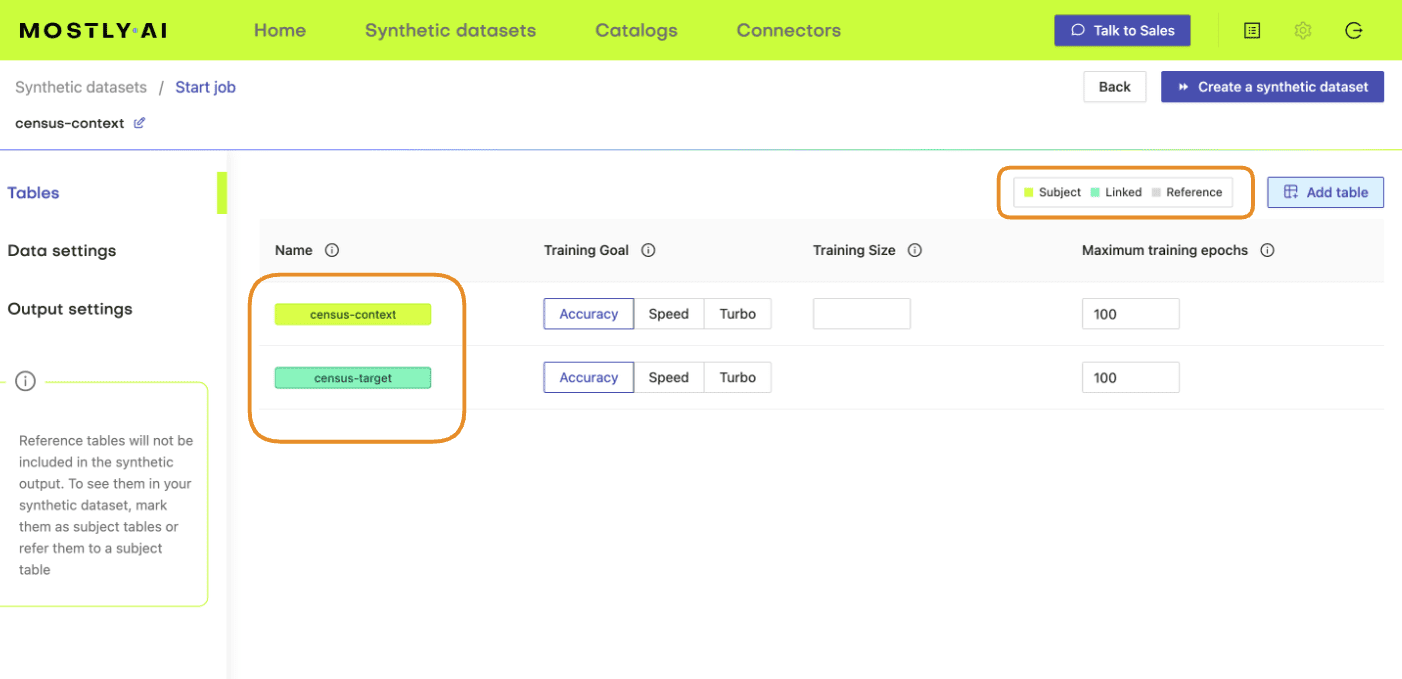

- Confirm that your census-context table is now set as the subject table and the

census-targetas linked table.

- Click “Create a synthetic dataset” to launch the job and train the model. As noted before, the resulting synthetic data is not of particular interest at the moment. We are interested in the model that is created and will use it for conditional data generation in the next section.

Conditional data generation with MOSTLY AI

Now that we have our base model, we will need to specify the conditions within which we want to create synthetic data. For this first use case, you will simulate what the dataset will look like if there was no gender income gap.

- Create a CSV file that contains the same columns as the context file but now containing the specific distributions of those variables you are interested in simulating. In this case, we’ll create a dataset containing an even split between male and female records as well as an even distribution of high- and low-income records. You can use the code block below to do this.

import numpy as np

np.random.seed(1)

n = 10_000

p_inc = (df.income=='>50K').mean()

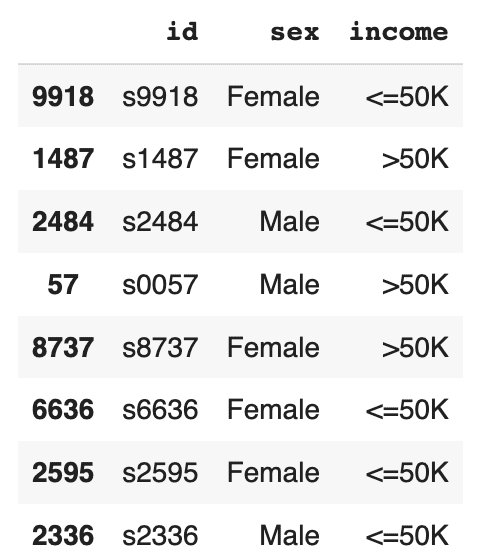

seed = pd.DataFrame({

'id': [f's{i:04}' for i in range(n)],

'sex': np.random.choice(['Male', 'Female'], n, p=[.5, .5]),

'income': np.random.choice(['<=50K', '>50K'], n, p=[1-p_inc, p_inc]),

})

seed.to_csv('census-seed.csv', index=False)

seed.sample(8)

The resulting DataFrame contains an even income split between males and females, i.e. no gender income gap.

- Download this CSV to disk as

census-seed.csv

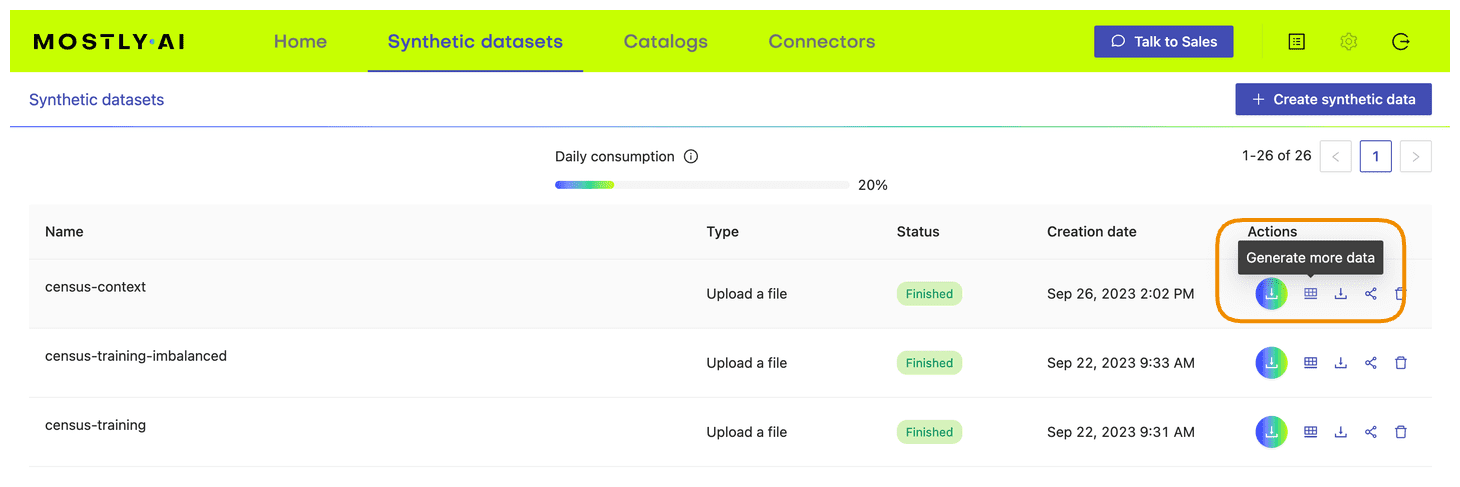

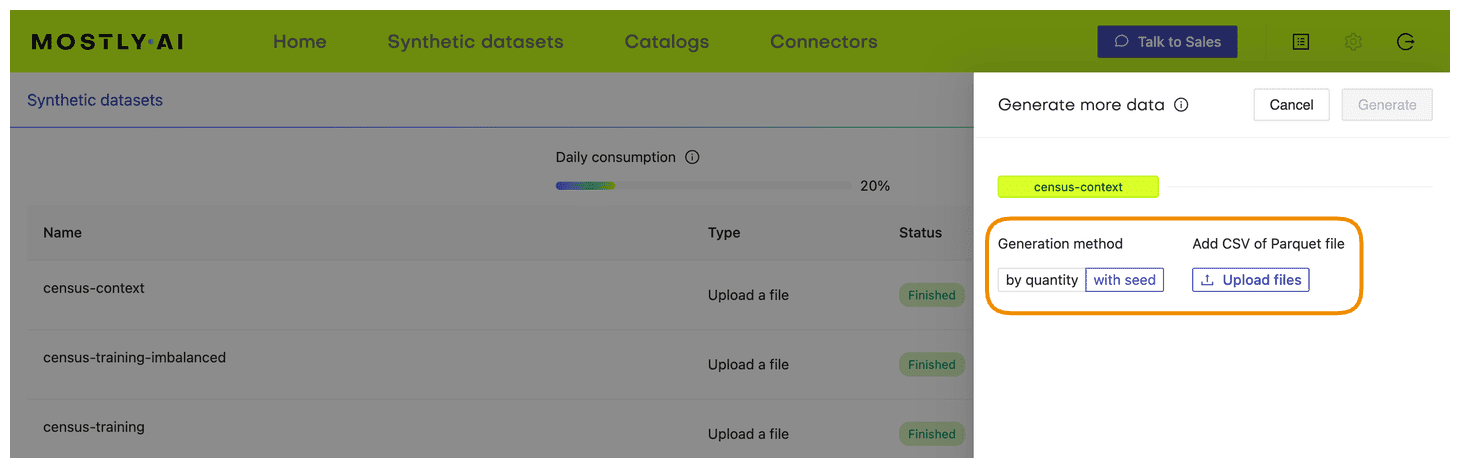

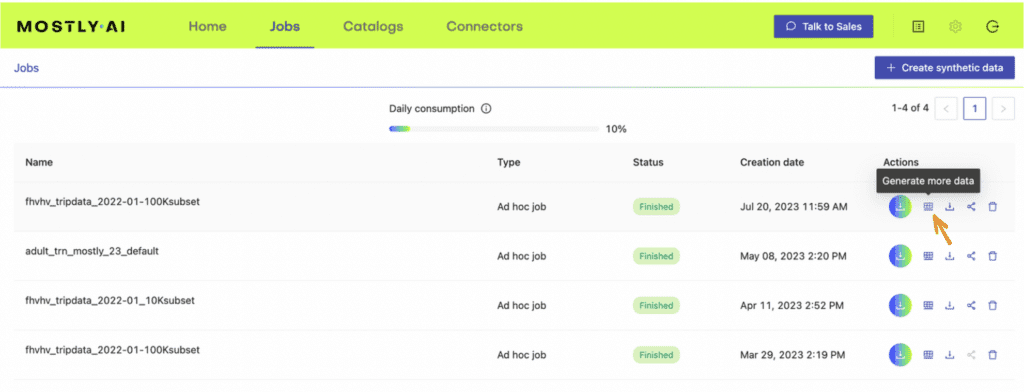

- In your MOSTLY AI account, click on the “Generate more data” button located to the right of the model that you have just trained.

- Select the “Generate with seed” option. This allows you to specify conditions that the synthesization should respect. Upload

census-seed.csvhere.

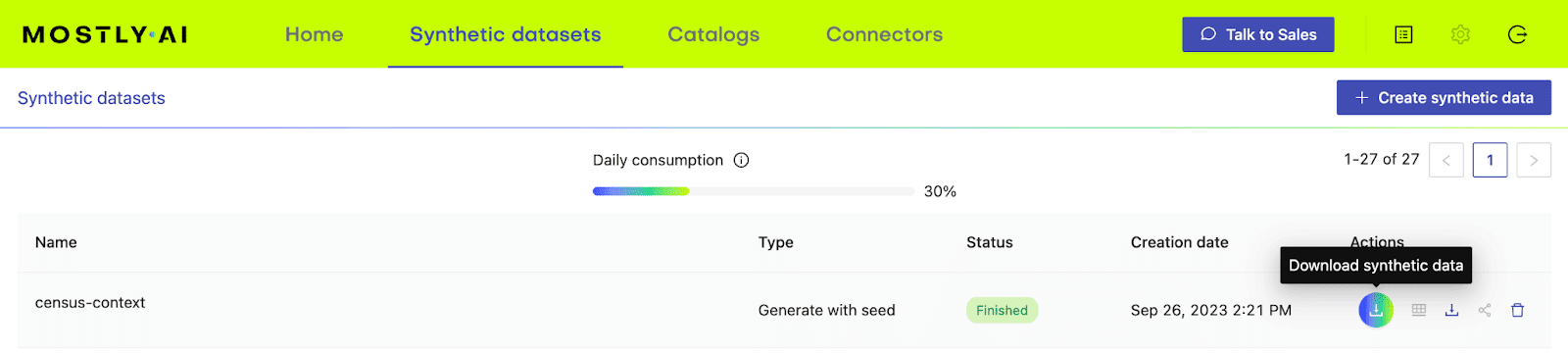

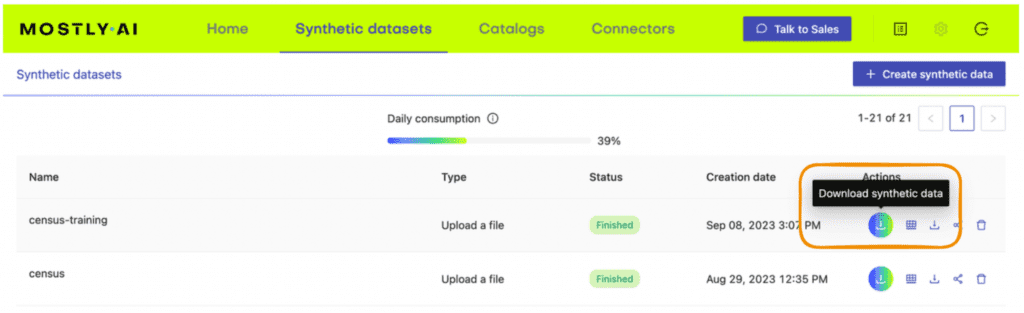

- Generate more data by clicking on “Generate”. Once completed, download the resulting synthetic dataset as CSV.

- Merge the synthetic target data to your seed context columns to get your complete, conditionally generated dataset.

# merge fixed seed with synthetic target to

# a single partially synthetic dataset

syn = pd.read_csv(syn_file_path)

syn = pd.merge(seed, syn, on='id').drop(columns='id')Explore synthetic data

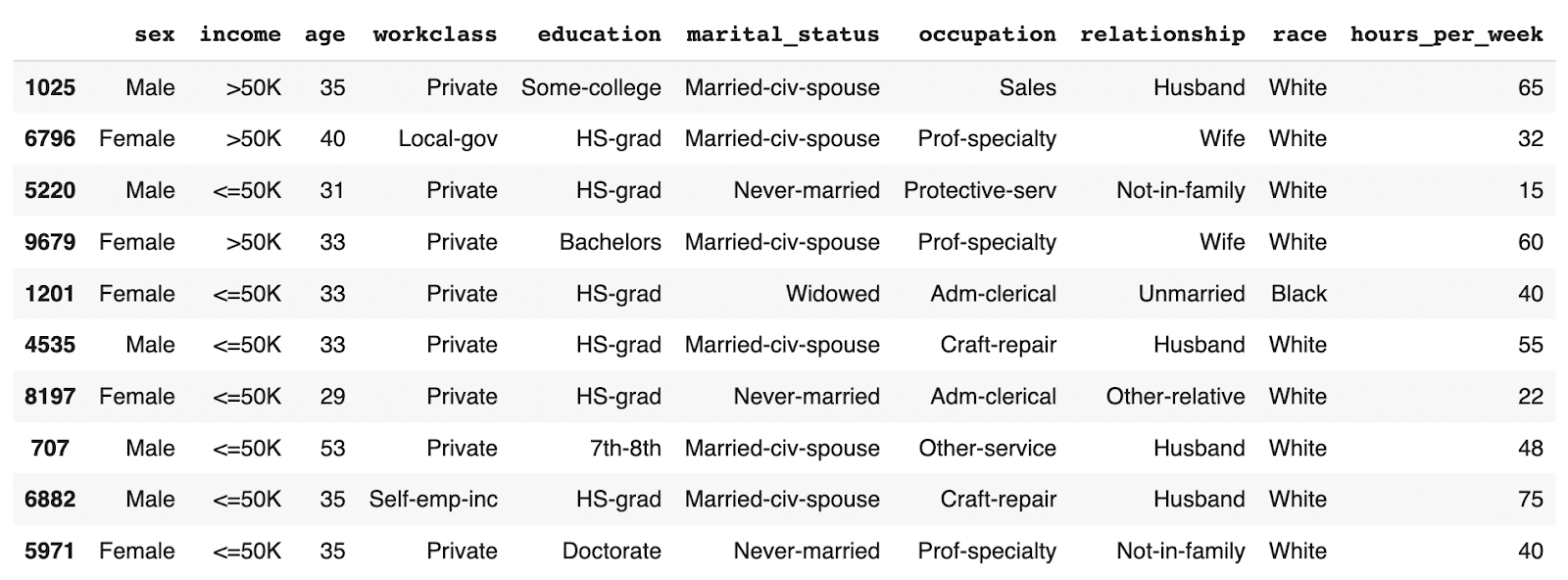

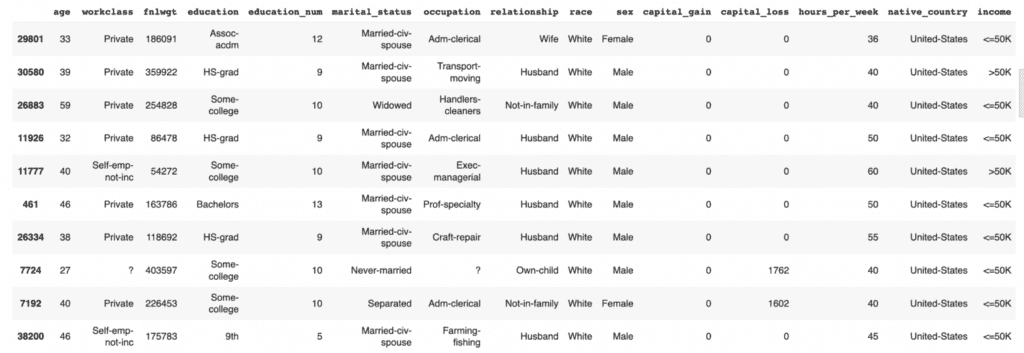

Let’s take a look at the data you have just created using conditional data generation. Start by showing 10 randomly sampled synthetic records. You can run this line multiple times to see different samples.

syn.sample(n=10)

You can see that the partially synthetic dataset consists of about half male and half female records.

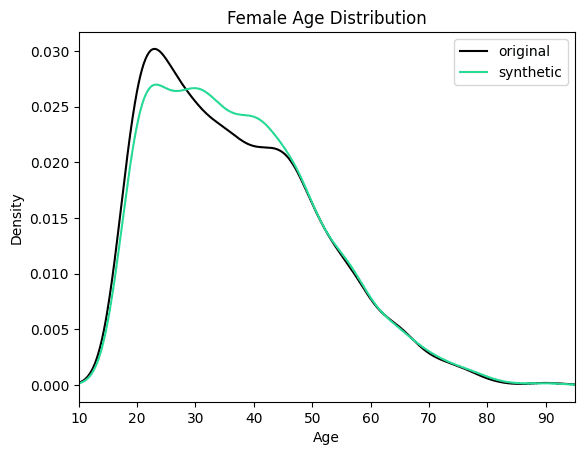

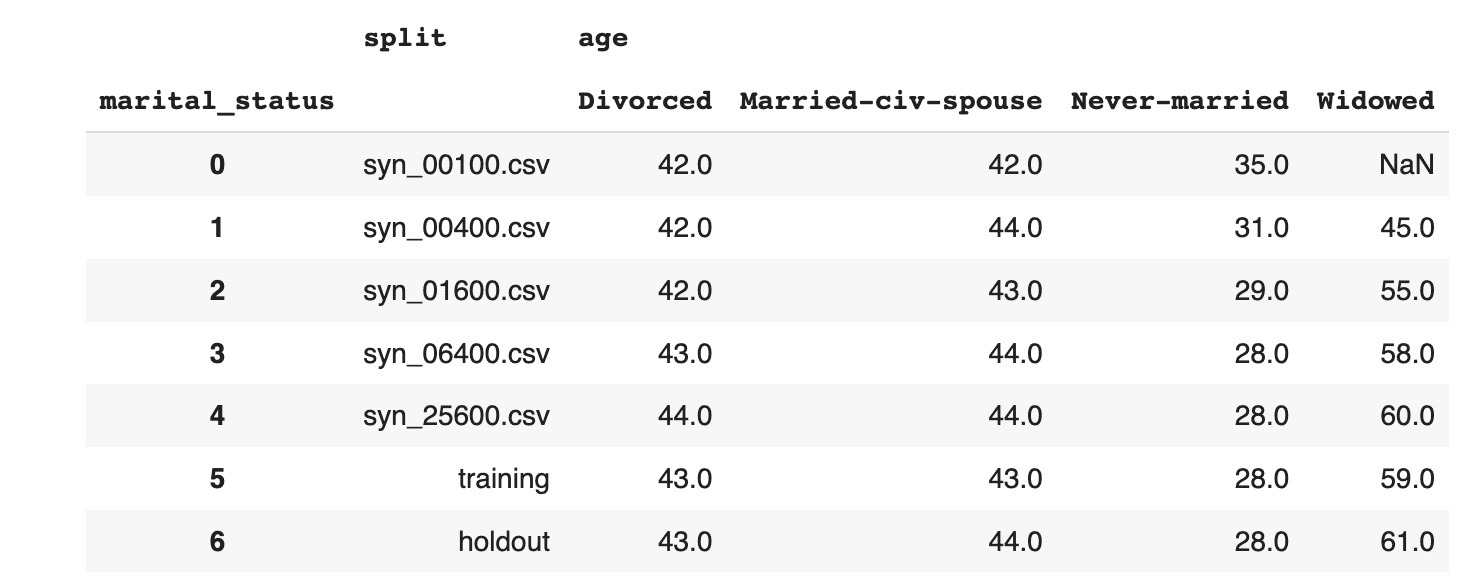

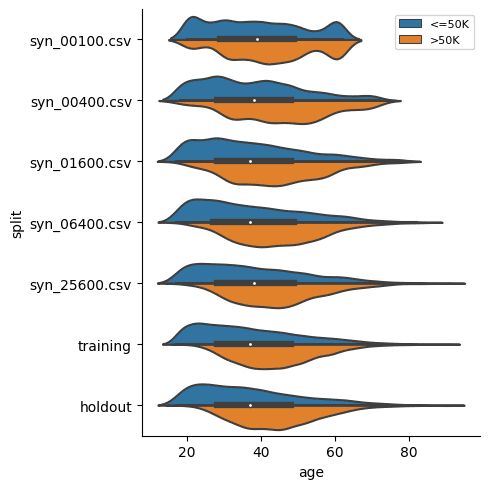

Let's now compare the age distribution of records from the original data against those from the partially synthetic data. We’ll plot both the original and synthetic distributions on a single plot to compare.

import matplotlib.pyplot as plt

plt.xlim(10, 95)

plt.title('Female Age Distribution')

plt.xlabel('Age')

df[df.sex=='Female'].age.plot.kde(color='black', bw_method=0.2)

syn[syn.sex=='Female'].age.plot.kde(color='#24db96', bw_method=0.2)

plt.legend({'original': 'black', 'synthetic': '#24db96'})

plt.show()

We can see clearly that the synthesized female records are now significantly older in order to meet the criteria of removing the gender income gap. Similarly, you can now study other shifts in the distributions that follow as a consequence of the provided seed data.

Conditional generation to retain original data

In the above use case, you customized your data generation process in order to simulate a particular, predetermined distribution for specific columns of interest (i.e. no gender income gap). In the following section, we will explore another useful application of conditional generation: retaining certain columns of the original dataset exactly as they are while letting the rest of the columns be synthesized. This can be a useful tool in situations when it is crucial to retain parts of the original dataset.

In this section, you will be working with a dataset containing the 2019 Airbnb listings in Manhattan. For this use case, it is crucial to preserve the exact locations of the listings in order to avoid situations in which the synthetic dataset contains records in impossible or irrelevant locations (in the middle of the Hudson River, for example, or outside of Manhattan entirely).

You need the ability to execute control over the location column to ensure the relevance and utility of your resulting synthetic dataset - and conditional data generation gives you exactly that level of control.

Let’s look at this type of conditional data generation in action. Since many of the steps will be the same as in the use case above, this section will be a bit more compact.

Preprocess your data

Start by enriching the DataFrame with an id column. Then split it into two DataFrames: airbnb-context.csv and airbnb-target.csv. Additionally, you will need to concatenate the latitude and longitude columns together into a single column. This is the format expected by MOSTLY AI, in order to improve its representation of geographical information.

df_orig = pd.read_csv(f'{repo}/airbnb.csv')

df = df_orig.copy()

# concatenate latitude and longitude to "LAT, LONG" format

df['LAT_LONG'] = (

df['latitude'].astype(str) + ', ' + df['longitude'].astype(str)

)

df = df.drop(columns=['latitude', 'longitude'])

# define list of columns, on which we want to condition on

ctx_cols = ['neighbourhood', 'LAT_LONG']

tgt_cols = [c for c in df.columns if c not in ctx_cols]

# enricht with ID column

df.insert(0, 'id', pd.Series(range(df.shape[0])))

# persist actual context, that will be used as subject table

df_ctx = df[['id'] + ctx_cols]

df_ctx.to_csv('airbnb-locations.csv', index=False)

display(df_ctx.head())

# persist actual target, that will be used as linked table

df_tgt = df[['id'] + tgt_cols]

df_tgt.to_csv('airbnb-data.csv', index=False)

display(df_tgt.head())Train generative model with MOSTLY AI

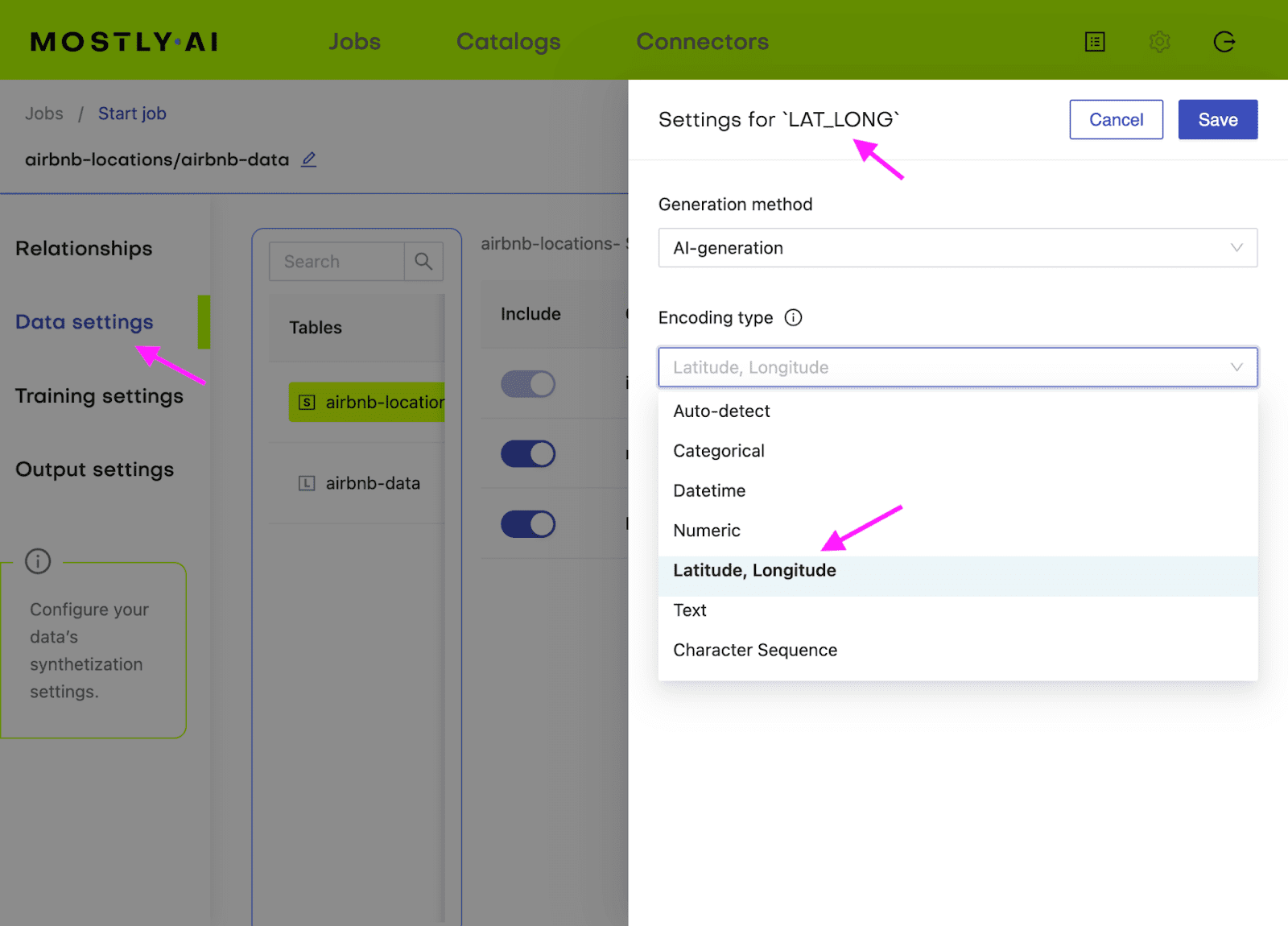

Follow the same steps as in the first use case to train a generative model with MOSTLY AI. Upload airbnb-locations.csv first, then add the airbnb-data.csv file and define the table relationship by setting the ID column of the airbnb-data table as the Foreign Key pointing to the ID column of the airbnb-locations file. Refer to the detailed steps in the first use case if you need a refresher on how to do that.

Additionally, you will need to configure the LAT_LONG column to the encoding type Lat, Long in order for MOSTLY AI to correctly process this geolocation data.

Generate more data with MOSTLY AI

Once the training has finished, you can generate your partially synthetic data. In the previous example, this is where we uploaded the seed context file that we generated. In this case, we actually want our fixed attributes to remain exactly as they are in the original dataset. This means you can simply re-upload the original airbnb-locations.csv as the seed file to the "generate more data" form. Once the data has been generated, download the data as a CSV file again. Merge the two files (seed and synthetic target) and split the LAT_LONG column back into separate latitude and longitude columns.

# merge fixed seed with synthetic target

# to a single partially synthetic dataset

syn = pd.read_csv(syn_file_path)

syn_partial = pd.merge(df_ctx, syn, on='id')

# split LAT_LONG into separate columns again

syn_partial = pd.concat([

syn_partial,

syn_partial.LAT_LONG.str.split(', ', n=2, expand=True).rename(columns={0: 'latitude', 1: 'longitude'}).astype(float),

], axis=1).drop(columns='LAT_LONG')

# restore column order

syn_partial = syn_partial[df_orig.columns]Explore synthetic data

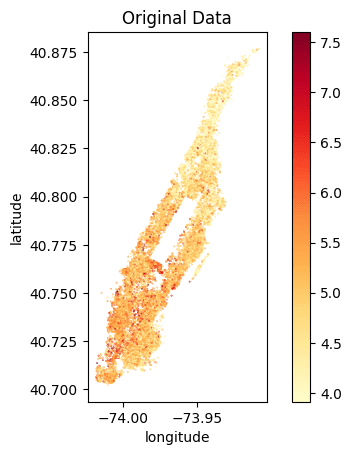

Let's compare the price distribution of listings across Manhattan. Note that while the locations in the partially synthetic data are actual locations, all other attributes, including the price per night, are randomly sampled by the generative model. Still, these prices remain statistically representative given the context, i.e. the location within Manhattan.

The code block below plots the price distribution of the original dataset as well as that of the partially synthetic dataset. We hope to see the exact same locations (so no listings in the middle of the Hudson River) and a price distribution that is statistically similar but not exactly the same.

We can clearly see that the locations of the listings have been preserved and the price distribution is accurately represented. We can see a similar gradient of lower prices per night in the Northern tip of Manhattan as well as high prices per night at the Southern end of Central Park and the Financial District.

Of course, you could also create fully synthetic data for this use case, and this will yield statistically representative locations with their attributes. However, as these locations do not necessarily exist (e.g. they might end up in the Hudson River), the demonstrated approach allows you to combine the best of both worlds.

Conditional data generation with MOSTLY AI

In this tutorial, you have learned how to perform conditional data generation. You have explored the value conditional generation can provide by working through two use cases: one in which you simulated a specific distribution for a subset of the columns (the Adult Income dataset with no gender income gap) and another in which you retained certain columns of the original dataset (the locations of the Airbnb listings). In both cases, you were able to execute more fine-grained control over the statistical distributions of your synthetic data by setting certain conditions in advance.

The result is a dataset that is partially pre-determined by the user (to either remain in its original form or follow a specific distribution) and partially synthesized. You can now put your conditional data generation skill to use for data simulation, to tackle data drift, or for other relevant use cases.

What’s next?

In addition to walking through the above instructions, we suggest experimenting with the following to get an even deeper understanding of conditional data generation:

- use a different set of fixed columns for the US Census dataset

- generate a very large number of records for a fixed value set, e.g. create 1 million records of 48-year-old female Professors

- perform a fully synthetic dataset of the Airbnb Manhattan dataset

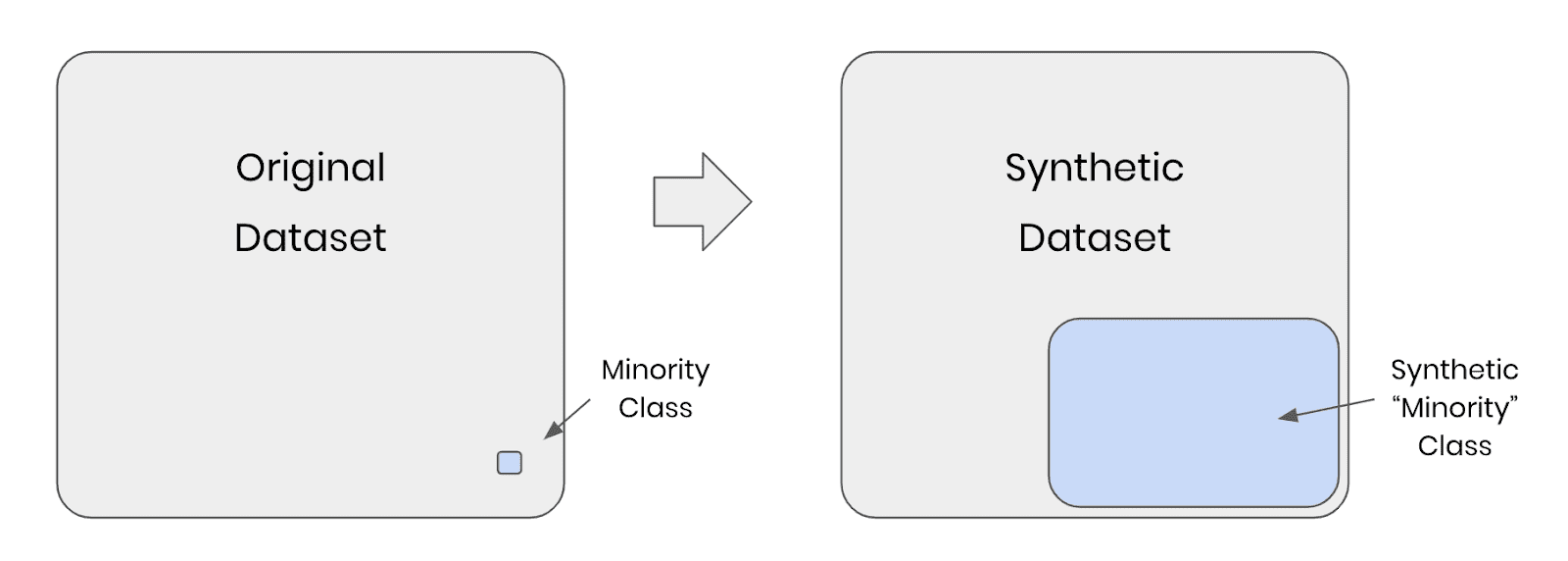

Synthetic data holds the promise of addressing the underrepresentation of minority classes in tabular data sets by adding new, diverse, and highly realistic synthetic samples. In this post, we'll benchmark AI-generated synthetic data for upsampling highly unbalanced tabular data sets. Specifically, we compare the performance of predictive models trained on data sets upsampled with synthetic records to that of well-known upsampling methods, such as naive oversampling or SMOTE-NC.

Our experiments are conducted on multiple data sets and different predictive models. We demonstrate that synthetic data can improve predictive accuracy for minority groups as it creates diverse data points that fill gaps in sparse regions in feature space.

Our results highlight the potential of synthetic data upsampling as a viable method for improving predictive accuracy on highly unbalanced data sets. We show that upsampled synthetic training data consistently results in top-performing predictive models, in particular for mixed-type data sets containing a very low number of minority samples, where it outperforms all other upsampling techniques.

Try upsampling on MOSTLY AI's synthetic data platform!

The definition of synthetic data

AI-generated synthetic data, which we refer to as synthetic data throughout, is created by training a generative model on the original data set. In the inference phase, the generative model creates statistically representative, synthetic records from scratch.

The use of synthetic data has gained increasing importance in various industries, particularly due to its primary use case of enhancing data privacy. Beyond privacy, synthetic data offers the possibility to modify and tailor data sets to our specific needs. In this blog post, we investigate the potential of synthetic data to improve the performance of machine learning algorithms on data sets with unbalanced class distributions, specifically through the synthetic upsampling of minority classes.

Upsampling for class imbalance

Class imbalance is a common problem in many real-world tabular data sets where the number of samples in one or more classes is significantly lower than the others. Such imbalances can lead to poor prediction performance for the minority classes, often of greatest interest in many applications, such as detecting fraud or extreme insurance claims.

Traditional upsampling methods, such as naive oversampling or SMOTE, have shown some success in mitigating this issue. However, the effectiveness of these methods is often limited, and they may introduce biases in the data, leading to poor model performance. In recent years, synthetic data has emerged as a promising alternative to traditional upsampling methods. By creating highly realistic samples for minority classes, synthetic data can significantly improve the accuracy of predictive models.

While upsampling methods like naive oversampling and SMOTE are effective in addressing unbalanced data sets, they also have their limitations. Naive oversampling mitigates class imbalance effects by simply duplicating minority class examples. Due to this strategy, they bear the risk of overfitting the model to the training data, resulting in poor generalization in the inference phase.

SMOTE, on the other hand, generates new records by interpolating between existing minority-class samples, leading to higher diversity. However, SMOTE’s ability to increase diversity is limited when the absolute number of minority records is very low. This is especially true when generating samples for mixed-type data sets containing categorical columns. For mixed-type data sets, SMOTE-NC is commonly used as an extension for handling categorical columns.

SMOTE-NC may not work well with non-linear decision boundaries, as it only linearly interpolates between minority records. This can lead to SMOTE-NC examples being generated in an “unfavorable” region of feature space, far from where additional samples would help the predictive model place a decision boundary.

All these limitations highlight the need for exploring alternative upsampling methods, such as synthetic data upsampling, that can overcome these challenges and improve the accuracy of minority group predictions.

The strength of upsampling minority classes with AI-generated synthetic data is that the generative model is not limited to upsampling or interpolating between existing minority classes. Most AI-based generators can create realistic synthetic data examples in any region of feature space and, thus, considerably increase diversity. Because they are not tied to existing minority samples, AI-based generators can also leverage and learn from the properties of (parts of) the majority samples that are transferable to minority examples.

An additional strength of using AI-based upsampling is that it can be easily extended to more complex data structures, such as sequential data, where not only one but many rows in a data set belong to a single data subject. This aspect of synthetic data upsampling is, however, out of the scope of this study.

In this post, we present a comprehensive benchmark study comparing the performance of predictive models trained on unbalanced data upsampled with AI-generated synthetic data, naive upsampling, and SMOTE-NC upsampling. Our experiments are carried out on various data sets and using different predictive models.

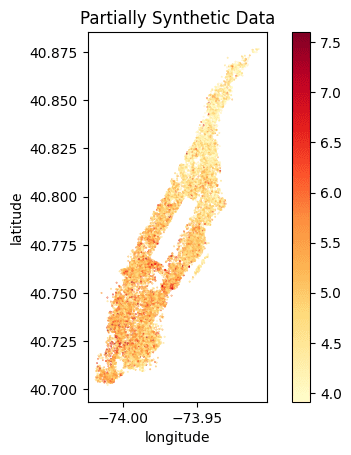

The upsampling experiment

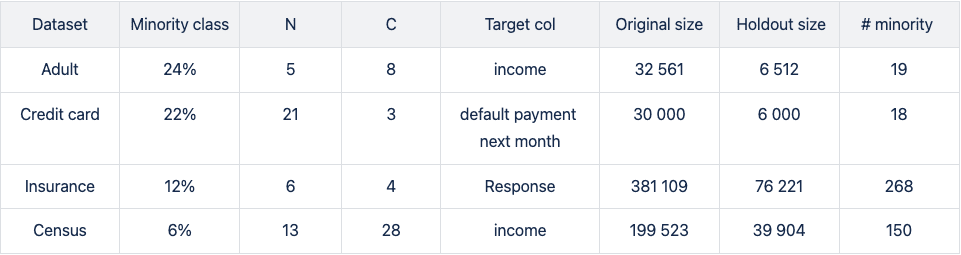

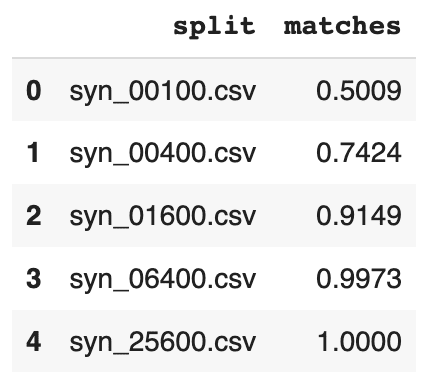

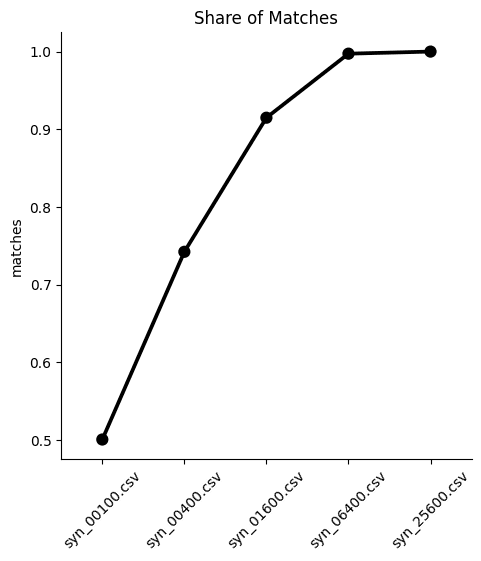

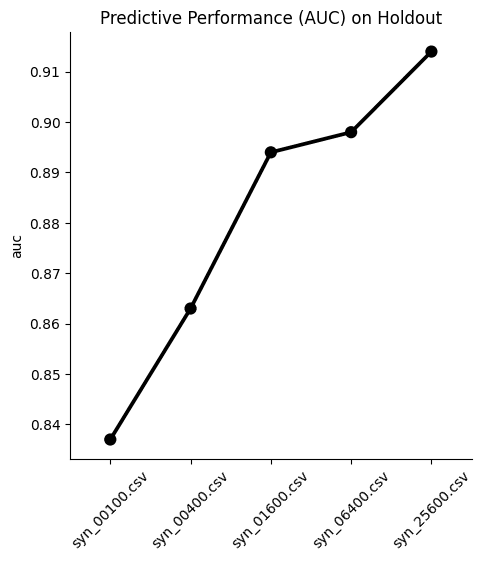

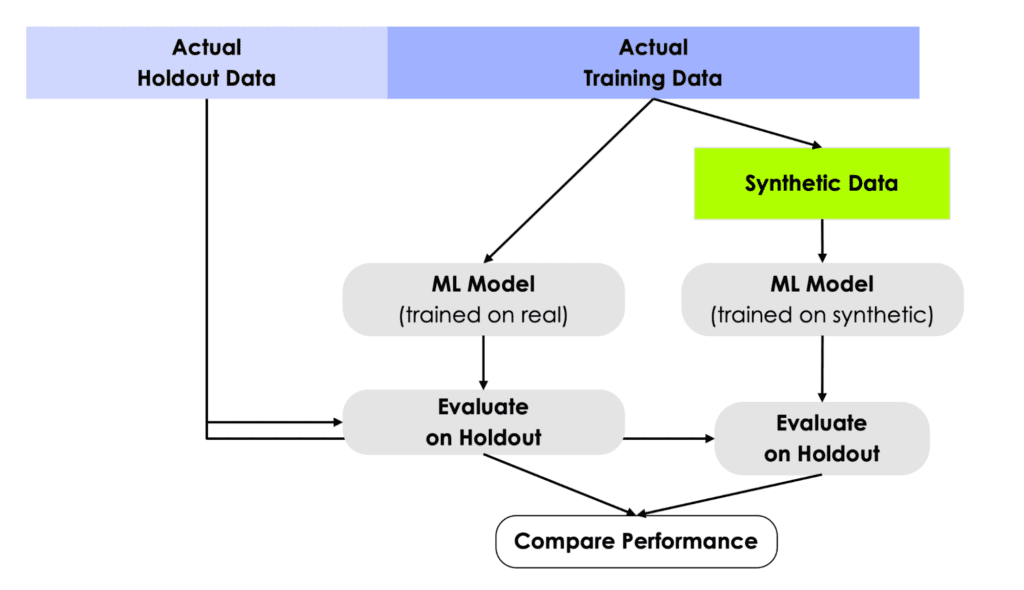

Figure 1: Experimental Setup: (1) We split the original data set into a base data set and a holdout. (2) Strong imbalances are introduced in the base data set by downsampling the minority classes to fractions as low as 0.05% to yield the unbalanced training data. (3) We test different mitigation strategies: balancing through naive upsampling, SMOTE-NC upsampling, and upsampling with AI-generated synthetic records (the hybrid data set). (4) We train LGBM, RandomForest, and XGB classifiers on the balanced and unbalanced training data. (5) We evaluate the properties of the upsampling techniques by measuring the performance of the trained classifier on the holdout set. Steps 1–5 are repeated five times, and we report the mean AUC-ROC as well as the AUC-PR.

For every data set we use in our experiments, we run through the following steps (see Fig. 1):

- We split the original data set into a base and a holdout set by using a five-fold stratified sampling approach to ensure that each class is represented proportionally.

- All of the original data sets have a binary target column and only a rather moderate imbalance with the fraction of the minority class ranging from 6% to 24% (see table 1 for data set details). We artificially induce different levels of strong imbalances to the base set by randomly down-sampling the minority class, resulting in unbalanced training data sets with minority fractions of 0.05%, 0.1%, 0.2%, 0.5%, 1%, 2%, and 5%.

- To mitigate the strong imbalances in the training data sets, we apply three different upsampling techniques:

- naive oversampling (red box in fig. 1) duplicating existing examples of the minority classes (scikit-learn, RandomOverSampler)

- SMOTE-NC (blue box in fig. 1): applying the SMOTE-NC upsampling technique (scikit-learn, SMOTENC)

- Hybrid (green box in fig. 1): The hybrid data set represents the concept of enriching unbalanced training data with AI-generated synthetic data. It is composed of the training data (including majority samples and a limited number of minority samples) along with additional synthetic minority samples that are created using an AI-based synthetic data generator. This generator is trained on the highly unbalanced training data set. In this study, we use the MOSTLY AI synthetic data platform. It is freely accessible for generating highly realistic AI-based synthetic data.

In all cases, we upsample the minority class to achieve a 50:50 balance between the majority and minority classes, resulting in the naively balanced, the SMOTE-NC balanced, and the balanced hybrid data set.

- We assess the benefits of the different upsampling techniques by training three popular classifiers: RandomForest, XGB, and LightGBM, on the balanced data sets. Additionally, we train the classifiers on the heavily unbalanced training data sets as a baseline in the evaluation of the predictive model performance.

- The classifiers are scored on the holdout set, and we calculate the AUC-ROC score and AUC-PR score across all upsampling techniques and initial imbalance ratios for all 5 folds. We report the average scores over five different samplings and model predictions. We opt for AUC metrics to eliminate dependencies on thresholds, as seen in, e.g., F1 scores.

The results of upsampling

We run four publicly available data sets (Figure 1) of varying sizes through steps 1–5: Adult, Credit Card, Insurance, and Census (Kohavi and Becker). All data sets tested are of mixed type (categorical and numerical features) with a binary, that is, a categorical target column.

In step 2 (Fig. 1), we downsample minority classes to induce strong imbalances. For the smaller data sets with ~30k records, downsampling to minority-class fractions of 0.1% results in extremely low numbers of minority records.

The downsampled Adult and Credit Card unbalanced training data sets contain as little as 19 and 18 minority records, respectively. This scenario mimics situations where data is limited and extreme cases occur rarely. Such setups create significant challenges for predictive models, as they may encounter difficulty making accurate predictions and generalizing well on unseen data.

Please note that the holdout sets on which the trained predictive models are scored are not subject to extreme imbalances as they are sampled from the original data before downsampling is applied. The imbalance ratios of the holdout set are moderate and vary from 6 to 24%.

In the evaluation, we report both the AUC-ROC and the AUC-PR due to the moderate but inhomogeneous distribution of minority fractions in the holdout set. The AUC-ROC is a very popular and expressive metric, but it is known to be overly optimistic on unbalanced optimization problems. While the AUC-ROC considers both classes, making it susceptible to neglecting the minority class, the AUC-PR focuses on the minority class as it is built up by precision and recall.

Upsampling the Adult income dataset

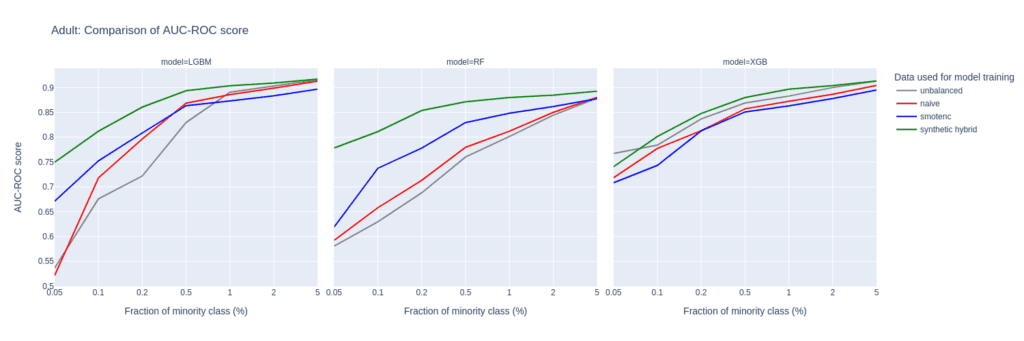

The largest differences between upsampling techniques are observed in the AUC-ROC when balancing training sets with a substantial class imbalance of 0.05% to 0.5%. This scenario involves a very limited number of minority samples, down to 19 for the Adult unbalanced training data set.

For the RF and the LGBM classifiers trained on the balanced hybrid data set, the AUC-ROC is larger than the ones obtained with other upsampling techniques. Differences can go up to 0.2 (RF classifier, minority fraction of 0.05%) between the AI-based synthetic upsampling and the second-best method.

The AUC-PR shows similar yet less pronounced differences. LGBM and XGB classifiers trained on the balanced hybrid data set perform best throughout almost all minority fractions. Interestingly, results for the RF classifier are mixed. Upsampling with synthetic data does not always lead to better performance, but it is always among the best-performing methods.

While synthetic data upsampling improves results through most of the minority fractions for the XGB classifier, too, the differences in performance are less pronounced. Especially the XGB classifier trained on the highly unbalanced training data performs surprisingly well. This suggests that the XGB classifier is better suited for handling unbalanced data.

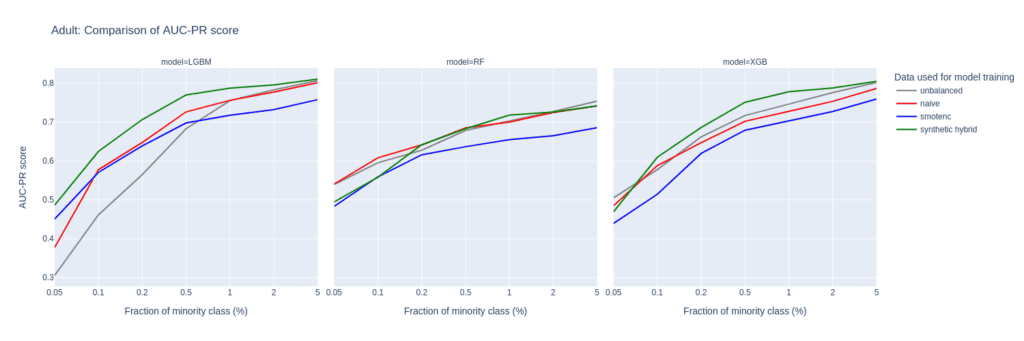

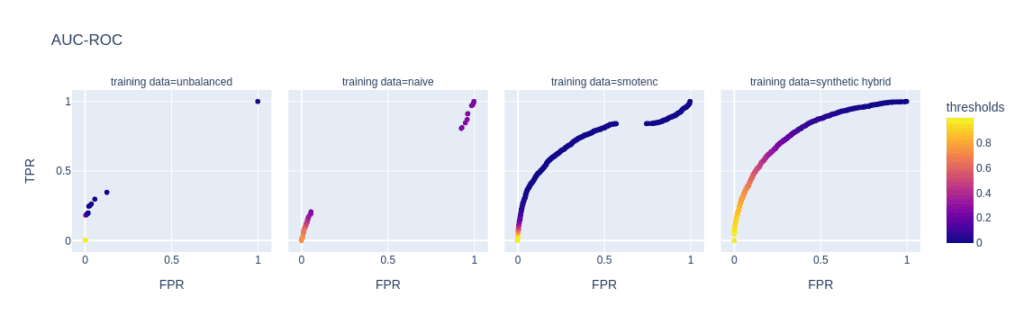

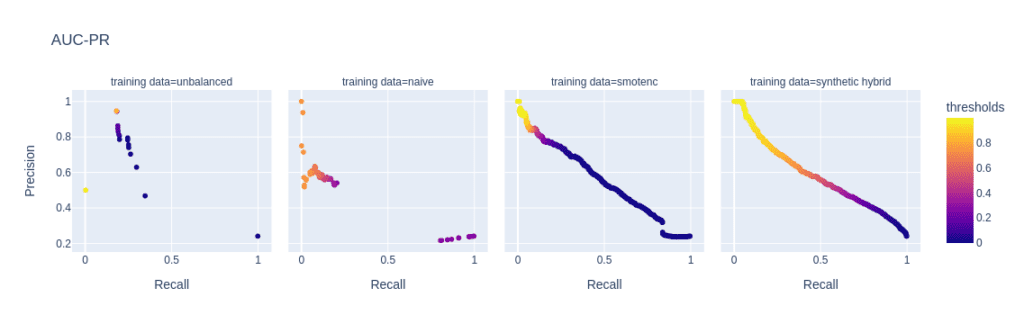

The reason for the performance differences in the AUC-ROC and AUC-PR is due to the low diversity and, consequently, overfitting when using naive or SMOTE-NC upsampling. These effects are visible in, e.g., the ROC and PR curves of the LGBM classifier for a minority fraction of 0.1% (fig. 3).

Every point on these curves corresponds to a specific prediction threshold for the classifier. The set of threshold values is defined by the variance of probabilities predicted by the models when scored on the holdout set. For both the highly unbalanced training data and the naively upsampled one, we observe very low diversity, with more than 80% of the holdout samples predicted to have an identical, very low probability of belonging to the minority class.

In the plot of the PR curve, this leads to an accumulation of points in the area with high precision and low recall, which means that the model is very conservative in making positive predictions and only makes a positive prediction when it is very confident that the data point belongs to the positive, that is, the minority class. This demonstrates the effect of overfitting on a few samples in the minority group.

SMOTE-NC has a much higher but still limited diversity, resulting in a smoother PR curve which, however, still contains discontinuities and has a large segment where precision and recall change rapidly with small changes in the prediction threshold.

The hybrid data set offers high diversity during model training, resulting in almost every holdout sample being assigned an unique probability of belonging to the minority class. Both ROC and PR curves are smooth and have a threshold of ~0.5 at the center, the point that is closest to the perfect classifier.

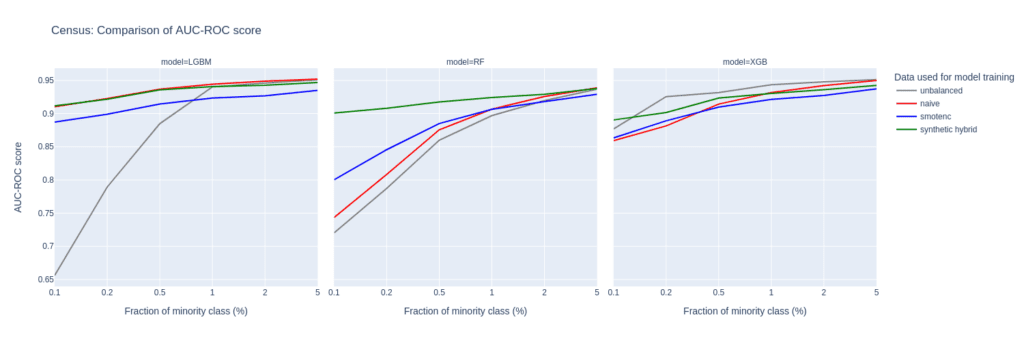

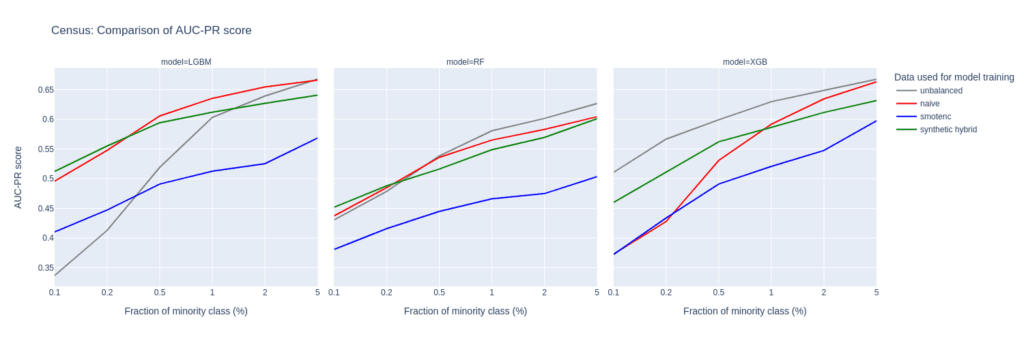

Figure 2: AUC-ROC (top) and AUC-PR (bottom) of classifiers LGBM, RandomForest (RF), and XGB trained on the Adult data set to predict the target feature income. The classifiers are trained on unbalanced data sets (grey) and data sets that are upsampled naively (red), with the SMOTE-NC algorithm (blue), and with AI-generated synthetic records (green). AUC values are reported for different fractions of the minority class in the unbalanced training data (x-axis).

Figure 3: ROC (top) and PR (bottom) curves of the Light GBM classifier trained on different versions of the adult data set (from left to right): unbalanced training data (minority class fraction 0.1%), naively upsampled training data, training data upsampled with SMOTE-NC, and training data enriched with AI-generated synthetic records (synthetic hybrid). Every point on the plots corresponds to a specific prediction threshold for the classifier.

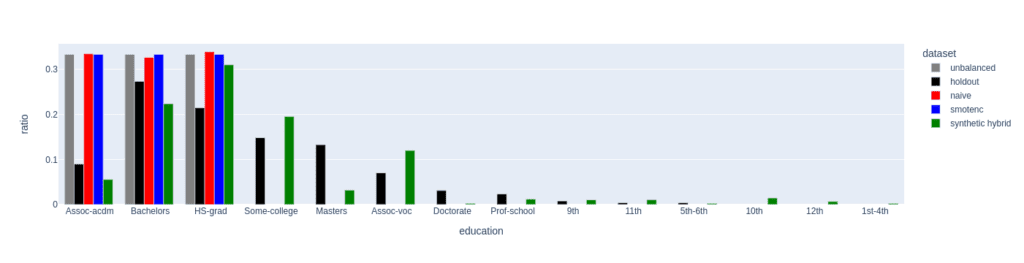

Figure 4: Distribution of the feature education for the female subgroup (sex equals female) of the minority class (income equals high). The distributions of the unbalanced (grey, minority class fraction of 0.1%), the naively upsampled (red), and the SMOTE-NC upsampled (blue) considerably differ from the holdout distribution. Only the data set upsampled with AI-generated synthetic records (synthetic hybrid) recovers the holdout distribution to a satisfactory degree and captures its diversity.

The limited power in creating diverse samples in situations where the minority class is severely underrepresented stems from naive upsampling and SMOTE-NC being limited to duplicating and interpolating between existing minority samples. Both methods are bound to a limited region in feature space.

Upsampling with AI-based synthetic minority samples, on the other hand, can, in principle, populate any region in feature space and can leverage and learn from properties of the majority samples which are transferable to minority examples, resulting in more diverse and realistic synthetic minority samples.

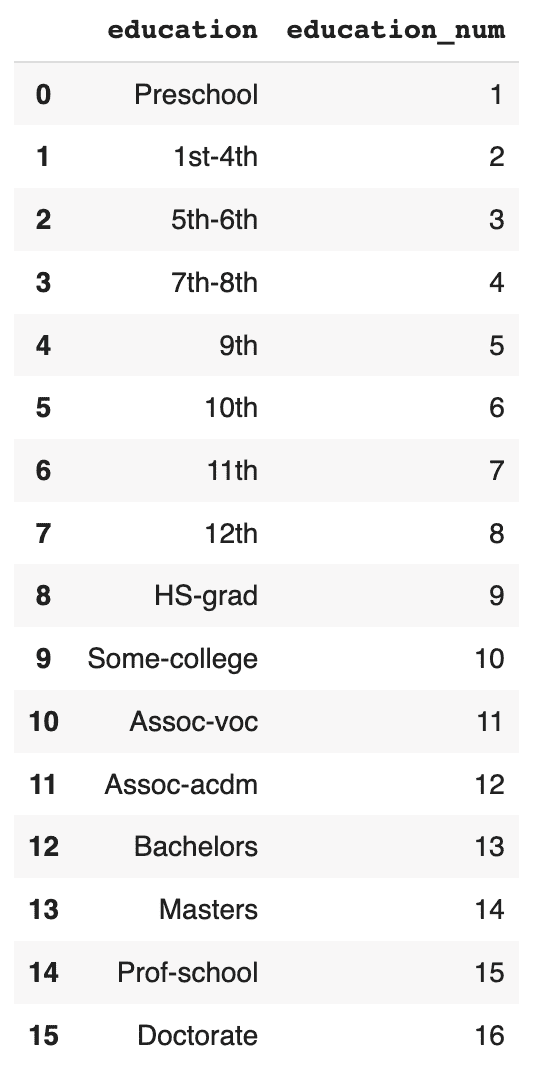

We analyze the difference in diversity by further “drilling down” the minority class (feature “income” equals “high”) and comparing the distribution of the feature “education” for the female subgroup (feature “sex” equals “female”) in the upsampled data sets (fig. 4).

For a minority fraction of 0.1%, this results in only three female minority records. Naive upsampling and SMOTE-NC have a very hard time generating diversity in such settings. Both just duplicate the existing categories “Bachelors”, “HS-grade”, and "Assoc-acdm,” resulting in a strong distortion of the distribution of the “education” feature as compared to the distribution in the holdout data set.

The distribution of the hybrid data has some imperfections, too, but it recovers the holdout distribution to a much better degree. Many more “education” categories are populated, and, with a few exceptions, the frequencies of the holdout data set are recovered to a satisfactory level. This ultimately leads to a larger diversity in the hybrid data set than in the naively balanced or SMOTE-NC balanced one.

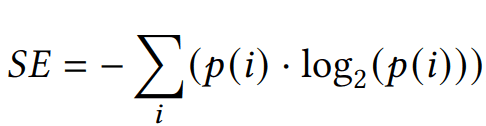

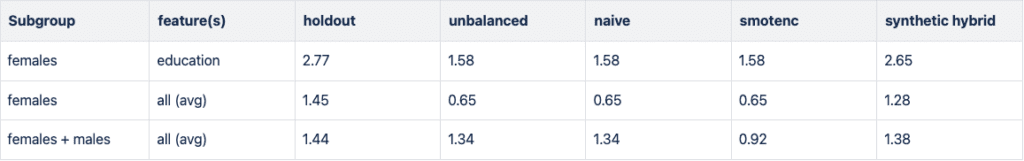

Diversity assessment with the Shannon entropy

We quantitatively assess diversity with the Shannon entropy, which measures the variability within a data set particularly for categorical data. It provides a measure of how uniformly the different categories of a specific feature are distributed within the data set.

The Shannon Entropy (SE) of a specific feature is defined as

where p(i) represents the probability of occurrence, i.e. the relative frequency of category i. SE ranges from 0 to log2(N), where N is the total number of categories. A value of 0 indicates maximum certainty with only one category, while higher entropy implies greater diversity and uncertainty, indicating comparable probabilities p(i) across categories.

In Figure 5, we report the Shannon entropy for different features and subgroups of the high-income population. In all cases, data diversity is the largest for the holdout data set. The downsampled training data set (unbalanced) has a strongly reduced SE, especially when focusing on the small group of high-income women. Naive and SMOTE-NC upsampling cannot recover any of the diversity in the holdout as both are limited to the categories present in the minority class. In line with the results presented in the paragraph above, synthetic data recovers the SE, i.e., the diversity of the holdout data set, to a large degree.

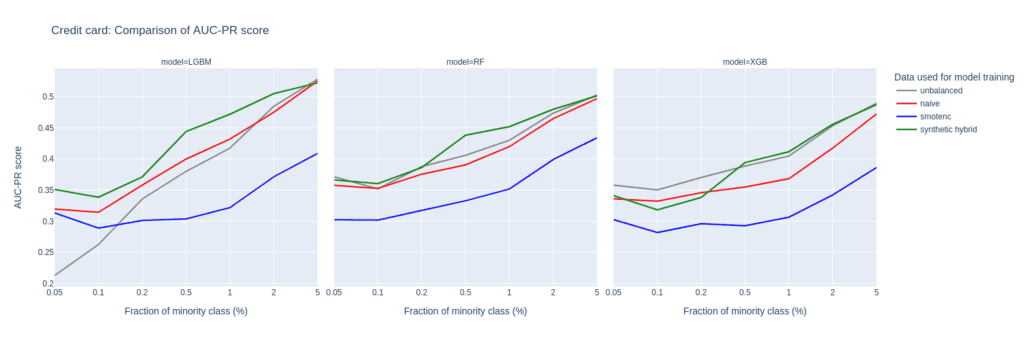

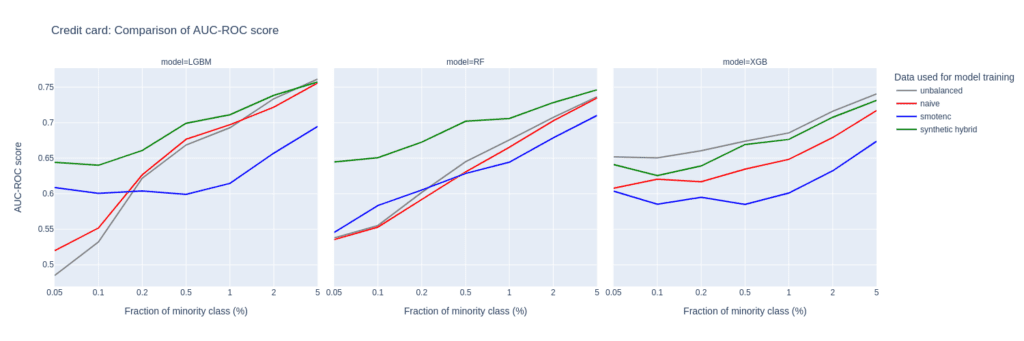

Upsampling the Credit Card data set

The Credit Card data set has similar properties as the Adult data set. The number of records, features, and the original, moderate imbalance are comparable. This again results in a very small number of minority records (18) after downsampling to a 0.1% minority fraction.

The main difference between them is the fact that Credit Card consists of more numeric features. The performance of different upsampling techniques on the unbalanced Credit Card training data set shows similar results to the Adult Data set, too. AUC-ROC and AUC-PR for both LGBM and RF classifiers improve over naive upsampling and SMOTE-NC when using the hybrid data set.

Again, the performance of the XGB model is more comparable between the different balanced data sets and we find very good performance for the highly-unbalanced training data set. Here, too, the hybrid data set is always among the best-performing upsampling techniques.

Interestingly, SMOTE-NC performs worst almost throughout all the metrics. This is surprising because we expect this data set, consisting mainly of numerical features, to be favorable for the SMOTE-NC upsampling technique.

Figure 6: AUC-ROC (top) and AUC-PR (bottom) of classifiers LGBM, RandomForest (RF), and XGB trained on the Credit Card data set to predict the target feature default payment. The classifiers are trained on unbalanced data sets (grey) and data sets that are upsampled naively (red), with the SMOTE-NC algorithm (blue), and with AI-generated synthetic records (green). AUC values are reported for different fractions of the minority class in the unbalanced training data (x-axis).

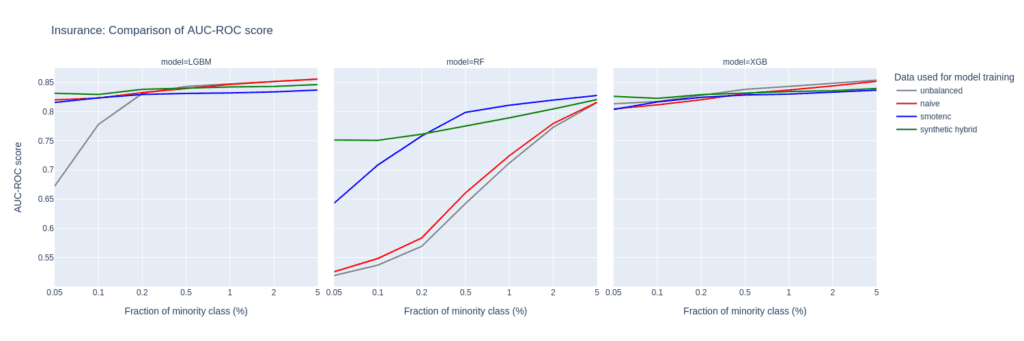

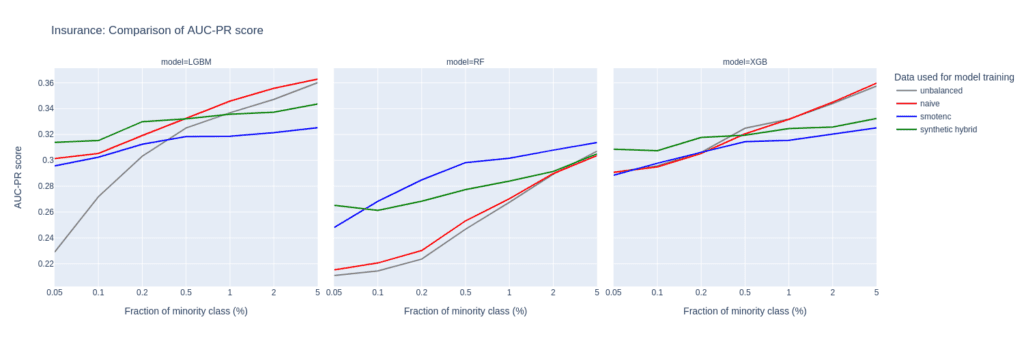

Upsampling the Insurance data set

The Insurance data set is larger than Adult and Census resulting in a larger number of minority records (268) when downsampling to the 0.1% minority fraction. This leads to a much more balanced performance between different upsampling techniques.

A notable difference in performance only appears for very small minority fractions. For minority fractions below 0.5%, both the AUC-ROC and AUC-PR of LGBM and XGB classifiers trained on the hybrid data set are consistently larger than for classifiers trained on other balanced data sets. The maximum performance gains, however, are smaller than those observed for “Adult” and “Credit Card”.

Figure 7: AUC-ROC (top) and AUC-PR (bottom) of classifiers LGBM, RandomForest (RF), and XGB trained on the Insurance data set to predict the target feature Response. The classifiers are trained on unbalanced data sets (grey) and data sets that are upsampled naively (red), with the SMOTE-NC algorithm (blue), and with AI-generated synthetic records (green). AUC values are reported for different fractions of the minority class in the unbalanced training data (x-axis).

Upsampling the Census data set

The Census data set has the largest number of features of all the data sets tested in this study. Especially, the 28 categorical features pose a challenge for SMOTE-NC, leading to poor performance in terms of AUC-PR.

Comparably to the Insurance data set, the performance of the LGBM classifier severely deteriorates when trained on highly unbalanced data sets. On the other hand, the XGB model excels and performs very well even on unbalanced training sets.

The Census data set highlights the importance of carefully selecting the appropriate model and upsampling technique when working with data sets that have high dimensionality and unbalanced class distributions, as performances can vary a lot.

Upsampling with synthetic data mitigates this variance, as all models trained on the hybrid data set are among the best performers across all classifiers and ranges of minority fractions.

Figure 8: AUC-ROC (top) and AUC-PR (bottom) of classifiers LGBM, RandomForest (RF), and XGB trained on the Census data set to predict the target feature income. The classifiers are trained on unbalanced data sets (grey) and data sets that are upsampled naively (red), with the SMOTE-NC algorithm (blue), and with AI-generated synthetic records (green). AUC values are reported for different fractions of the minority class in the unbalanced training data (x-axis).

Synthetic data for upsampling

AI-based synthetic data generation can provide an effective solution to the problem of highly unbalanced data sets in machine learning. By creating diverse and realistic samples, upsampling with synthetic data generation can improve the performance of predictive models. This is especially true for cases where not only the minority fraction is low but also the absolute number of minority records is at a bare minimum. In such extreme settings, training on data upsampled with AI-generated synthetic records leads to better performance of prediction models than upsampling with SMOTE-NC or naive upsampling. Across all parameter settings explored in this study, synthetic upsampling resulted in predictive models which rank among the top-performing ones.

TABLE OF CONTENT

- Why care about data anonymization tools?

- Data anonymization tools: What are they anyway?

- How do data anonymization tools work?

- Legacy data anonymization approaches

- The next generation of data anonymization tools

- Data anonymization approaches and their use cases

- The best and the worst data anonymization approaches

- Conclusion

Why should you care about data anonymization tools?

Data anonymization tools can be your best friends or your data quality’s worst enemies. Sometimes both. Anonymizing data is never easy, and it gets trickier when:

- You collect more data,

- Your datasets become more complex,

- Your adversaries come up with new types of privacy attacks,

- You remove PII from your data, thinking it will provide privacy protection,

- You add too much noise to the data and destroy the intellingence.

You try to do your best and use data anonymization tools on a daily basis. You have removed all sensitive information, masked the rest, and randomized for good measure. So, your data is safe now. Right?

As the Austrians—Arnold Schwarzenegger included—say: Schmäh! Which roughly translates as bullshit. Why do so many data anonymization efforts end up being Schmäh?

Data anonymization tools: What are they anyway?

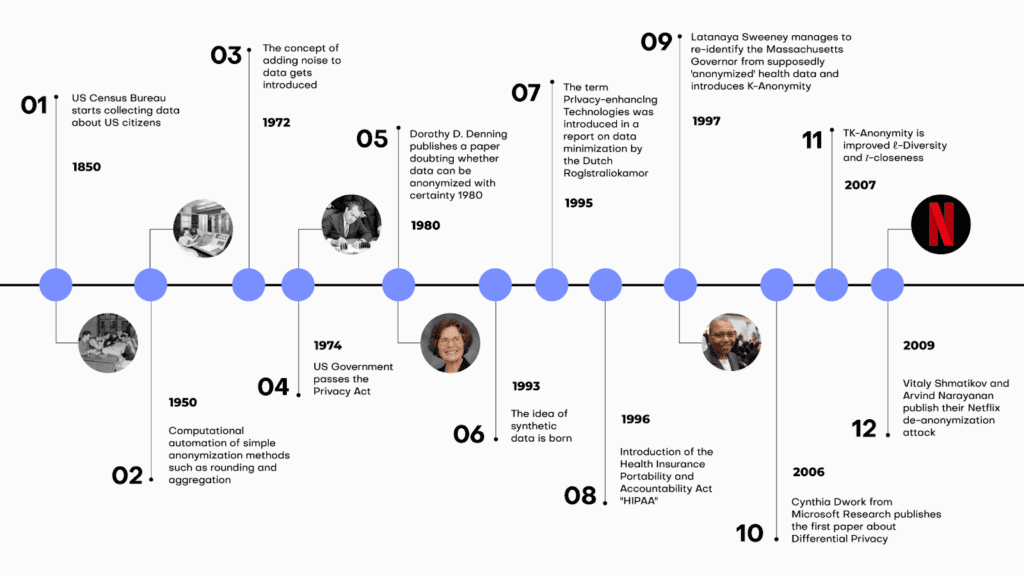

Data anonymization tools conveniently automate the process of data anonymization with the goal of making sure that no individual included in the data can be re-identified. The most ancient of data anonymization tools, namely aggregation and the now obsolete rounding, were born in the 1950s. The concept of adding noise to data as a way to protect anonymity entered the picture in the 1970s. We have come a long way since then. Privacy-enhancing technologies were born in the 90s and have been evolving since, offering better, safer, and more data-friendly data anonymization tools.

Data anonymization tools must constantly evolve since attacks are also getting more and more sophisticated. Today, new types of privacy attacks using the power of AI, can reidentify individuals in datasets that are thought of as anonymous. Data privacy is a constantly shifting field with lots of moving targets and constant pressure to innovate.

Data anonymization tools: How do they work?

Although a myriad of data anonymization tools exist, we can differentiate between two groups of data anonymization tools based on how they approach privacy in principle. Legacy data anonymization tools work by removing or disguising personally identifiable information, or so-called PII. Traditionally, this means unique identifiers, such as social security numbers, credit card numbers, and other kinds of ID numbers.

The trouble with these types of data anonymization tools is that no matter how much of the data is removed or modified, a 1:1 relationship between the data subject and the data points remains. With the advances of AI-based reidentification attacks, it’s getting increasingly easier to find this 1:1 relationship, even in the absence of obvious PII pointers. Our behavior—essentially a series of events—is almost like a fingerprint. An attacker doesn’t need to know my name or social security number if there are other behavior-based identifiers that are unique to me, such as my purchase history or location history. As a result, state of the art data anonymization tools are needed to anonymize behavioral data.

Which data anonymization approaches can be considered legacy?

Legacy data anonymization tools are often associated with manual work, whereas modern data privacy solutions incorporate machine learning and AI to achieve more dynamic and effective results. But let's have a look at the most common forms of traditional anonymization first.

1. What is data masking?

Data masking is one of the most frequently used data anonymization approaches across industries. It works by replacing parts of the original data with asterisks or another placeholder. Data masking can reduce the value or utility of the data, especially if it's too aggressive. The data might not retain the same distribution or characteristics as the original, making it less useful for analysis.

The process of data masking can be complex, especially in environments with large and diverse datasets. The masking should be consistent across all records to ensure that the data remains meaningful. The masked data should adhere to the same validation rules, constraints, and formats as the original dataset. Over time, as systems evolve and new data is added or structures change, ensuring consistent and accurate data masking can become challenging.

The biggest challenge with data masking: to decide what to actually mask. Simply masking PII from data using Python, for example, still has its place, but the resulting data should not be considered anonymized by any stretch of the imagination. The problem are quasi identifiers (= the combination of attributes of data) that if left unprocessed still allow re-identification in a masked dataset quite easily.

2. What is pseudonymization?

Pseudonymization is strictly speaking not an anonymization approach as pseudomized data is not anonymous data. However, it's very common and so we will explain it here. Pseudonymization replaces private identifiers with fake identifiers, or pseudonyms or removes private identifiers alltogether. While the data can still be matched with its source when one has the right key, it can't be matched without it. The 1:1 relationship remains and can be recovered not only by accessing the key but also by linking different datasets. The risk of reversibility is always high, and as a result, pseudonymization should only be used when it’s absolutely necessary to reidentify data subjects at a certain point in time.

The pseudonyms typically need a key for the transformation process. Managing, storing, and protecting this key is critical. If it's compromised, the pseudonymization can be reversed.

What’s more, under GDPR, pseudonymized data is still considered personal data, meaning that data protection obligations continue to apply.

Overall, while pseudonymization might be a common practice today, it should only be used as a stand-alone tool when absolutely necessary. Pseudonymization is not anonymization and pseudonymized data should never be considered anonymized.

3. What is generalization and aggregation?

This method reduces the granularity of the data. For instance, instead of displaying an exact age of 27, the data might be generalized to an age range, like 20-30. Generalization causes a significant loss of data utility by decreasing data granularity. Over-generalizing can render data almost useless, while under-generalizing might not provide sufficient privacy.

You also have to consider the risk of residual disclosure. Generalized data sets might contain enough information to infer about individuals, especially when combined with other data sources.

4. What is data swapping or perturbation?

Data swapping or perturbation describes the approach of replacing original data values with values from other records. The privacy-utility trade-off strikes again: perturbing data leads to a loss of information, which can affect the accuracy and reliability of analyses performed on the perturbed data. However at the same time the achieved privacy protection is not very high. Protecting against re-identification while maintaining data utility is challenging. Finding the appropriate perturbation methods that suit the specific data and use case is not always straightforward.

5. What is randomization?

Randomization is a legacy data anonymization approach that changes the data to make it less connected to a person. This is done through adding random noise to the data.

Some data types, such as geospatial or temporal data, can be challenging to randomize effectively while maintaining data utility. Preserving spatial or temporal relationships in the data can be complex.

Selecting the right approach (i.e. what variables to add noise to and how much) to do the job is also challenging since each data type and use case could call for a different approach. Choosing the wrong approach can have serious consequences downstream, resulting in inadequate privacy protection or excessive data distortion.

Data consumers could be unaware of the effect randomization had on the data and might end up with false conclusions. On the bright side, randomization techniques are relatively straightforward to implement, making them accessible to a wide range of organizations and data professionals.

6. What is data redaction?

Data redaction is similar to data masking, but in the case of this data anonymization approach, entire data values or sections are removed or obscured. Deleting PII is easy to do. However, it’s a sure-fire way to encounter a privacy disaster down the line. It’s also devastating for data utility since critical elements or crucial contextual information could be removed from the data.

Redacted data may introduce inconsistencies or gaps in the dataset, potentially affecting data integrity. Redacting sensitive information can result in a smaller dataset. This could impact statistical analyses and models that rely on a certain volume of data for accuracy.

Next-generation data anonymization tools

The next-generation data anonymization tools, or so-called privacy-enhancing technologies take an entirely different, more use-case-centered approach to data anonymization and privacy protection.

1. Homomorphic encryption

The first group of modern data anonymization tools works by encrypting data in a way that allows for computational operations on encrypted data. The downside of this approach is that the data, well, stays encrypted which makes it very hard to work with such data if it was previously unknown the user. You can't perform e.g. exploratory analyses on encrypted data. In addition it is computationally very intensive and, as such, not widely available and cumbersome to use. As the price of computing power decreases and capacity increases, this technology is set to become more popular and easier to access.

2. Federated learning

Federated learning is a fairly complicated approach, enabling machine learning models to be trained on distributed datasets. Federated learning is commonly used in applications that involve mobile devices, such as smartphones and IoT devices.

For example, predictive text suggestions on smartphones can be improved without sending individual typing data to a central server. In the energy sector, federated learning helps optimize energy consumption and distribution without revealing specific consumption patterns of individual users or entities. However, these federated systems require the participation of all players, which is near-impossible to achieve if the different parts of the system belong to different operators. Simply put, Google can pull it off, while your average corporation would find it difficult.

3. Synthetic data generation

A more readily available approach is an AI-powered data anonymization tool: synthetic data generation. Synthetic data generation extracts the distributions, statistical properties, and correlations of datasets and generates entirely new, synthetic versions of said datasets, where all individual data points are synthetic. The synthetic data points look realistic and, on a group level, behave like the original. As a data anonymization tool, reliable synthetic data generators produce synthetic data that is representative, scalable, and suitable for advanced use cases, such as AI and machine learning development, analytics, and research collaborations.

4. Secure multiparty computation (SMPC)

Secure Multiparty Computation (SMPC), in simple terms, is a cryptographic technique that allows multiple parties to jointly compute a function over their private inputs while keeping those inputs confidential. It enables these parties to collaborate and obtain results without revealing sensitive information to each other.

While it's a powerful tool for privacy-preserving computations, it comes with its set of implementation challenges, particularly in terms of complexity, efficiency, and security considerations. It requires expertise and careful planning to ensure that it is applied effectively and securely in practical applications.

Data anonymization approaches and their use cases

Data anonymization encompass a diverse set of approaches, each with its own strengths and limitations. In this comprehensive guide, we explore ten key data anonymization strategies, ranging from legacy methods like data masking and pseudonymization to cutting-edge approaches such as federated learning and synthetic data generation. Whether you're a data scientist or privacy officer, you will find this bullshit-free table listing their advantages, disadvantages, and common use cases very helpful.

| # | Data Anonymization Approach | Description | Advantages | Disadvantages | Use Cases |

|---|---|---|---|---|---|

| 1 | Data Masking | Masks or disguises sensitive data by replacing characters with symbols or placeholders. | - Simplicity of implementation. - Preservation of data structure. | - Limited protection against inference attacks. - Potential negative impact on data analysis. | - Anonymizing email addresses in communication logs. - Concealing rare names in datasets. - Masking sensitive words in text documents. |

| 2 | Pseudonymization | Replaces sensitive data with pseudonyms or aliases or removes it alltogether. | - Preservation of data structure. - Data utility is generally preserved. - Fine-grained control over pseudonymization rules. | - Pseudomized data is not anonymous data. - Risk of re-identification is very high. - Requires secure management of pseudonym mappings. | - Protecting patient identities in medical research. - Securing employee IDs in HR records. |

| 3 | Generalization/Aggregation | Aggregates or generalizes data to reduce granularity. | - Simple implementation. | - Loss of fine-grained detail in the data. - Risk of data distortion that affects analysis outcomes. - Challenging to determine appropriate levels of generalization. | - Anonymizing age groups in demographic data. - Concealing income brackets in economic research. |

| 4 | Data Swapping/Perturbation | Swaps or perturbs data values between records to break the link between individuals and their data. | - Flexibility in choosing perturbation methods. - Potential for fine-grained control. | - Privacy-utility trade-off is challenging to balance. - Risk of introducing bias in analyses. - Selection of appropriate perturbation methods is crucial. | - E-commerce. - Online user behavior analysis. |

| 5 | Randomization | Introduces randomness (noise) into the data to protect data subjects. | - Flexibility in applying to various data types. - Reproducibility of results when using defined algorithms and seeds. | - Privacy-utility trade-off is challenging to balance. - Risk of introducing bias in analyses. - Selection of appropriate randomization methods is hard. | - Anonymizing survey responses in social science research. - Online user behavior analysis. |

| 6 | Data Redaction | Removes or obscures specific parts of the dataset containing sensitive information. | - Simplicity of implementation. | - Loss of data utility, potentially significant. - Risk of removing contextual information. - Data integrity challenges. | - Concealing personal information in legal documents. - Removing private data in text documents. |

| 7 | Homomorphic Encryption | Encrypts data in such a way that computations can be performed on the encrypted data without decrypting it, preserving privacy. | - Strong privacy protection for computations on encrypted data. - Supports secure data processing in untrusted environments. - Cryptographically provable privacy guarantees. | - Encrypted data cannot be easily worked with if previously unknown to the user. - Complexity of encryption and decryption operations. - Performance overhead for cryptographic operations. - May require specialized libraries and expertise. | - Basic data analytics in cloud computing environments. - Privacy-preserving machine learning on sensitive data. |

| 8 | Federated Learning | Trains machine learning models across decentralized edge devices or servers holding local data samples, avoiding centralized data sharing. | - Preserves data locality and privacy, reducing data transfer. - Supports collaborative model training on distributed data. - Suitable for privacy-sensitive applications. | - Complexity of coordination among edge devices or servers. - Potential communication overhead. - Ensuring model convergence can be challenging. - Shared models can still leak privacy. | - Healthcare institutions collaboratively training disease prediction models. - Federated learning for mobile applications preserving user data privacy. - Privacy-preserving AI in smart cities. |

| 9 | Synthetic Data Generation | Creates artificial data that mimics the statistical properties of the original data while protecting privacy. | - Strong privacy protection with high data utility. - Preserves data structure and relationships. - Scalable for generating large datasets. | - Accuracy and representativeness of synthetic data may vary depending on the generator. - May require specialized algorithms and expertise. | - Sharing synthetic healthcare data for research purposes. - Synthetic data for machine learning model training. - Privacy-preserving data sharing in financial analysis. |

| 10 | Secure Multiparty Computation (SMPC) | Enables multiple parties to jointly compute functions on their private inputs without revealing those inputs to each other, preserving privacy. | - Strong privacy protection for collaborative computations. - Suitable for multi-party data analysis while maintaining privacy. - Offers security against collusion. | - Complexity of protocol design and setup. - Performance overhead, especially for large-scale computations. - Requires trust in the security of the computation protocol. | - Privacy-preserving data aggregation across organizations. - Collaborative analytics involving sensitive data from multiple sources. - Secure voting systems. |

The best and the worst data anonymization approaches

When it comes to choosing the right data anonymization approach, we are faced with a complex problem requiring a nuanced view and careful consideration. When we put all the Schmäh aside, choosing the right data anonymization strategy comes down to balancing the so-called privacy-utility trade-off.

The privacy-utility trade-off refers to the balancing act of data anonymization’ two key objectives: providing privacy to data subjects and utility to data consumers. Depending on the specific use case, the quality of implementation, and the level of privacy required, different data anonymization approaches are more or less suitable to achieve the ideal balance of privacy and utility. However, some data anonymization approaches are inherently better than others when it comes to the privacy-utility trade-off. High utility with robust, unbreakable privacy is the unicorn all privacy officers are hunting for, and since the field is constantly evolving with new types of privacy attacks, data anonymization must evolve too.

As it stands today, the best data anonymization approaches for preserving a high level of utility while effectively protecting privacy are the following:

Synthetic Data Generation

Synthetic data generation techniques create artificial datasets that mimic the statistical properties of the original data. These datasets can be shared without privacy concerns. When properly designed, synthetic data can preserve data utility for a wide range of statistical analyses while providing strong privacy protection. It is particularly useful for sharing data for research and analysis without exposing sensitive information.

Privacy: high

Utility: high for analytical, data sharing, and ML/AI training use cases

Homomorphic Encryption

Homomorphic encryption allows computations to be performed on encrypted data without the need to decrypt it. This technology is valuable for secure data processing in untrusted environments, such as cloud computing. While it can be computationally intensive, it offers a high level of privacy and maintains data utility for specific tasks, particularly when privacy-preserving machine learning or data analytics is involved. Depending on the specific encryption scheme and parameters chosen, there may be a trade-off between the level of security and the efficiency of computations. Also, increasing security often leads to slower performance.

Privacy: high

Utility: can be high, depending on the use case

Secure Multiparty Computation (SMPC)

SMPC allows multiple parties to jointly compute a function over their private inputs without revealing those inputs to each other. It offers strong privacy guarantees and can be used for various collaborative data analysis tasks while preserving data utility. SMPC has applications in areas like secure data aggregation and privacy-preserving collaborative analytics.

Privacy: High

Utility: can be high, depending on the use case

Data anonymization tools: the saga continues

In the ever-evolving landscape of data anonymization strategies, the journey to strike a balance between preserving privacy and maintaining data utility is an ongoing challenge. As data grows more extensive and complex and adversaries devise new tactics, the stakes of protecting sensitive information have never been higher.

Legacy data anonymization approaches have their limitations and are increasingly likely to fail in protecting privacy. While they may offer simplicity in implementation, they often fall short in preserving the intricate relationships and structures within data.

Modern data anonymization tools, however, present a promising shift towards more robust privacy protection. Privacy-enhancing technologies have emerged as powerful solutions. These tools harness encryption, machine learning, and advanced statistical techniques to safeguard data while enabling meaningful analysis.

Furthermore, the rise of synthetic data generation signifies a transformative approach to data anonymization. By creating artificial data that mirrors the statistical properties of the original while safeguarding privacy, synthetic data generation provides an innovative solution for diverse use cases, from healthcare research to machine learning model training.

As the data privacy landscape continues to evolve, organizations must stay ahead of the curve. What is clear is that the pursuit of privacy-preserving data practices is not only a necessity but also a vital component of responsible data management in our increasingly vulnerable world.

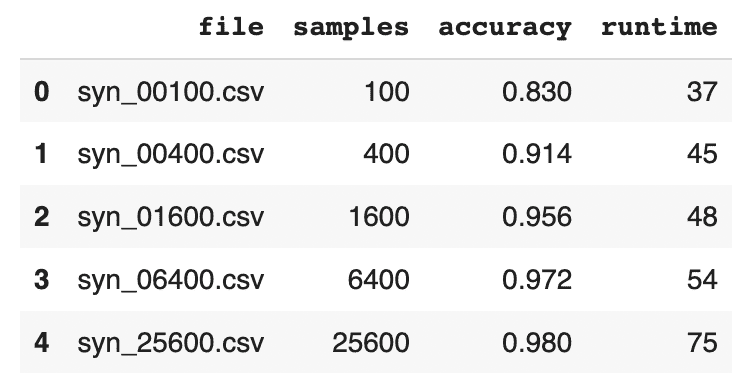

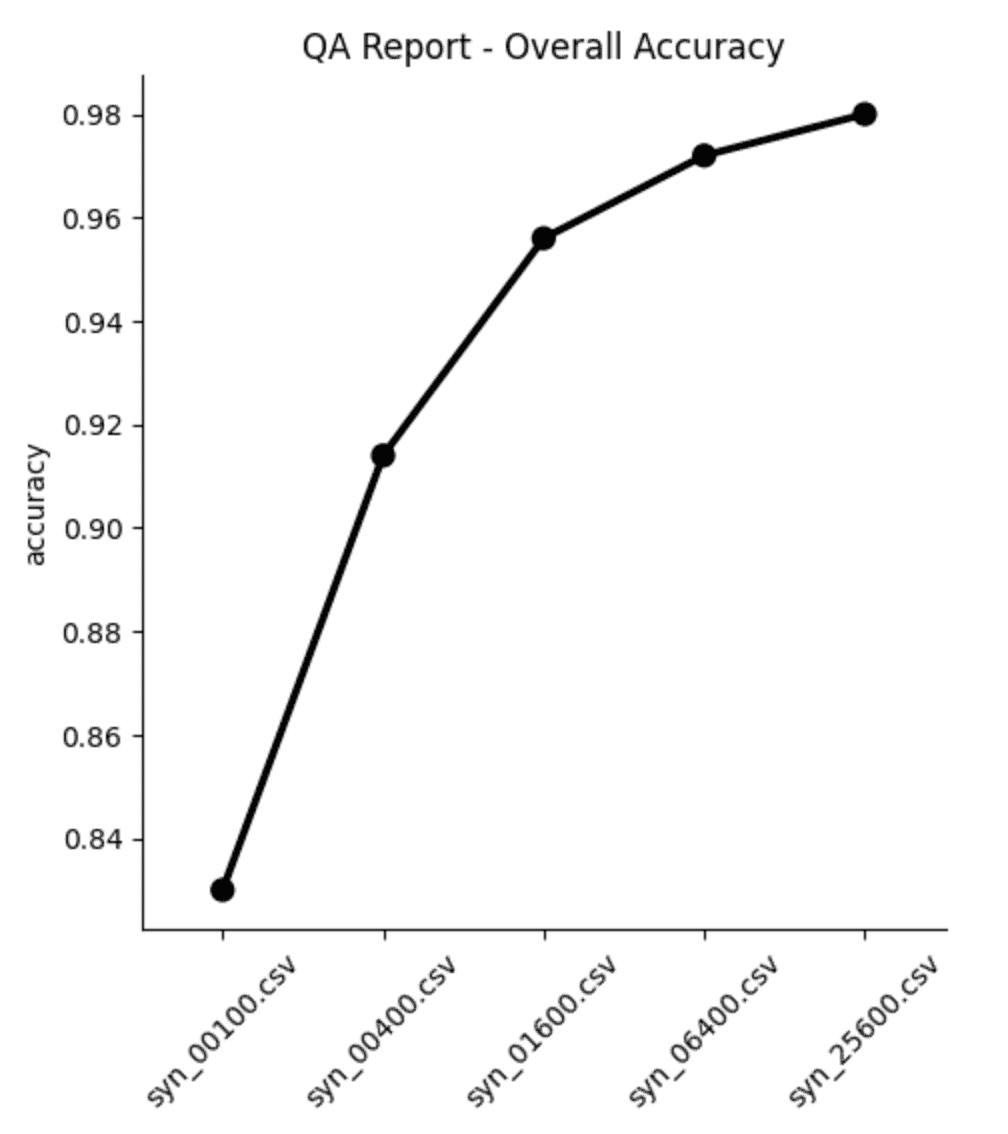

In this tutorial, you will learn how to use synthetic rebalancing to improve the performance of machine-learning (ML) models on imbalanced classification problems. Rebalancing can be useful when you want to learn more of an otherwise small or underrepresented population segment by generating more examples of it. Specifically, we will look at classification ML applications in which the minority class accounts for less than 0.1% of the data.

We will start with a heavily imbalanced dataset. We will use synthetic rebalancing to create more high-quality, statistically representative instances of the minority class. We will compare this method against 2 other types of rebalancing to explore their advantages and pitfalls. We will then train a downstream machine learning model on each of the rebalanced datasets and evaluate their relative predictive performance. The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

Fig 1 - Synthetic rebalancing creates more statistically representative instances of the minority class

Why should I rebalance my dataset?

In heavily imbalanced classification projects, a machine learning model has very little data to effectively learn patterns about the minority class. This will affect its ability to correctly class instances of this minority class in the real (non-training) data when the model is put into production. A common real-world example is credit card fraud detection: the overwhelming majority of credit card transactions are perfectly legitimate, but it is precisely the rare occurrences of illegitimate use that we would be interested in capturing.

Let’s say we have a training dataset with 100,000 credit card transactions which contains 999,900 legitimate transactions and 100 fraudulent ones. A machine-learning model trained on this dataset would have ample opportunity to learn about all the different kinds of legitimate transactions, but only a small sample of 100 records in which to learn everything it can about fraudulent behavior. Once this model is put into production, the probability is high that fraudulent transactions will occur that do not follow any of the patterns seen in the small training sample of 100 fraudulent records. The machine learning model is unlikely to classify these fraudulent transactions.

So how can we address this problem? We need to give our machine learning model more examples of fraudulent transactions in order to ensure optimal predictive performance in production. This can be achieved through rebalancing.

Rebalancing Methods

We will explore three types of rebalancing:

- Random (or “naive”) oversampling

- SMOTE upsampling

- Synthetic rebalancing

The tutorial will give you hands-on experience with each type of rebalancing and provide you with in-depth understanding of the differences between them so you can choose the right method for your use case. We’ll start by generating an imbalanced dataset and showing you how to perform synthetic rebalancing using MOSTLY AI's synthetic data generator. We will then compare performance metrics of each rebalancing method on a downstream ML task.

But first things first: we need some data.

Generate an Imbalanced Dataset

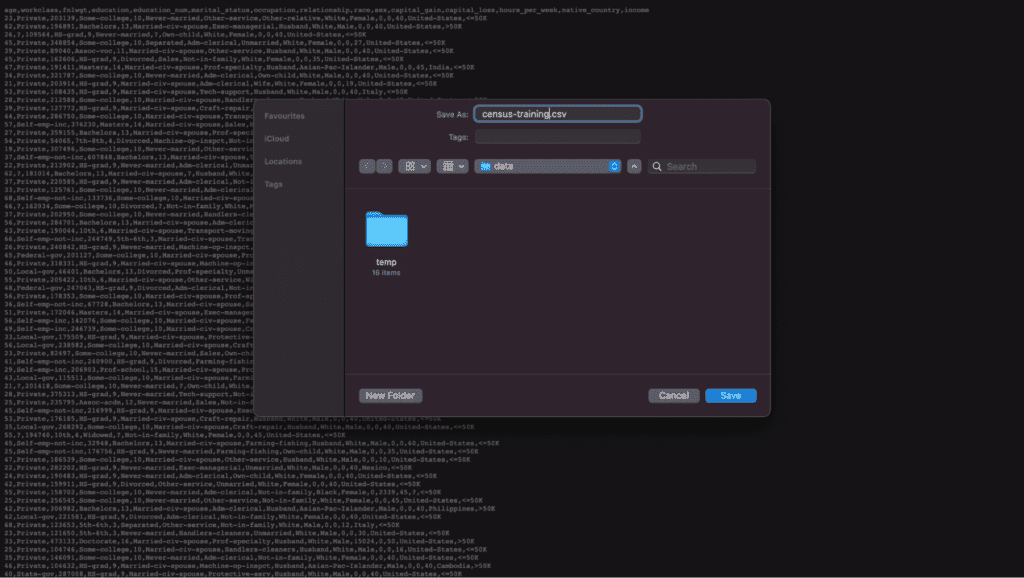

For this tutorial, we will be using the UCI Adult Income dataset, as well as the same training and validation split, that was used in the Train-Synthetic-Test-Real tutorial. However, for this tutorial we will work with an artificially imbalanced version of the dataset containing only 0.1% of high-income (>50K) records in the training data, by downsampling the minority class. The downsampling has already been done for you, but if you want to reproduce it yourself you can use the code block below:

def create_imbalance(df, target, ratio):

val_min, val_maj = df[target].value_counts().sort_values().index

df_maj = df.loc[df[target]==val_maj]

n_min = int(df_maj.shape[0]/(1-ratio)*ratio)

df_min = df.loc[df[target]==val_min].sample(n=n_min, random_state=1)

df_maj = df.loc[df[target]==val_maj]

df_imb = pd.concat([df_min, df_maj]).sample(frac=1, random_state=1)

return df_imb

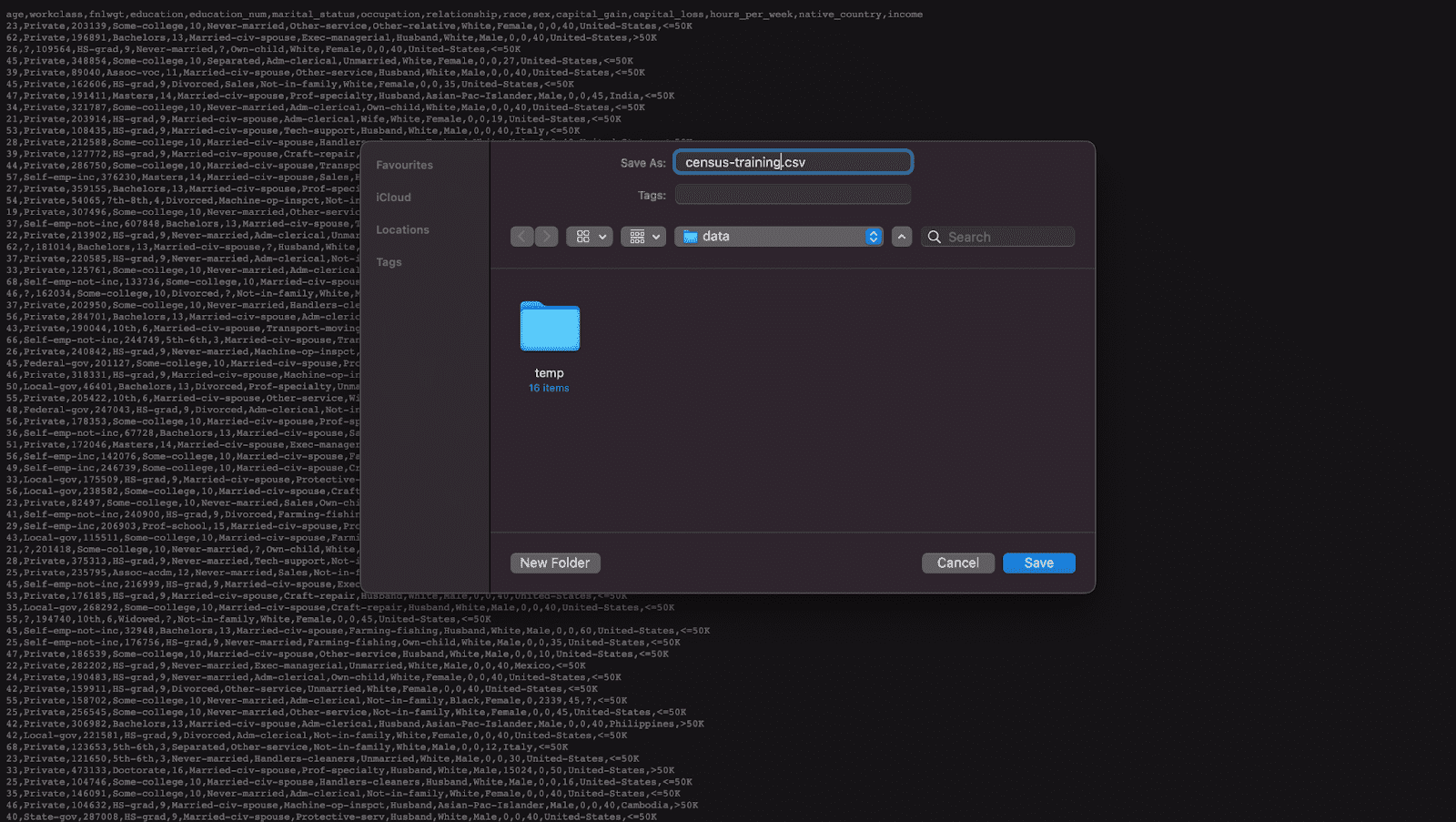

df_trn = pd.read_csv(f'{repo}/census-training.csv')

df_trn_imb = create_imbalance(df_trn, 'income', 1/1000)

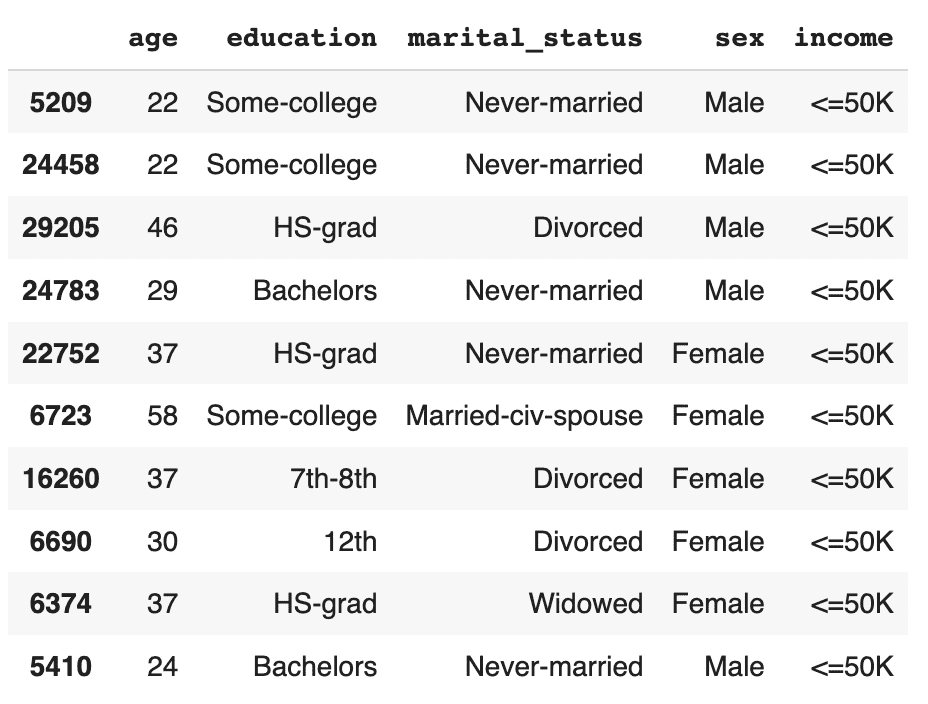

df_trn_imb.to_csv('census-training-imbalanced.csv', index=False)Let’s take a quick look at this imbalanced dataset by randomly sampling 10 rows. For legibility let’s select only a few columns, including the income column as our imbalanced feature of interest:

trn = pd.read_csv(f'{repo}/census-training-imbalanced.csv')

trn.sample(n=10)

You can try executing the line above multiple times to see different samples. Still, due to the strong class imbalance, the chance of finding a record with high income in a random sample of 10 is minimal. This would be problematic if you were interested in creating a machine learning model that could accurately classify high-income records (which is precisely what we’ll be doing in just a few minutes).

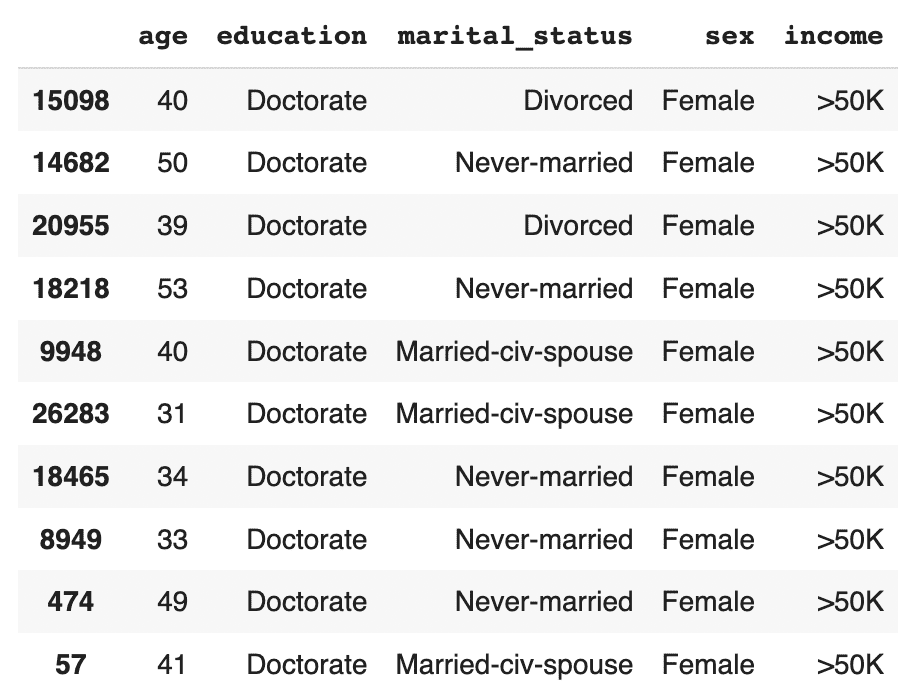

The problem becomes even more clear when we try to sample a specific sub-group in the population. Let’s sample all the female doctorates with a high income in the dataset. Remember, the dataset contains almost 30 thousand records.

trn[

(trn['income']=='>50K')

& (trn.sex=='Female')

& (trn.education=='Doctorate')

]

It turns out there are actually no records of this type in the training data. Of course, we know that these kinds of individuals exist in the real world and so our machine learning model is likely to encounter them when put in production. But having had no instances of this record type in the training data, it is likely that the ML model will fail to classify this kind of record correctly. We need to provide the ML model with a higher quantity and more varied range of training samples of the minority class to remedy this problem.

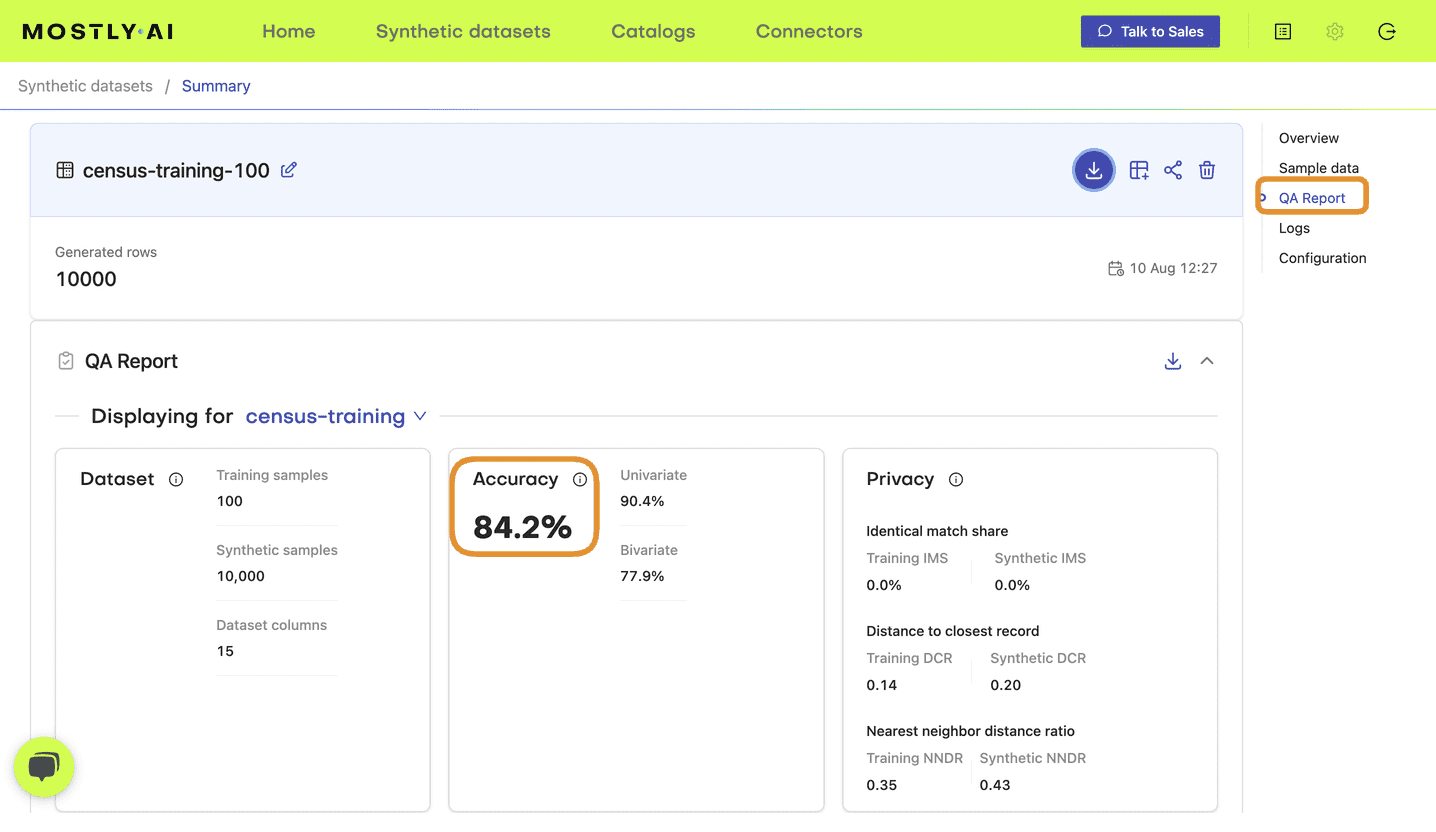

Synthetic rebalancing with MOSTLY AI

MOSTLY AI offers a synthetic rebalancing feature that can be used with any categorical column. Let’s walk through how this works:

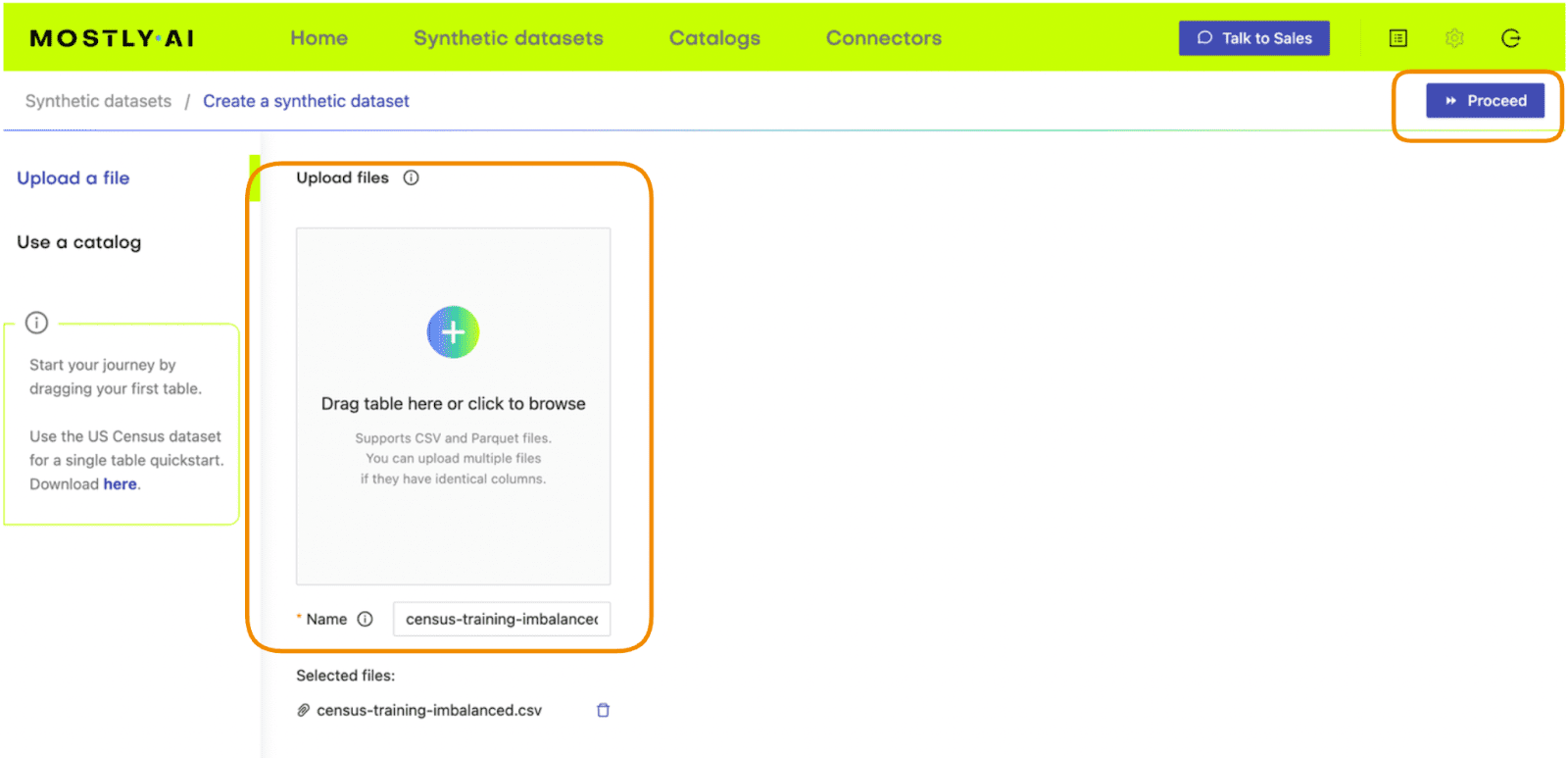

- Download the imbalanced dataset here if you haven’t generated it yourself already. Use Ctrl+S or Cmd+S to save the file locally.

- Go to your MOSTLY AI account and navigate to “Synthetic Datasets”. Upload

census-training-imbalanced.csvand click “Proceed”.

Fig 2 - Upload the original dataset to MOSTLY AI’s synthetic data generator.

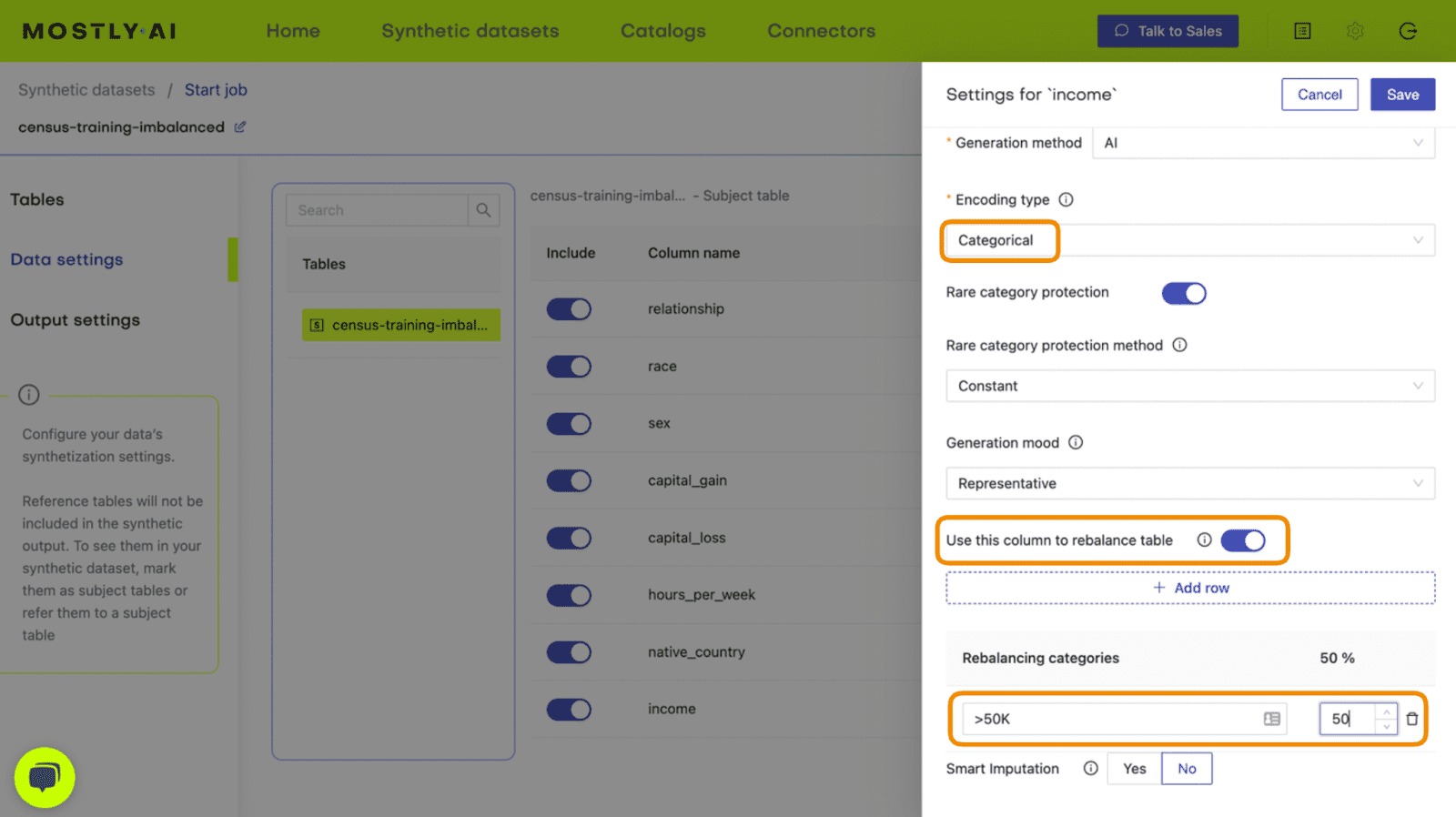

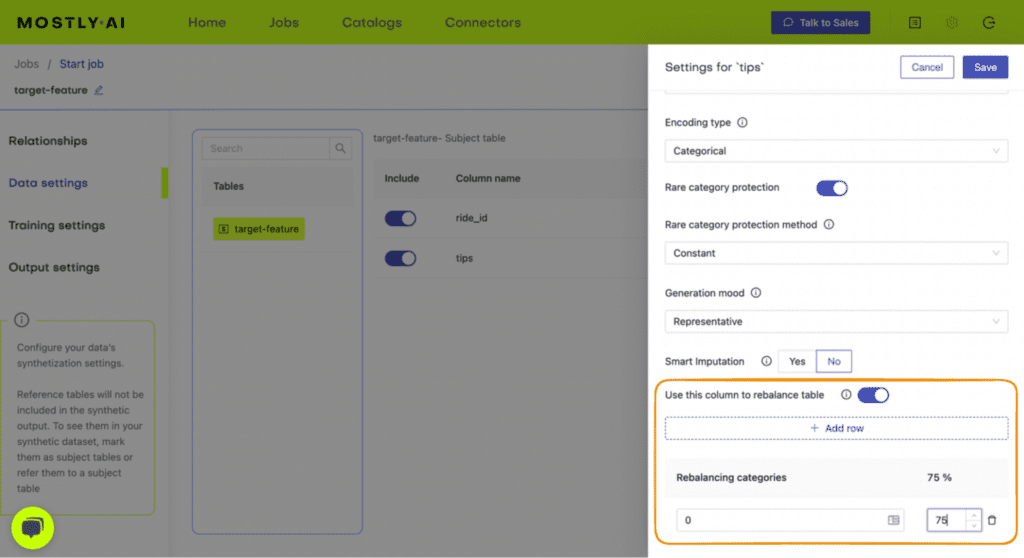

- On the next page, click “Data Settings” and then click on the “Income” column

Fig 3 - Navigate to the Data Settings of the Income column.

- Set the Encoding Type to “Categorical” and select the option to “Use this column to rebalance the table”. Then add a new row and rebalance the “>50K” column to be “50%” of the dataset. This will synthetically upsample the minority class to create an even split between high-income and low-income records.

Fig 4 - Set the relevant settings to rebalance the income column.

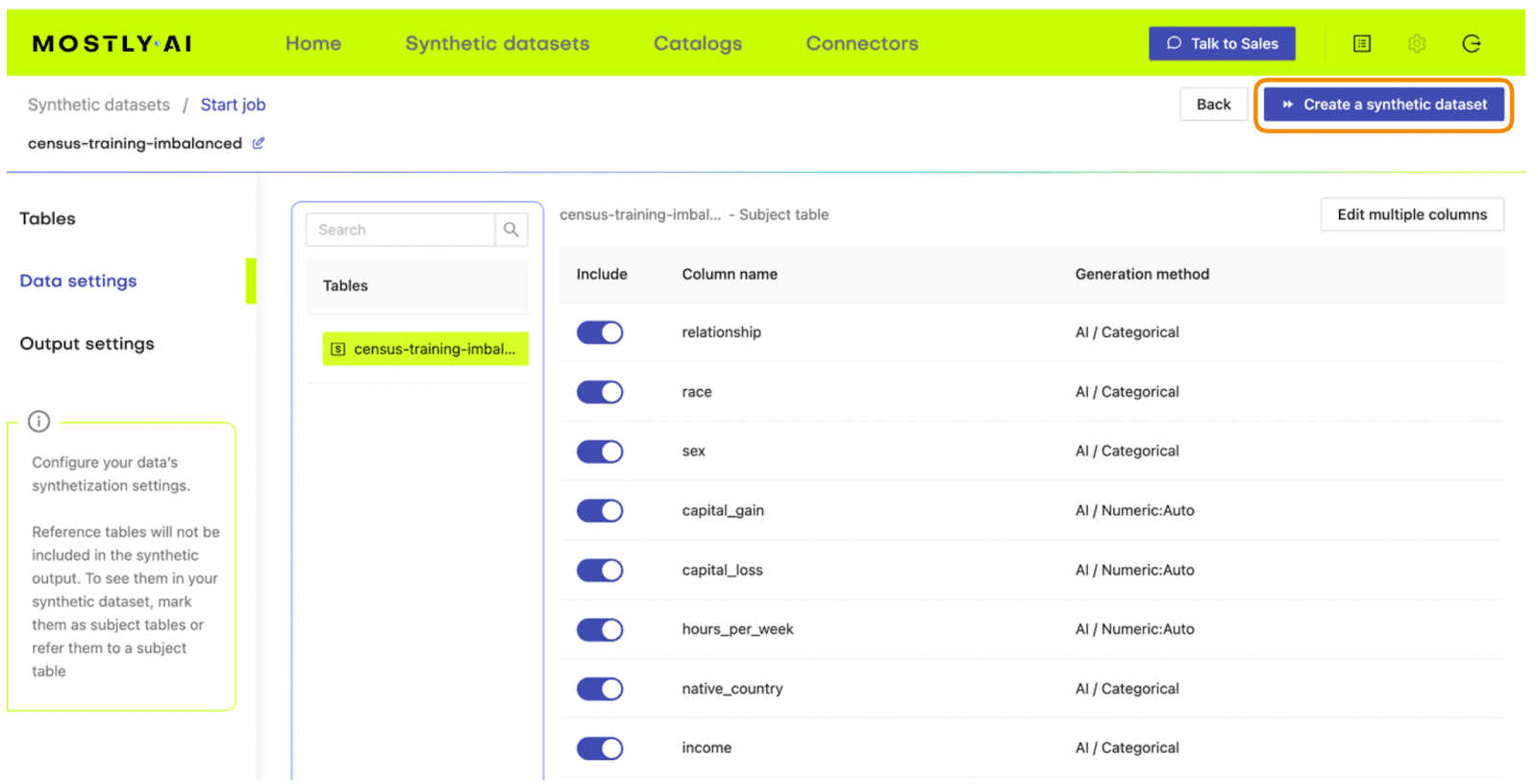

- Click “Save” and on the next page click “Create a synthetic dataset” to launch the job.

Fig 5 - Launch the synthetic data generation

Once the synthesization is complete, you can download the synthetic dataset to disk. Then return to wherever you are running your code and use the following code block to create a DataFrame containing the synthetic data.

# upload synthetic dataset

import pandas as pd

try:

# check whether we are in Google colab

from google.colab import files

print("running in COLAB mode")

repo = 'https://github.com/mostly-ai/mostly-tutorials/raw/dev/rebalancing'

import io

uploaded = files.upload()

syn = pd.read_csv(io.BytesIO(list(uploaded.values())[0]))

print(f"uploaded synthetic data with {syn.shape[0]:,} records and {syn.shape[1]:,} attributes")

except:

print("running in LOCAL mode")

repo = '.'

print("adapt `syn_file_path` to point to your generated synthetic data file")

syn_file_path = './census-synthetic-balanced.csv'

syn = pd.read_csv(syn_file_path)

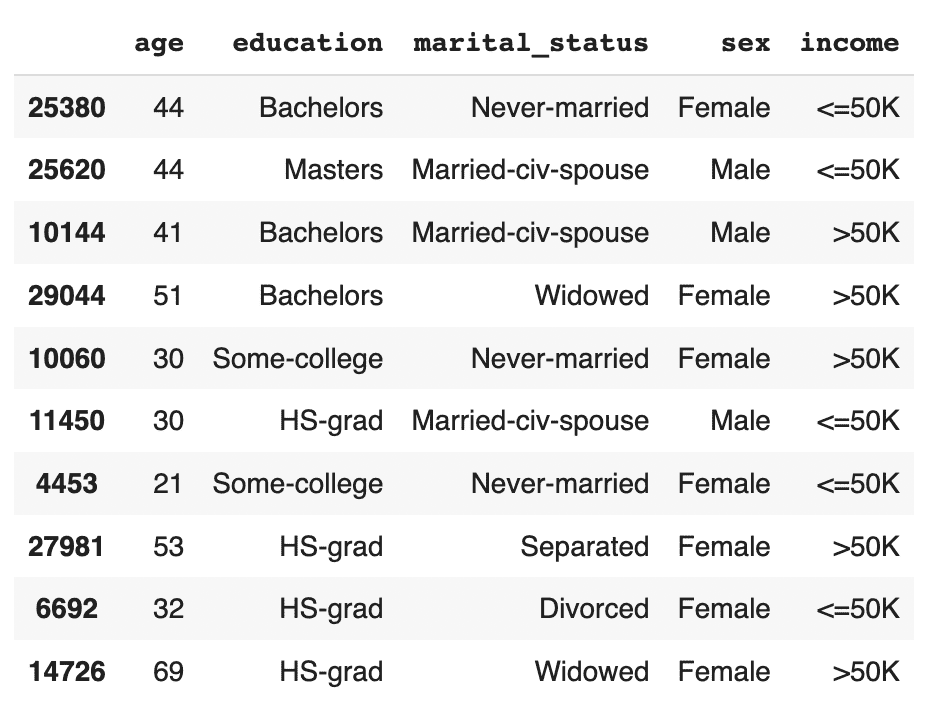

print(f"read synthetic data with {syn.shape[0]:,} records and {syn.shape[1]:,} attributes")Let's now repeat the data exploration steps we performed above with the original, imbalanced dataset. First, let’s display 10 randomly sampled synthetic records. We'll subset again for legibility. You can run this line multiple times to get different samples.

# sample 10 random records

syn_sub = syn[['age','education','marital_status','sex','income']]

syn_sub.sample(n=10)

This time, you should see that the records are evenly distributed across the two income classes.

Let's now investigate all female doctorates with a high income in the synthetic, rebalanced dataset:

syn_sub[

(syn_sub['income']=='>50K')

& (syn_sub.sex=='Female')

& (syn_sub.education=='Doctorate')

].sample(n=10)

The synthetic data contains a list of realistic, statistically sound female doctorates with a high income. This is great news for our machine learning use case because it means that our ML model will have plenty of data to learn about this particular important subsegment.

Evaluate ML performance using TSTR

Let’s now compare the quality of different rebalancing methods by training a machine learning model on the rebalanced data and evaluating the predictive performance of the resulting models.

We will investigate and compare 3 types of rebalancing:

- Random (or “naive”) oversampling

- SMOTE upsampling

- Synthetic rebalancing

The code block below defines the functions that will preprocess your data, train a LightGBM model and evaluate its performance using a holdout dataset. For more detailed descriptions of this code, take a look at the Train-Synthetic-Test-Real tutorial.

# import necessary libraries

import lightgbm as lgb

from lightgbm import early_stopping

from sklearn.model_selection import train_test_split

from sklearn.metrics import roc_auc_score, f1_score

import seaborn as sns

import matplotlib.pyplot as plt

# define target column and value

target_col = 'income'

target_val = '>50K'

# define preprocessing function

def prepare_xy(df: pd.DataFrame):

y = (df[target_col]==target_val).astype(int)

str_cols = [

col for col in df.select_dtypes(['object', 'string']).columns if col != target_col

]

for col in str_cols:

df[col] = pd.Categorical(df[col])

cat_cols = [

col for col in df.select_dtypes('category').columns if col != target_col

]

num_cols = [

col for col in df.select_dtypes('number').columns if col != target_col

]

for col in num_cols:

df[col] = df[col].astype('float')

X = df[cat_cols + num_cols]

return X, y

# define training function

def train_model(X, y):

cat_cols = list(X.select_dtypes('category').columns)

X_trn, X_val, y_trn, y_val = train_test_split(X, y, test_size=0.2, random_state=1)

ds_trn = lgb.Dataset(

X_trn,

label=y_trn,

categorical_feature=cat_cols,

free_raw_data=False

)

ds_val = lgb.Dataset(

X_val,

label=y_val,

categorical_feature=cat_cols,

free_raw_data=False

)

model = lgb.train(

params={

'verbose': -1,

'metric': 'auc',

'objective': 'binary'

},

train_set=ds_trn,

valid_sets=[ds_val],

callbacks=[early_stopping(5)],

)

return model

# define evaluation function

def evaluate_model(model, hol):

X_hol, y_hol = prepare_xy(hol)

probs = model.predict(X_hol)

preds = (probs >= 0.5).astype(int)

auc = roc_auc_score(y_hol, probs)

f1 = f1_score(y_hol, probs>0.5, average='macro')

probs_df = pd.concat([

pd.Series(probs, name='probability').reset_index(drop=True),

pd.Series(y_hol, name=target_col).reset_index(drop=True)

], axis=1)

sns.displot(

data=probs_df,

x='probability',

hue=target_col,

bins=20,

multiple="stack"

)

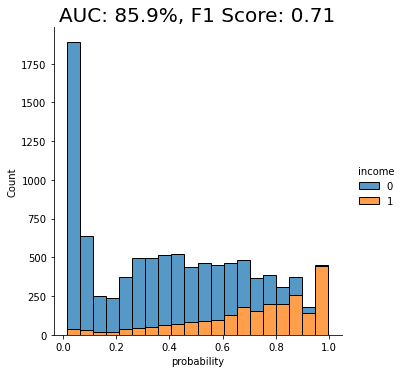

plt.title(f"AUC: {auc:.1%}, F1 Score: {f1:.2f}", fontsize = 20)

plt.show()

return auc

# create holdout dataset

df_hol = pd.read_csv(f'{repo}/census-holdout.csv')

df_hol_min = df_hol.loc[df_hol['income']=='>50K']

print(f"Holdout data consists of {df_hol.shape[0]:,} records",

f"with {df_hol_min.shape[0]:,} samples from the minority class")ML performance of imbalanced dataset

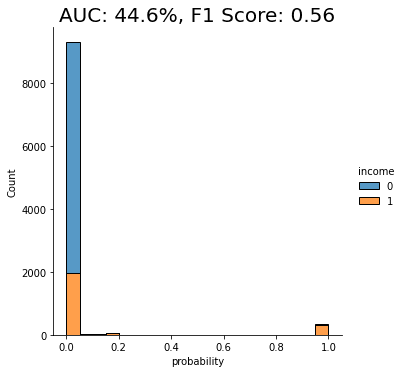

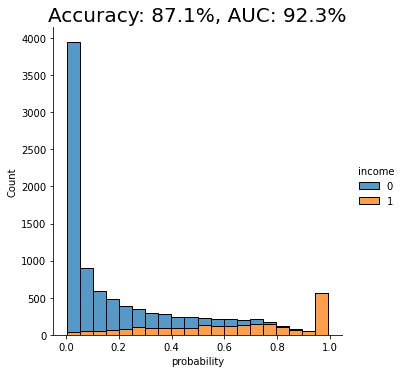

Let’s now train a LightGBM model on the original, heavily imbalanced dataset and evaluate its predictive performance. This will give us a baseline against which we can compare the performance of the different rebalanced datasets.

X_trn, y_trn = prepare_xy(trn)

model_trn = train_model(X_trn, y_trn)

auc_trn = evaluate_model(model_trn, df_hol)

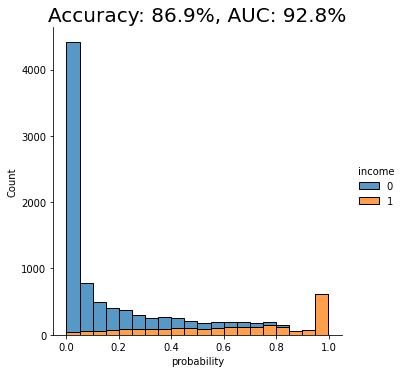

With an AUC of about 50%, the model trained on the imbalanced dataset is just as good as a flip of a coin, or, in other words, not worth very much at all. The downstream LightGBM model is not able to learn any signal due to the low number of minority-class samples.

Let’s see if we can improve this using rebalancing.

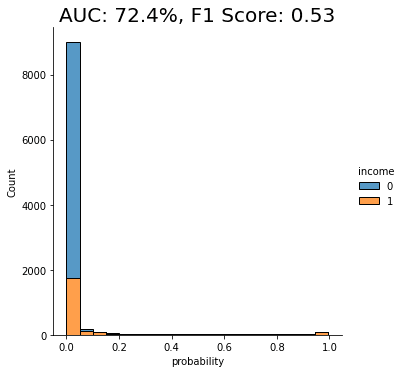

Naive rebalancing

First, let’s rebalance the dataset using the random oversampling method, also known as “naive rebalancing”. This method simply takes the minority class records and copies them to increase their quantity. This increases the number of records of the minority class but does not increase the statistical diversity. We will use the imblearn library to perform this step, feel free to check out their documentation for more context.

The code block performs the naive rebalancing, trains a LightGBM model using the rebalanced dataset and evaluates its predictive performance:

from imblearn.over_sampling import RandomOverSampler

X_trn, y_trn = prepare_xy(trn)

sm = RandomOverSampler(random_state=1)

X_trn_up, y_trn_up = sm.fit_resample(X_trn, y_trn)

model_trn_up = train_model(X_trn_up, y_trn_up)

auc_trn_up = evaluate_model(model_trn_up, df_hol)

We see a clear improvement in predictive performance, with an AUC score of around 70%. This is better than the baseline model trained on the imbalanced dataset, but still not great. We see that a significant portion of the “0” class (low-income) is being incorrectly classified as “1” (high-income).

This is not surprising because, as stated above, this rebalancing method just copies the existing minority class records. This increases their quantity but does not add any new statistical information into the model and therefore does not offer the model much data that it can use to learn about minority-class instances that are not present in the training data.

Let’s see if we can improve on this using another rebalancing method.

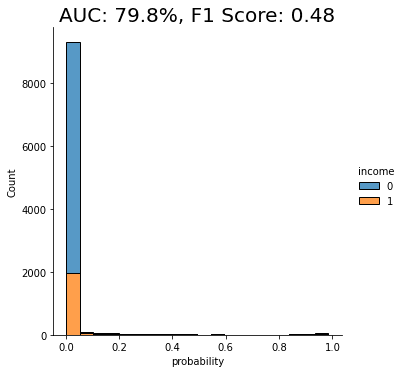

SMOTE rebalancing

SMOTE upsampling is a state-of-the art upsampling method which, unlike the random oversampling seen above, does create novel, statistically representative samples. It does so by interpolating between neighboring samples. It’s important to note, however, that SMOTE upsampling is non-privacy-preserving.

The following code block performs the rebalancing using SMOTE upsampling, trains a LightGBM model on the rebalanced dataset, and evaluates its performance:

from imblearn.over_sampling import SMOTENC

X_trn, y_trn = prepare_xy(trn)

sm = SMOTENC(

categorical_features=X_trn.dtypes=='category',

random_state=1

)

X_trn_smote, y_trn_smote = sm.fit_resample(X_trn, y_trn)

model_trn_smote = train_model(X_trn_smote, y_trn_smote)

auc_trn_smote = evaluate_model(model_trn_smote, df_hol)

We see another clear jump in performance: the SMOTE upsampling boosts the performance of the downstream model to close to 80%. This is clearly an improvement from the random oversampling we saw above, and for this reason, SMOTE is quite commonly used.

Let’s see if we can do even better.

Synthetic rebalancing with MOSTLY AI