Table of Contents

What is data bias?

Data bias is the systematic error introduced into data workflows and machine learning (ML) models due to inaccurate, missing, or incorrect data points which fail to accurately represent the population. Data bias in AI systems can lead to poor decision-making, costly compliance issues as well as drastic societal consequences. Amazon’s gender-biased HR model and Google’s racially-biased hate speech detector are some well-known examples of data bias with significant repercussions in the real world. It is no surprise, then, that 54% of top-level business leaders in the AI industry say they are “very to extremely concerned about data bias”.

With the massive new wave of interest and investment in Large Language Models (LLMs) and Generative AI, it is crucial to understand how data bias can affect the quality of these applications and the strategies you can use to mitigate this problem.

In this article, we will dive into the nuances of data bias. You will learn all about the different types of data bias, explore real-world examples involving LLMs and Generative AI applications, and learn about effective strategies for mitigation and the crucial role of synthetic data.

Data bias types and examples

There are many different types of data bias that you will want to watch out for in your LLM or Generative AI projects. This comprehensive Wikipedia list contains over 100 different types, each covering a very particular instance of biased data. For this discussion, we will focus on 5 types of data bias that are highly relevant to LLMs and Generative AI applications.

- Selection bias

- Automation bias

- Temporal bias

- Implicit bias

- Social bias

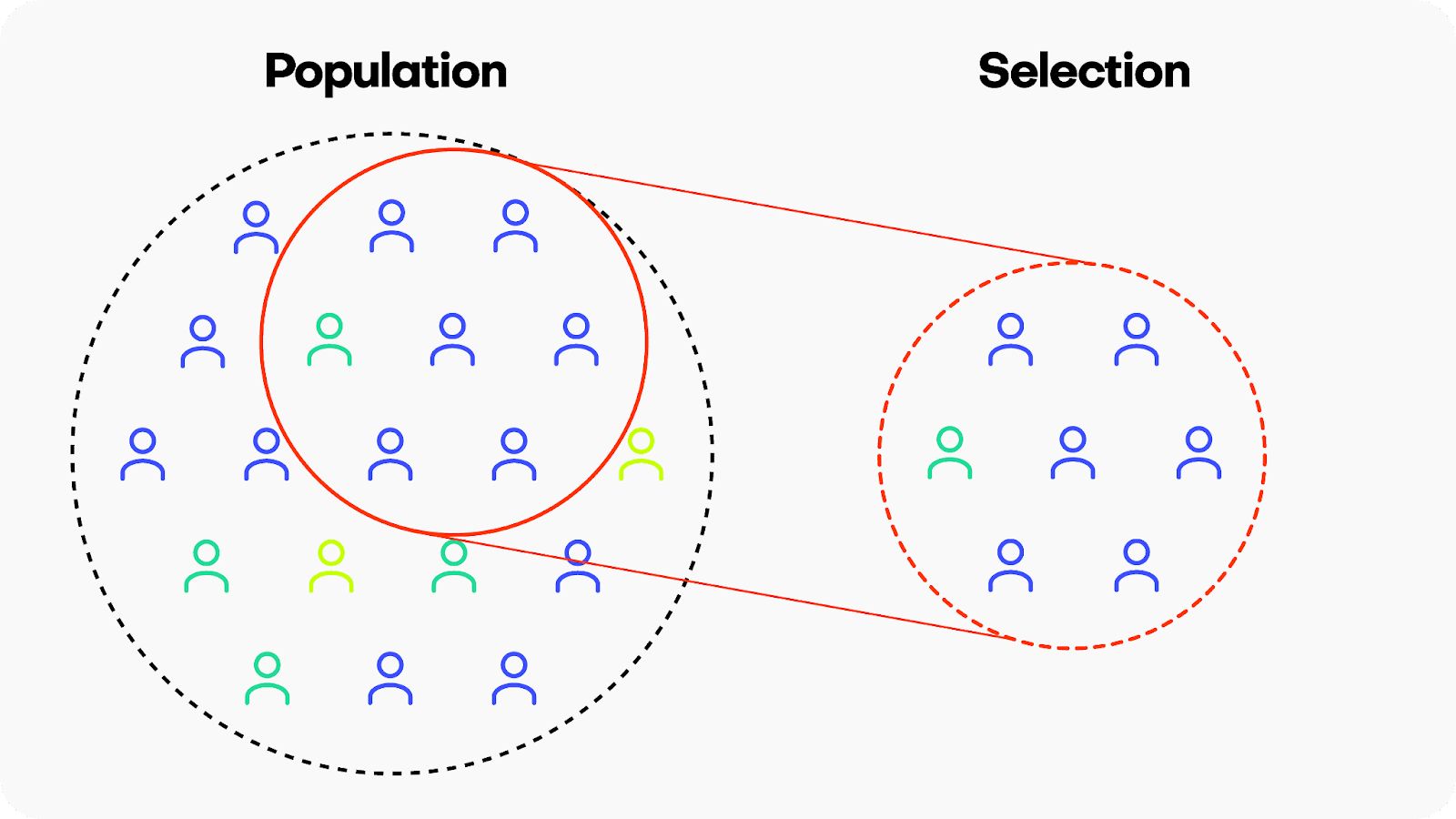

Selection bias

Selection bias occurs when the data used for training a machine learning model is not representative of the population it is intended to generalize to. This means that certain groups or types of data are either overrepresented or underrepresented, leading the model to learn patterns that may not accurately reflect the broader population. There are many different kinds of selection bias, such as sampling bias, participation bias and coverage bias.

Example: Google’s hate-speech detection algorithm Perspective is reported to exhibit bias against black American speech patterns, among other groups. Because the training data did not include sufficient examples of the linguistic patterns typical of the black American community, the model ended up flagging common slang used by black Americans as toxic. Leading generative AI companies like OpenAI, Anthropic and others are using Perspective daily at massive scale to determine the toxicity of their LLMs, potentially perpetuating these biased predictions.

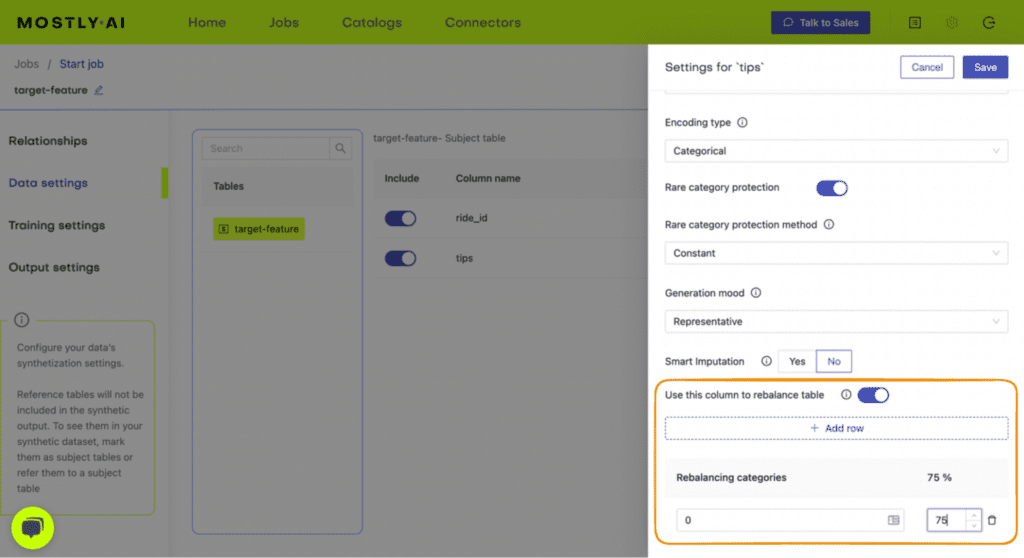

Solution: Invest in high-quality, diverse data sources. When your data still has missing values or imbalanced categories, consider using synthetic data with rebalancing and smart imputation methods.

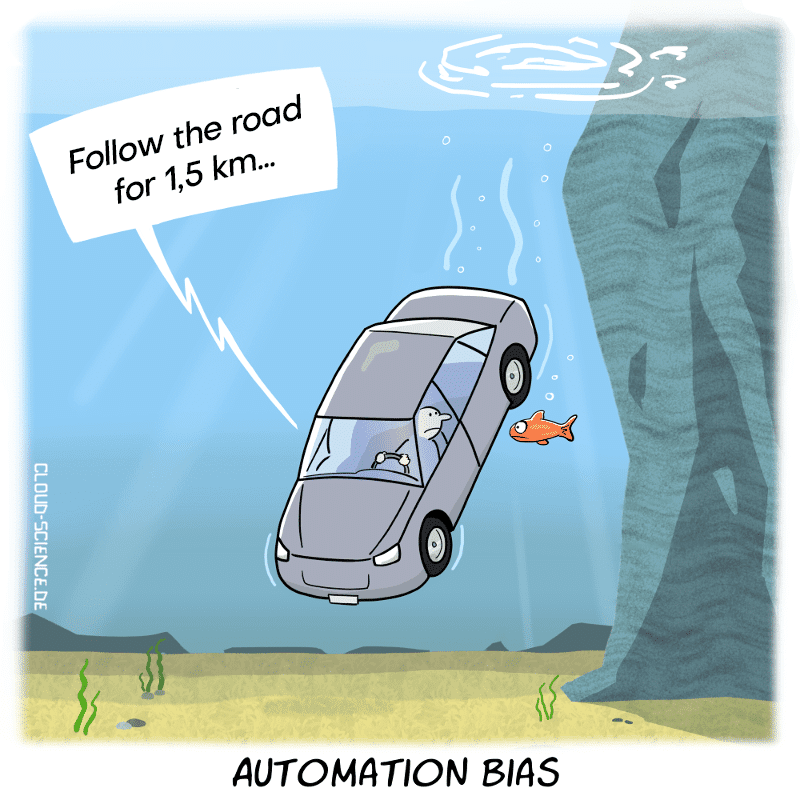

Automation bias

Automation bias is the tendency to favor results generated by automated systems over those generated by non-automated systems, irrespective of the relative quality of their outputs. This is becoming an increasingly relevant type of bias to watch out for as people, including top-level business leaders, may rush to implement automatically generated AI applications with the underlying assumption that simply because these applications use the latest, most popular tech their output will be inherently more trustworthy or performant.

Example: In a somewhat ironic overlap of generative technologies, a 2023 study found that some Mechanical Turk workers were using LLMs to generate the data which they were being paid to generate themselves. Later studies have since shown that training generative models on generated data can create a negative loop, also called “the curse of recursion”, which can significantly reduce output quality.

Solution: Include human supervision safeguards in any mission-critical AI application.

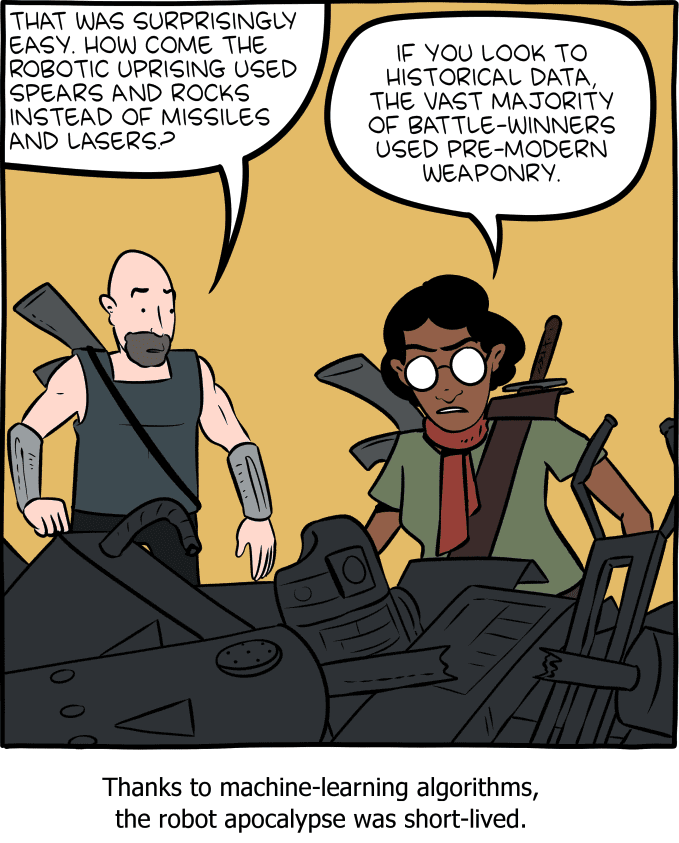

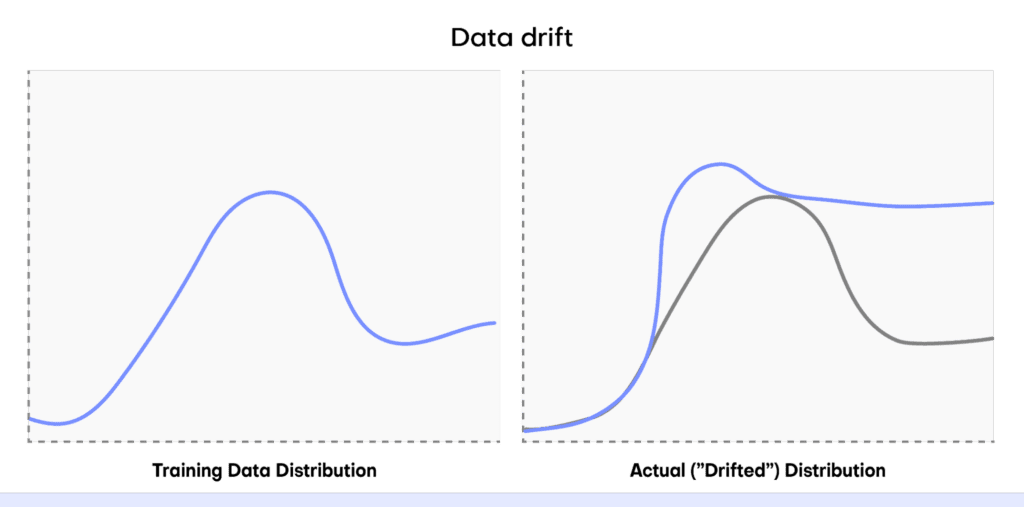

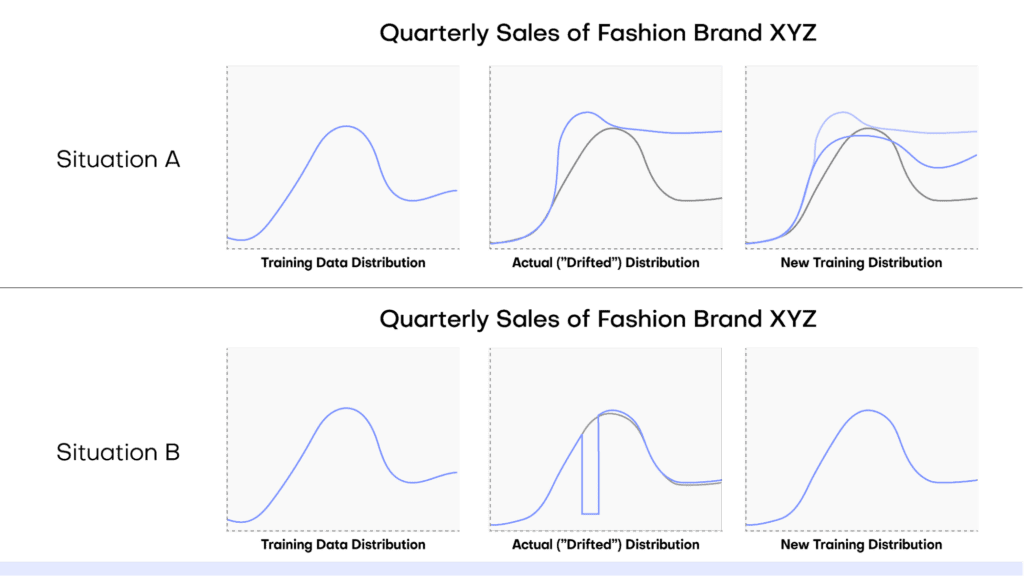

Temporal or historical bias

Temporal or historical bias arises when the training data is not representative of the current context in terms of time. Imagine a language model trained on a dataset from a specific time period, adopting outdated language or perspectives. This temporal bias can limit the model's ability to generate content that aligns with current information.

Example: ChatGPT’s long-standing September 2021 cut-off date is a clear example of a temporal bias that we have probably all encountered. Until recently, the LLM could not access training data after this date, severely limiting its applicability for use cases that required up-to-date data. Fortunately, in most cases the LLM was aware of its own bias and communicated it clearly with responses like "'I'm sorry, but I cannot provide real-time information".

Solution: Invest in high-quality data, up-to-date data sources. If you are still lacking data records, it may be possible to simulate them using synthetic data’s conditional generation feature.

Implicit bias

Implicit bias can happen when the humans involved in ML building or testing operate based on unconscious assumptions or preexisting judgments that do not accurately match the real world. Implicit biases are typically ingrained in individuals based on societal and cultural influences and can impact perceptions and behaviors without conscious awareness. Implicit biases operate involuntarily and can influence judgments and actions even when an individual consciously holds no biased beliefs. Because of the implied nature of this bias, it is a particularly challenging type of bias to address.

Example: LLMs and generative AI applications require huge amounts of labeled data. This labeling or annotation is largely done by human workers. These workers may operate with implicit biases. For example, in assigning a toxicity score for specific language prompts, a human annotation worker may assign an overly cautious or liberal score depending on personal experiences related to that specific word or phrase.

Solution: Invest in fairness and data bias training for your team. Whenever possible, involve multiple, diverse individuals in important data processing tasks to balance possible implicit biases.

Social bias

Social bias occurs when machine learning models reinforce existing social stereotypes present in the training data, such as negative racial, gender or age-dependent biases. Generative AI applications can inadvertently perpetuate biased views if their training data includes data that reflects societal prejudices. This can result in responses that reinforce harmful societal narratives. As ex-Google researcher Timit Gebru and colleagues cautioned in their 2021 paper: “In accepting large amounts of web text as ‘representative’ of ‘all’ of humanity [LLMs] risk perpetuating dominant viewpoints, increasing power imbalances and further reifying inequality.”

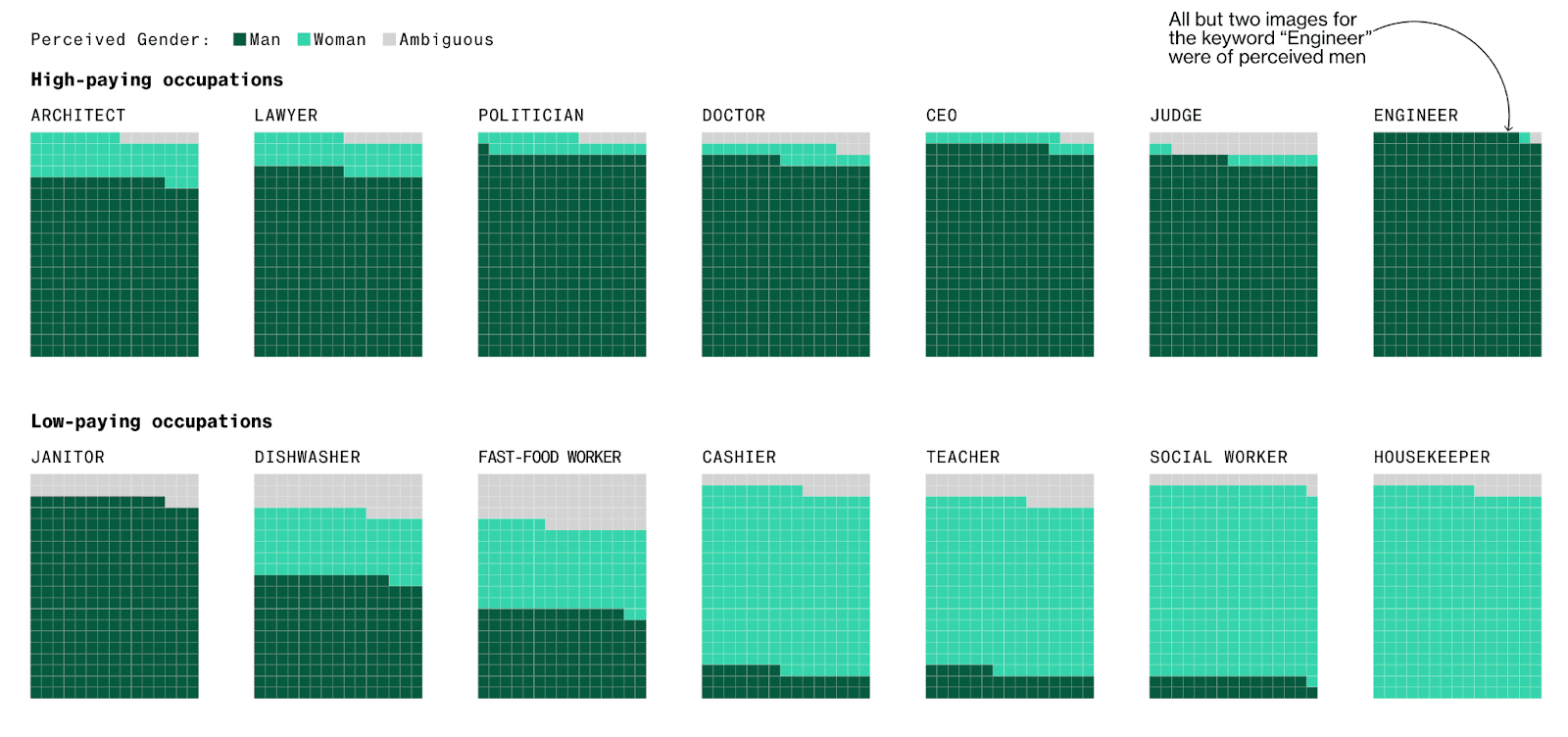

Example: Stable Diffusion and other generative AI models have been reported to exhibit socially biased behavior due to the quality of their training datasets. One study reported that the platform tends to underrepresent women in images of high-performing occupations and overrepresent darker-skinned people in images of low-wage workers and criminals. Part of the problem here seems to be the size of the training data. Generative AI models require massive amounts of training data and in order to achieve this data volume, the selection controls are often relaxed leading to poorer quality (i.e. more biased) input data.

Solution: Invest in high-quality, diverse data sources as well as data bias training for your team. It may also be possible to build automated safeguarding checks that will spot social bias in model outputs.

Perhaps more than any other type of data bias, social bias shows us the importance of the quality of the data you start with. You may build the perfect generative AI model but if your training data contains implicit social biases (simply because these biases existed in the subjects who generated the data) then your final model will most likely reproduce or even amplify these biases. For this reason, it’s crucial to invest in high-quality training data that is fair and unbiased.

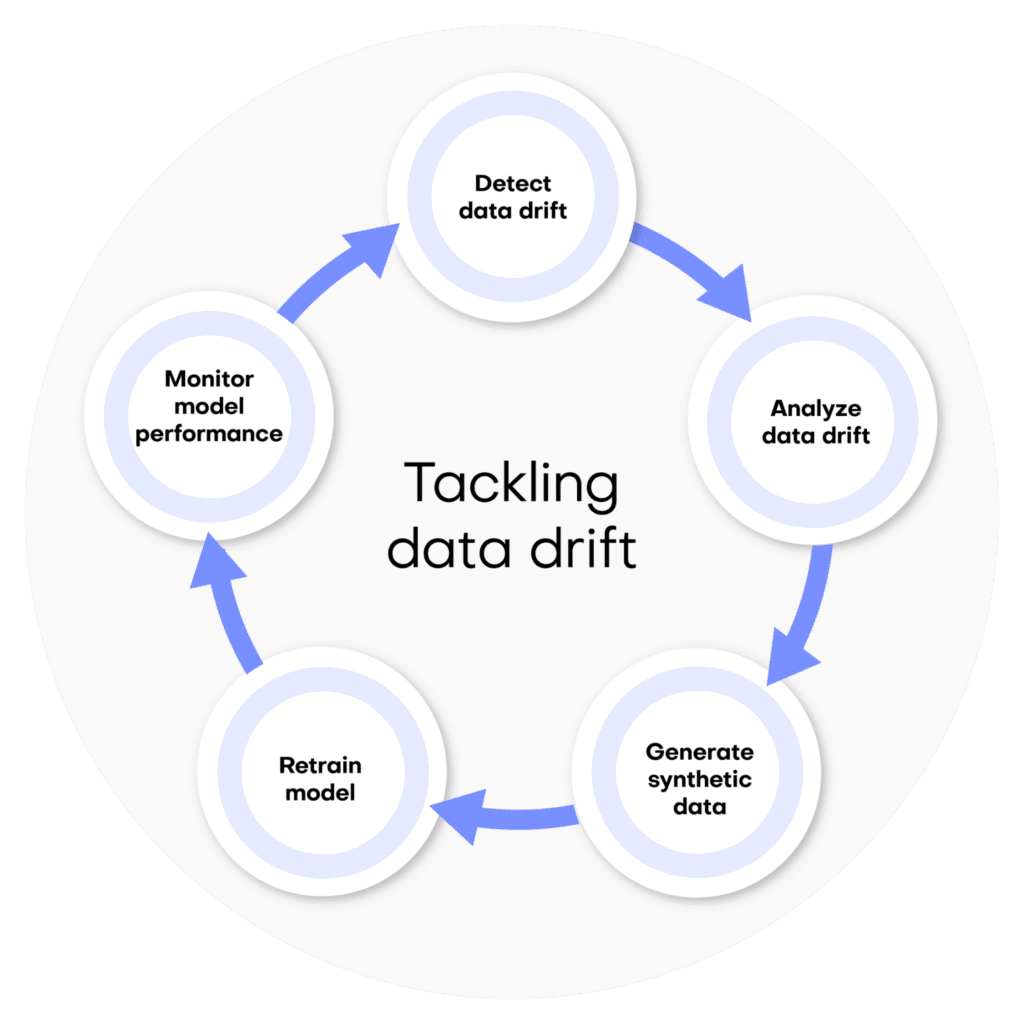

Strategies for reducing data bias

Recognizing and acknowledging data bias is of course just the first step. Once you have identified data bias in your project you will also want to take concrete action to mitigate it. Sometimes, identifying data bias while your project is ongoing is already too late; for this reason it’s important to consider preventive strategies as well.

To mitigate data bias in the complex landscape of AI applications, consider:

- Investing in dataset diversity and data collection quality assurances.

- Performing regular algorithmic auditing to identify and rectify bias.

- Including humans in the loop for supervision.

- Investing in model explainability and transparency.

Let’s dive into more detail for each strategy.

Diverse dataset curation

There is no way around the old adage: “garbage in, garbage out”. Because of this, the cornerstone of combating bias is curating high-quality, diverse datasets. In the case of LLMs, this involves exposing the model to a wide array of linguistic styles, contexts, and cultural nuances. For Generative AI models more generally, it means ensuring to the best of your ability that training data sets are sourced from as varied a population as possible and actively working to identify and rectify any implicit social biases. If, after this, your data still has missing values or imbalanced categories, consider using synthetic data with rebalancing and smart imputation methods.

Algorithmic auditing

Regular audits of machine learning algorithms are crucial for identifying and rectifying bias. For both LLMs and generative AI applications in general, auditing involves continuous monitoring of model outputs for potential biases and adjusting the training data and/or the model’s architecture accordingly.

Humans in the loop

When combating data bias it is ironically easy to fall into the trap of automation bias by letting programs do all the work and trusting them blindly to recognize bias when it occurs. This is the core of the problem with the widespread use of Google’s Perspective to avoid toxic LLM output. Because the bias-detector in this case is not fool-proof, its application is not straightforward. This is why the builders of Perspective strongly recommend continuing to include human supervision in the loop.

Explainability and transparency

Some degree of data bias is unavoidable. For this reason, it is crucial to invest in the explainability and transparency of your LLMs and Generative AI models. For LLMs, providing explanations and sources for generated text can offer insights into the model's decision-making process. When done right, model explainability and transparency will give users more context on the generated output and allow them to understand and potentially contest biased outputs.

Synthetic data reduces data bias

Synthetic data can help you mitigate data bias. During the data synthesization process, it is possible to introduce different kinds of constraints, such as fairness. The result is fair synthetic data, without any bias. You can also use synthetic data to improve model explainability and transparency by removing privacy concerns and significantly expanding the group of users you can share the training data with.

More specifically, you can mitigate the following types of data bias using synthetic data:

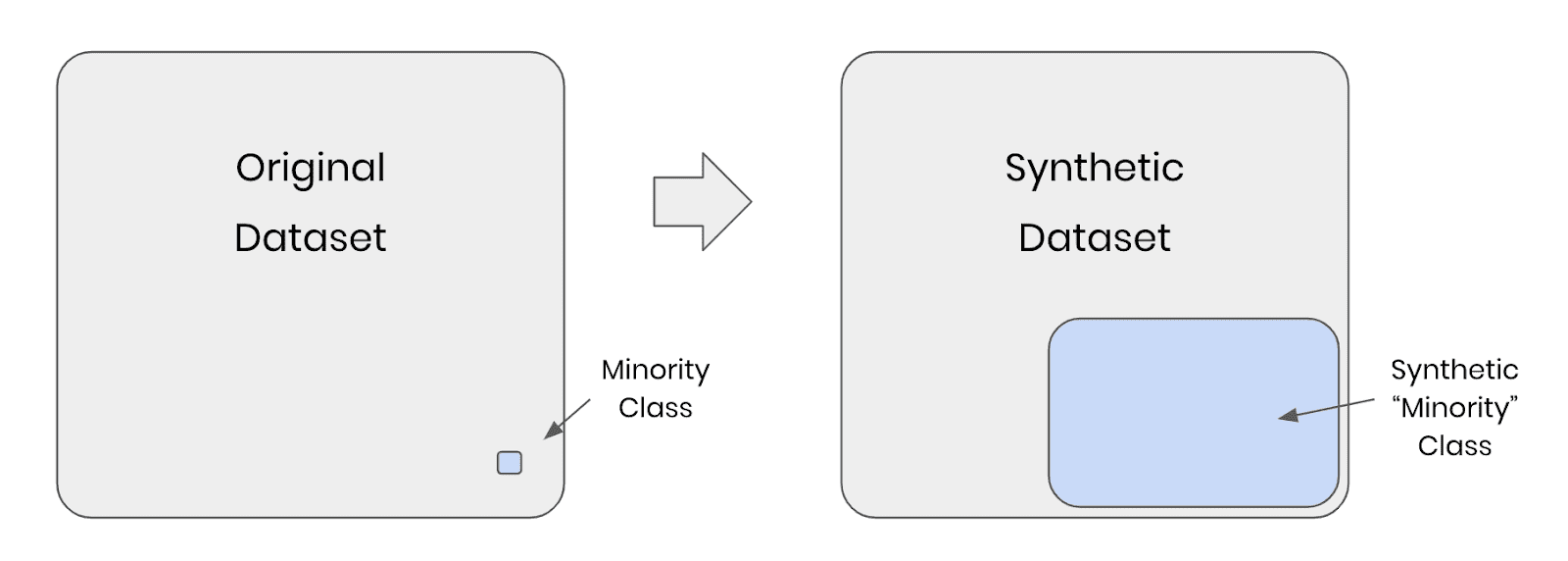

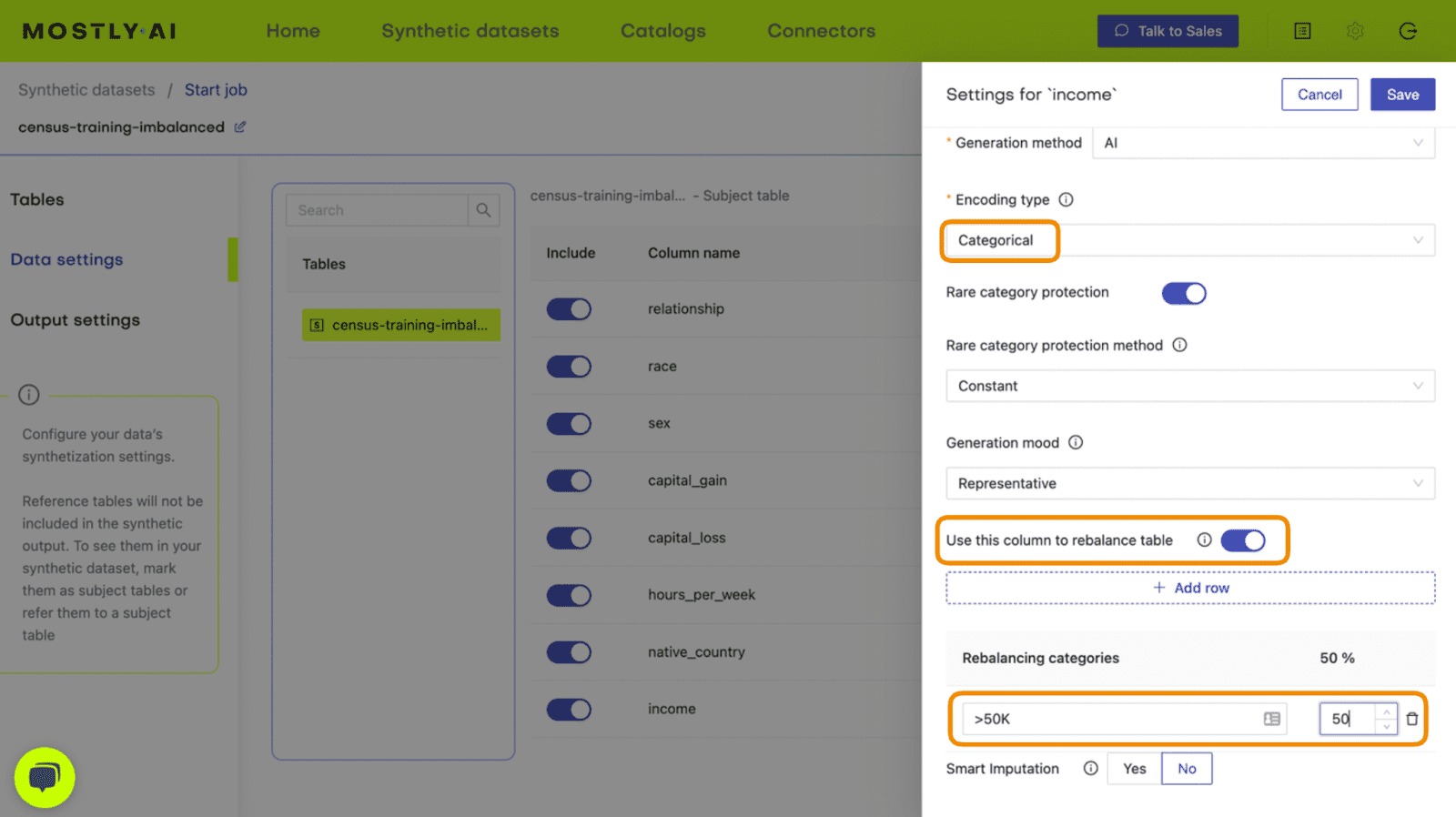

Selection Bias

If you are dealing with imbalanced datasets due to selection bias, you can use synthetic data to rebalance your datasets to include more samples of the minority population. For example, you can use this feature to provide more nuanced responses for polarizing topics (e.g. book reviews, which generally tend to be overly positive or negative) to train your LLM app.

Social Bias

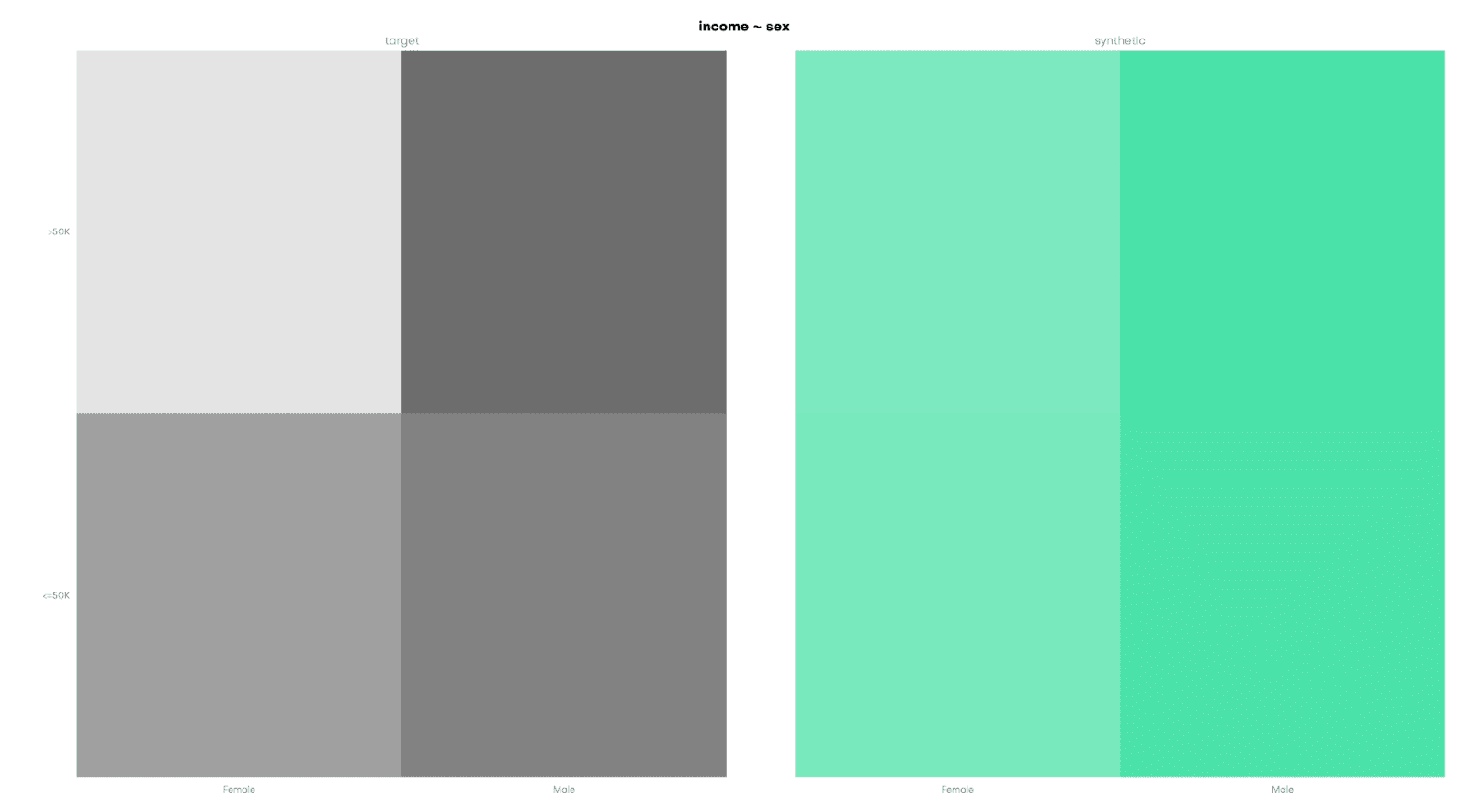

Conditional data generation enables you to take a gender- or racially-biased dataset and simulate what it would look like without the biases included. For example, you can simulate what the UCI Adult Income dataset would look like without a gender income gap. This can be a powerful tool in combating social biases.

Reporting or Participation Bias

If you are dealing with missing data points due to reporting or participation bias, you can use smart imputation to impute the missing values in a high-quality, statistically representative manner. This allows you to avoid data loss by allowing you to use all the records available. Using MOSTLY AI’s Smart Imputation feature it is possible to recover the original population distribution which means you can continue to use the dataset as if there were no missing values to begin with.

Mitigating data bias in LLM and generative AI applications

Data bias is a pervasive and multi-faceted problem that can have significant negative impacts if not dealt with appropriately. The real-world examples you have seen in this article show clearly that even the biggest players in the field of AI struggle to get this right. With tightening government regulations and increasing social pressure to ensure fair and responsible AI applications, the urgency to identify and rectify data bias at all points of the LLM and Generative AI lifecycle is only becoming stronger.

In this article you have learned how to recognise the different kinds of data bias that can affect your LLM or Generative AI applications. You have explored the impact of data bias through real-world examples and learned about some of the most effective strategies for mitigating data bias. You have also seen the role synthetic data can play in addressing this problem.

If you’d like to put this new knowledge to use directly, take a look at our hands-on coding tutorials on conditional data generation, rebalancing, and smart imputation. MOSTLY AI's free, state-of-the-art synthetic data generator allows you to try these advanced data augmentation techniques without the need to code.

For a more in-depth study on the importance of fairness in AI and the role that synthetic data can play, read our series on fair synthetic data.

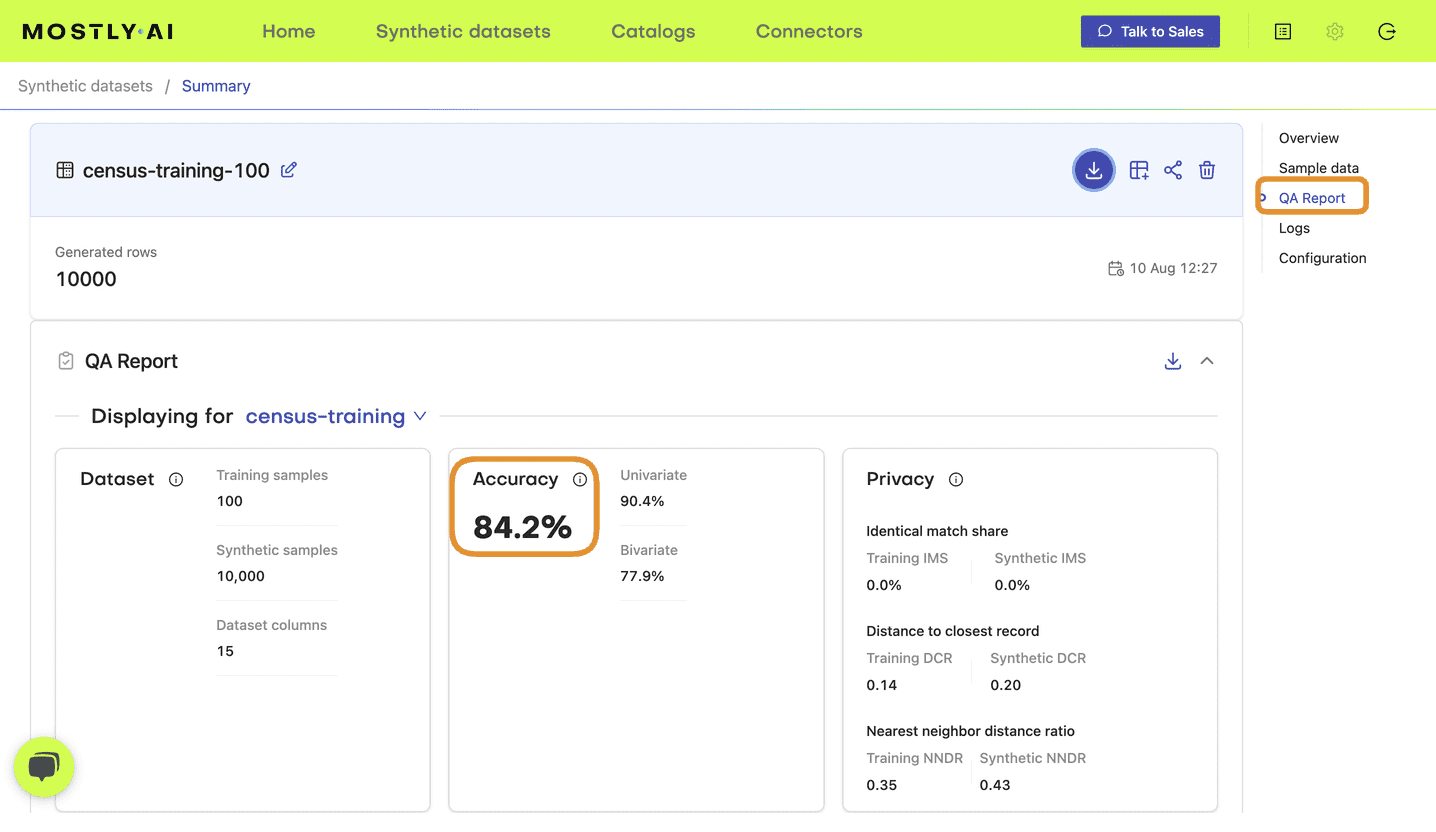

In this tutorial, you will learn the key concepts behind MOSTLY AI’s synthetic data Quality Assurance (QA) framework. This will enable you to efficiently and reliably assess the quality of your generated synthetic datasets. It will also give you the skills to confidently explain the quality metrics to any interested stakeholders.

Using the code in this tutorial, you will replicate key parts of both the accuracy and privacy metrics that you will find in any MOSTLY AI QA Report. For a full-fledged exploration of the topic including a detailed mathematical explanation, see our peer-reviewed journal paper as well as the accompanying benchmarking study.

The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

QA reports for synthetic data sets

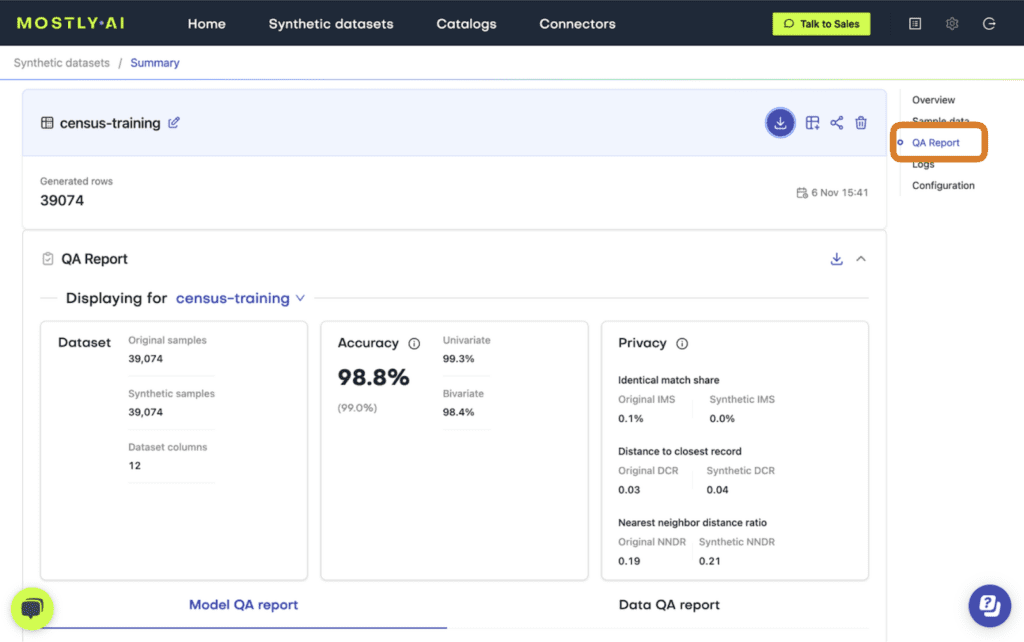

If you have run any synthetic data generation jobs with MOSTLY AI, chances are high that you’ve already encountered the QA Report. To access it, click on any completed synthesization job and select the “QA Report” tab:

Fig 1 - Click on a completed synthesization job.

Fig 2 - Select the “QA Report” tab.

At the top of the QA Report you will find some summary statistics about the dataset as well as the average metrics for accuracy and privacy of the generated dataset. Further down, you can toggle between the Model QA Report and the Data QA Report. The Model QA reports on the accuracy and privacy of the trained Generative AI model. The Data QA, on the other hand, visualizes the distributions not of the underlying model but of the outputted synthetic dataset. If you generate a synthetic dataset with all the default settings enabled, the Model and Data QA Reports should look the same.

Exploring either of the QA reports you will discover various performance metrics, such as univariate and bivariate distributions for each of the columns and well as more detailed privacy metrics. You can use these metrics to precisely evaluate the quality of your synthetic dataset.

So how does MOSTLY AI calculate these quality assurance metrics?

In the following sections you will replicate the accuracy and privacy metrics. The code is almost exactly the code that MOSTLY AI runs under the hood to generate the QA Reports – it has been tweaked only slightly to improve legibility and usability. Working through this code will give you a hands-on insight into how MOSTLY AI evaluates synthetic data quality.

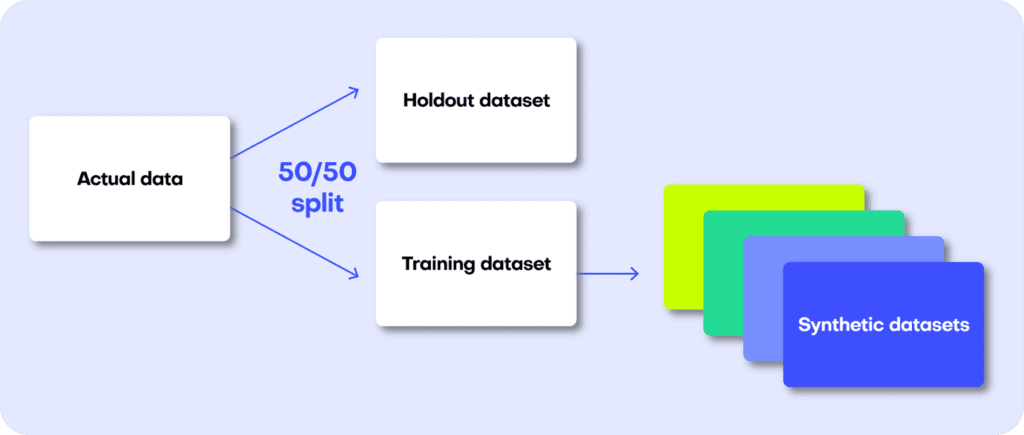

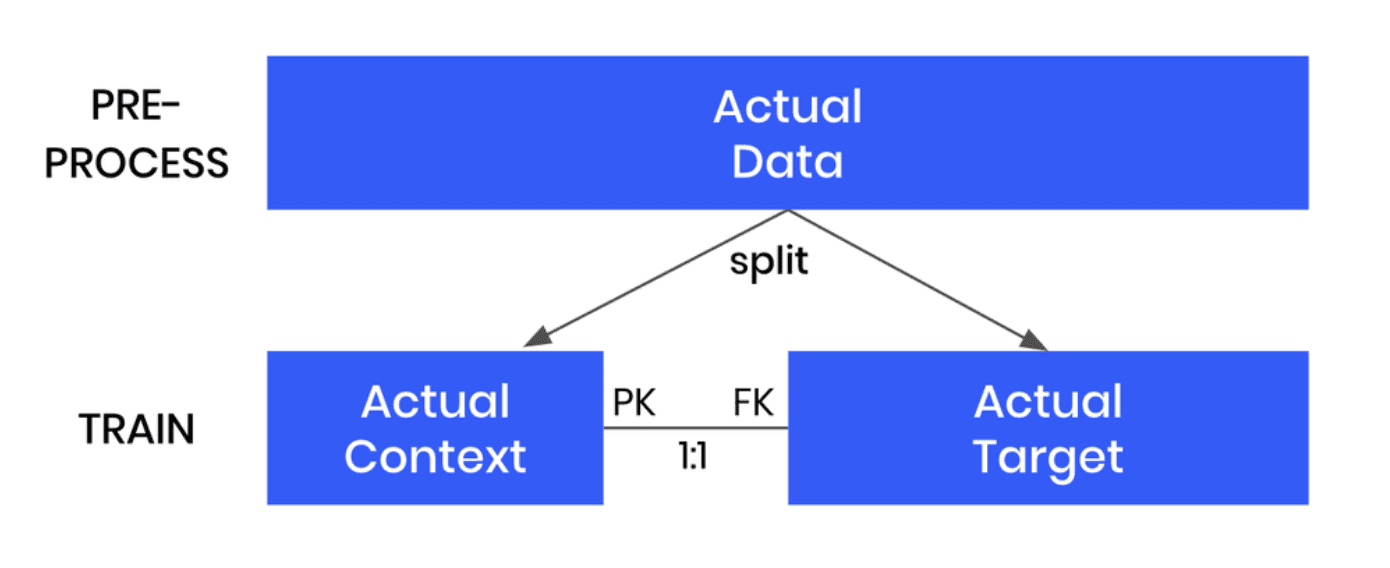

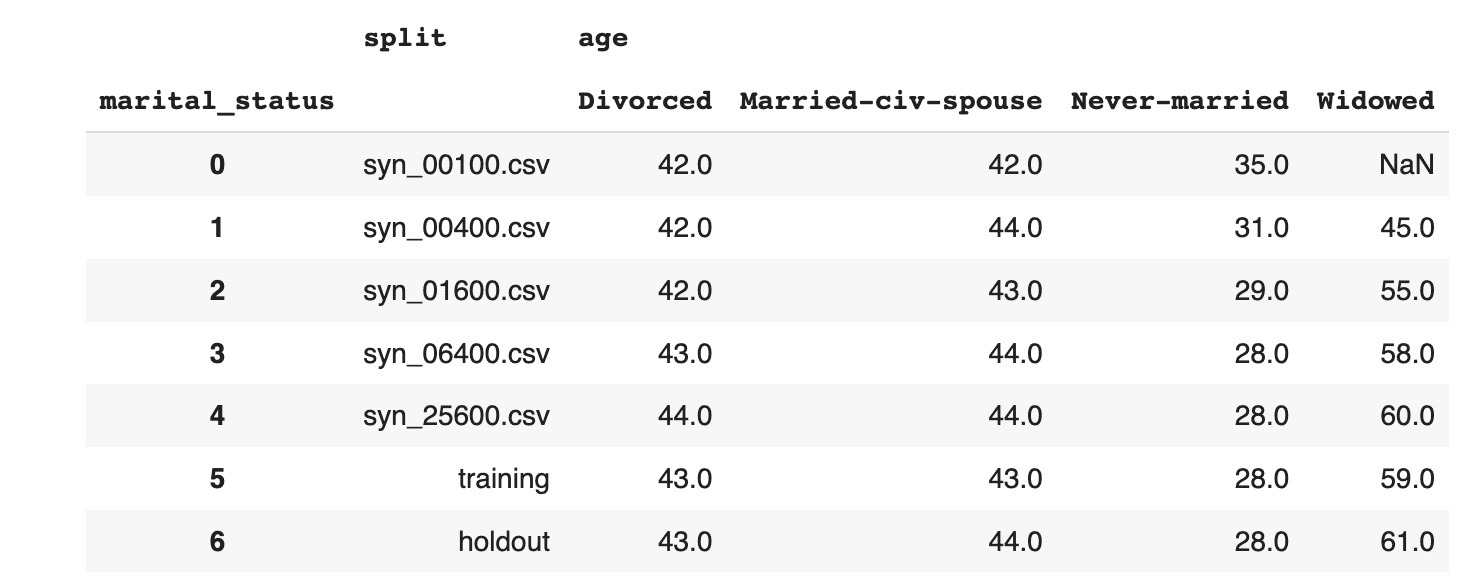

Preprocessing the data

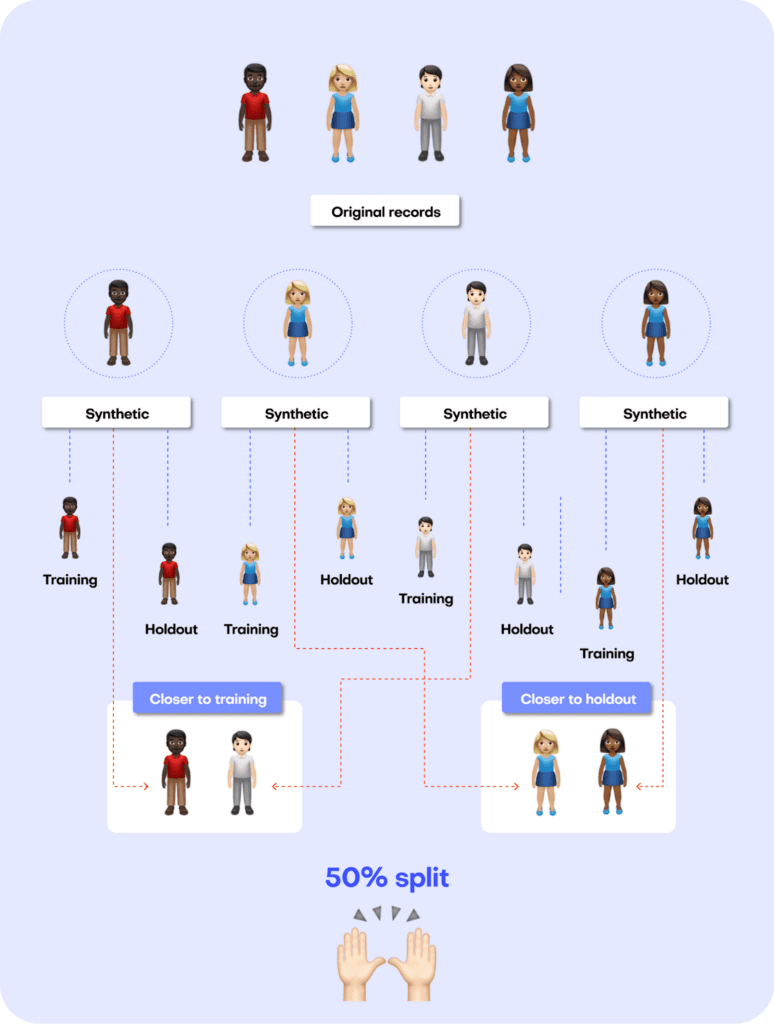

The first step in MOSTLY AI’s synthetic data quality evaluation methodology is to take the original dataset and split it in half to yield two subsets: a training dataset and a holdout dataset. We then use only the training samples (so only 50% of the original dataset) to train our synthesizer and generate synthetic data samples. The holdout samples are never exposed to the synthesis process but are kept aside for evaluation.

Fig 3 - The first step is to split the original dataset in two equal parts and train the synthesizer on only one of the halves.

Distance-based quality metrics for synthetic data generation

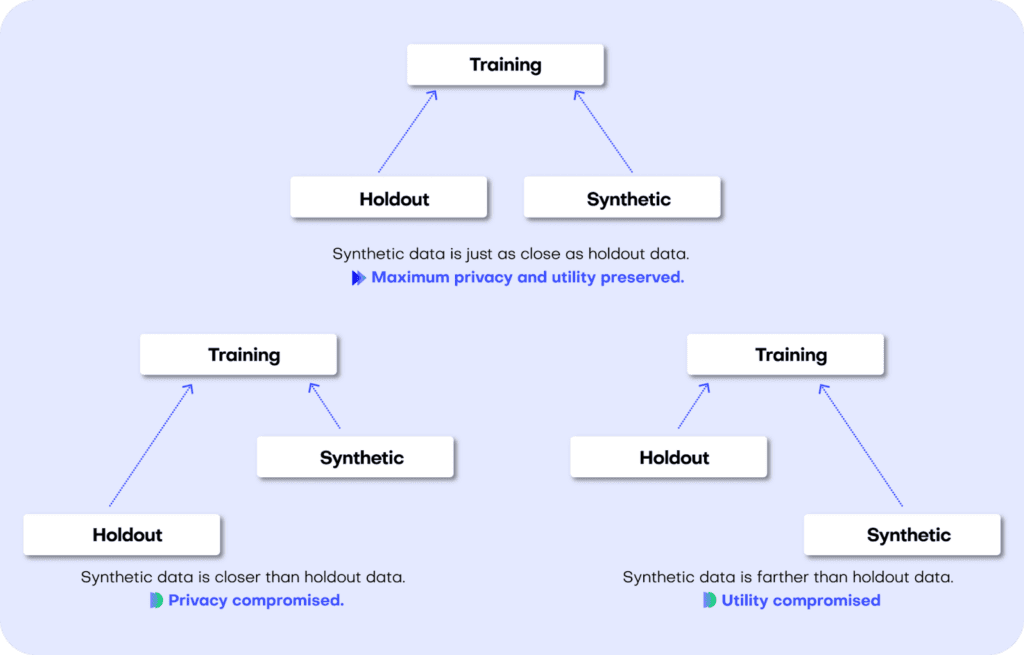

Both the accuracy and privacy metrics are measured in terms of distance. Remember that we split the original dataset into two subsets: a training and a holdout set. Since these are all samples from the same dataset, these two sets will exhibit the same statistics and the same distributions. However, as the split was made at random we can expect a slight difference in the statistical properties of these two datasets. This difference is normal and is due to sampling variance.

The difference (or, to put it mathematically: the distance) between the training and holdout samples will serve us as a reference point: in an ideal scenario, the synthetic data we generate should be no different from the training dataset than the holdout dataset is. Or to put it differently: the distance between the synthetic samples and the training samples should approximate the distance we would expect to occur naturally within the training samples due to sampling variance.

If the synthetic data is significantly closer to the training data than the holdout data, this means that some information specific to the training data has leaked into the synthetic dataset. If the synthetic data is significantly farther from the training data than the holdout data, this means that we have lost information in terms of accuracy or fidelity.

For more context on this distance-based quality evaluation approach, check out our benchmarking study which dives into more detail.

Fig 4 - A perfect synthetic data generator creates data samples that are just as different from the training data as the holdout data. If this is not the case, we are compromising on either privacy or utility.

Let’s jump into replicating the metrics for both accuracy and privacy 👇

Synthetic data accuracy

The accuracy of MOSTLY AI’s synthetic datasets is measured as the total variational distance between the empirical marginal distributions. It is calculated by treating all the variables in the dataset as categoricals (by binning any numerical features) and then measuring the sum of all deviations between the empirical marginal distributions.

The code below performs the calculation for all univariate and bivariate distributions and then averages across to determine the simple summary statistics you see in the QA Report.

First things first: let’s access the data. You can fetch both the original and the synthetic datasets directly from the Github repo:

repo = (

"https://github.com/mostly-ai/mostly-tutorials/raw/dev/quality-assurance"

)

tgt = pd.read_parquet(f"{repo}/census-training.parquet")

print(

f"fetched original data with {tgt.shape[0]:,} records and {tgt.shape[1]} attributes"

)

syn = pd.read_parquet(f"{repo}/census-synthetic.parquet")

print(

f"fetched synthetic data with {syn.shape[0]:,} records and {syn.shape[1]} attributes"

)fetched original data with 39,074 records and 12 attributes

fetched synthetic data with 39,074 records and 12 attributes

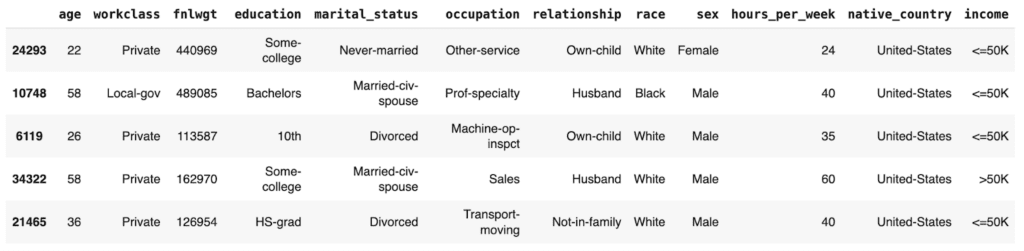

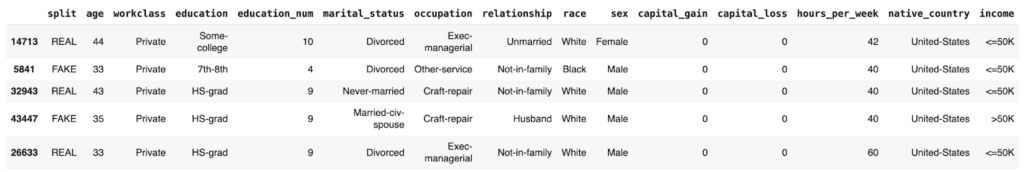

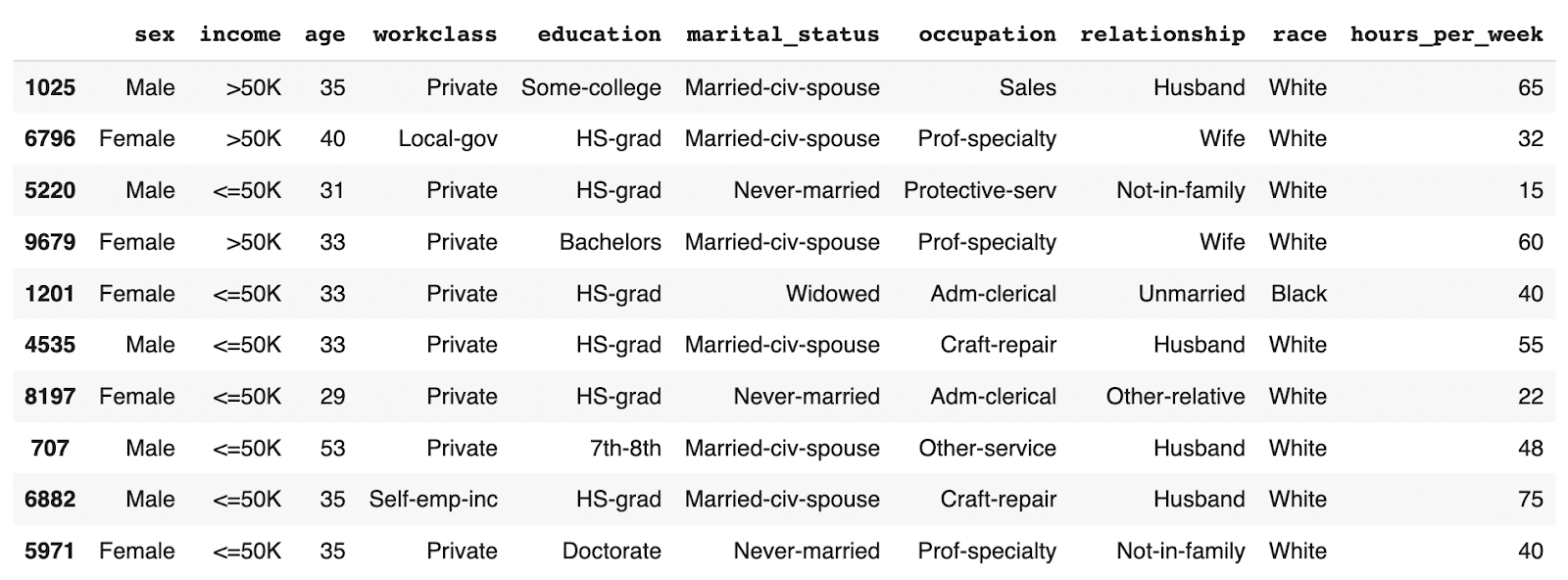

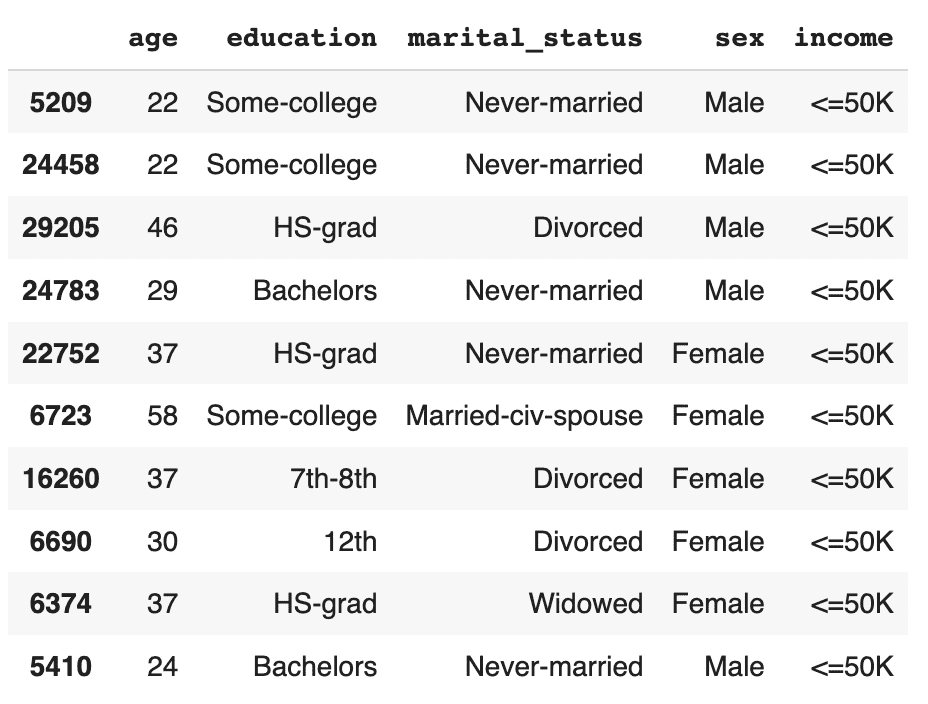

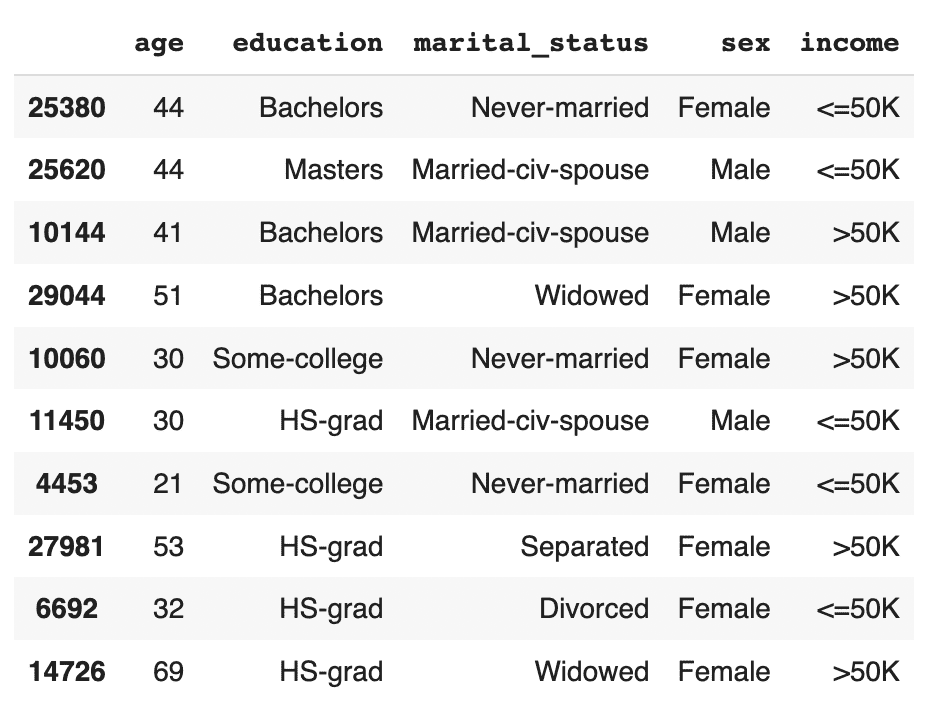

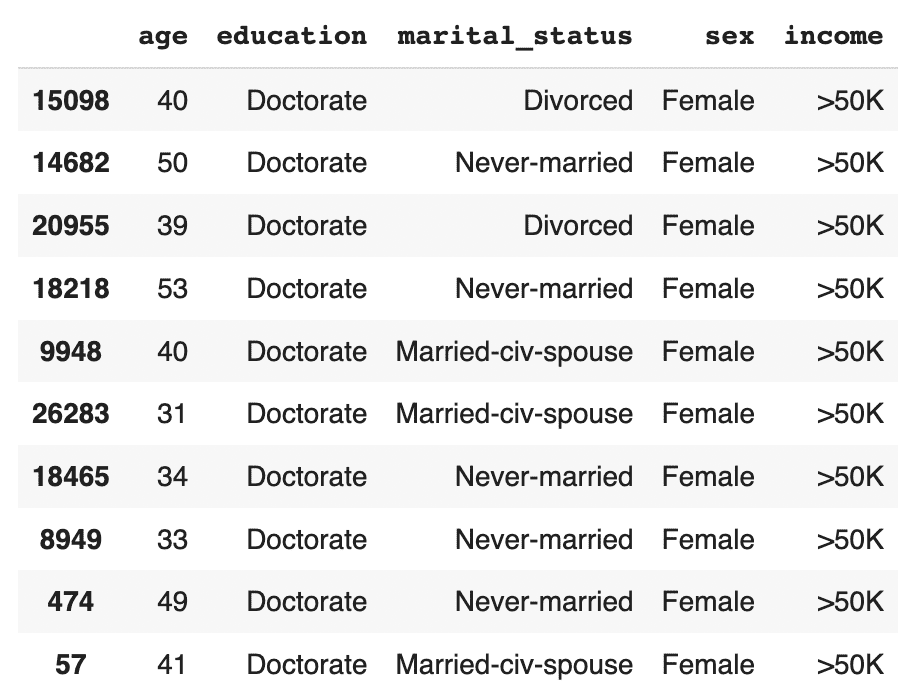

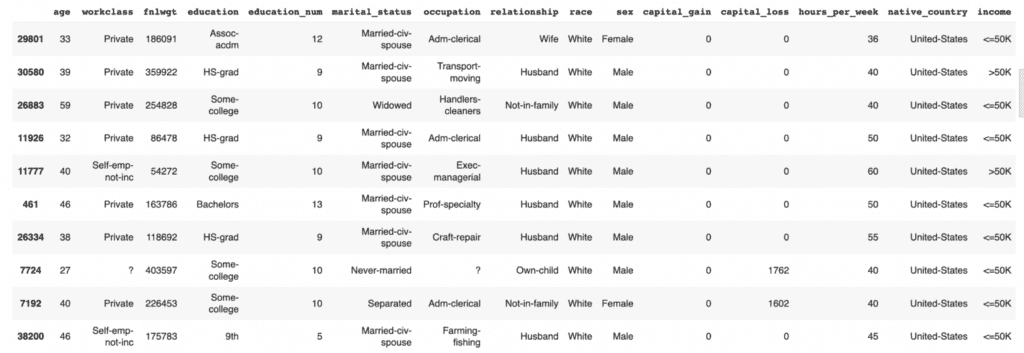

We are working with a version of the UCI Adult Income dataset. This dataset has just over 39K records and 12 columns. Go ahead and sample 5 random records to get a sense of what the data looks like:

tgt.sample(n=5)

Let’s define a helper function to bin the data in order treat any numerical features as categoricals:

def bin_data(dt1, dt2, bins=10):

dt1 = dt1.copy()

dt2 = dt2.copy()

# quantile binning of numerics

num_cols = dt1.select_dtypes(include="number").columns

cat_cols = dt1.select_dtypes(

include=["object", "category", "string", "bool"]

).columns

for col in num_cols:

# determine breaks based on `dt1`

breaks = dt1[col].quantile(np.linspace(0, 1, bins + 1)).unique()

dt1[col] = pd.cut(dt1[col], bins=breaks, include_lowest=True)

dt2_vals = pd.to_numeric(dt2[col], "coerce")

dt2_bins = pd.cut(dt2_vals, bins=breaks, include_lowest=True)

dt2_bins[dt2_vals < min(breaks)] = "_other_"

dt2_bins[dt2_vals > max(breaks)] = "_other_"

dt2[col] = dt2_bins

# top-C binning of categoricals

for col in cat_cols:

dt1[col] = dt1[col].astype("str")

dt2[col] = dt2[col].astype("str")

# determine top values based on `dt1`

top_vals = dt1[col].value_counts().head(bins).index.tolist()

dt1[col].replace(

np.setdiff1d(dt1[col].unique().tolist(), top_vals),

"_other_",

inplace=True,

)

dt2[col].replace(

np.setdiff1d(dt2[col].unique().tolist(), top_vals),

"_other_",

inplace=True,

)

return dt1, dt2And a second helper function to calculate the univariate and bivariate accuracies:

def calculate_accuracies(dt1_bin, dt2_bin, k=1):

# build grid of all cross-combinations

cols = dt1_bin.columns

interactions = pd.DataFrame(

np.array(np.meshgrid(cols, cols)).reshape(2, len(cols) ** 2).T

)

interactions.columns = ["col1", "col2"]

if k == 1:

interactions = interactions.loc[

(interactions["col1"] == interactions["col2"])

]

elif k == 2:

interactions = interactions.loc[

(interactions["col1"] < interactions["col2"])

]

else:

raise ("k>2 not supported")

results = []

for idx in range(interactions.shape[0]):

row = interactions.iloc[idx]

val1 = (

dt1_bin[row.col1].astype(str) + "|" + dt1_bin[row.col2].astype(str)

)

val2 = (

dt2_bin[row.col1].astype(str) + "|" + dt2_bin[row.col2].astype(str)

)

# calculate empirical marginal distributions (=relative frequencies)

freq1 = val1.value_counts(normalize=True, dropna=False).to_frame(

name="p1"

)

freq2 = val2.value_counts(normalize=True, dropna=False).to_frame(

name="p2"

)

freq = freq1.join(freq2, how="outer").fillna(0.0)

# calculate Total Variation Distance between relative frequencies

tvd = np.sum(np.abs(freq["p1"] - freq["p2"])) / 2

# calculate Accuracy as (100% - TVD)

acc = 1 - tvd

out = pd.DataFrame(

{

"Column": [row.col1],

"Column 2": [row.col2],

"TVD": [tvd],

"Accuracy": [acc],

}

)

results.append(out)

return pd.concat(results)Then go ahead and bin the data. We restrict ourselves to 100K records for efficiency.

# restrict to max 100k records

tgt = tgt.sample(frac=1).head(n=100_000)

syn = syn.sample(frac=1).head(n=100_000)

# bin data

tgt_bin, syn_bin = bin_data(tgt, syn, bins=10)Now you can go ahead and calculate the univariate accuracies for all the columns in the dataset:

# calculate univariate accuracies

acc_uni = calculate_accuracies(tgt_bin, syn_bin, k=1)[['Column', 'Accuracy']]Go ahead and inspect the first 5 columns:

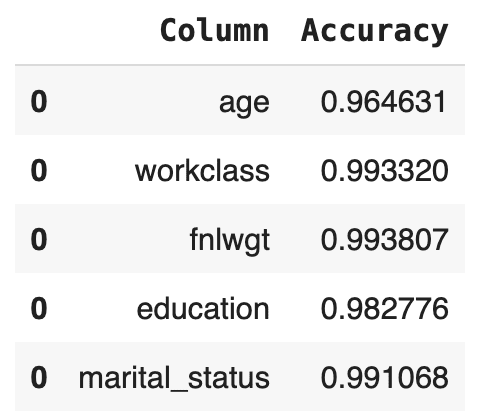

acc_uni.head()

Now let’s calculate the bivariate accuracies as well. This measures how well the relationships between all the sets of two columns are maintained.

# calculate bivariate accuracies

acc_biv = calculate_accuracies(tgt_bin, syn_bin, k=2)[

["Column", "Column 2", "Accuracy"]

]

acc_biv = pd.concat(

[

acc_biv,

acc_biv.rename(columns={"Column": "Column 2", "Column 2": "Column"}),

]

)

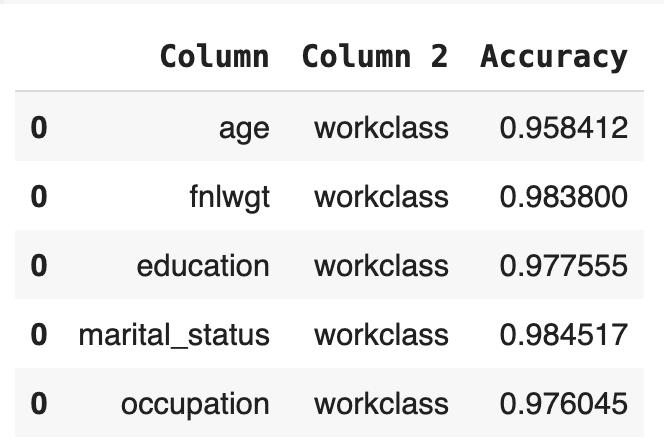

acc_biv.head()

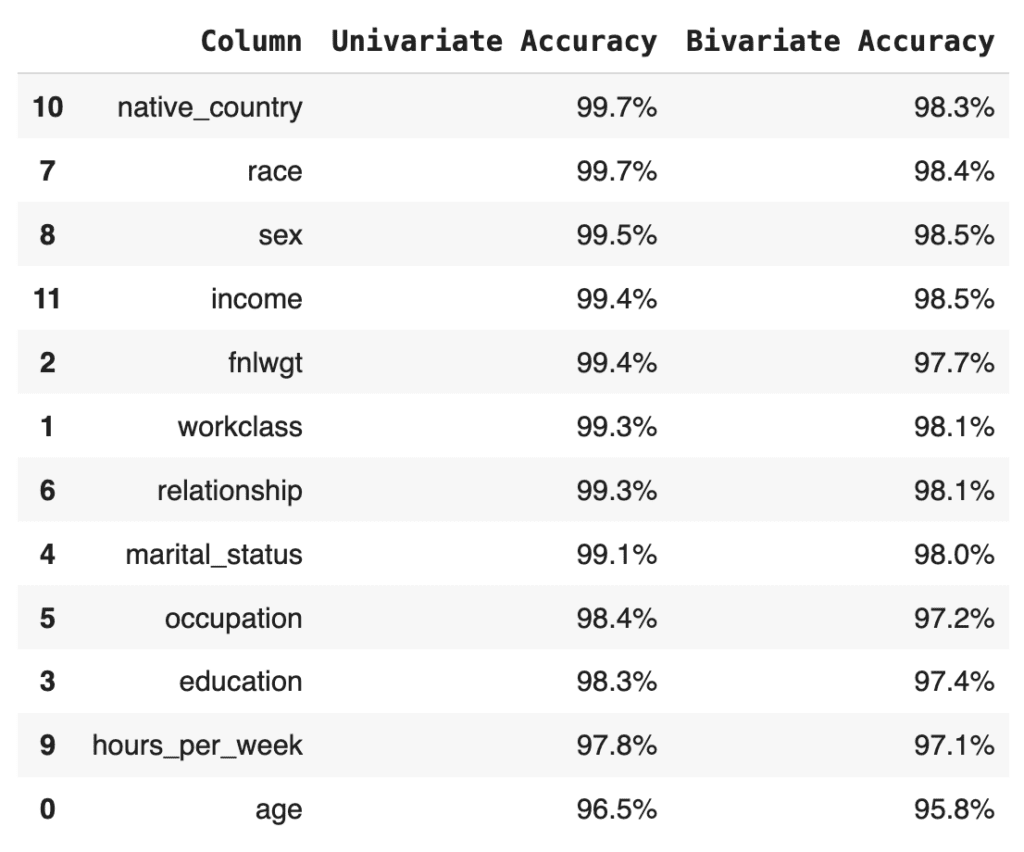

The bivariate accuracy that is reported for each column in the MOSTLY AI QA Report is an average over all of the bivariate accuracies for that column with respect to all the other columns in the dataset. Let’s calculate that value for each column and then create an overview table with the univariate and average bivariate accuracies for all columns:

# calculate the average bivariate accuracy

acc_biv_avg = (

acc_biv.groupby("Column")["Accuracy"]

.mean()

.to_frame("Bivariate Accuracy")

.reset_index()

)

# merge to univariate and avg. bivariate accuracy to single overview table

acc = pd.merge(

acc_uni.rename(columns={"Accuracy": "Univariate Accuracy"}),

acc_biv_avg,

on="Column",

).sort_values("Univariate Accuracy", ascending=False)

# report accuracy as percentage

acc["Univariate Accuracy"] = acc["Univariate Accuracy"].apply(

lambda x: f"{x:.1%}"

)

acc["Bivariate Accuracy"] = acc["Bivariate Accuracy"].apply(

lambda x: f"{x:.1%}"

)

acc

Finally, let’s calculate the summary statistic values that you normally see at the top of any MOSTLY AI QA Report: the overall accuracy as well as the average univariate and bivariate accuracies. We take the mean of the univariate and bivariate accuracies for all the columns and then take the mean of the result to arrive at the overall accuracy score:

print(f"Avg. Univariate Accuracy: {acc_uni['Accuracy'].mean():.1%}")

print(f"Avg. Bivariate Accuracy: {acc_biv['Accuracy'].mean():.1%}")

print(f"-------------------------------")

acc_avg = (acc_uni["Accuracy"].mean() + acc_biv["Accuracy"].mean()) / 2

print(f"Avg. Overall Accuracy: {acc_avg:.1%}")Avg. Univariate Accuracy: 98.9%

Avg. Bivariate Accuracy: 97.7%

------------------------------

Avg. Overall Accuracy: 98.3%

If you’re curious how this compares to the values in the MOSTLY AI QA Report, go ahead and download the tgt dataset and synthesize it using the default settings. The overall accuracy reported will be close to 98%.

Next, let’s see how MOSTLY AI generates the visualization segments of the accuracy report. The code below defines two helper functions: one for the univariate and one for the bivariate plots. Getting the plots right for all possible edge cases is actually rather complicated, so while the code block below is lengthy, this is in fact the trimmed-down version of what MOSTLY AI uses under the hood. You do not need to worry about the exact details of the implementation here; just getting an overall sense of how it works is enough:

import plotly.graph_objects as go

def plot_univariate(tgt_bin, syn_bin, col, accuracy):

freq1 = (

tgt_bin[col].value_counts(normalize=True, dropna=False).to_frame("tgt")

)

freq2 = (

syn_bin[col].value_counts(normalize=True, dropna=False).to_frame("syn")

)

freq = freq1.join(freq2, how="outer").fillna(0.0).reset_index()

freq = freq.sort_values(col)

freq[col] = freq[col].astype(str)

layout = go.Layout(

title=dict(

text=f"<b>{col}</b> <sup>{accuracy:.1%}</sup>", x=0.5, y=0.98

),

autosize=True,

height=300,

width=800,

margin=dict(l=10, r=10, b=10, t=40, pad=5),

plot_bgcolor="#eeeeee",

hovermode="x unified",

yaxis=dict(

zerolinecolor="white",

rangemode="tozero",

tickformat=".0%",

),

)

fig = go.Figure(layout=layout)

trn_line = go.Scatter(

mode="lines",

x=freq[col],

y=freq["tgt"],

name="target",

line_color="#666666",

yhoverformat=".2%",

)

syn_line = go.Scatter(

mode="lines",

x=freq[col],

y=freq["syn"],

name="synthetic",

line_color="#24db96",

yhoverformat=".2%",

fill="tonexty",

fillcolor="#ffeded",

)

fig.add_trace(trn_line)

fig.add_trace(syn_line)

fig.show(config=dict(displayModeBar=False))

def plot_bivariate(tgt_bin, syn_bin, col1, col2, accuracy):

x = (

pd.concat([tgt_bin[col1], syn_bin[col1]])

.drop_duplicates()

.to_frame(col1)

)

y = (

pd.concat([tgt_bin[col2], syn_bin[col2]])

.drop_duplicates()

.to_frame(col2)

)

df = pd.merge(x, y, how="cross")

df = pd.merge(

df,

pd.concat([tgt_bin[col1], tgt_bin[col2]], axis=1)

.value_counts()

.to_frame("target")

.reset_index(),

how="left",

)

df = pd.merge(

df,

pd.concat([syn_bin[col1], syn_bin[col2]], axis=1)

.value_counts()

.to_frame("synthetic")

.reset_index(),

how="left",

)

df = df.sort_values([col1, col2], ascending=[True, True]).reset_index(

drop=True

)

df["target"] = df["target"].fillna(0.0)

df["synthetic"] = df["synthetic"].fillna(0.0)

# normalize values row-wise (used for visualization)

df["target_by_row"] = df["target"] / df.groupby(col1)["target"].transform(

"sum"

)

df["synthetic_by_row"] = df["synthetic"] / df.groupby(col1)[

"synthetic"

].transform("sum")

# normalize values across table (used for accuracy)

df["target_by_all"] = df["target"] / df["target"].sum()

df["synthetic_by_all"] = df["synthetic"] / df["synthetic"].sum()

df["y"] = df[col1].astype("str")

df["x"] = df[col2].astype("str")

layout = go.Layout(

title=dict(

text=f"<b>{col1} ~ {col2}</b> <sup>{accuracy:.1%}</sup>",

x=0.5,

y=0.98,

),

autosize=True,

height=300,

width=800,

margin=dict(l=10, r=10, b=10, t=40, pad=5),

plot_bgcolor="#eeeeee",

showlegend=True,

# prevent Plotly from trying to convert strings to dates

xaxis=dict(type="category"),

xaxis2=dict(type="category"),

yaxis=dict(type="category"),

yaxis2=dict(type="category"),

)

fig = go.Figure(layout=layout).set_subplots(

rows=1,

cols=2,

horizontal_spacing=0.05,

shared_yaxes=True,

subplot_titles=("target", "synthetic"),

)

fig.update_annotations(font_size=12)

# plot content

hovertemplate = (

col1[:10] + ": `%{y}`<br />" + col2[:10] + ": `%{x}`<br /><br />"

)

hovertemplate += "share target vs. synthetic<br />"

hovertemplate += "row-wise: %{customdata[0]} vs. %{customdata[1]}<br />"

hovertemplate += "absolute: %{customdata[2]} vs. %{customdata[3]}<br />"

customdata = df[

[

"target_by_row",

"synthetic_by_row",

"target_by_all",

"synthetic_by_all",

]

].apply(lambda x: x.map("{:.2%}".format))

heat1 = go.Heatmap(

x=df["x"],

y=df["y"],

z=df["target_by_row"],

name="target",

zmin=0,

zmax=1,

autocolorscale=False,

colorscale=["white", "#A7A7A7", "#7B7B7B", "#666666"],

showscale=False,

customdata=customdata,

hovertemplate=hovertemplate,

)

heat2 = go.Heatmap(

x=df["x"],

y=df["y"],

z=df["synthetic_by_row"],

name="synthetic",

zmin=0,

zmax=1,

autocolorscale=False,

colorscale=["white", "#81EAC3", "#43E0A5", "#24DB96"],

showscale=False,

customdata=customdata,

hovertemplate=hovertemplate,

)

fig.add_trace(heat1, row=1, col=1)

fig.add_trace(heat2, row=1, col=2)

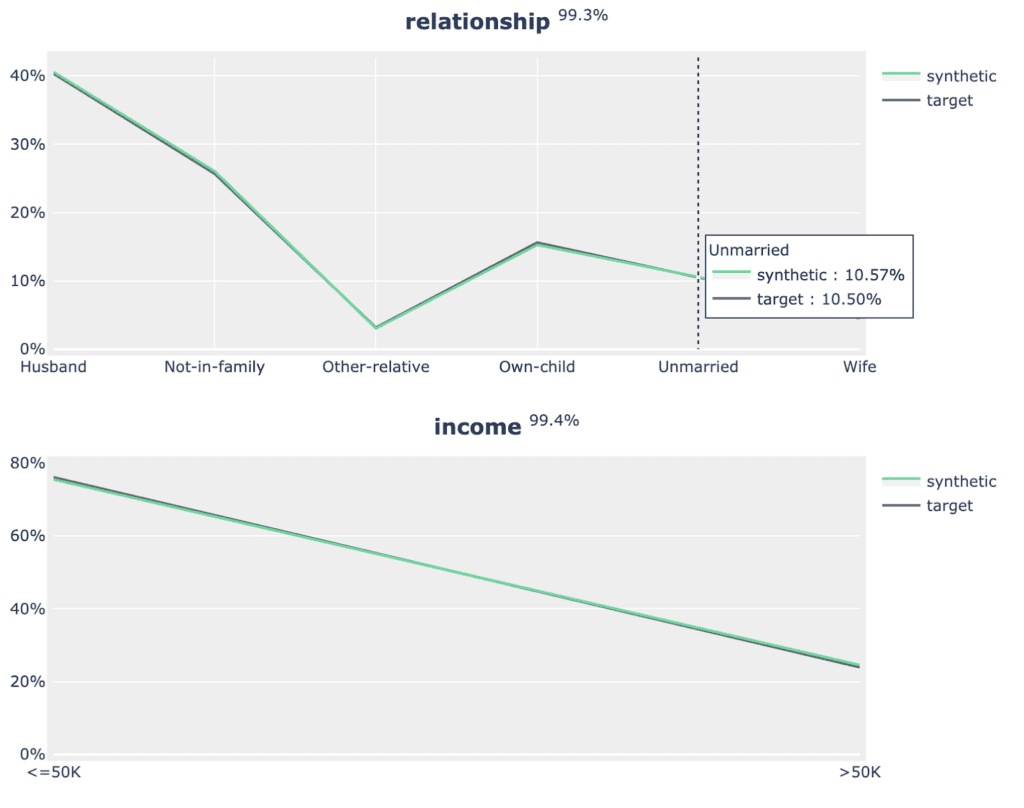

fig.show(config=dict(displayModeBar=False))Now you can create the plots for the univariate distributions:

for idx, row in acc_uni.sample(n=5, random_state=0).iterrows():

plot_univariate(tgt_bin, syn_bin, row["Column"], row["Accuracy"])

print("")

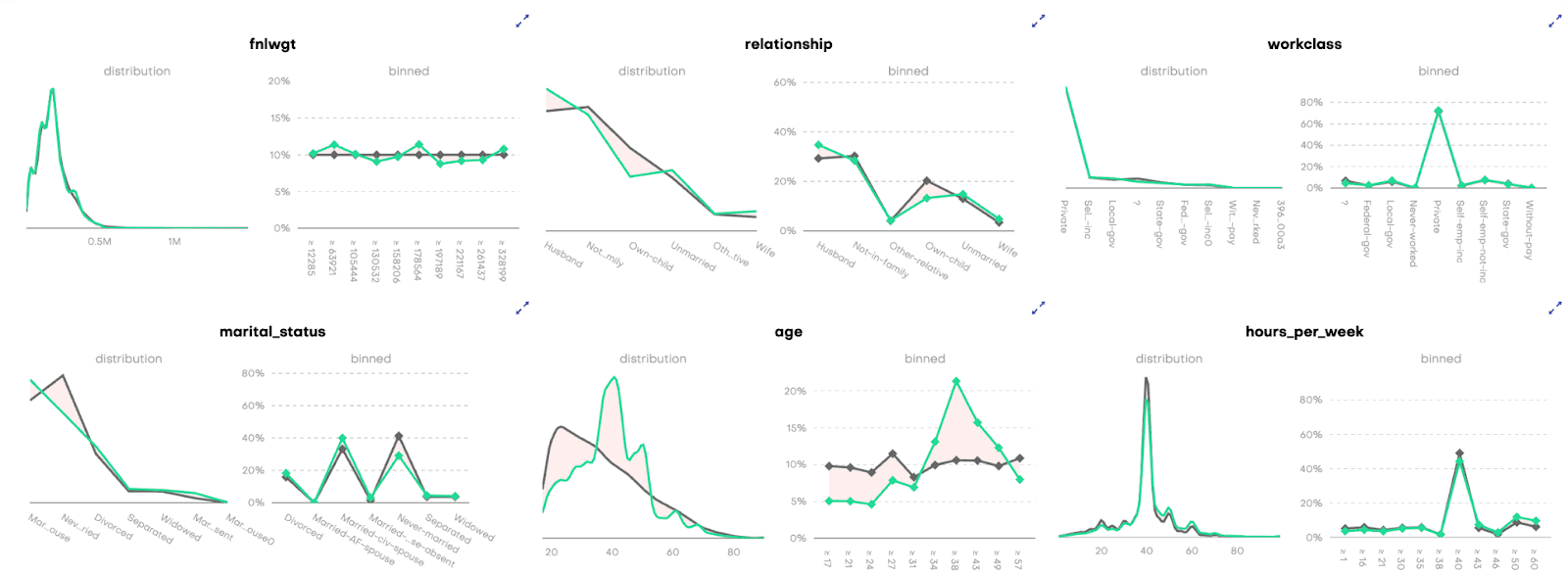

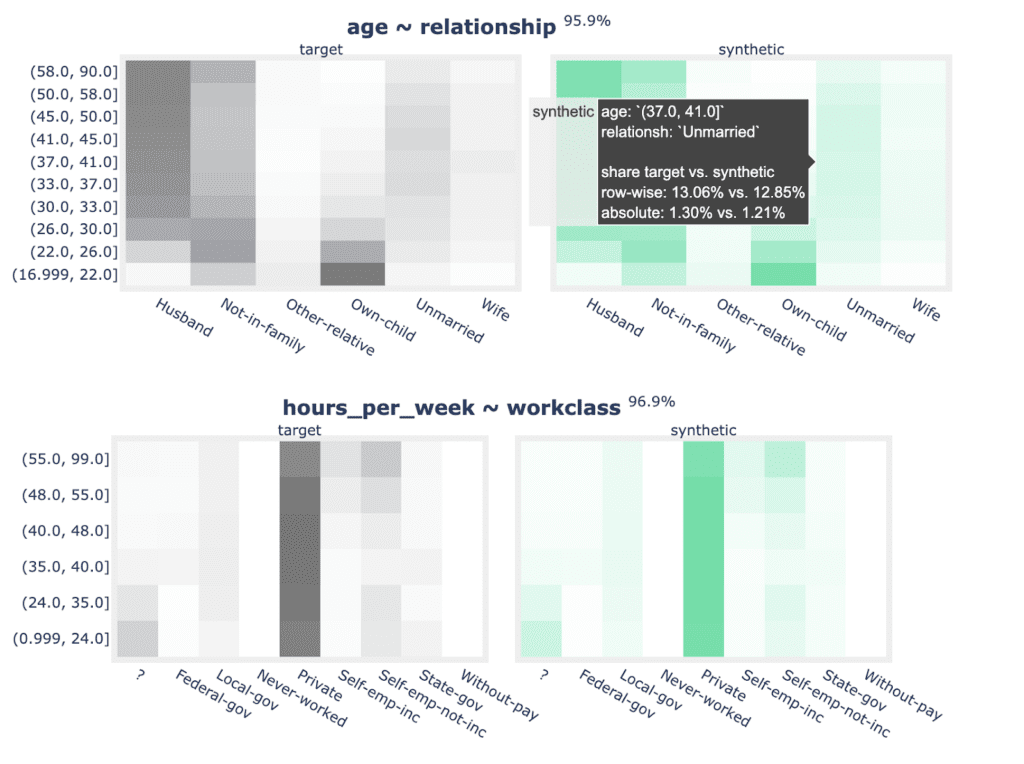

Fig 5 - Sample of 2 univariate distribution plots.

As well as the bivariate distribution plots:

for idx, row in acc_biv.sample(n=5, random_state=0).iterrows():

plot_bivariate(

tgt_bin, syn_bin, row["Column"], row["Column 2"], row["Accuracy"]

)

print("")

Fig 6 - Sample of 2 bivariate distribution plots.

Now that you have replicated the accuracy component of the QA Report in sufficient detail, let’s move on to the privacy section.

Synthetic data privacy

Just like accuracy, the privacy metric is also calculated as a distance-based value. To gauge the privacy risk of the generated synthetic data, we calculate the distances between the synthetic samples and their "nearest neighbor" (i.e., their most similar record) from the original dataset. This nearest neighbor could be either in the training split or in the holdout split. We then tally the ratio of synthetic samples that are closer to the holdout and the training set. Ideally, we will see an even split, which would mean that the synthetic samples are not systematically any closer to the original dataset than the original samples are to each other.

Fig 7 - A perfect synthetic data generator creates synthetic records that are just as different from the training data as from the holdout data.

The code block below uses the scikit-learn library to perform a nearest-neighbor search across the synthetic and original datasets. We then use the results from this search to calculate two different distance metrics: the Distance to the Closest Record (DCR) and the Nearest Neighbor Distance Ratio (NNDR), both at the 5-th percentile.

from sklearn.compose import make_column_transformer

from sklearn.neighbors import NearestNeighbors

from sklearn.preprocessing import OneHotEncoder

from sklearn.impute import SimpleImputer

no_of_records = min(tgt.shape[0] // 2, syn.shape[0], 10_000)

tgt = tgt.sample(n=2 * no_of_records)

trn = tgt.head(no_of_records)

hol = tgt.tail(no_of_records)

syn = syn.sample(n=no_of_records)

string_cols = trn.select_dtypes(exclude=np.number).columns

numeric_cols = trn.select_dtypes(include=np.number).columns

transformer = make_column_transformer(

(SimpleImputer(missing_values=np.nan, strategy="mean"), numeric_cols),

(OneHotEncoder(), string_cols),

remainder="passthrough",

)

transformer.fit(pd.concat([trn, hol, syn], axis=0))

trn_hot = transformer.transform(trn)

hol_hot = transformer.transform(hol)

syn_hot = transformer.transform(syn)

# calculcate distances to nearest neighbors

index = NearestNeighbors(

n_neighbors=2, algorithm="brute", metric="l2", n_jobs=-1

)

index.fit(trn_hot)

# k-nearest-neighbor search for both training and synthetic data, k=2 to calculate DCR + NNDR

dcrs_hol, _ = index.kneighbors(hol_hot)

dcrs_syn, _ = index.kneighbors(syn_hot)

dcrs_hol = np.square(dcrs_hol)

dcrs_syn = np.square(dcrs_syn)Now calculate the DCR for both datasets:

dcr_bound = np.maximum(np.quantile(dcrs_hol[:, 0], 0.95), 1e-8)

ndcr_hol = dcrs_hol[:, 0] / dcr_bound

ndcr_syn = dcrs_syn[:, 0] / dcr_bound

print(

f"Normalized DCR 5-th percentile original {np.percentile(ndcr_hol, 5):.3f}"

)

print(

f"Normalized DCR 5-th percentile synthetic {np.percentile(ndcr_syn, 5):.3f}"

)Normalized DCR 5-th percentile original 0.001

Normalized DCR 5-th percentile synthetic 0.009

As well as the NNDR:

print(

f"NNDR 5-th percentile original {np.percentile(dcrs_hol[:,0]/dcrs_hol[:,1], 5):.3f}"

)

print(

f"NNDR 5-th percentile synthetic {np.percentile(dcrs_syn[:,0]/dcrs_syn[:,1], 5):.3f}"

)NNDR 5-th percentile original 0.019

NNDR 5-th percentile synthetic 0.058

For both privacy metrics, the distance value for the synthetic dataset should be similar but not smaller. This gives us confidence that our synthetic record has not learned privacy-revealing information from the training data.

Quality assurance for synthetic data with MOSTLY AI

In this tutorial, you have learned the key concepts behind MOSTLY AI’s Quality Assurance framework. You have gained insight into the preprocessing steps that are required as well as a close look into exactly how the accuracy and privacy metrics are calculated. With these newly acquired skills, you can now confidently and efficiently interpret any MOSTLY AI QA Report and explain it thoroughly to any interested stakeholders.

For a more in-depth exploration of these concepts and the mathematical principles behind them, check out the benchmarking study or the peer-reviewed academic research paper to dive deeper.

You can also check out the other Synthetic Data Tutorials:

- Tackle missing data with smart data imputation

- Generate synthetic text data

- Explainable AI with synthetic data

- Perform conditional data generation

- Rebalancing your data for ML classification problems

- Optimize your training sample size for synthetic data accuracy

- Build a “fake vs real” ML classifier

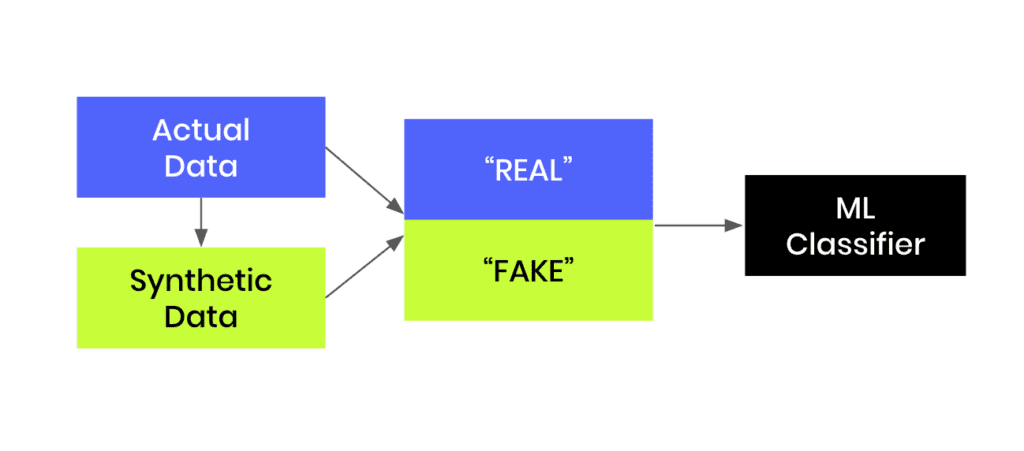

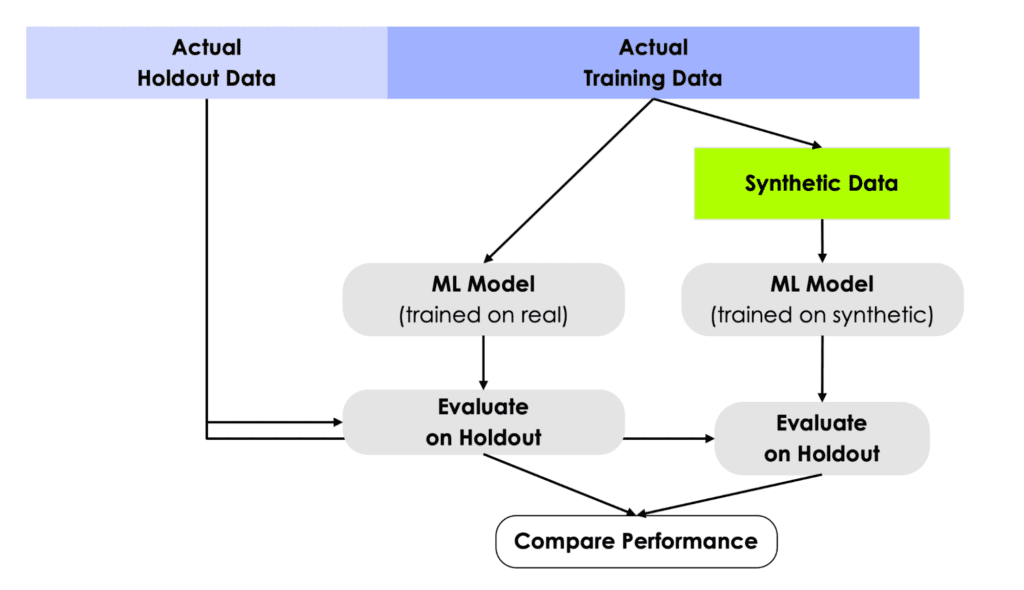

In this tutorial, you will learn how to build a machine-learning model that is trained to distinguish between synthetic (fake) and real data records. This can be a helpful tool when you are given a hybrid dataset containing both real and fake records and want to be able to distinguish between them. Moreover, this model can serve as a quality evaluation tool for any synthetic data you generate. The higher the quality of your synthetic data records, the harder it will be for your ML discriminator to tell these fake records apart from the real ones.

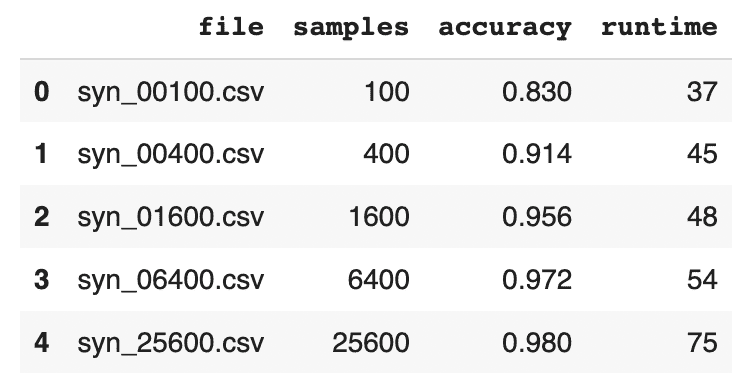

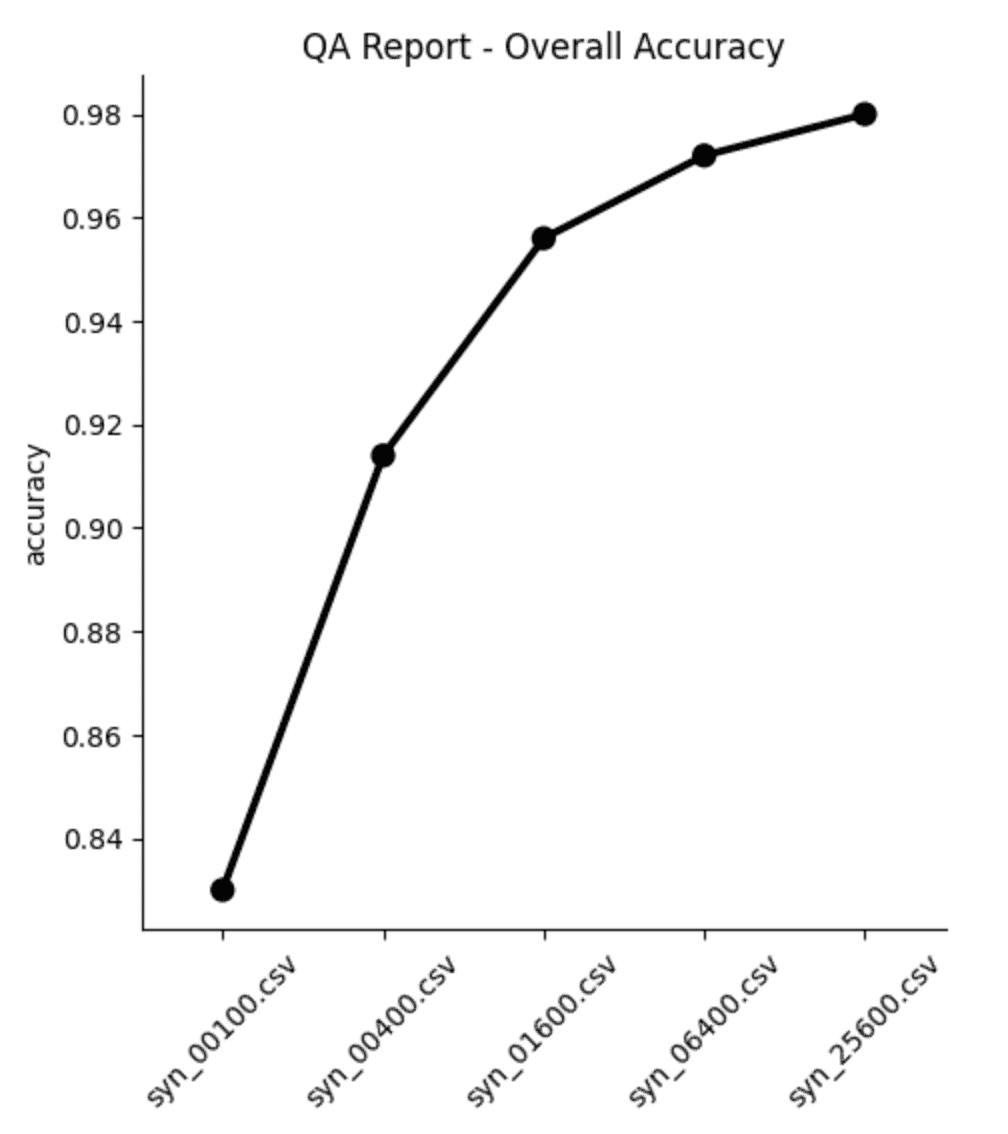

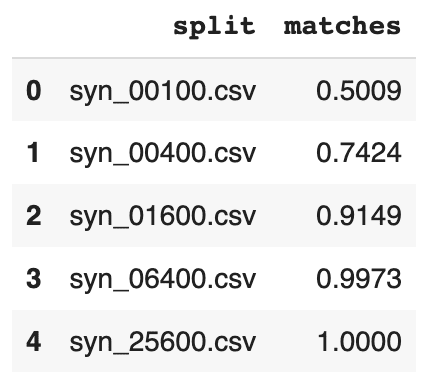

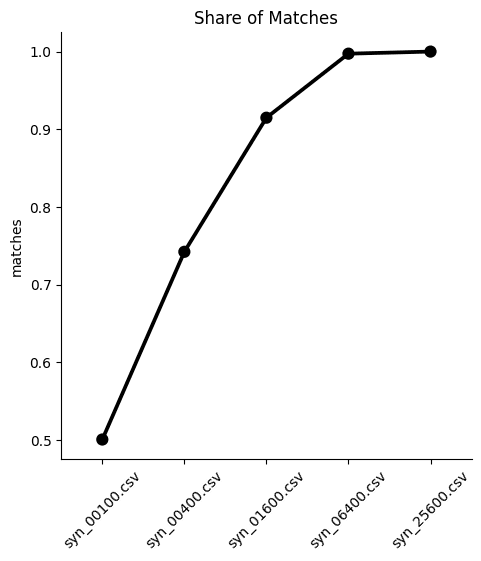

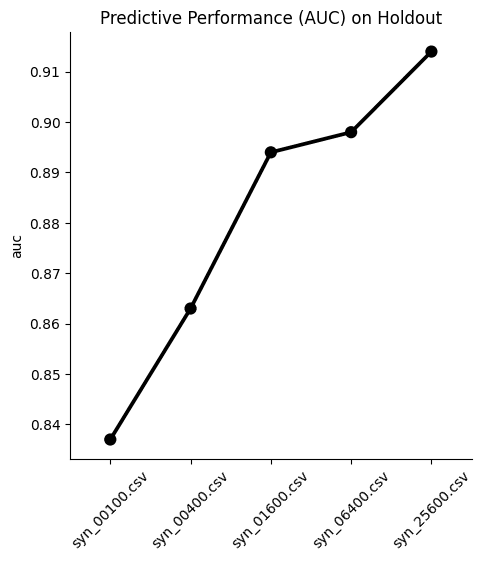

You will be working with the UCI Adult Income dataset. The first step will be to synthesize the original dataset. We will start by intentionally creating synthetic data of lower quality in order to make it easier for our “Fake vs. Real” ML classifier to detect a signal and tell the two apart. We will then compare this against a synthetic dataset generated using MOSTLY AI's default high-quality settings to see whether the ML model can tell the fake records apart from the real ones.

The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

Fig 1 - Generate synthetic data and join this to the original dataset in order to train an ML classifier.

Create synthetic training data

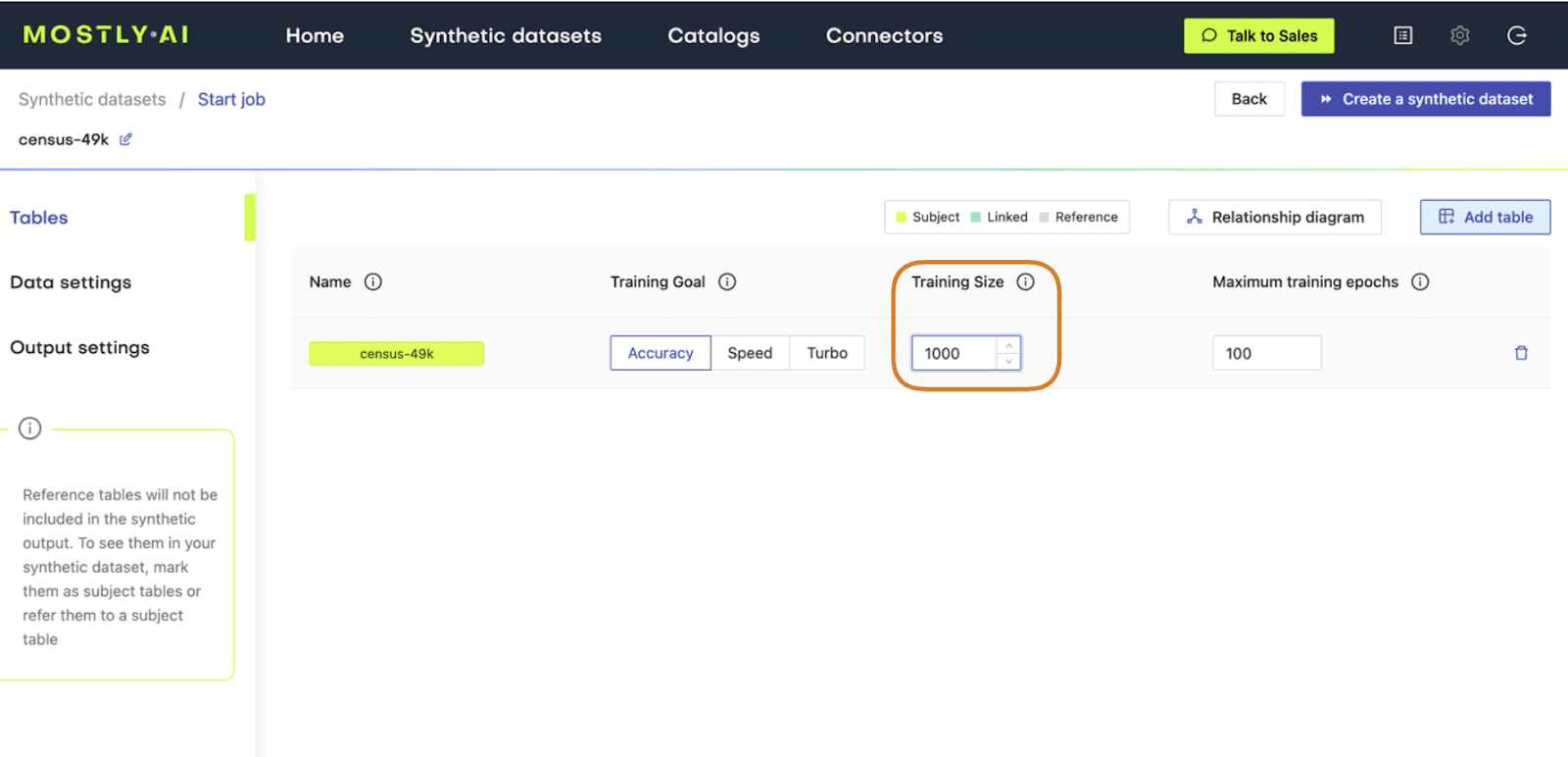

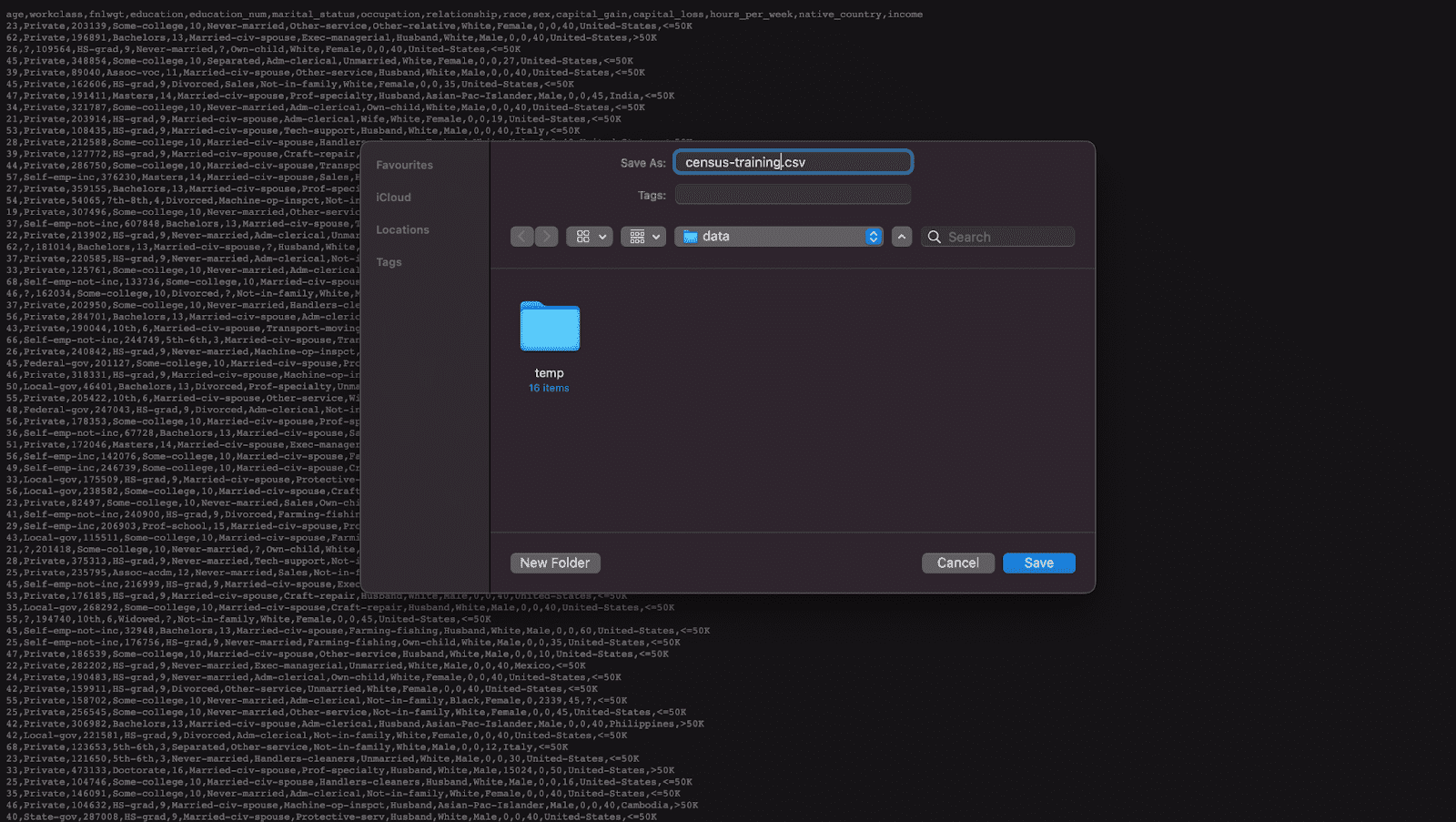

Let’s start by creating our synthetic data:

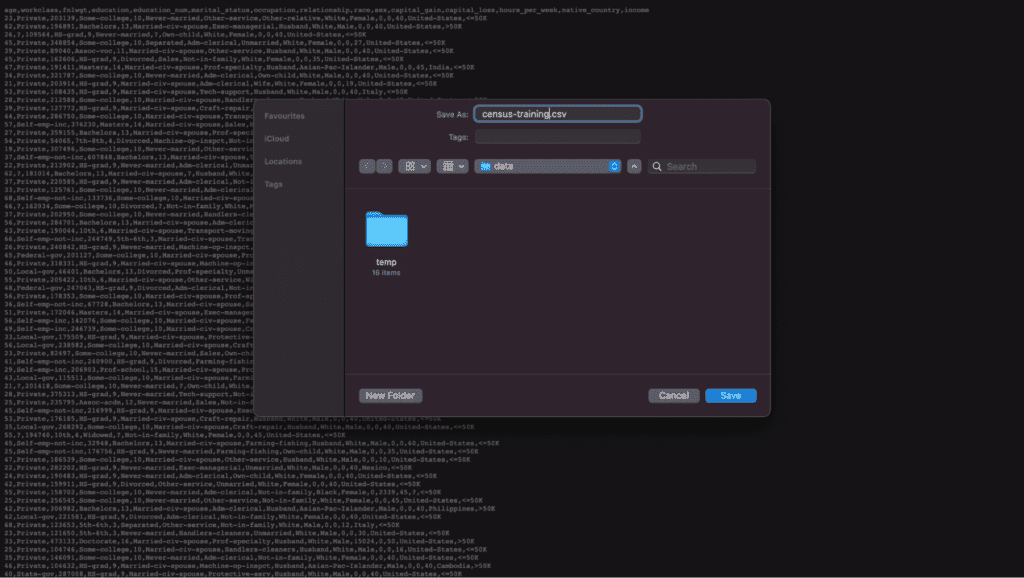

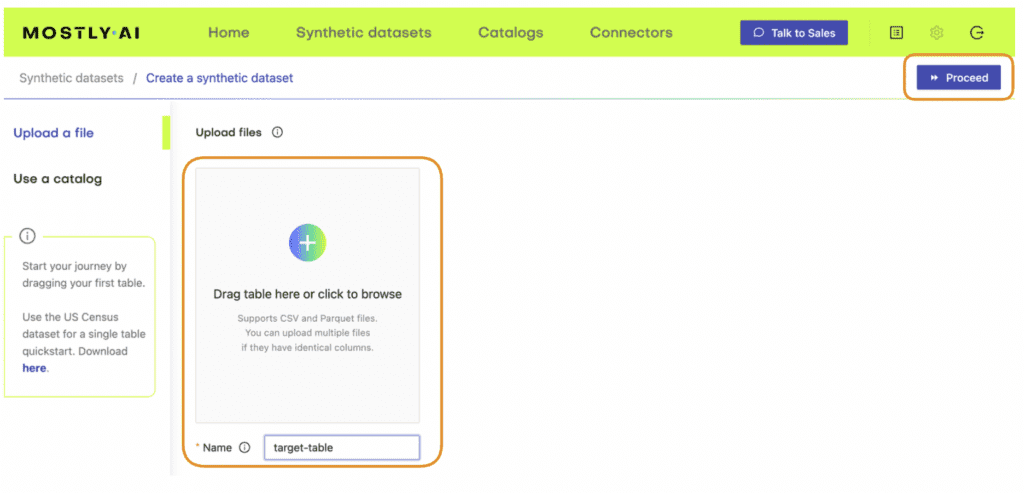

- Download the original dataset here. Depending on your operating system, use either Ctrl+S or Cmd+S to save the file locally.

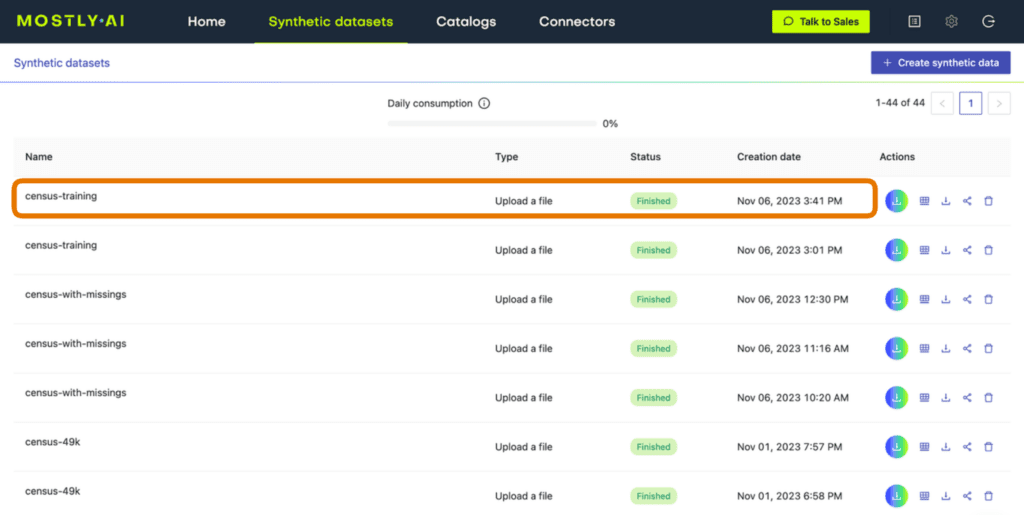

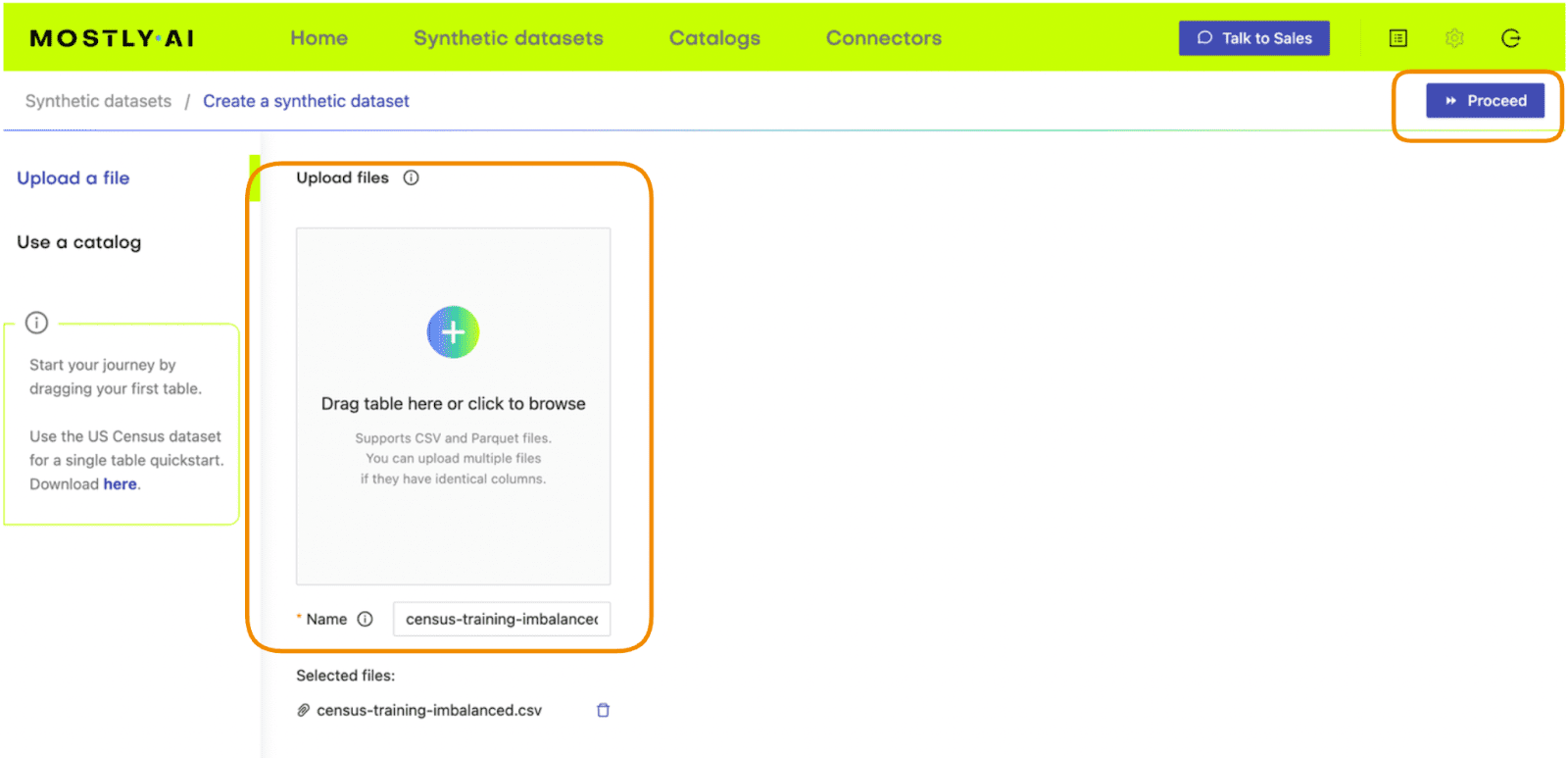

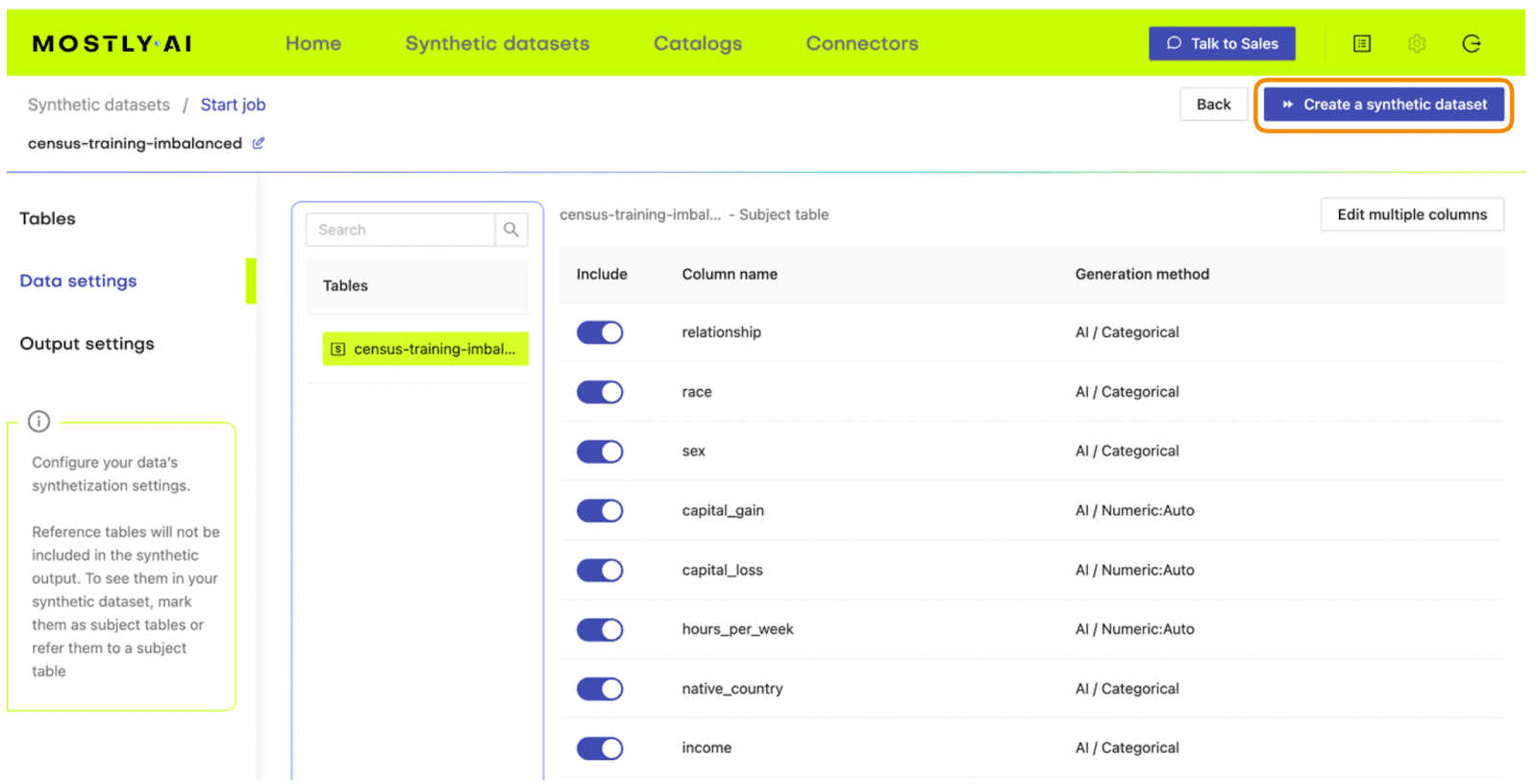

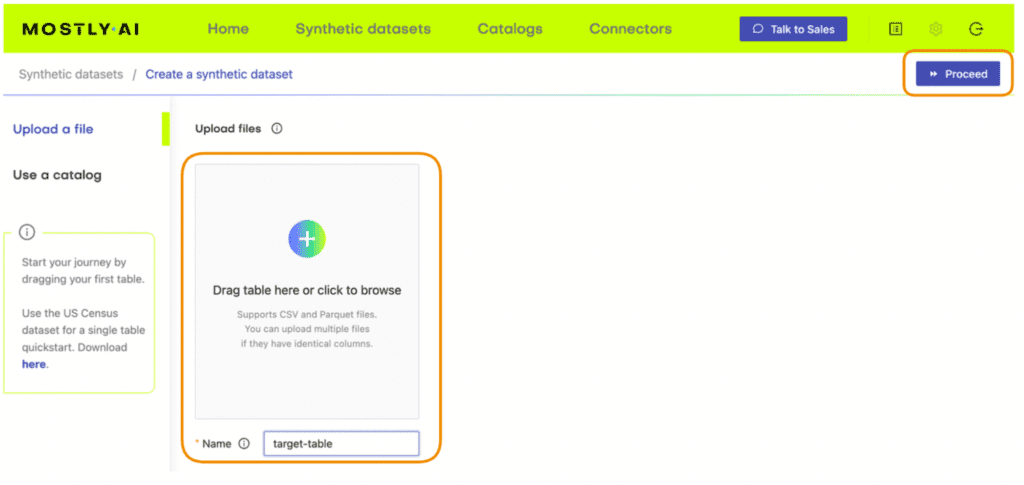

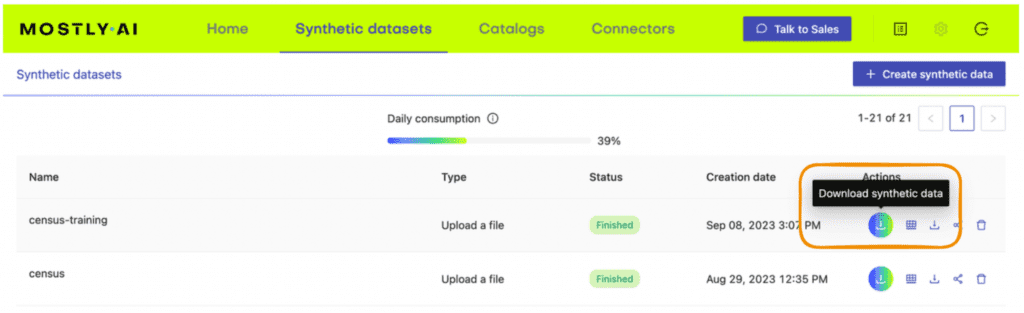

- Go to your MOSTLY AI account and navigate to “Synthetic Datasets”. Upload the CSV file you downloaded in the previous step and click “Proceed”.

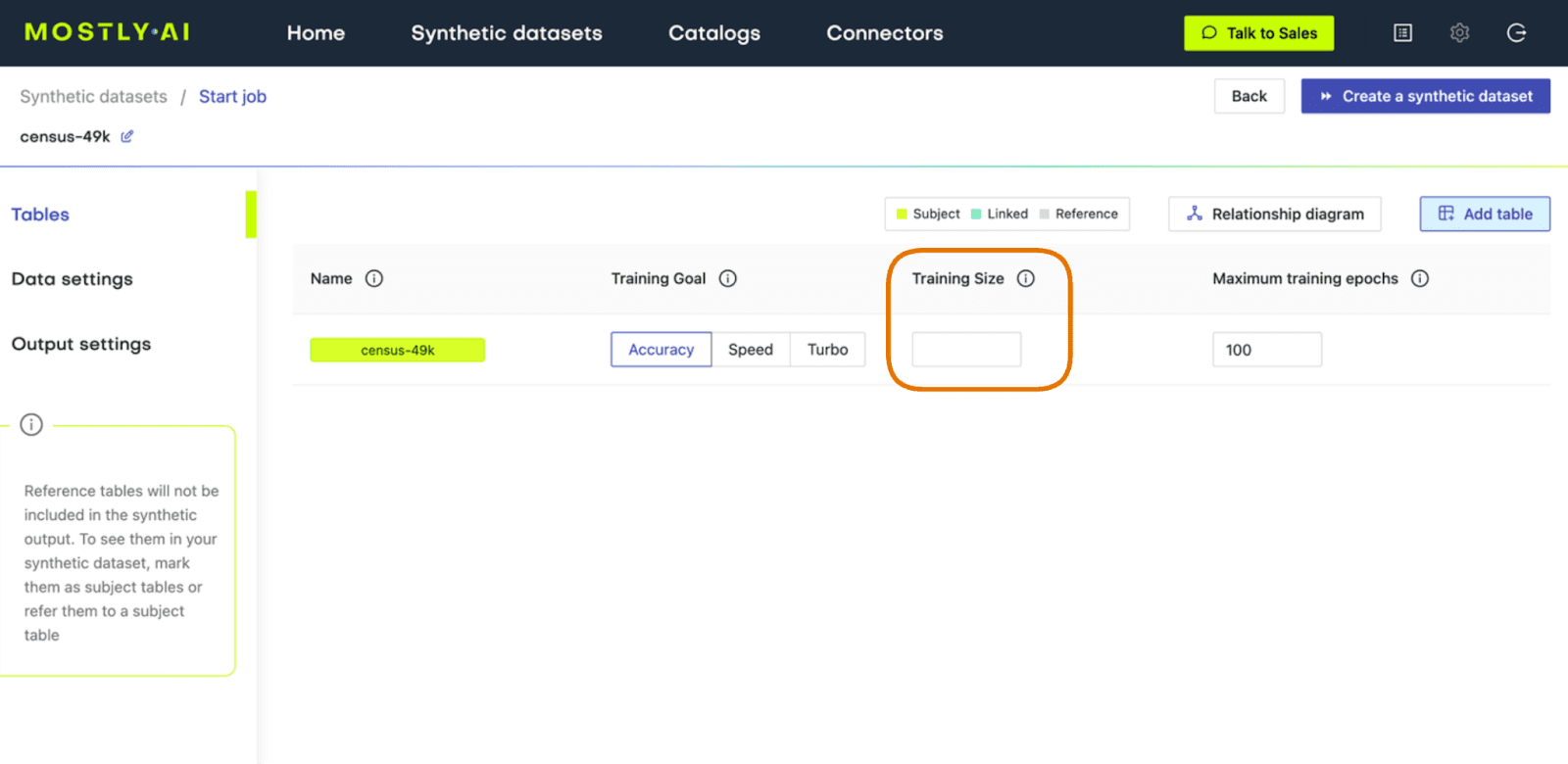

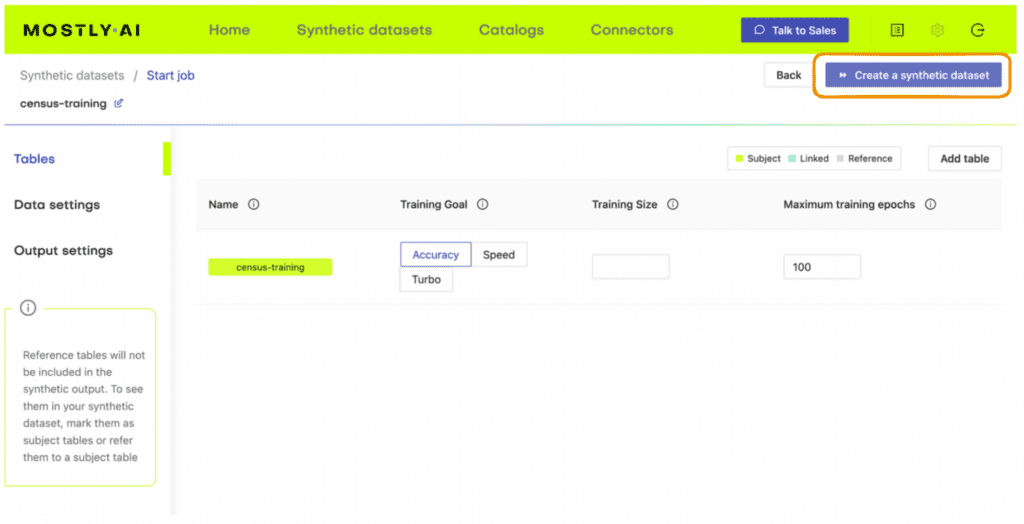

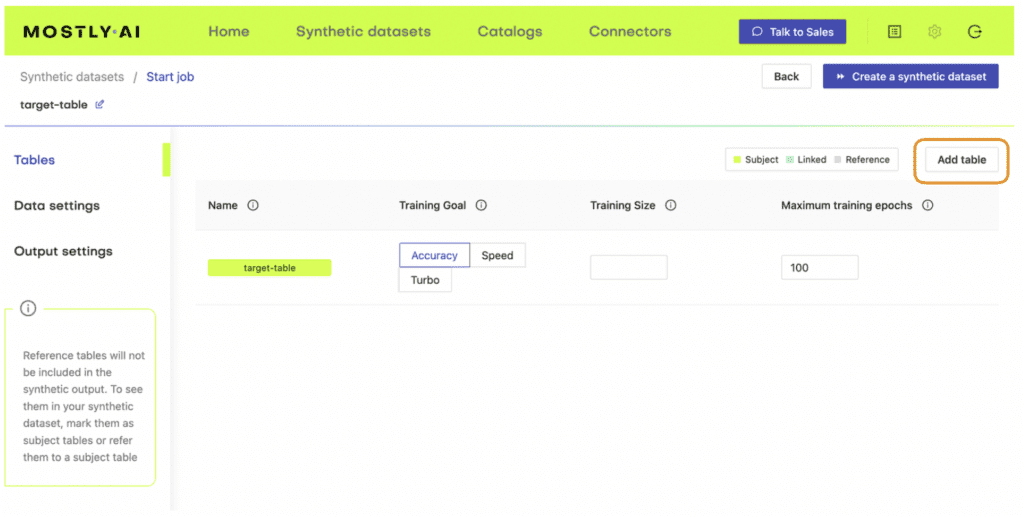

- Set the Training Size to 1000. This will intentionally lower the quality of the resulting synthetic data. Click “Create a synthetic dataset” to launch the job.

Fig 2 - Set the Training Size to 1000.

- Once the synthetic data is ready, download it to disk as CSV and use the following code to upload it if you’re running in Google Colab or to access it from disk if you are working locally:

# upload synthetic dataset

import pandas as pd

try:

# check whether we are in Google colab

from google.colab import files

print("running in COLAB mode")

repo = "https://github.com/mostly-ai/mostly-tutorials/raw/dev/fake-or-real"

import io

uploaded = files.upload()

syn = pd.read_csv(io.BytesIO(list(uploaded.values())[0]))

print(

f"uploaded synthetic data with {syn.shape[0]:,} records"

" and {syn.shape[1]:,} attributes"

)

except:

print("running in LOCAL mode")

repo = "."

print("adapt `syn_file_path` to point to your generated synthetic data file")

syn_file_path = "./census-synthetic-1k.csv"

syn = pd.read_csv(syn_file_path)

print(

f"read synthetic data with {syn.shape[0]:,} records"

" and {syn.shape[1]:,} attributes"

)Train your “fake vs real” ML classifier

Now that we have our low-quality synthetic data, let’s use it together with the original dataset to train a LightGBM classifier.

The first step will be to concatenate the original and synthetic datasets together into one large dataset. We will also create a split column to label the records: the original records will be labeled as REAL and the synthetic records as FAKE.

# concatenate FAKE and REAL data together

tgt = pd.read_csv(f"{repo}/census-49k.csv")

df = pd.concat(

[

tgt.assign(split="REAL"),

syn.assign(split="FAKE"),

],

axis=0,

)

df.insert(0, "split", df.pop("split"))Sample some records to take a look at the complete dataset:

df.sample(n=5)

We see that the dataset contains both REAL and FAKE records.

By grouping by the split column and verifying the size, we can confirm that we have an even split of synthetic and original records:

df.groupby('split').size()split

FAKE 48842

REAL 48842

dtype: int64

The next step will be to train your LightGBM model on this complete dataset. The following code contains two helper scripts to preprocess the data and train your model:

import lightgbm as lgb

from lightgbm import early_stopping

from sklearn.model_selection import train_test_split

def prepare_xy(df, target_col, target_val):

# split target variable `y`

y = (df[target_col] == target_val).astype(int)

# convert strings to categoricals, and all others to floats

str_cols = [

col

for col in df.select_dtypes(["object", "string"]).columns

if col != target_col

]

for col in str_cols:

df[col] = pd.Categorical(df[col])

cat_cols = [

col

for col in df.select_dtypes("category").columns

if col != target_col

]

num_cols = [

col for col in df.select_dtypes("number").columns if col != target_col

]

for col in num_cols:

df[col] = df[col].astype("float")

X = df[cat_cols + num_cols]

return X, y

def train_model(X, y):

cat_cols = list(X.select_dtypes("category").columns)

X_trn, X_val, y_trn, y_val = train_test_split(

X, y, test_size=0.2, random_state=1

)

ds_trn = lgb.Dataset(

X_trn, label=y_trn, categorical_feature=cat_cols, free_raw_data=False

)

ds_val = lgb.Dataset(

X_val, label=y_val, categorical_feature=cat_cols, free_raw_data=False

)

model = lgb.train(

params={"verbose": -1, "metric": "auc", "objective": "binary"},

train_set=ds_trn,

valid_sets=[ds_val],

callbacks=[early_stopping(5)],

)

return modelBefore training, make sure to set aside a holdout dataset for evaluation. Let’s reserve 20% of the records for this:

trn, hol = train_test_split(df, test_size=0.2, random_state=1)Now train your LightGBM classifier on the remaining 80% of the combined original and synthetic data:

X_trn, y_trn = prepare_xy(trn, 'split', 'FAKE')

model = train_model(X_trn, y_trn)Training until validation scores don't improve for 5 rounds

Early stopping, best iteration is:

[30] valid_0's auc: 0.594648

Next, score the model’s performance on the holdout dataset. We will include the model’s predicted probability for each record. A score of 1.0 indicates that the model is fully certain that the record is FAKE. A score of 0.0 means the model is certain the record is REAL.

Let’s sample some random records to take a look:

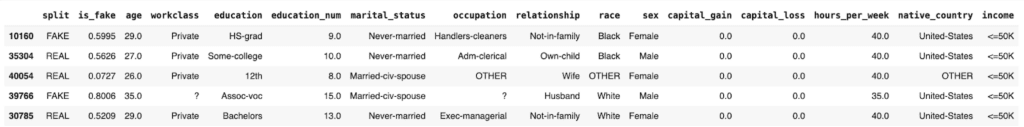

hol.sample(n=5)

We see that the model assigns varying levels of probability to the REAL and FAKE records. In some cases it is not able to predict with much confidence (scores around 0.5) and in others it is quite confident and also correct: see the 0.0727 for a REAL record and 0.8006 for a FAKE record.

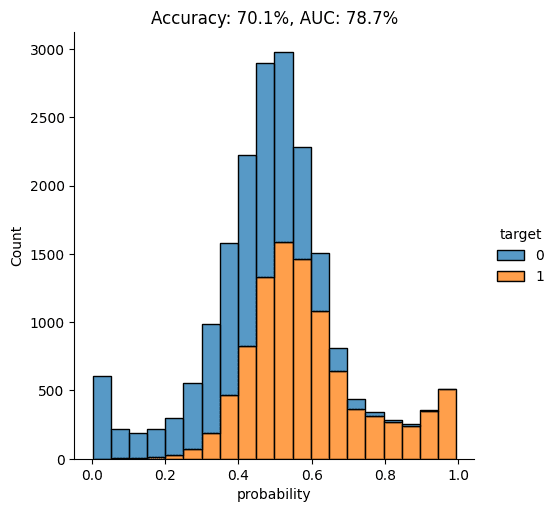

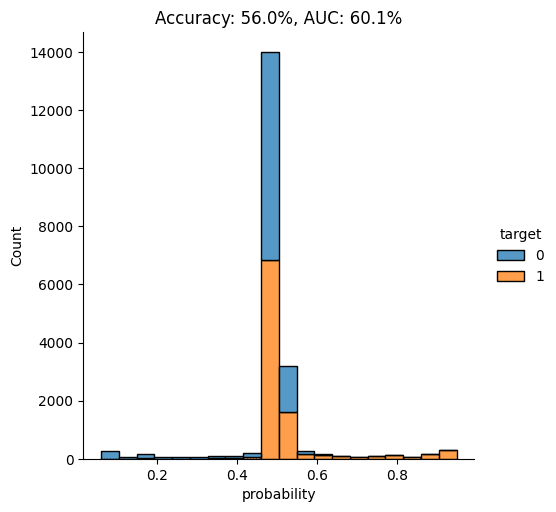

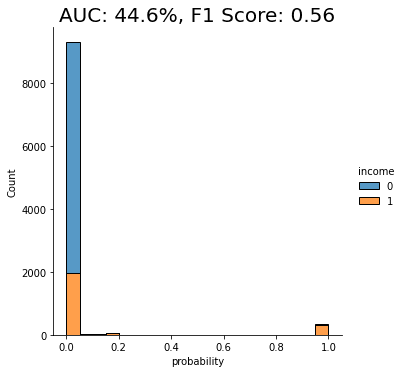

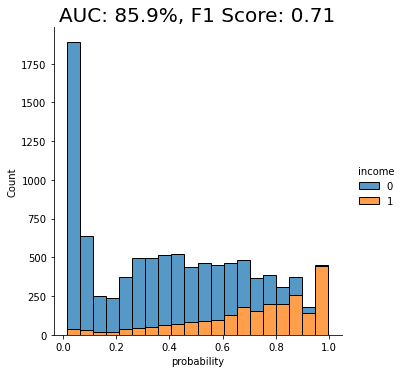

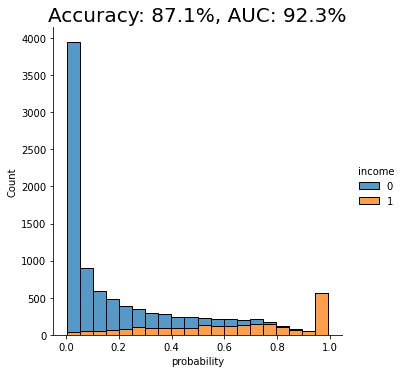

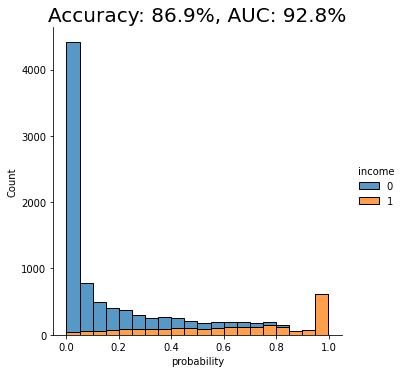

Let’s visualize the model’s overall performance by calculating the AUC and Accuracy scores and plotting the probability scores:

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.metrics import roc_auc_score, accuracy_score

auc = roc_auc_score(y_hol, hol.is_fake)

acc = accuracy_score(y_hol, (hol.is_fake > 0.5).astype(int))

probs_df = pd.concat(

[

pd.Series(hol.is_fake, name="probability").reset_index(drop=True),

pd.Series(y_hol, name="target").reset_index(drop=True),

],

axis=1,

)

fig = sns.displot(

data=probs_df, x="probability", hue="target", bins=20, multiple="stack"

)

fig = plt.title(f"Accuracy: {acc:.1%}, AUC: {auc:.1%}")

plt.show()

As you can see from the chart above, the discriminator has learned to pick up some signals that allow it with a varying level of confidence to determine whether a record is FAKE or REAL. The AUC can be interpreted as the percentage of cases in which the discriminator is able to correctly spot the FAKE record, given a set of a FAKE and a REAL record.

Let’s dig a little deeper by looking specifically at records that seem very fake and records that seem very real. This will give us a better understanding of the type of signals the model is learning.

Go ahead and sample some random records which the model has assigned a particularly high probability of being FAKE:

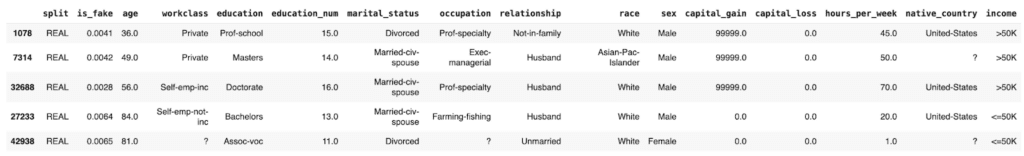

hol.sort_values('is_fake').tail(n=100).sample(n=5)

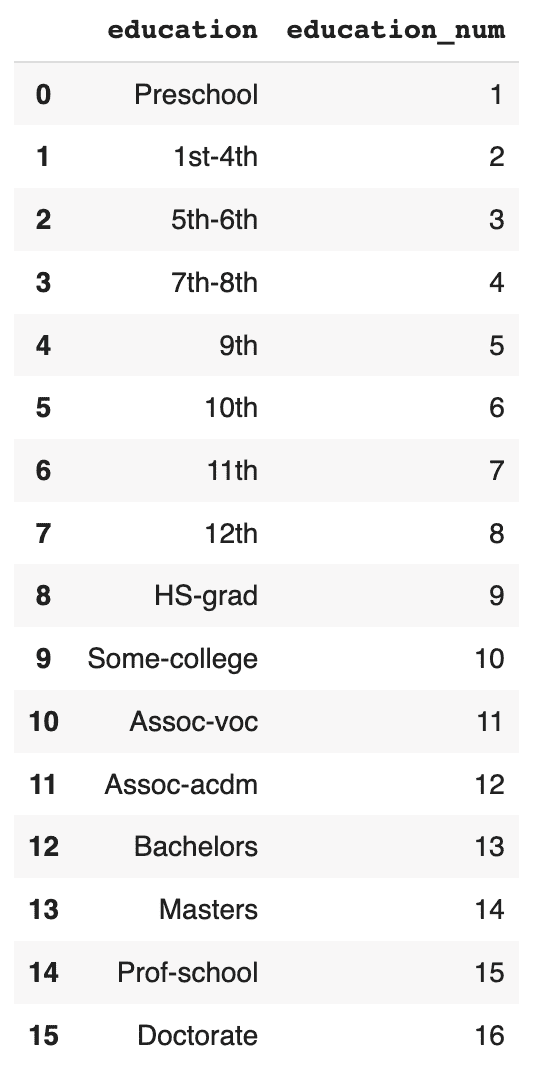

In these cases, it seems to be the mismatch between the education and education_num columns that gives away the fact that these are synthetic records. In the original data, these two columns have a 1:1 mapping of numerical to textual values. For example, the education value Some-college is always mapped to the numerical education_num value 10.0. In this poor-quality synthetic data, we see that there are multiple numerical values for the Some-college value, thereby giving away the fact that these records are fake.

Now let’s take a closer look at records which the model is especially certain are REAL:

hol.sort_values('is_fake').head(n=100).sample(n=5)

These “obviously real” records are types of records which the synthesizer has apparently failed to create. Thus, as they are then absent from the synthetic data, the discriminator recognizes these as REAL.

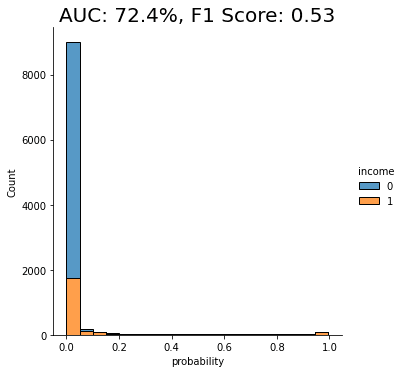

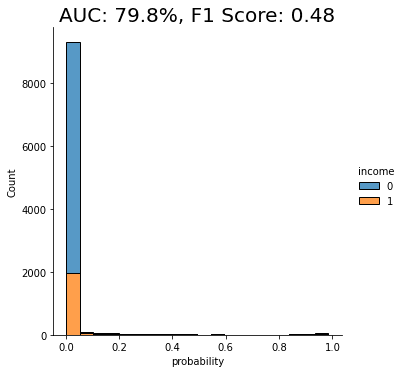

Generate high-quality synthetic data with MOSTLY AI

Now, let’s proceed to synthesize the original dataset again but this time using MOSTLY AI’s default settings for high-quality synthetic data. Run the same steps as before to synthesize the dataset except this time leave the Training Sample field blank. This will use all the records for the model training, ensuring the highest-quality synthetic data is generated.

Fig 3 - Leave the Training Size blank to train on all available records.

Once the job has completed, download the high-quality data as CSV and then upload it to wherever you are running your code.

Make sure that the syn variable now contains the new, high-quality synthesized data. Then re-run the code you ran earlier to concatenate the synthetic and original data together, train a new LightGBM model on the complete dataset, and evaluate its ability to tell the REAL records from FAKE.

Again, let’s visualize the model’s performance by calculating the AUC and Accuracy scores and by plotting the probability scores:

This time, we see that the model’s performance has dropped significantly. The model is not really able to pick up any meaningful signal from the combined data and assigns the largest share of records a probability around the 0.5 mark, which is essentially the equivalent of flipping a coin.

This means that the data you have generated using MOSTLY AI’s default high-quality settings is so similar to the original, real records that it is almost impossible for the model to tell them apart.

Classifying “fake vs real” records with MOSTLY AI

In this tutorial, you have learned how to build a machine learning model that can distinguish between fake (i.e. synthetic) and real data records. You have synthesized the original data using MOSTLY AI and evaluated the resulting model by looking at multiple performance metrics. By comparing the model performance on both an intentionally low-quality synthetic dataset and MOSTLY AI’s default high-quality synthetic data, you have seen firsthand that the synthetic data MOSTLY AI delivers is so statistically representative of the original data that a top-notch LightGBM model was practically unable to tell these synthetic records apart from the real ones.

If you are interested in comparing performance across various data synthesizers, you may want to check out our benchmarking article which surveys 8 different synthetic data generators.

What’s next?

In addition to walking through the above instructions, we suggest:

- measuring the Discriminator's AUC if more training samples are used,

- using a different dataset,

- using a different ML model for the discriminator, eg. a RandomForest model,

- generate synthetic data with MOSTLY using your own dataset,

- using a different synthesizer, eg. SynthCity, SDV, etc.

You can also head straight to the other synthetic data tutorials:

- Generate synthetic text data

- Explainable AI with synthetic data

- Perform conditional data generation

- Rebalancing your data for ML classification problems

- Optimize your training sample size for synthetic data accuracy

In this tutorial, you will learn how to generate synthetic text using MOSTLY AI's synthetic data generator. While synthetic data generation is most often applied to structured (tabular) data types, such as numbers and categoricals, this tutorial will show you that you can also use MOSTLY AI to generate high-quality synthetic unstructured data, such as free text.

You will learn how to use the MOSTLY AI platform to synthesize text data and also how to evaluate the quality of the synthetic text that you will generate. For context, you may want to check out the introductory article which walks through a real-world example of using synthetic text when working with voice assistant data.

You will be working with a public dataset containing AirBnB listings in London. We will walk through how to synthesize this dataset and pay special attention to the steps needed to successfully synthesize the columns containing unstructured text data. We will then proceed to evaluate the statistical quality of the generated text data by inspecting things like the set of characters, the distribution of character and term frequencies and the term co-occurrence.

We will also perform a privacy check by scanning for exact matches between the original and synthetic text datasets. Finally, we will evaluate the correlations between the synthesized text columns and the other features in the dataset to ensure that these are accurately preserved. The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

Synthesize text data

Synthesizing text data with MOSTLY AI can be done through a single step before launching your data generation job. We will indicate which columns contain unstructured text and let MOSTLY AI’s generative algorithm do the rest.

Let’s walk through how this works:

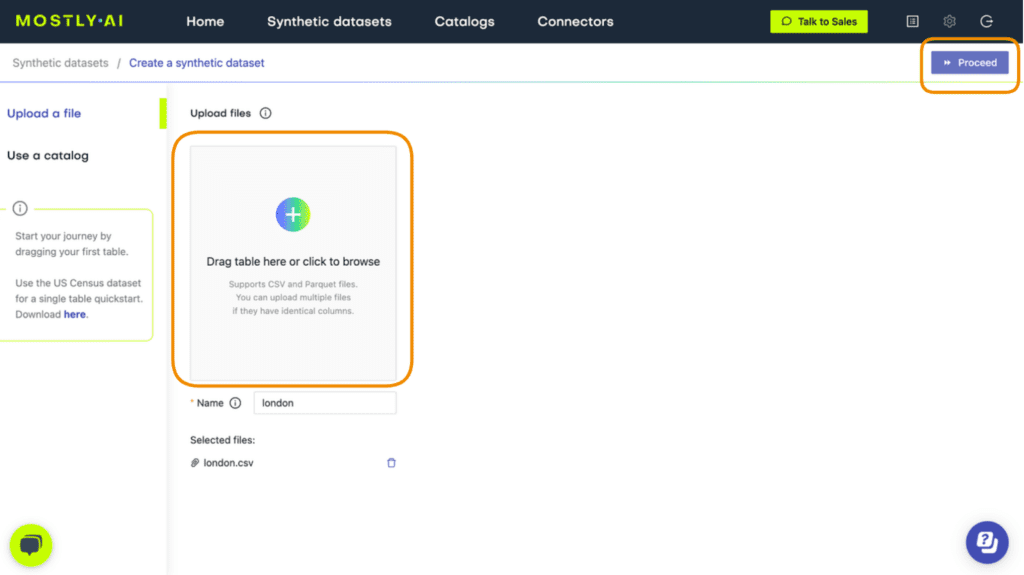

- Download the original AirBnB dataset. Depending on your operating system, use either Ctrl+S or Cmd+S to save the file locally.

- Go to your MOSTLY AI account and navigate to “Synthetic Datasets”. Upload the CSV file you downloaded in the previous step and click “Proceed”.

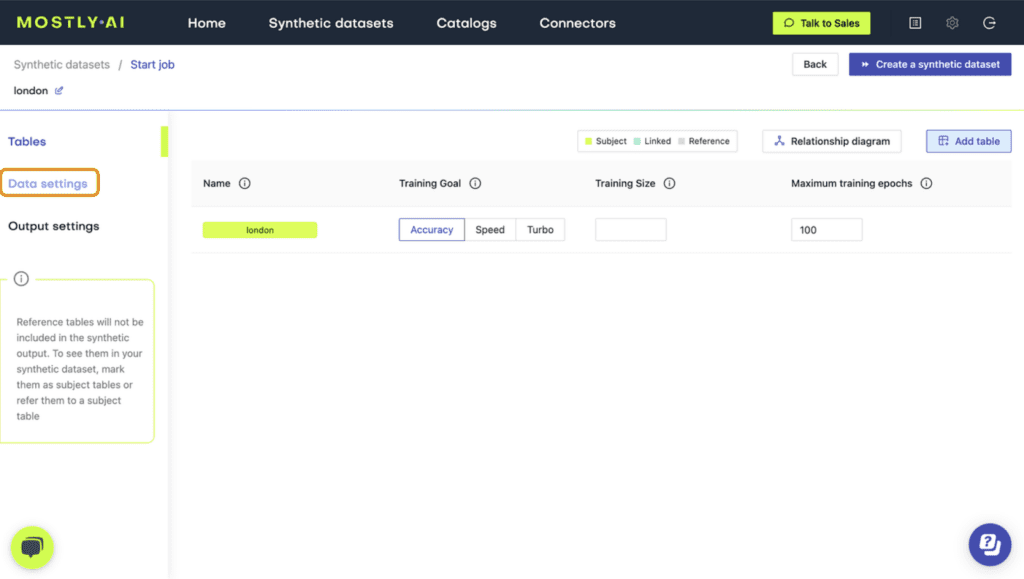

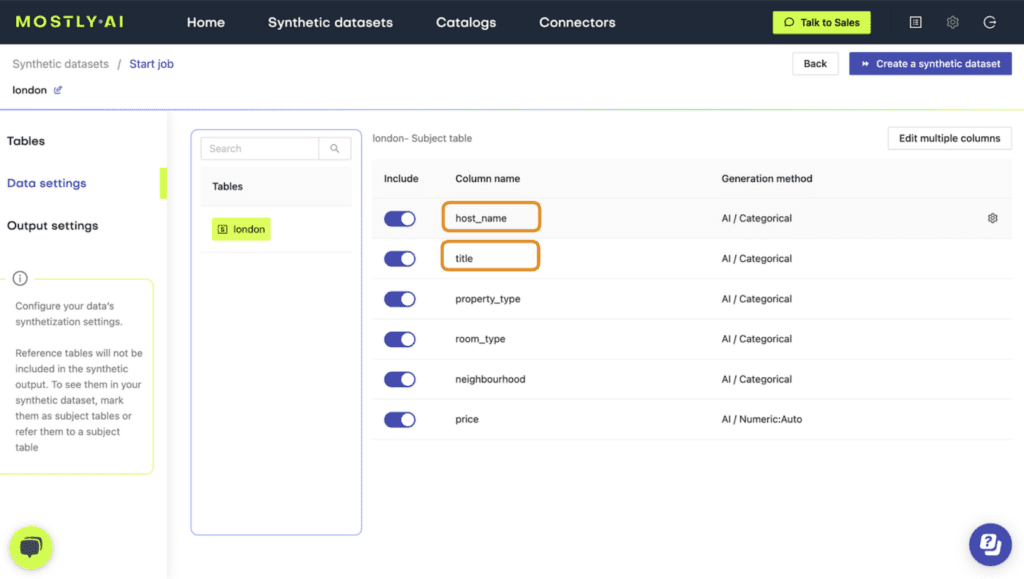

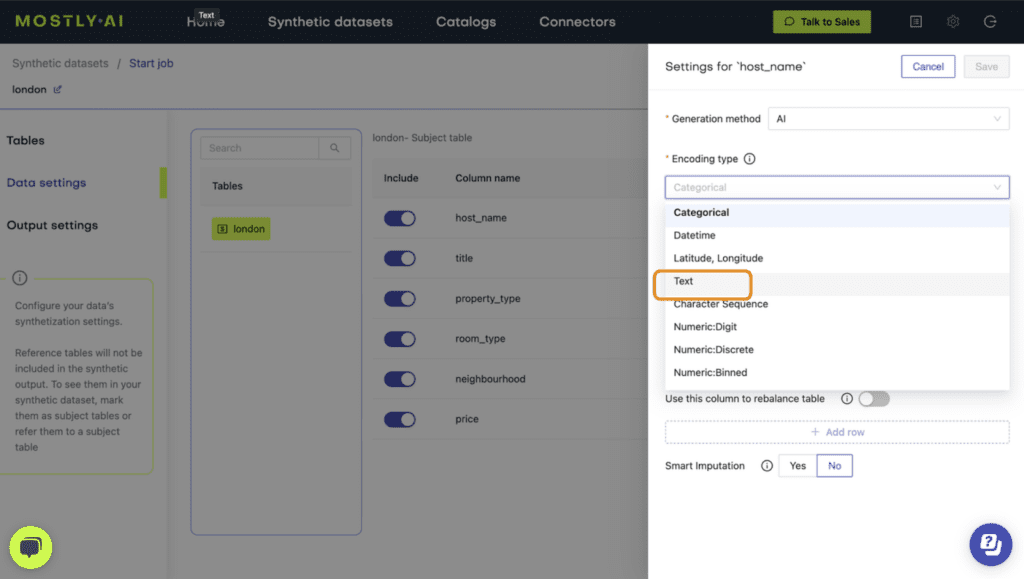

- Navigate to “Data Settings” in order to specify which columns should be synthesized as unstructured text data.

- Click on the host_name and title columns and set the Generation Method to “Text”.

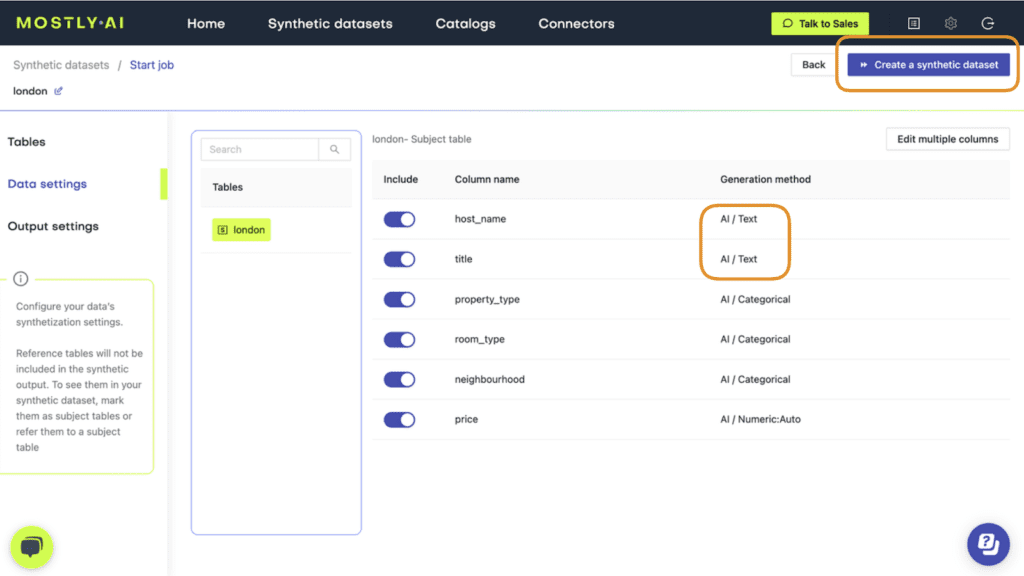

- Verify that both columns are set to Generation Method “AI / Text” and then launch the job by clicking “Create a synthetic dataset”. Synthetic text generation is compute-intensive, so this may take up to an hour to complete.

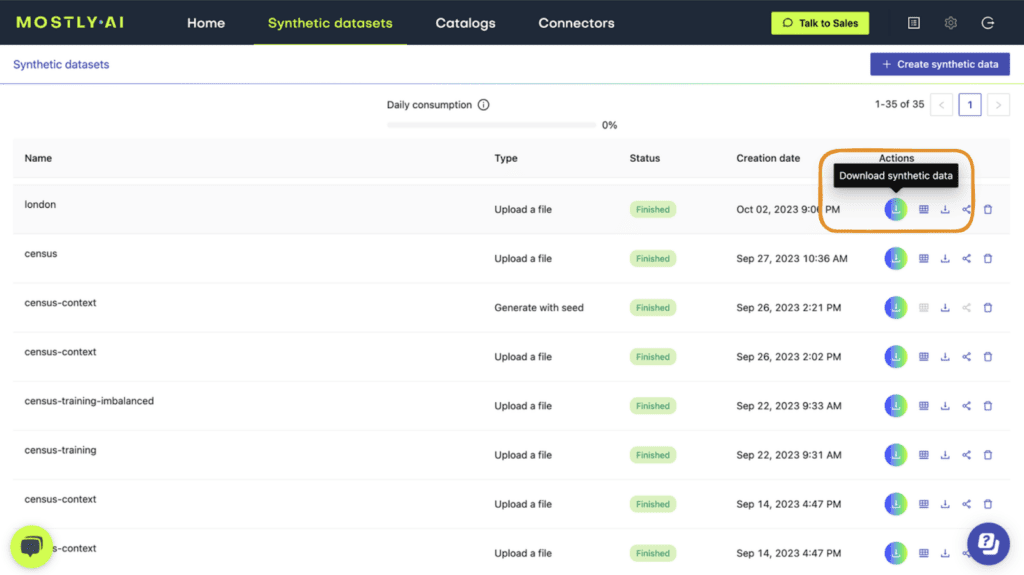

- Once completed, download the synthetic dataset as CSV.

And that’s it! You have successfully synthesized unstructured text data using MOSTLY AI.

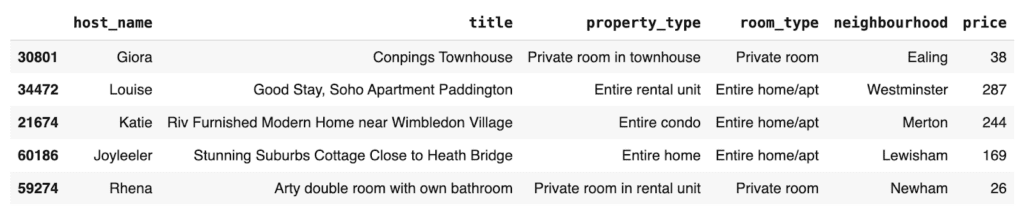

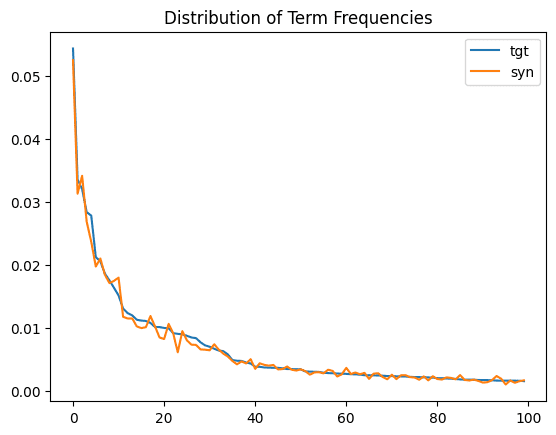

You can now poke around the synthetic data you’ve created, for example, by sampling 5 random records:

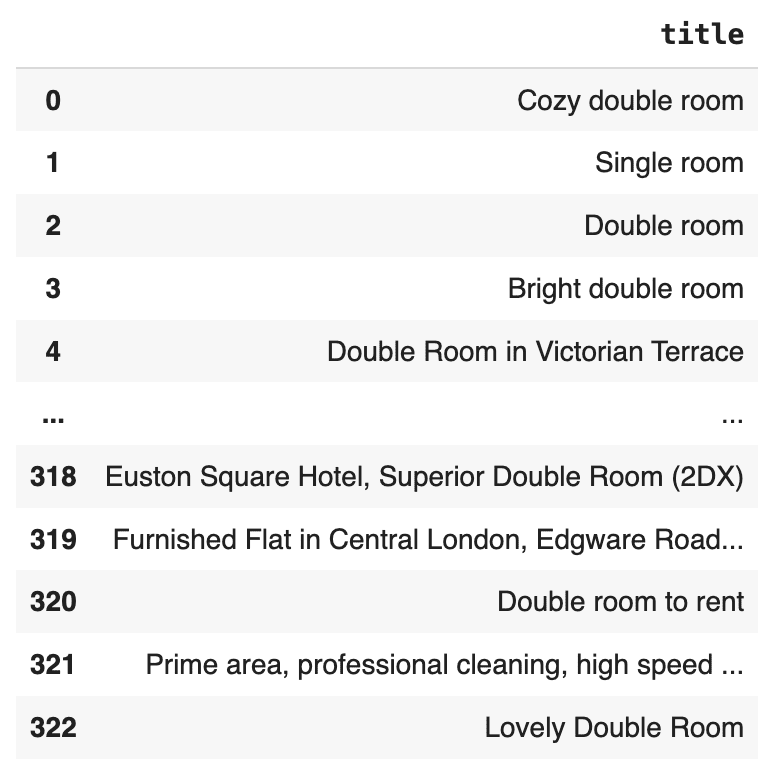

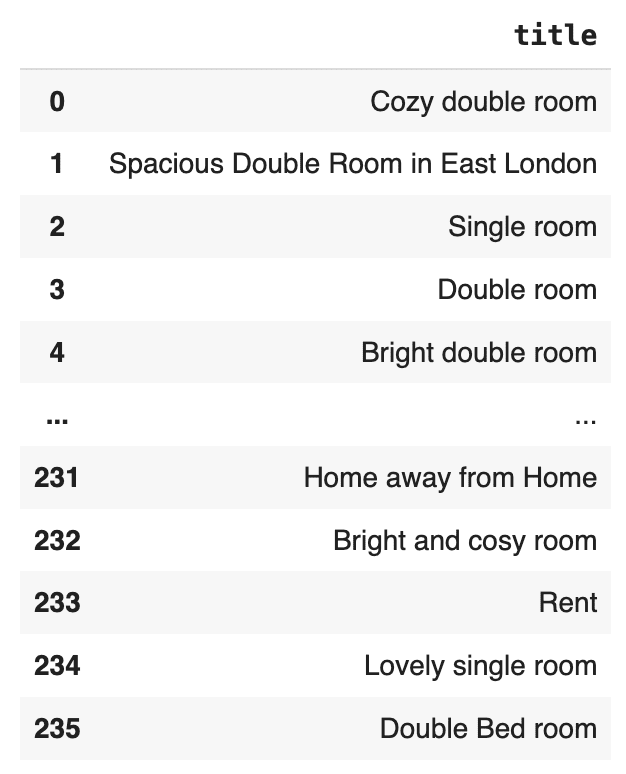

syn.sample(n=5)

And compare this to 5 random records sampled from the original dataset:

But of course, you shouldn’t just take our word for the fact that this is high-quality synthetic data. Let’s be a little more critical and evaluate the data quality in more detail in the next section.

Evaluate statistical integrity

Let’s take a closer look at how statistically representative the synthetic text data is compared to the original text. Specifically, we’ll investigate four different aspects of the synthetic data: (1) the character set, (2) the character and (3) term frequency distributions, and (4) the term co-occurrence. We’ll explain the technical terms in each section.

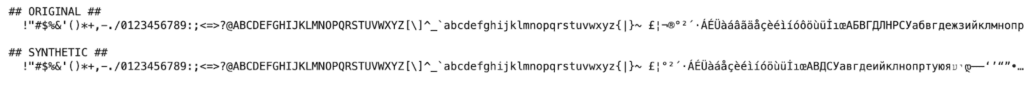

Character set

Let’s start by taking a look at the set of all characters that occur in both the original and synthetic text. We would expect to see a strong overlap between the two sets, indicating that the same kinds of characters that appear in the original dataset also appear in the synthetic version.

The code below generates the set of characters for the original and synthetic versions of the title column:

print(

"## ORIGINAL ##\n",

"".join(sorted(list(set(tgt["title"].str.cat(sep=" "))))),

"\n",

)

print(

"## SYNTHETIC ##\n",

"".join(sorted(list(set(syn["title"].str.cat(sep=" "))))),

"\n",

)

The output is quite long and is best inspected by running the code in the notebook yourself.

We see a perfect overlap in the character set for all characters up until the “£” symbol. These are all the most commonly used characters. This is a good first sign that the synthetic data contains the right kinds of characters.

From the “£” symbol onwards, you will note that the character set of the synthetic data is shorter. This is expected and is due to the privacy mechanism called rare category protection within the MOSTLY AI platform, which removes very rare tokens in order to prevent their presence giving away information on the existence of individual records in the original dataset.

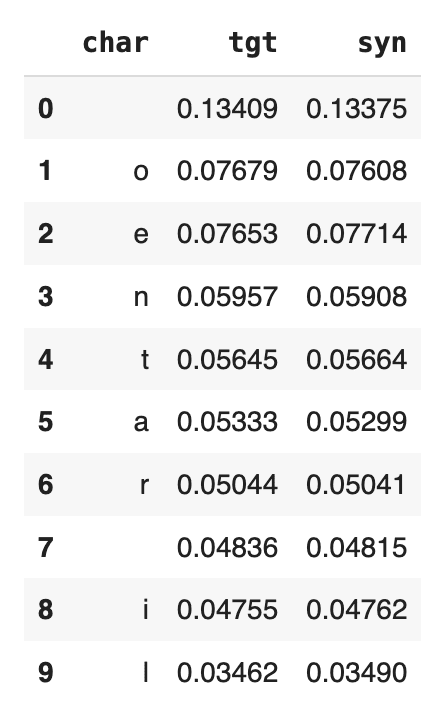

Character frequency distribution

Next, let’s take a look at the character frequency distribution: how many times each letter shows up in the dataset. Again, we will compare this statistical property between the original and synthetic text data in the title column.

The code below creates a list of all characters that occur in the datasets along with the percentage that character constitutes of the whole dataset:

title_char_freq = (

pd.merge(

tgt["title"]

.str.split("")

.explode()

.value_counts(normalize=True)

.to_frame("tgt")

.reset_index(),

syn["title"]

.str.split("")

.explode()

.value_counts(normalize=True)

.to_frame("syn")

.reset_index(),

on="index",

how="outer",

)

.rename(columns={"index": "char"})

.round(5)

)

title_char_freq.head(10)

We see that “o” and “e” are the 2 most common characters (after the whitespace character), both showing up a little more than 7.6% of the time in the original dataset. If we inspect the syn column, we see that the percentages match up nicely. There are about as many “o”s and “e”s in the synthetic dataset as there are in the original now. And this goes for the other characters in the list as well.

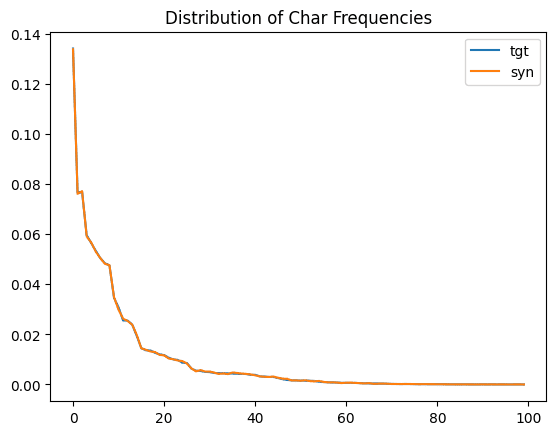

For a visualization of all the distributions of the 100 most common characters, you can run the code below:

import matplotlib.pyplot as plt

ax = title_char_freq.head(100).plot.line()

plt.title('Distribution of Char Frequencies')

plt.show()

We see that the original distribution (in light blue) and the synthetic distribution (orange) are almost identical. This is another important confirmation that the statistical properties of the original text data are being preserved during the synthetic text generation.

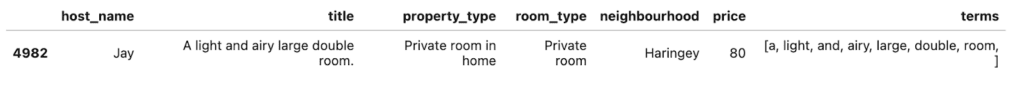

Term frequency distribution

Let’s now do the same exercise we did above but with words (or “terms”) instead of characters. We will look at the term frequency distribution: how many times each term shows up in the dataset and how this compares across the synthetic and original datasets.

The code below performs some data cleaning and then performs the analysis and displays the 10 most frequently used terms.

import re

def sanitize(s):

s = str(s).lower()

s = re.sub('[\\,\\.\\)\\(\\!\\"\\:\\/]', " ", s)

s = re.sub("[ ]+", " ", s)

return s

tgt["terms"] = tgt["title"].apply(lambda x: sanitize(x)).str.split(" ")

syn["terms"] = syn["title"].apply(lambda x: sanitize(x)).str.split(" ")

title_term_freq = (

pd.merge(

tgt["terms"]

.explode()

.value_counts(normalize=True)

.to_frame("tgt")

.reset_index(),

syn["terms"]

.explode()

.value_counts(normalize=True)

.to_frame("syn")

.reset_index(),

on="index",

how="outer",

)

.rename(columns={"index": "term"})

.round(5)

)

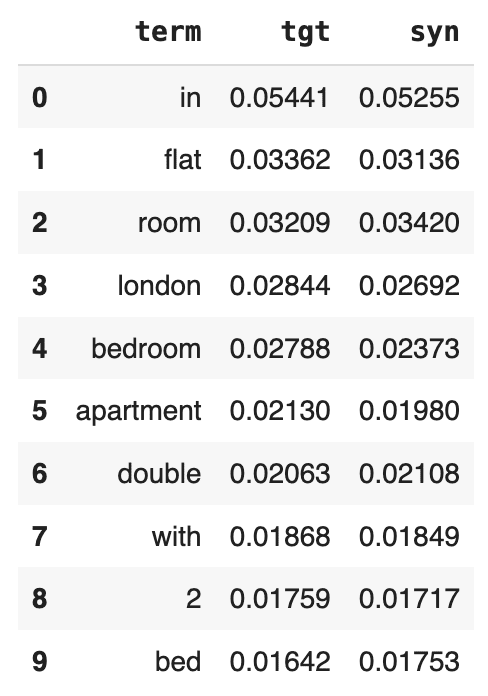

display(title_term_freq.head(10))

You can also take a look at the 10 least-common words:

display(title_term_freq.head(200).tail(10))

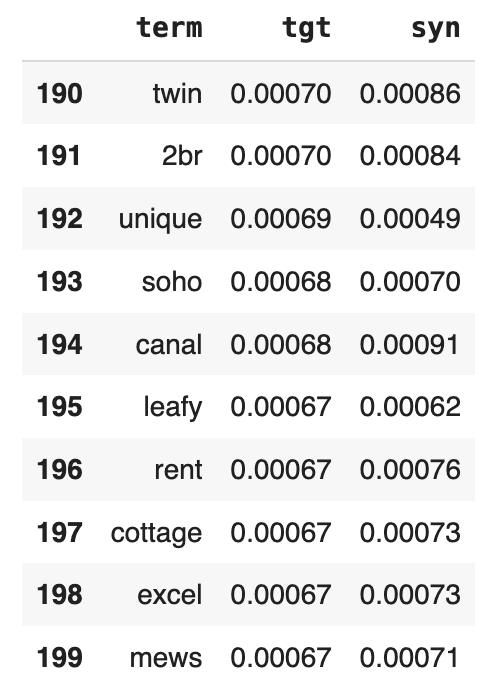

And again, plot the entire distribution for a comprehensive overview:

ax = title_term_freq.head(100).plot.line()

plt.title('Distribution of Term Frequencies')

plt.show()

Just as we saw above with the character frequency distribution, we see a close match between the original and synthetic term frequency distributions. The statistical properties of the original dataset are being preserved.

Term co-occurrence

As a final statistical test, let’s take a look at the term co-occurrence: how often a word appears in a given listing title given the presence of another word. For example, how many words that contain the word “heart” also contain the word “London”?

The code below defines a helper function to calculate the term co-occurrence given two words:

def calc_conditional_probability(term1, term2):

tgt_beds = tgt["title"][

tgt["title"].str.lower().str.contains(term1).fillna(False)

]

syn_beds = syn["title"][

syn["title"].str.lower().str.contains(term1).fillna(False)

]

tgt_beds_double = tgt_beds.str.lower().str.contains(term2).mean()

syn_beds_double = syn_beds.str.lower().str.contains(term2).mean()

print(

f"{tgt_beds_double:.0%} of actual Listings, that contain `{term1}`, also contain `{term2}`"

)

print(

f"{syn_beds_double:.0%} of synthetic Listings, that contain `{term1}`, also contain `{term2}`"

)

print("")Let’s run this function for a few different examples of word combinations:

calc_conditional_probability('bed', 'double')

calc_conditional_probability('bed', 'king')

calc_conditional_probability('heart', 'london')

calc_conditional_probability('london', 'heart')14% of actual Listings, that contain `bed`, also contain `double`

13% of synthetic Listings, that contain `bed`, also contain `double`

7% of actual Listings, that contain `bed`, also contain `king`

6% of synthetic Listings, that contain `bed`, also contain `king`

28% of actual Listings, that contain `heart`, also contain `london`

26% of synthetic Listings, that contain `heart`, also contain `london`

4% of actual Listings, that contain `london`, also contain `heart`

4% of synthetic Listings, that contain `london`, also contain `heart`

Once again, we see that the term co-occurrences are being accurately preserved (with some minor variation) during the process of generating the synthetic text.

Now you might be asking yourself: if all of these characteristics are maintained, what are the chances that we'll end up with exact matches, i.e., synthetic records with the exact same title value as a record in the original dataset? Or perhaps even a synthetic record with the exact same values for all the columns?

Let's start by trying to find an exact match for 1 specific synthetic title value. Choose a title_value from the original title column and then use the code below to search for an exact match in the synthetic title column.

title_value = "Airy large double room"

tgt.loc[tgt["title"].str.contains(title_value, case=False, na=False)]

We see that there is an exact (partial) match in this case. Depending on the value you choose, you may or may not find an exact match. But how big of a problem is it that we find an exact partial match? Is this a sign of a potential privacy breach? It’s hard to tell from a single row-by-row validation, and, more importantly, this process doesn't scale very well to the 71K rows in the dataset.

Evaluate the privacy of synthetic text

Let's perform a more comprehensive check for privacy by looking for exact matches between the synthetic and the original.

To do that, first split the original data into two equally-sized sets and measure the number of matches between those two sets:

n = int(tgt.shape[0]/2)

pd.merge(tgt[['title']][:n].drop_duplicates(), tgt[['title']][n:].drop_duplicates())

This is interesting. There are 323 cases of duplicate title values in the original dataset itself. This means that the appearance of one of these duplicate title values in the synthetic dataset would not point to a single record in the original dataset and therefore does not constitute a privacy concern.

What is important to find out here is whether the number of exact matches between the synthetic dataset and the original dataset exceeds the number of exact matches within the original dataset itself.

Let’s find out.

Take an equally-sized subset of the synthetic data, and again measure the number of matches between that set and the original data:

pd.merge(

tgt[["title"]][:n].drop_duplicates(), syn[["title"]][:n].drop_duplicates()

)

There are 236 exact matches between the synthetic dataset and the original, but significantly less than the number of exact matches that exist within the original dataset itself. Moreover, we can see that they occur only for the most commonly used descriptions.

It’s important to note that matching values or matching complete records are by themselves not a sign of a privacy leak. They are only an issue if they occur more frequently than we would expect based on the original dataset. Also note that removing those exact matches via post-processing would actually have a detrimental contrary effect. The absence of a value like "Lovely single room" in a sufficiently large synthetic text corpus would, in this case, actually give away the fact that this sentence was present in the original. See our peer-reviewed academic paper for more context on this topic.

Correlations between text and other columns

So far, we have inspected the statistical quality and privacy preservation of the synthesized text column itself. We have seen that both the statistical properties and the privacy of the original dataset are carefully maintained.

But what about the correlations that exist between the text columns and other columns in the dataset? Are these correlations also maintained during synthesization?

Let’s take a look by inspecting the relationship between the title and price columns. Specifically, we will look at the median price of listings that contain specific words that we would expect to be associated with a higher (e.g., “luxury”) or lower (e.g., “small”) price. We will do this for both the original and synthetic datasets and compare.

The code below prepares the data and defines a helper function to print the results:

tgt_term_price = (

tgt[["terms", "price"]]

.explode(column="terms")

.groupby("terms")["price"]

.median()

)

syn_term_price = (

syn[["terms", "price"]]

.explode(column="terms")

.groupby("terms")["price"]

.median()

)

def print_term_price(term):

print(

f"Median Price of actual Listings, that contain `{term}`: ${tgt_term_price[term]:.0f}"

)

print(

f"Median Price of synthetic Listings, that contain `{term}`: ${syn_term_price[term]:.0f}"

)

print("")Let’s then compare the median price for specific terms across the two datasets:

print_term_price("luxury")

print_term_price("stylish")

print_term_price("cozy")

print_term_price("small")Median Price of actual Listings, that contain `luxury`: $180

Median Price of synthetic Listings, that contain `luxury`: $179

Median Price of actual Listings, that contain `stylish`: $134

Median Price of synthetic Listings, that contain `stylish`: $140

Median Price of actual Listings, that contain `cozy`: $70

Median Price of synthetic Listings, that contain `cozy`: $70

Median Price of actual Listings, that contain `small`: $55

Median Price of synthetic Listings, that contain `small`: $60

We can see that correlations between the text and price features are very well retained.

Generate synthetic text with MOSTLY AI

In this tutorial, you have learned how to generate synthetic text using MOSTLY AI by simply specifying the correct Generation Method for the columns in your dataset that contain unstructured text. You have also taken a deep dive into evaluating the statistical integrity and privacy preservation of the generated synthetic text by looking at character and term frequencies, term co-occurrence, and the correlations between the text column and other features in the dataset. These statistical and privacy indicators are crucial components for creating high-quality synthetic data.

What’s next?

In addition to walking through the above instructions, we suggest experimenting with the following in order to get even more hands-on experience generating synthetic text data:

- analyzing further correlations, also for host_name

- using a different generation mood, e.g., conservative sampling. Take a look at the end of the video tutorial for a quick walkthrough.

- using a different dataset, e.g., the Austrian First Name dataset

You can also head straight to the other synthetic data tutorials:

- Evaluate synthetic data quality using downstream ML

- Optimize your training sample size for synthetic data accuracy

- Rebalancing your data for ML classification problems

- Perform conditional data generation

- Explainable AI with synthetic data

- How to generate synthetic data

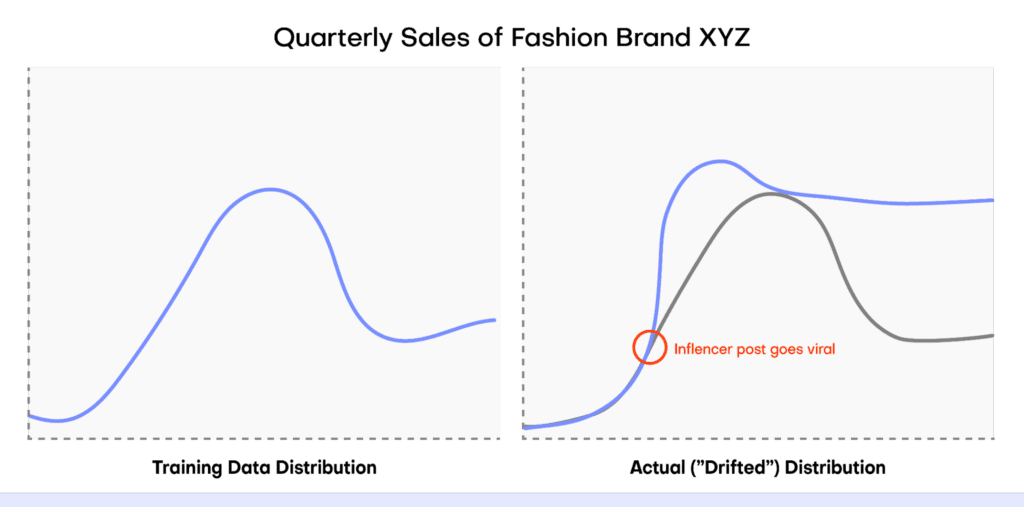

In this tutorial, you will learn how to perform conditional data generation. As the name suggests, this method of synthetic data generation is useful when you want to have more fine-grained control over the statistical distributions of your synthetic data by setting certain conditions in advance. This can be useful across a range of use cases, such as performing data simulation, tackling data drift, or when you want to retain certain columns as they are during synthesization.

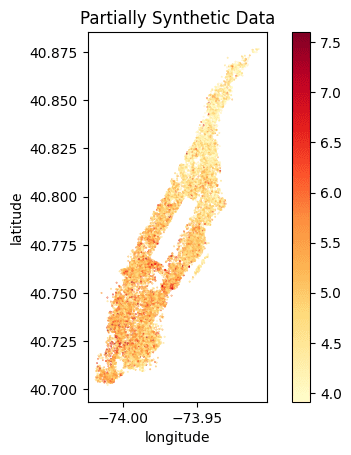

You will work through two use cases in this tutorial. In the first use case, you will be working with the UCI Adult Income dataset in order to simulate what this dataset would look like if there was no gender income gap. In the second use case, you will be working with Airbnb accommodation data for Manhattan, which contains geolocation coordinates for each accommodation.

To gain useful insights from this dataset, this geolocation data will need to remain exactly as it is in the original dataset during synthesization. In both cases, you will end up with data that is partially pre-determined by the user (to either remain in its original form or follow a specific distribution) and partially synthesized.

It’s important to note that the synthetic data you generate using conditional data generation is still statistically representative within the conditional context that you’ve created. The degree of privacy preservation of the resulting synthetic dataset is largely dependent on the privacy of the provided fixed attributes.

The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

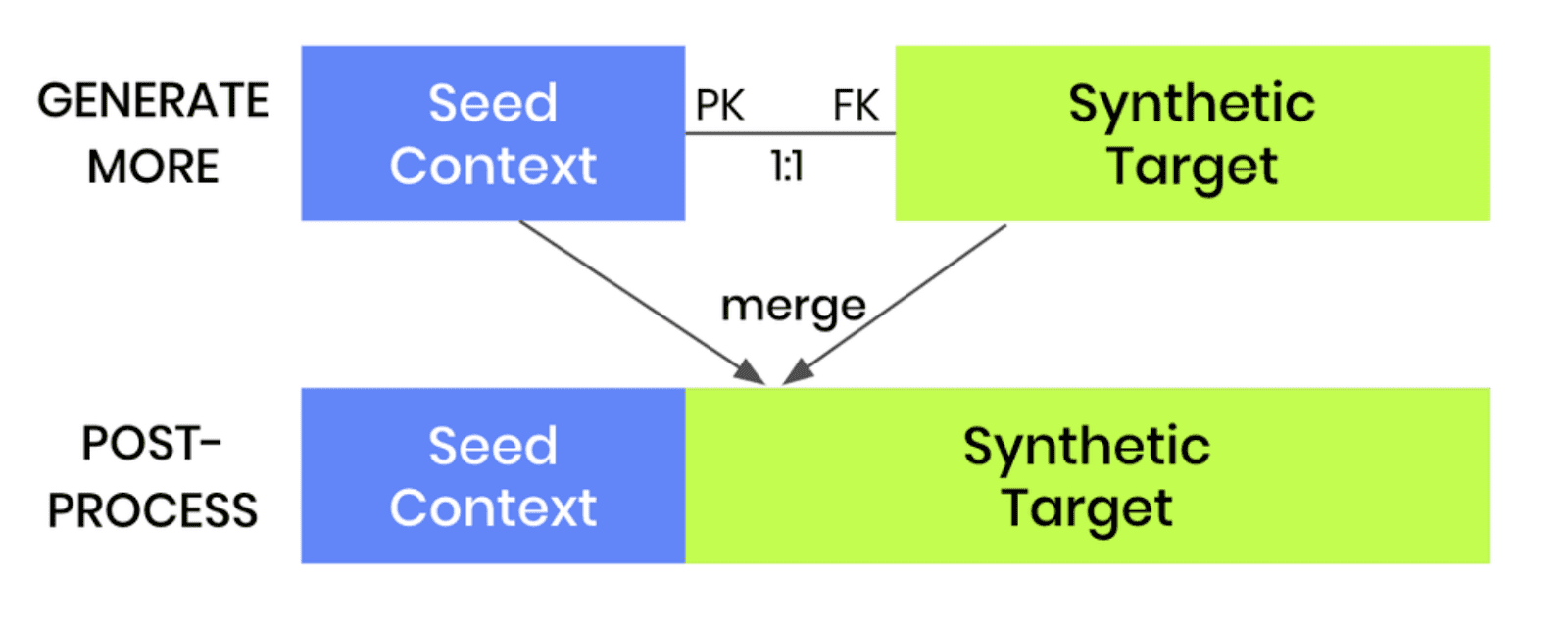

How does conditional data generation work?

We’ve broken down the process of conditional data generation with MOSTLY AI into 5 steps below. We’ll describe the steps here first, and in the following sections, you will get a chance to implement them yourself.

- We’ll start by splitting the original data table into two tables. The first table should contain all the columns that you want to hold fixed, the conditions based on which you want to generate your partially synthetic data. The second table will contain all the other columns, which will be synthesized within the context of the first table.

- We’ll then define the relationship between these two tables. The first table (containing the context data) should be set as a subject table, and the second table (containing the target data to be synthesized) as a linked table.

- Next, we will train a MOSTLY AI synthetic data generation model using this two-table setup. Note that this is just an intermediate step that will, in fact, create fully synthetic data since we are using the full original dataset (just split into two) and have not set any specific conditions yet.

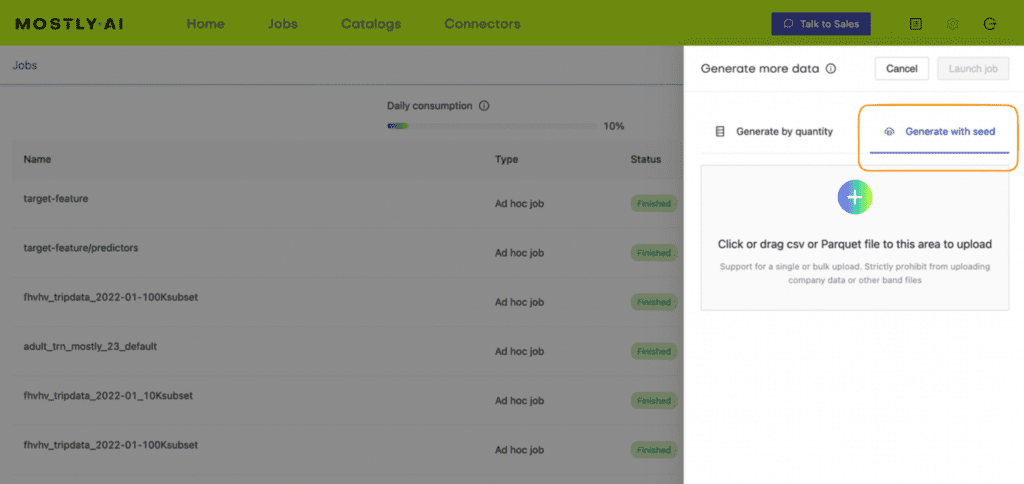

- Once the model is trained, we can then use it to generate more data by selecting the “Generate with seed” option. This option allows you to set conditions within which to create partially synthetic data. Any data you upload as the seed context will be used as fixed attributes that will appear as they are in the resulting synthetic dataset. Note that your seed context dataset must contain a matching ID column. The output of this step will be your synthetic target data.

- As a final post-processing step, we’ll merge the seed context with fixed attribute columns to your synthetic target data to get the complete, partially synthetic dataset created using conditional data generation.

Note that this same kind of conditional data generation can also be performed for two-table setups. The process is even easier in that case, as the pre-and post-processing steps are not required. Once a two-table model is trained, one can simply generate more data and provide a new subject table as the seed for the linked table.

Let’s see it in practice for our first use case: performing data simulation on the UCI Adult Income dataset to see what the data would look like if there was no gender income gap.

Conditional data generation for data simulation

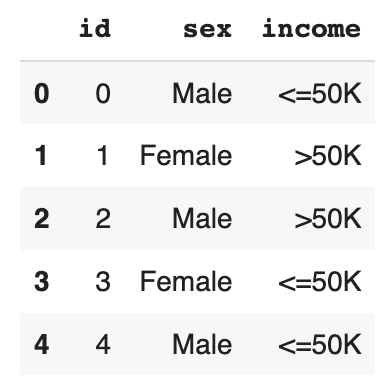

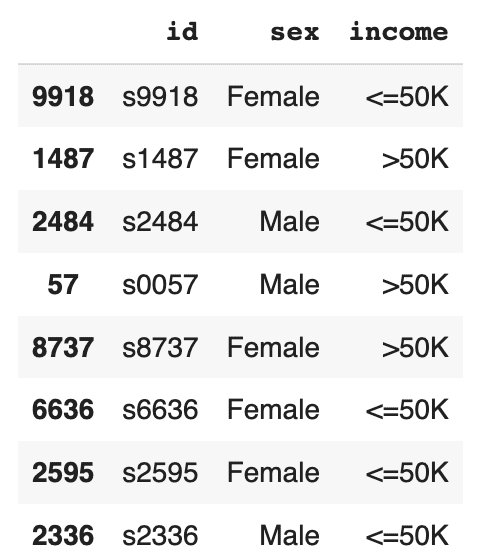

For this use case, we will be using a subset of the UCI Adult Income dataset, consisting of 10k records and 10 attributes. Our aim here is to provide a specific distribution for the sex and income columns and see how the other columns will change based on these predetermined conditions.

Preprocess your data

As described in the steps above, your first task will be to enrich the dataset with a unique ID column and then split the data into two tables, i.e. two CSV files. The first table should contain the columns you want to control, in this case, the sex and income columns. The second table should contain the columns you want to synthesize, in this case, all the other columns.

df = pd.read_csv(f'{repo}/census.csv')

# define list of columns, on which we want to condition on

ctx_cols = ['sex', 'income']

tgt_cols = [c for c in df.columns if c not in ctx_cols]

# insert unique ID column

df.insert(0, 'id', pd.Series(range(df.shape[0])))

# persist actual context, that will be used as subject table

df_ctx = df[['id'] + ctx_cols]

df_ctx.to_csv('census-context.csv', index=False)

display(df_ctx.head())

# persist actual target, that will be used as linked table

df_tgt = df[['id'] + tgt_cols]

df_tgt.to_csv('census-target.csv', index=False)

display(df_tgt.head())

Save the resulting tables to disk as CSV files in order to upload them to MOSTLY AI in the next step. If you are working in Colab this will require an extra step (provided in the notebook) in order to download the files from the Colab server to disk.

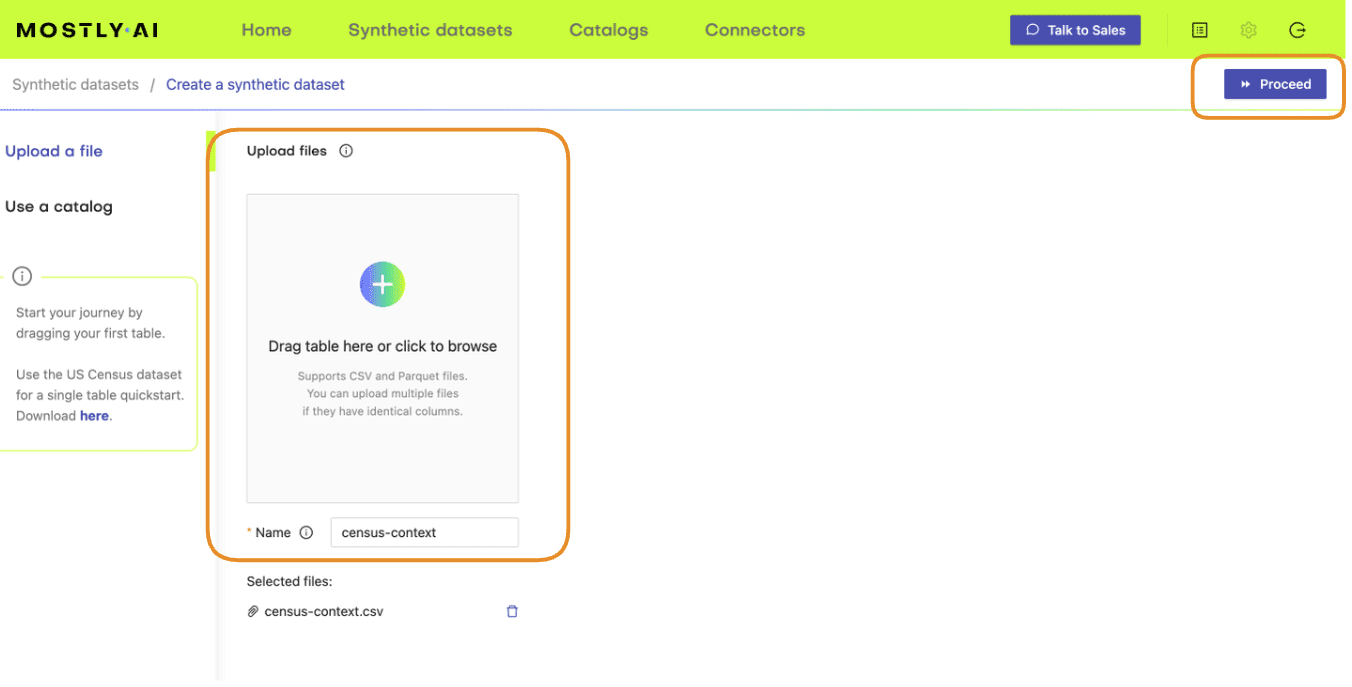

Train a generative model with MOSTLY AI

Use the CSV files you have just created to train a synthetic data generation model using MOSTLY AI.

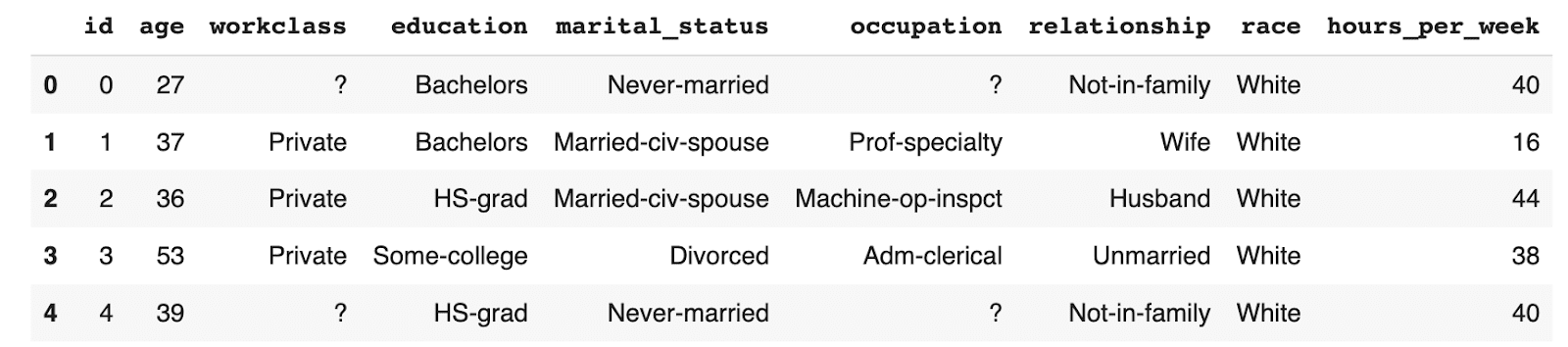

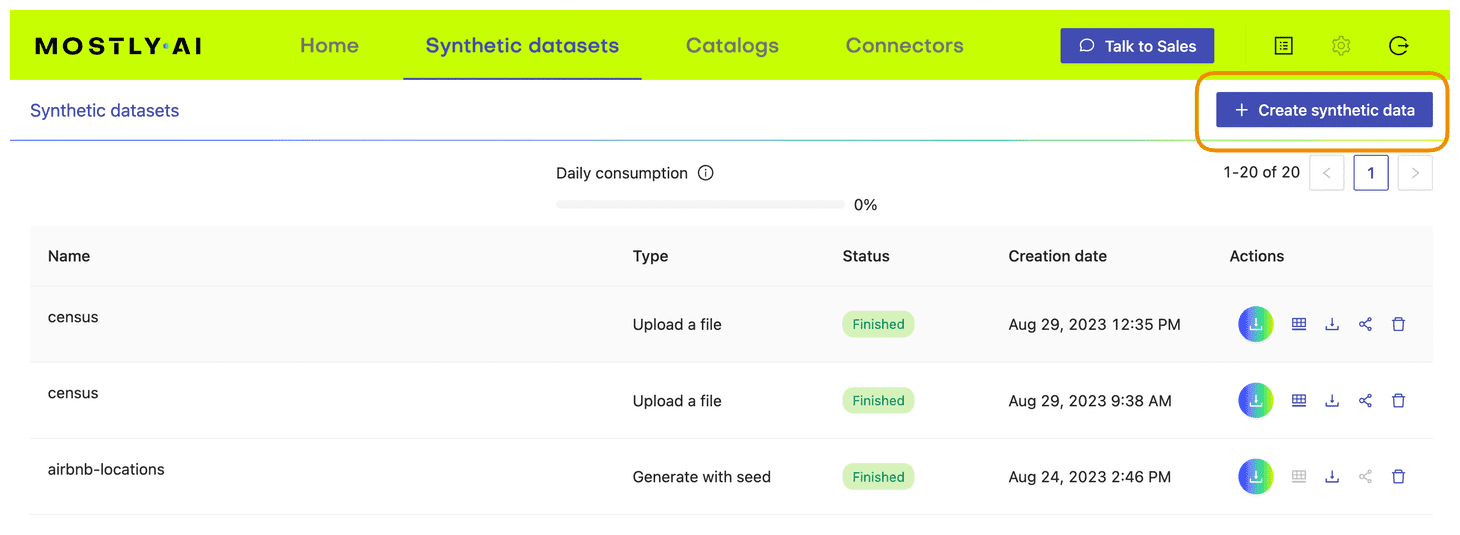

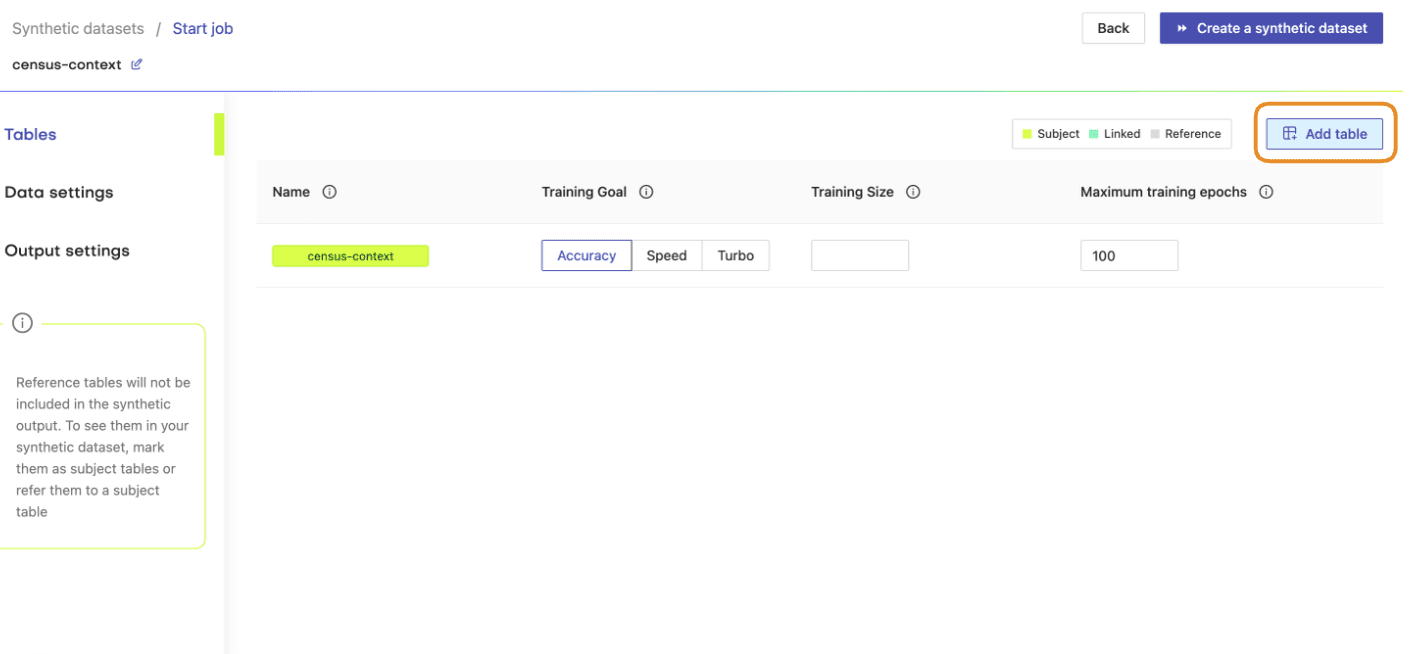

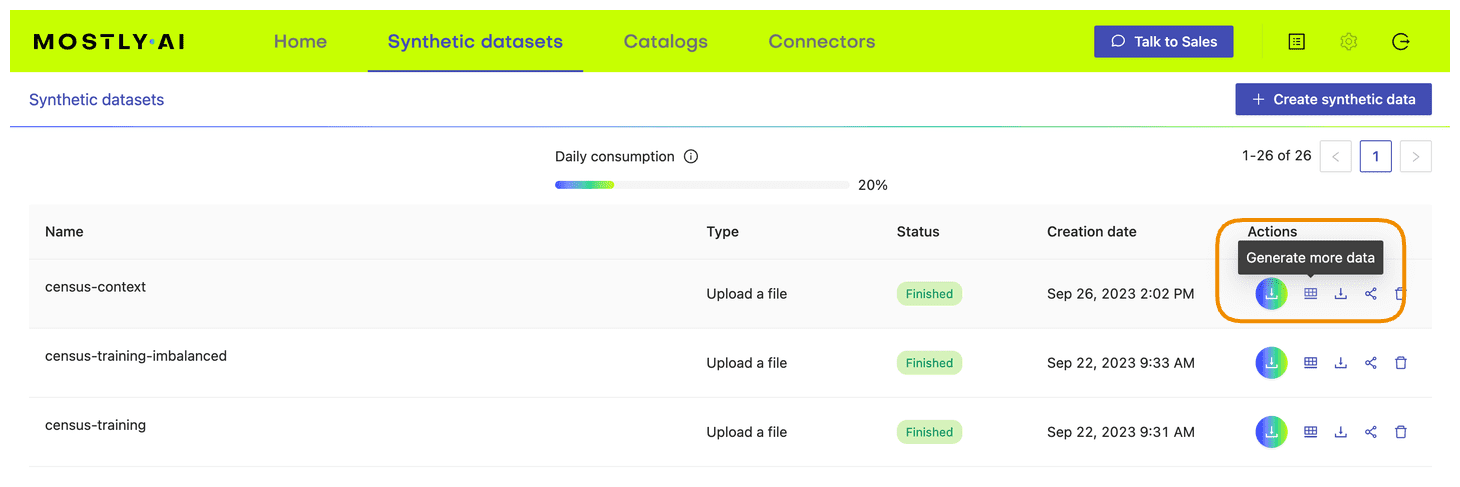

- Navigate to your MOSTLY AI account and go to the “Synthetic datasets” tab. Click on “Create synthetic data” to start a new job.

- Upload the census-context.csv (the file containing your context data).

- Once the upload is complete, click on “Add table” and upload census-target.csv (the file containing your target data) here.

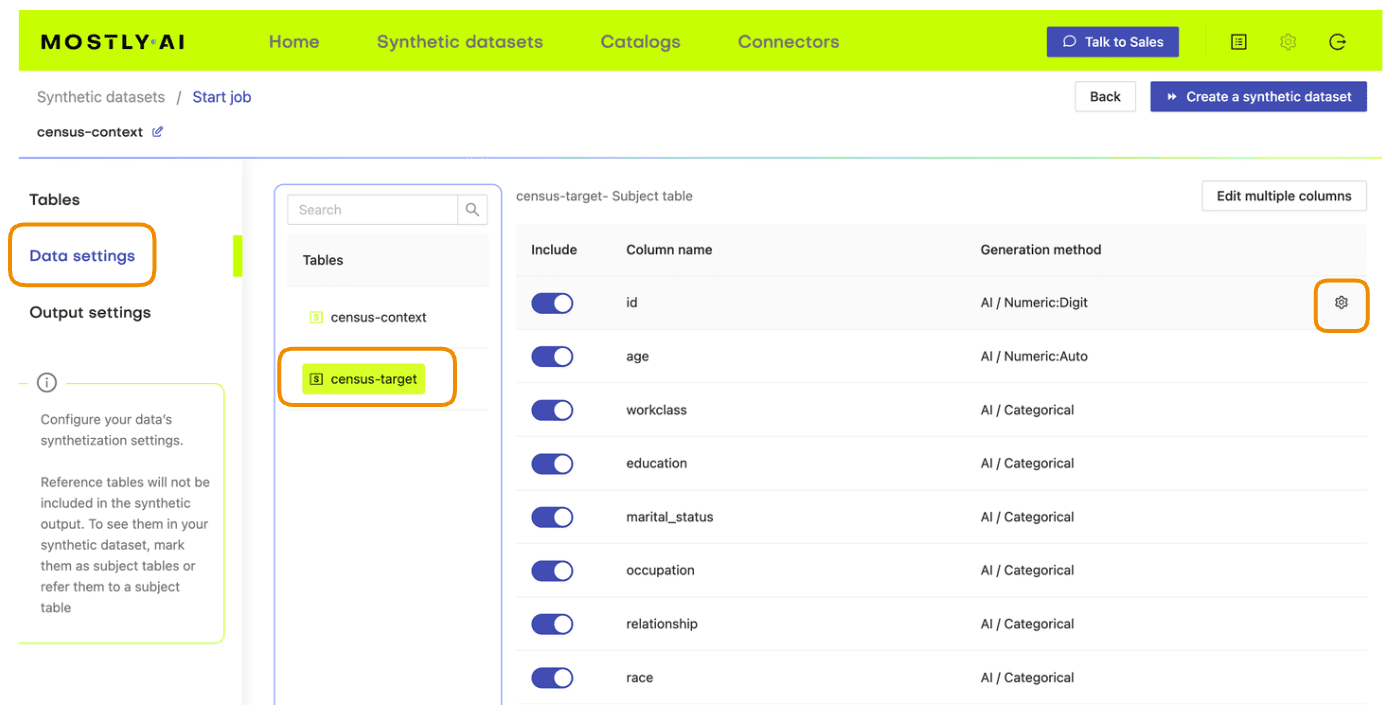

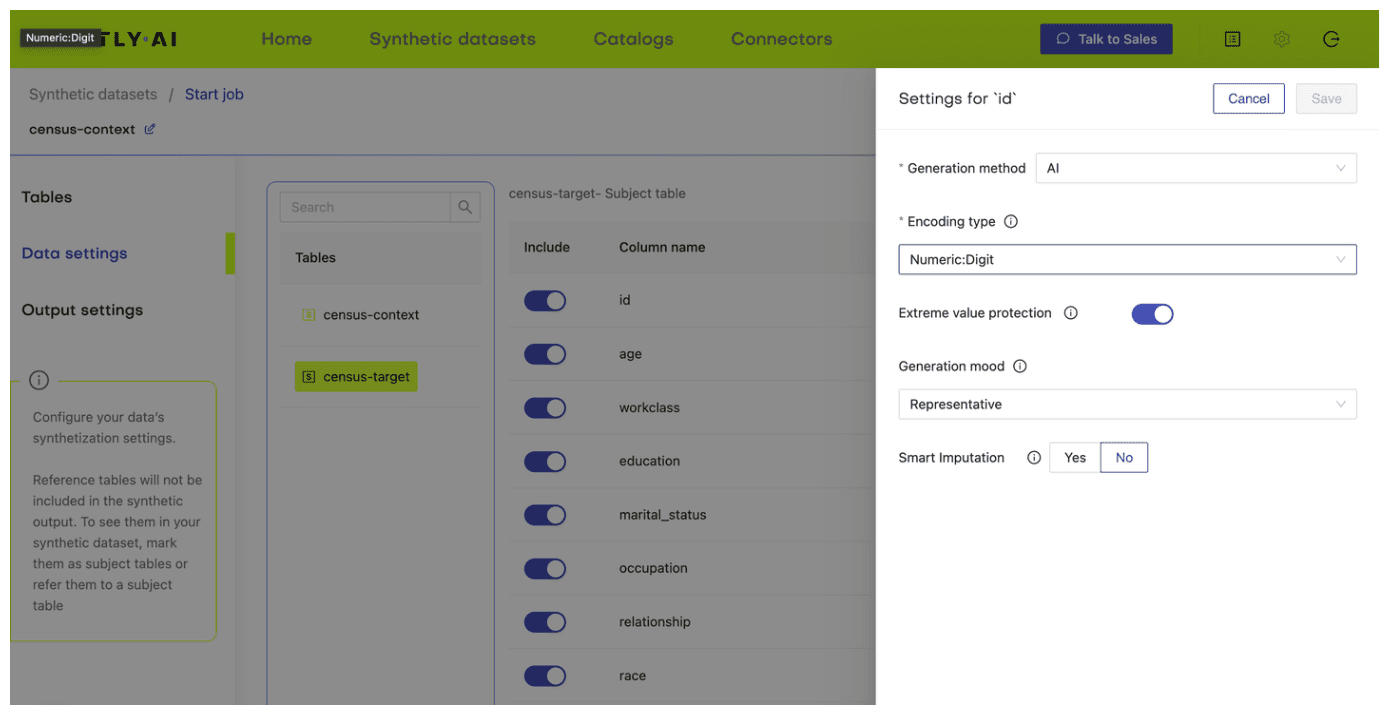

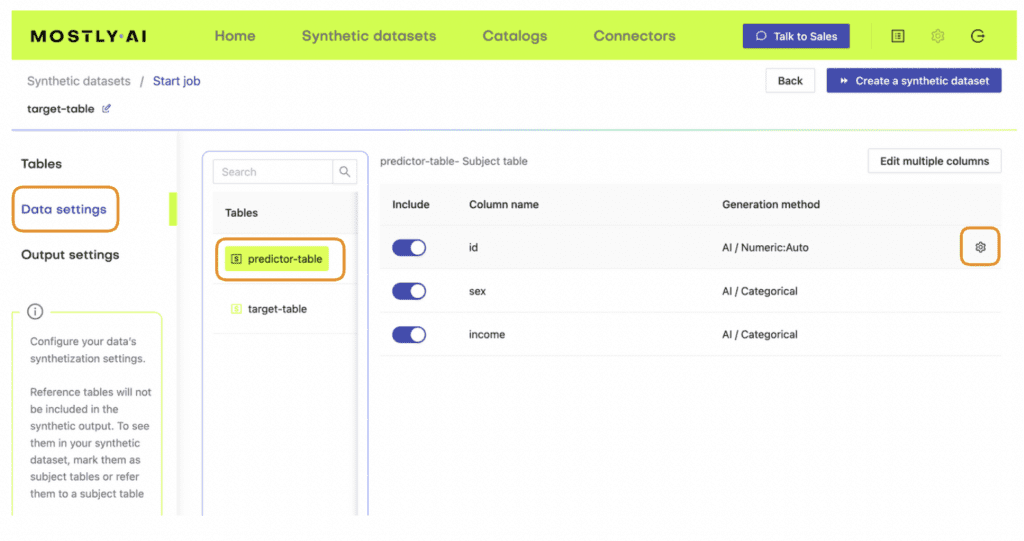

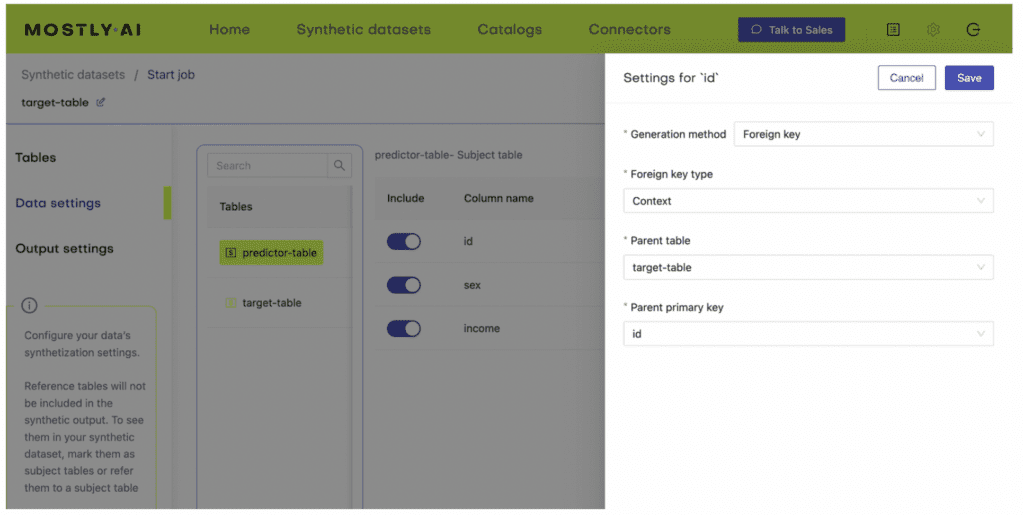

- Next, define the table relationship by navigating to “Data Settings,” selecting the ID column of the context table, and setting the following settings:

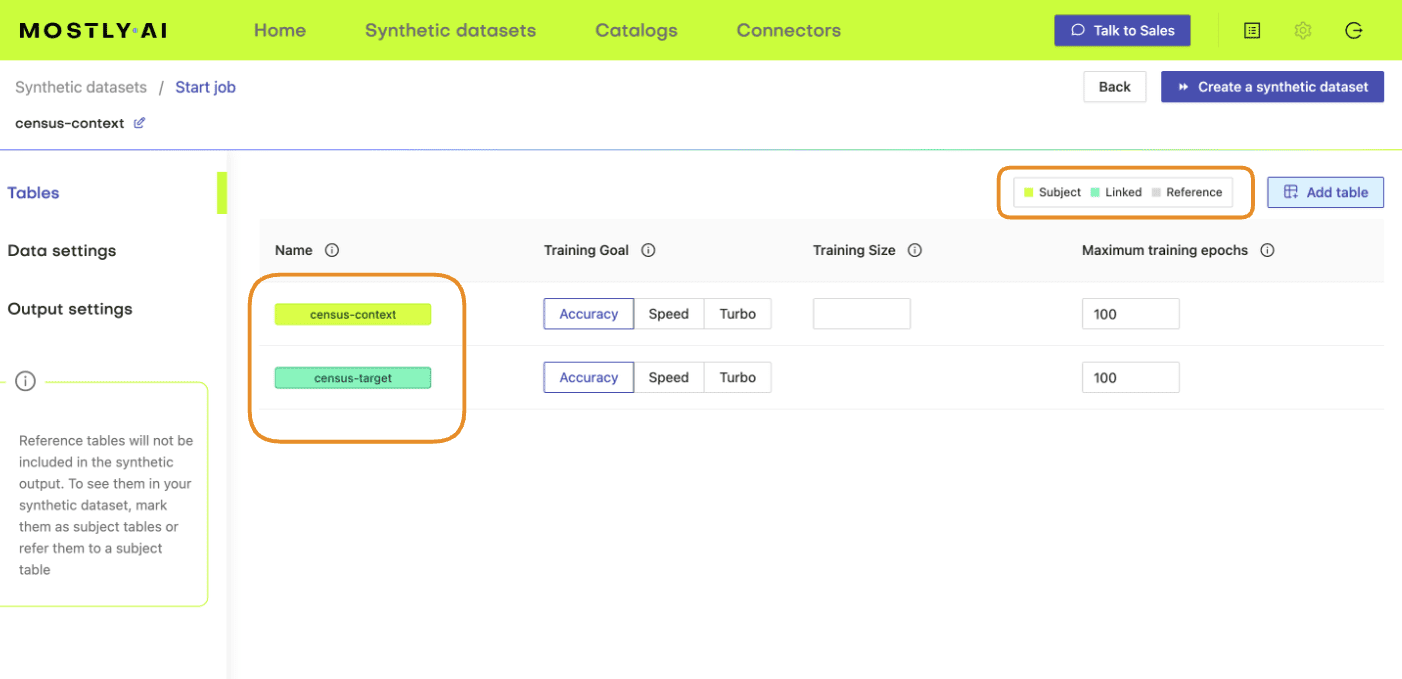

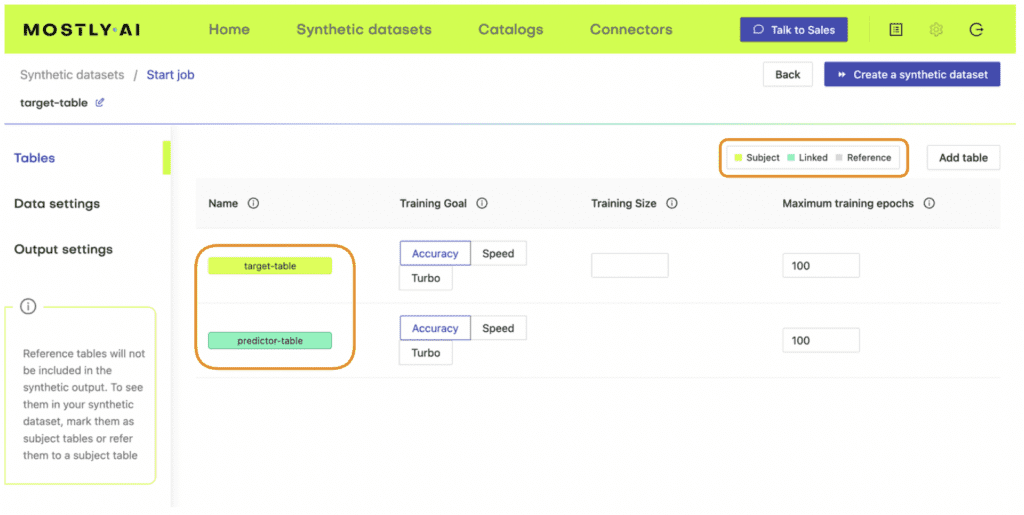

- Confirm that your census-context table is now set as the subject table and the

census-targetas linked table.

- Click “Create a synthetic dataset” to launch the job and train the model. As noted before, the resulting synthetic data is not of particular interest at the moment. We are interested in the model that is created and will use it for conditional data generation in the next section.

Conditional data generation with MOSTLY AI

Now that we have our base model, we will need to specify the conditions within which we want to create synthetic data. For this first use case, you will simulate what the dataset will look like if there was no gender income gap.

- Create a CSV file that contains the same columns as the context file but now containing the specific distributions of those variables you are interested in simulating. In this case, we’ll create a dataset containing an even split between male and female records as well as an even distribution of high- and low-income records. You can use the code block below to do this.

import numpy as np

np.random.seed(1)

n = 10_000

p_inc = (df.income=='>50K').mean()

seed = pd.DataFrame({

'id': [f's{i:04}' for i in range(n)],

'sex': np.random.choice(['Male', 'Female'], n, p=[.5, .5]),

'income': np.random.choice(['<=50K', '>50K'], n, p=[1-p_inc, p_inc]),

})

seed.to_csv('census-seed.csv', index=False)

seed.sample(8)

The resulting DataFrame contains an even income split between males and females, i.e. no gender income gap.

- Download this CSV to disk as

census-seed.csv

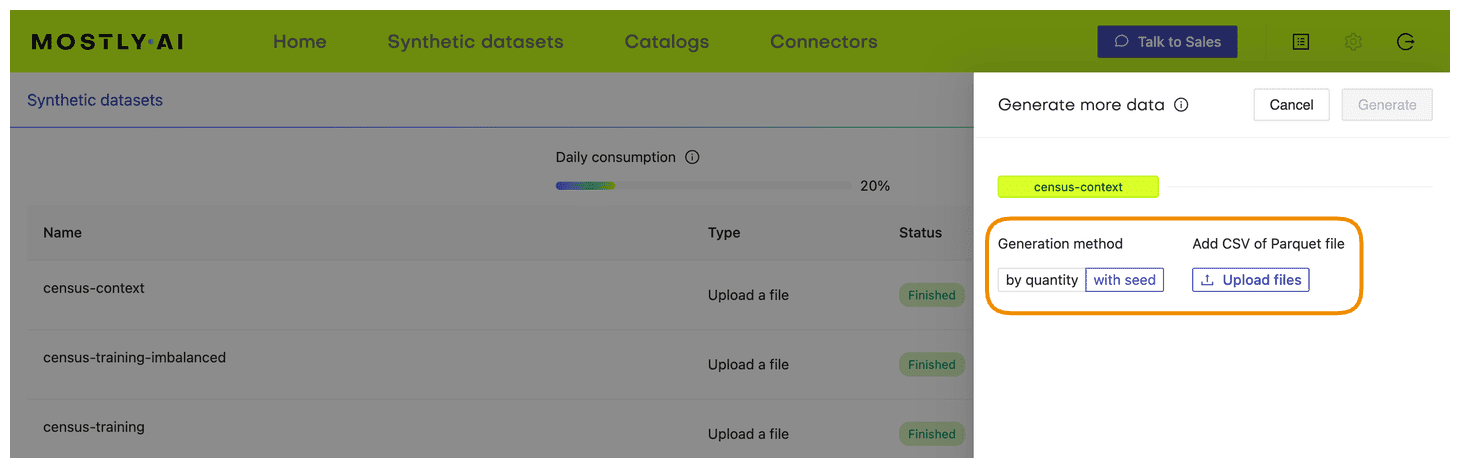

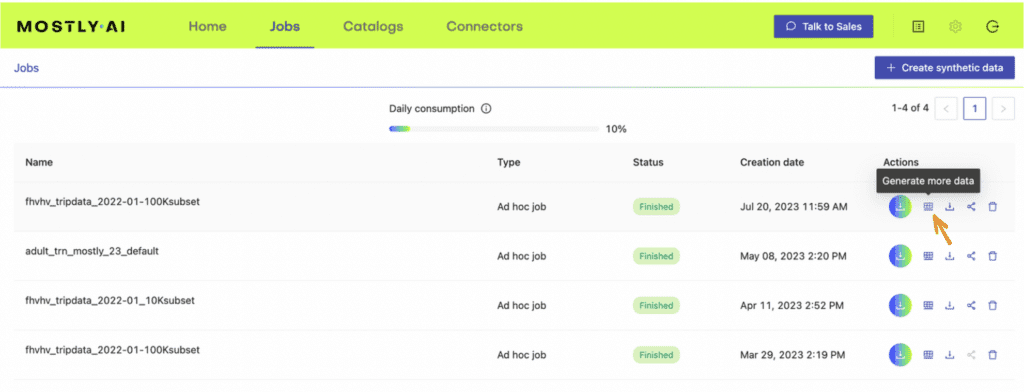

- In your MOSTLY AI account, click on the “Generate more data” button located to the right of the model that you have just trained.

- Select the “Generate with seed” option. This allows you to specify conditions that the synthesization should respect. Upload

census-seed.csvhere.

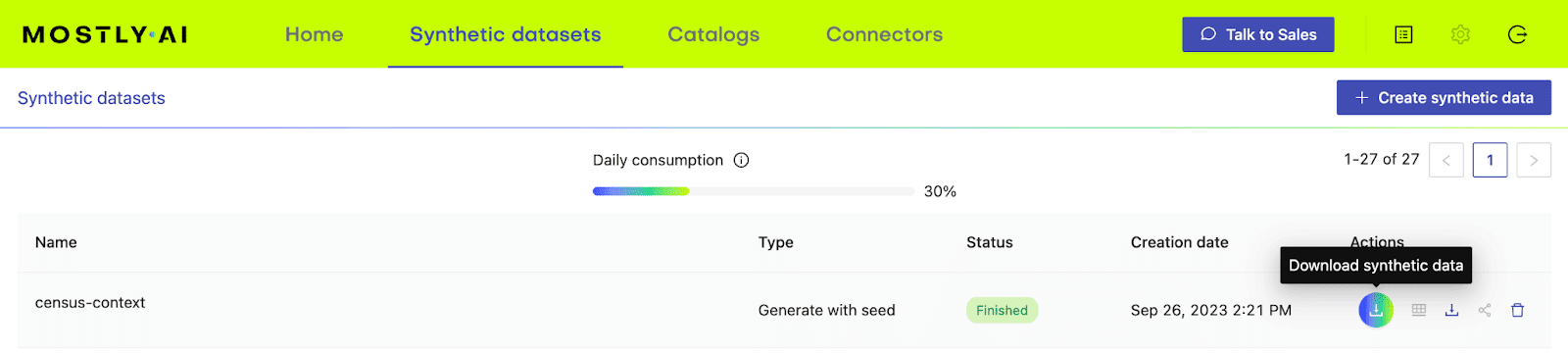

- Generate more data by clicking on “Generate”. Once completed, download the resulting synthetic dataset as CSV.

- Merge the synthetic target data to your seed context columns to get your complete, conditionally generated dataset.

# merge fixed seed with synthetic target to

# a single partially synthetic dataset

syn = pd.read_csv(syn_file_path)

syn = pd.merge(seed, syn, on='id').drop(columns='id')Explore synthetic data

Let’s take a look at the data you have just created using conditional data generation. Start by showing 10 randomly sampled synthetic records. You can run this line multiple times to see different samples.

syn.sample(n=10)

You can see that the partially synthetic dataset consists of about half male and half female records.

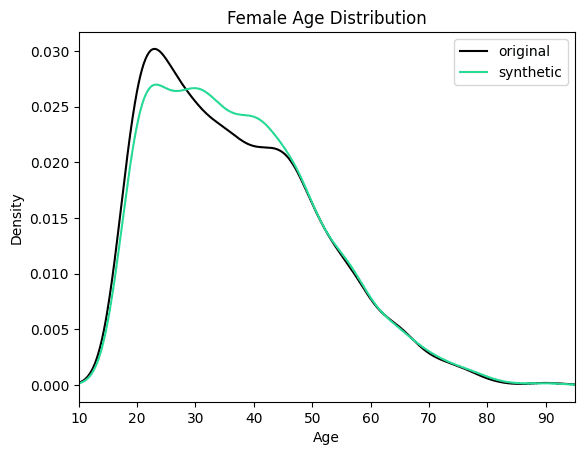

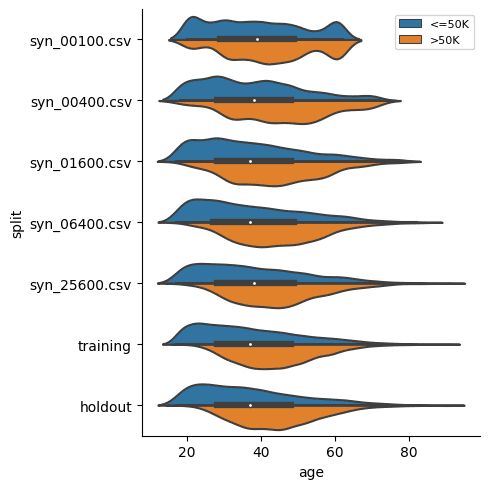

Let's now compare the age distribution of records from the original data against those from the partially synthetic data. We’ll plot both the original and synthetic distributions on a single plot to compare.

import matplotlib.pyplot as plt

plt.xlim(10, 95)

plt.title('Female Age Distribution')

plt.xlabel('Age')

df[df.sex=='Female'].age.plot.kde(color='black', bw_method=0.2)

syn[syn.sex=='Female'].age.plot.kde(color='#24db96', bw_method=0.2)

plt.legend({'original': 'black', 'synthetic': '#24db96'})

plt.show()

We can see clearly that the synthesized female records are now significantly older in order to meet the criteria of removing the gender income gap. Similarly, you can now study other shifts in the distributions that follow as a consequence of the provided seed data.

Conditional generation to retain original data

In the above use case, you customized your data generation process in order to simulate a particular, predetermined distribution for specific columns of interest (i.e. no gender income gap). In the following section, we will explore another useful application of conditional generation: retaining certain columns of the original dataset exactly as they are while letting the rest of the columns be synthesized. This can be a useful tool in situations when it is crucial to retain parts of the original dataset.

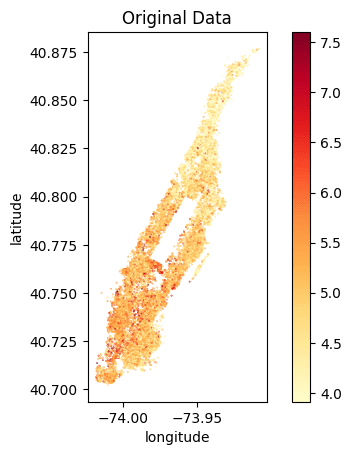

In this section, you will be working with a dataset containing the 2019 Airbnb listings in Manhattan. For this use case, it is crucial to preserve the exact locations of the listings in order to avoid situations in which the synthetic dataset contains records in impossible or irrelevant locations (in the middle of the Hudson River, for example, or outside of Manhattan entirely).

You need the ability to execute control over the location column to ensure the relevance and utility of your resulting synthetic dataset - and conditional data generation gives you exactly that level of control.

Let’s look at this type of conditional data generation in action. Since many of the steps will be the same as in the use case above, this section will be a bit more compact.

Preprocess your data

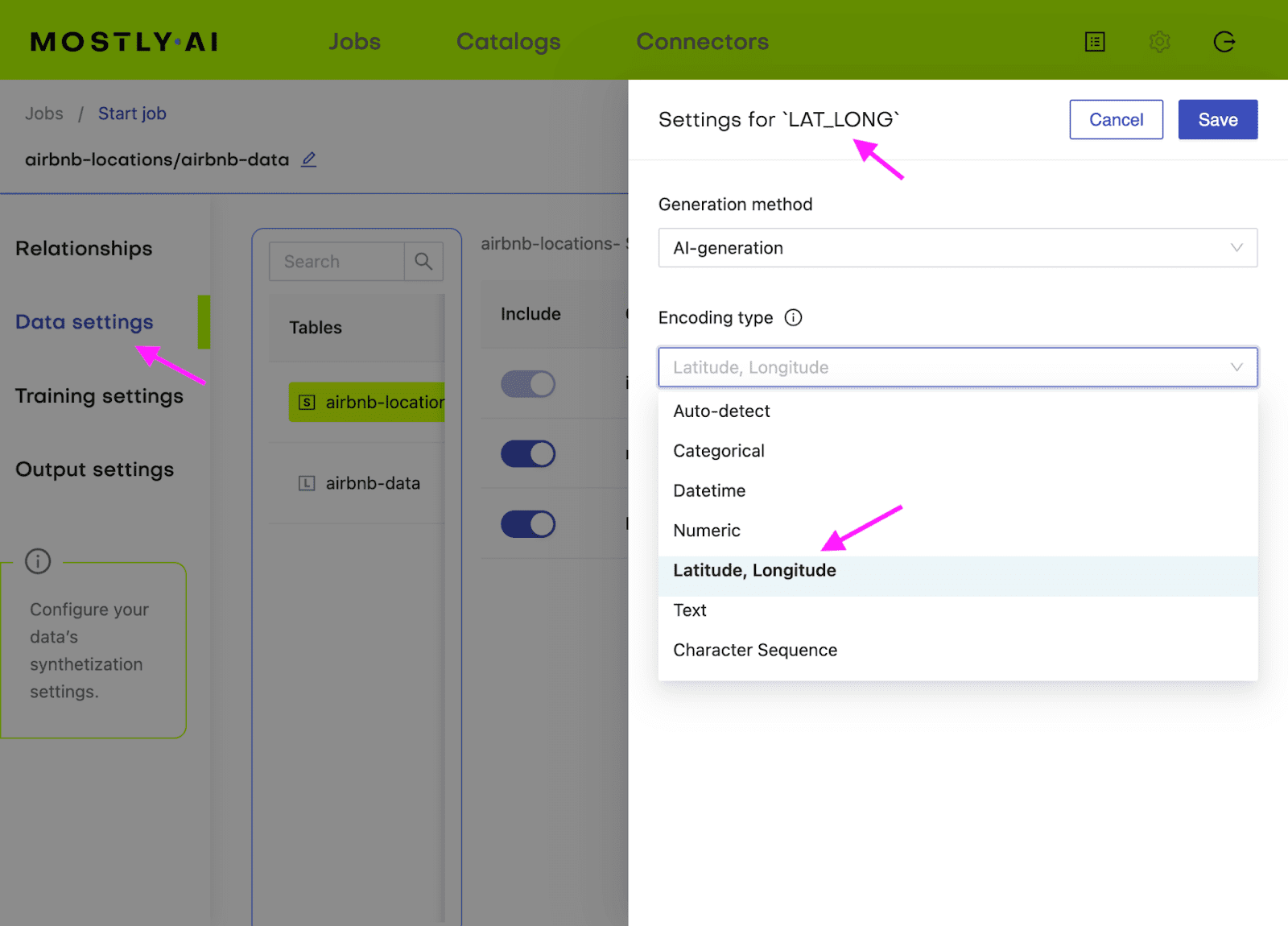

Start by enriching the DataFrame with an id column. Then split it into two DataFrames: airbnb-context.csv and airbnb-target.csv. Additionally, you will need to concatenate the latitude and longitude columns together into a single column. This is the format expected by MOSTLY AI, in order to improve its representation of geographical information.

df_orig = pd.read_csv(f'{repo}/airbnb.csv')

df = df_orig.copy()

# concatenate latitude and longitude to "LAT, LONG" format

df['LAT_LONG'] = (

df['latitude'].astype(str) + ', ' + df['longitude'].astype(str)

)

df = df.drop(columns=['latitude', 'longitude'])

# define list of columns, on which we want to condition on