Historically, synthetic data has been predominantly used to anonymize data and protect user privacy. This approach has been particularly valuable for organizations that handle vast amounts of sensitive data, such as financial institutions, telecommunications companies, healthcare providers, and government agencies. Synthetic data offers a solution to privacy concerns by generating artificial data points that maintain the same patterns and relationships as the original data but do not contain any personally identifiable information (PII).

There are several reasons why synthetic data is an effective tool for privacy use cases:

- Privacy by design: Synthetic data is generated in a way that ensures privacy is built into the process from the beginning. By creating data that closely resembles real-world data but without any PII, synthetic data allows organizations to share information without the risk of exposing sensitive information or violating privacy regulations.

- Compliance with data protection regulations: Synthetic data helps organizations adhere to data protection laws, such as the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA). Since synthetic data does not contain PII, organizations can share and analyze data without compromising user privacy or breaching regulations.

- Collaboration and data sharing: Synthetic data enables organizations to collaborate and share data more easily and securely. By using synthetic data, researchers and analysts can work together on projects without exposing sensitive information or violating privacy rules.

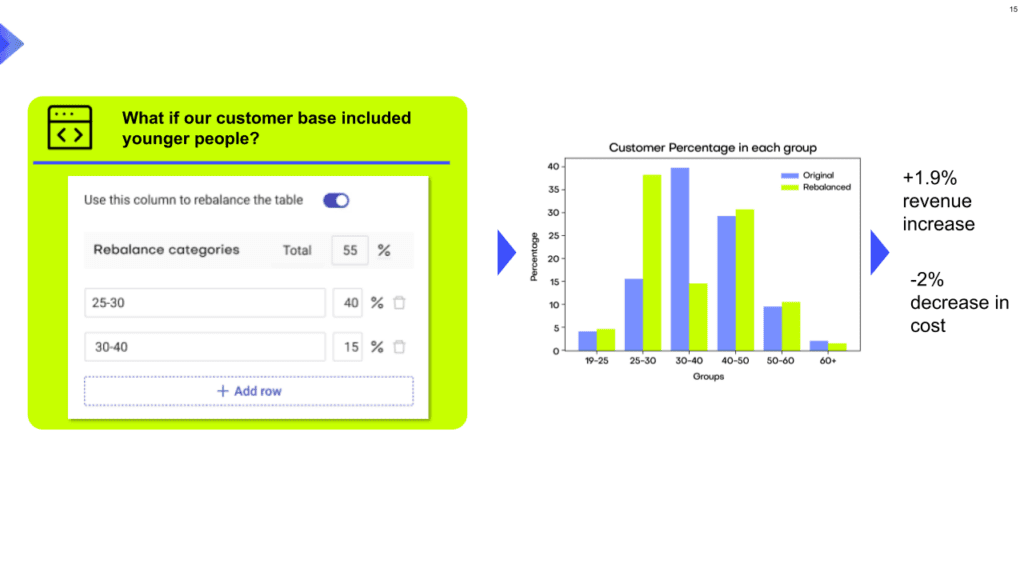

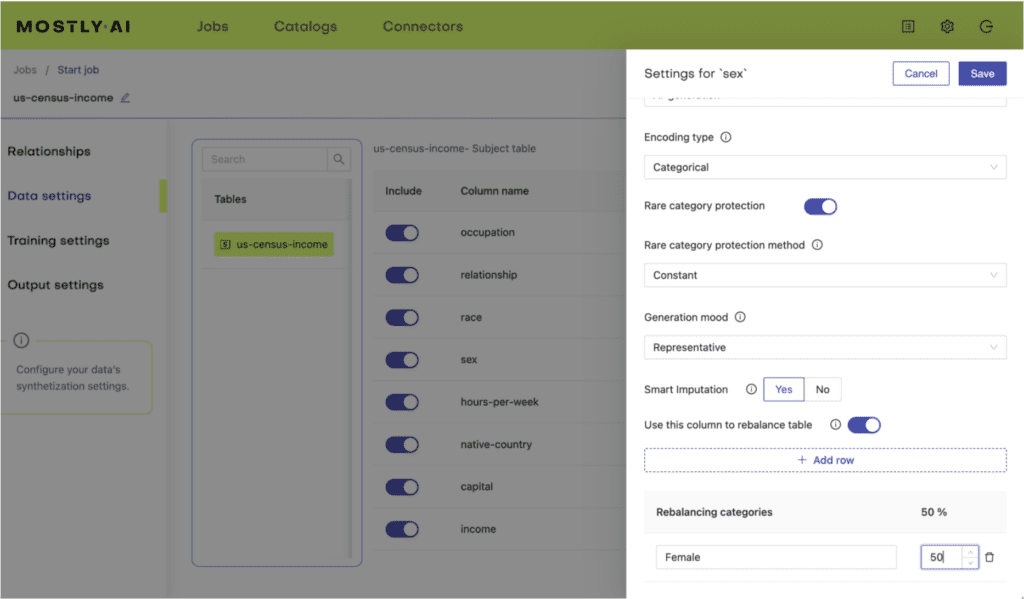

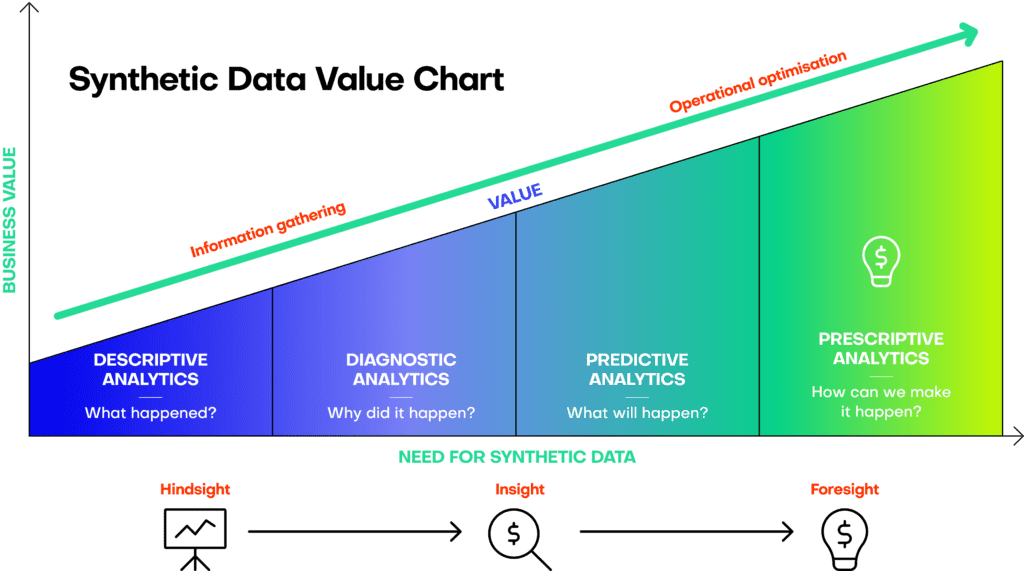

However, recent advances in technology and machine learning have illuminated the vast potential of synthetic data far beyond the privacy use case. A recent paper by Boris van Breugel and Mihaela van der Schaar describes how AI-generated synthetic data has moved beyond data privacy. In this blog post, we explore the potential of synthetic data beyond privacy applications. We also describe the direction in which MOSTLY AI's synthetic data platform has been developing, including new capabilities such as data augmentation and data balancing, domain adaptation, simulations, and bias and fairness.

Data augmentation and data balancing

Synthetic data can be used to augment existing datasets, particularly when there is not enough data or when the data representation is imbalanced. As early as 2020, we showed that simply generating more synthetic data than the original dataset contained can improve the performance of a downstream task.

Since then, interest in using synthetic data to boost the performance of machine learning models has steadily grown. There are two distinct approaches. The first is to amplify the existing data by generating more synthetic records (as we did in our research) and work only with the synthetic data. The second is to mix real and synthetic data.

But synthetic data can also help with highly imbalanced datasets. In the realm of machine learning, imbalanced datasets can lead to biased models that perform poorly on underrepresented data points. Synthetic data generation can create additional data points for underrepresented categories, effectively balancing the dataset and improving the performance of the resulting models. We recently published a blog post on data augmentation with details about how our platform can be used to augment existing datasets.
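The balancing idea can be made concrete with a minimal baseline sketch. This is an illustrative assumption, not MOSTLY AI's method. It rebalances a dataset by randomly duplicating existing minority-class rows (naive random oversampling). A synthetic data generator would instead sample new, non-identical records for the underrepresented class. The function name `random_oversample` and the toy `fraud` label are hypothetical.

```python
import random
from collections import Counter


def random_oversample(rows, label_key, seed=0):
    """Naive random oversampling: duplicate randomly chosen rows of each
    smaller class until every class matches the size of the largest one."""
    rng = random.Random(seed)  # fixed seed for reproducible output
    by_class = {}
    for row in rows:
        by_class.setdefault(row[label_key], []).append(row)

    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)  # keep every original row
        # Top up the class with random copies of its own rows.
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced


# Hypothetical toy dataset: 8 legitimate transactions, 2 fraudulent ones.
rows = [{"amount": a, "fraud": False} for a in range(8)] + [
    {"amount": 100, "fraud": True},
    {"amount": 250, "fraud": True},
]
balanced = random_oversample(rows, "fraud")
print(Counter(row["fraud"] for row in balanced))
```

After oversampling, both classes contain eight rows. Because duplicated rows add no new information and can encourage overfitting, generating genuinely new minority-class records is the more attractive option described in this section.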

Domain adaptation

In many cases, machine learning models are trained on data from one domain but need to be applied to a different domain, where little or no training data exists or where obtaining that data would be costly. Synthetic data can bridge this gap by simulating the target domain's data, allowing models to adapt and perform better in the new environment. One advantage of this approach is that standard downstream models don’t need to be changed, so they remain easy to compare.

This has applications in various industries. We currently see the most applications of this use case in the unstructured data space. For example, synthetic training material for autonomous vehicles can simulate different driving conditions and scenarios. Similarly, in medical imaging, synthetic data can mimic different patient populations or medical conditions. This allows healthcare professionals to test and validate machine learning algorithms without vast amounts of real-world data, which can be challenging and expensive to obtain.

However, the same approach and benefits apply to structured, tabular data as well, and this is an area where we see great potential for structured synthetic data in the future.

Data simulations

But what happens if there is no real-world data at all to work with? Synthetic data can help in this scenario too. Synthetic data can be used to create realistic simulations for various purposes, such as testing, training, and decision-making. Companies can develop synthetic business scenarios and simulate customer behavior.

One example is the development of new marketing strategies for product launches. Companies can generate synthetic customer profiles that represent a diverse range of demographics, preferences, and purchasing habits. By simulating the behavior of these synthetic customers in response to different marketing campaigns, businesses can gain insights into the potential effectiveness of various strategies and make data-driven decisions to optimize their campaigns. This approach allows companies to test and refine their marketing efforts without the need for expensive and time-consuming real-world data collection.

In essence, simulated synthetic data has the potential to be the realistic data every organization wishes it had: relatively low-effort to create, cost-efficient, and highly customizable. This flexibility will allow organizations to innovate, adapt, and improve their products and services more effectively and efficiently.

Bias and fairness

Bias in datasets can lead to unfair and discriminatory outcomes in machine learning models. These biases often stem from historical data that reflects societal inequalities and prejudices, which can inadvertently be learned and perpetuated by the algorithms. For example, a facial recognition system trained on a dataset predominantly consisting of light-skinned individuals may have difficulty accurately identifying people with darker skin tones, leading to misclassifications and perpetuating racial bias. Similarly, a hiring algorithm trained on a dataset with a higher proportion of male applicants may inadvertently favor male candidates over equally qualified female candidates, perpetuating gender discrimination in the workplace.

Therefore, addressing bias in datasets is crucial for developing equitable and fair machine learning systems that provide equal opportunities and benefits for all individuals, regardless of their background or characteristics.

Synthetic data can help address these issues by generating data that better represents diverse populations, leading to more equitable and fair models. In short, one can generate fair synthetic data based on unfair real data. We showed this three years ago in our five-part Fairness blog post series. You can re-read it to learn why bias in AI is a problem and why bias correction is one of the main strengths of synthetic data. The series also shows the complexity and challenges of the topic, first and foremost the question of how to define what is fair. We see increasing market interest in leveraging synthetic data to address bias and improve fairness.
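Choosing a definition of fairness is the hard part. As one illustration, assume that demographic (statistical) parity is the chosen definition. The sketch below then measures the gap in positive-outcome rates between two groups, using the hiring example above. The data and function names are hypothetical. Under this one definition, a fair synthetic dataset would push the gap toward zero.

```python
def positive_rate(rows, group_key, group, outcome_key):
    """Share of rows in `group` whose outcome is positive (True)."""
    members = [r for r in rows if r[group_key] == group]
    return sum(r[outcome_key] for r in members) / len(members)


def statistical_parity_difference(rows, group_key, group_a, group_b, outcome_key):
    """Difference in positive-outcome rates between two groups.
    0.0 means parity under this particular fairness definition."""
    return (positive_rate(rows, group_key, group_a, outcome_key)
            - positive_rate(rows, group_key, group_b, outcome_key))


# Hypothetical hiring records: 6 of 10 men hired vs. 3 of 10 women.
applicants = (
    [{"gender": "male", "hired": i < 6} for i in range(10)]
    + [{"gender": "female", "hired": i < 3} for i in range(10)]
)
gap = statistical_parity_difference(applicants, "gender", "male", "female", "hired")
print(f"statistical parity difference: {gap:.2f}")  # prints "statistical parity difference: 0.30"
```

Other definitions, such as equal opportunity across groups, can disagree with this one on the same data. That disagreement is part of why defining fairness is so challenging.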

There is no question about it: the potential of synthetic data extends far beyond privacy and anonymization. As we showed, synthetic data offers a range of powerful applications that can transform industries, enhance decision-making, and ultimately change the way we work with data. By harnessing the power of synthetic data, we can unlock new possibilities, create more equitable models, and drive innovation in the data-driven world.

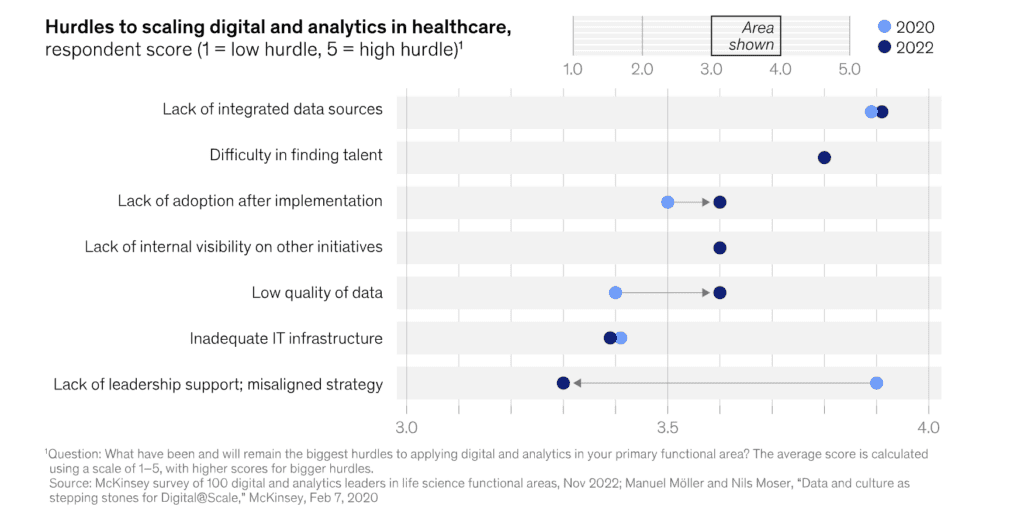

Funding for digital healthcare has doubled since the pandemic. Yet a large gap remains between digital leaders and the life sciences. The reason? According to a McKinsey survey published in 2023, medtech and pharma leaders cite a lack of high-quality, integrated healthcare data platforms as the main reason behind their lagging digital performance. As much as 45 percent of these companies' tech investments go to applied artificial intelligence (AI), industrialized machine learning (ML), and cloud and edge computing. None of these can be realized without meaningful data access.

Why are healthcare data platforms mission-critical?

Healthcare data is hard to access

Since healthcare data is one of the most sensitive data types, with special protections under HIPAA and GDPR, it's no surprise that getting access is incredibly hard. MOSTLY AI's team has worked closely with healthcare companies and institutions, providing them with the technology needed to create privacy-safe healthcare data platforms. We know firsthand how challenging it is to conduct research and use data-hungry technologies that are already standard in other industries, such as banking and telecommunications.

Efforts to unlock health data for research and AI applications are already under way on a federal level in Germany. The German data landscape is an especially challenging one. The country is made up of 16 different federal states, each with its own laws and regulations as well as siloed health data repositories.

InGef, the Institute for Applied Health Research in Berlin, set out to solve this problem together with other renowned institutions such as Fraunhofer, Berliner Charité and the Federal Institute for Drugs and Medical Devices. This multi-year program is sponsored by the Federal Ministry of Health. In 2022, MOSTLY AI won the public tender to support the program with its synthetic data generation capabilities. The goal is to develop a healthcare data platform that improves the secure use of health data for research purposes with as-good-as-real, shareable synthetic versions of health records.

According to Andreas Ponikiewicz, VP of Global Sales at MOSTLY AI,

"Enabling top research requires high quality data that is often either locked away, siloed, or sparse. Healthcare data is considered as very sensitive, therefore the highest safety standards need to be fulfilled. With generative AI based synthetic healthcare data, that contains all the statistical patterns, but is completely artificial, the data can be made available without privacy risk. This makes the data shareable to achieve better collaboration, research outcomes, diagnoses and treatments, and overall service efficiencies in the healthcare sector, which ultimately benefits society overall”.

Protecting health data should undoubtedly be a priority. According to recent reports, healthcare overtook finance as the most breached industry in 2022, with 22% of data breaches occurring within healthcare companies, up 38% year over year. The deidentification of data required by HIPAA is the go-to solution for many, even though simply removing PII or PHI from datasets does not guarantee privacy.

Old-school data anonymization techniques, like data masking, not only endanger privacy but also destroy data utility, which is a major issue for medical research. Finding better ways to anonymize data is crucial for securing data access across the healthcare industry. A new generation of privacy-enhancing technologies is already commercially available and ready to overcome data access limitations. The European Commission's Joint Research Center recommends AI-generated synthetic data for healthcare and policy-making, eliminating data access issues across borders and institutions.

Healthcare data is incomplete and imbalanced

Research and patient care suffer due to incomplete, inaccurate, and inconsistent data. Filling in the gaps is also an important step in making the data readable for humans. Human readability is especially mission-critical in health research and healthcare, where understanding and reasoning around data is part of the research process. Machine learning models and AI algorithms need to see the data in its entirety too. Any data points masked or removed could contain important intelligence that models can learn from. As a result, heavily masked or aggregated datasets won't be able to teach your models as well as complete datasets with maximum utility.

To overcome these limitations, more and more research teams are turning to synthetic data as an alternative. Successfully implemented healthcare data platforms take full advantage of the potential of synthetic data beyond privacy. Synthetic data generated from real seed data offers privacy as well as data augmentation opportunities that are well suited to improving the performance of machine learning algorithms. The European Commission's Joint Research Center closely investigated synthetic patient data for healthcare applications and concluded:

"Resulting data not only can be shared freely, but also can help rebalance under-represented classes in research studies via oversampling, making it the perfect input into machine learning and AI models"

Healthcare data is biased

Data bias can take many shapes and forms, from imbalances to missing or erroneous data on certain groups of people. Synthetic data generation is a promising tool that can help correct biases embedded in datasets during data preparation. The first step is to find the bias. The more eyes you have on the data, the better the chances of identifying hidden biases. With sensitive healthcare datasets, however, giving many people access is clearly impossible. Synthetic versions of the data can increase the level of access to, and transparency of, important data assets.

Health data is the most sensitive data type

A few years ago, a brand-new drug developed for treating Spinal Muscular Atrophy broke all previous records as the most expensive therapy in the world. If you had queried supposedly anonymous health insurance databases around that time, you could easily have identified the children who received this drug, simply because its price was such an outlier. But even in the absence of such an extreme value, reidentifying people by linking separate data points together is not difficult.
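This linkage risk can be checked mechanically. The sketch below assumes a toy claims table with hypothetical column names. It groups records by a set of quasi-identifiers, meaning attributes that are not names but can be linked to other sources. It then reports the records that are unique on those attributes. This is the idea behind k-anonymity: if the smallest group has size 1, at least one person can be singled out even though no direct identifier is present.

```python
from collections import Counter


def group_key(record, quasi_identifiers):
    """Tuple of the record's values for the chosen quasi-identifier columns."""
    return tuple(record[q] for q in quasi_identifiers)


def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    counts = Counter(group_key(r, quasi_identifiers) for r in records)
    return min(counts.values())


def unique_records(records, quasi_identifiers):
    """Records that no other record shares on the given quasi-identifiers."""
    counts = Counter(group_key(r, quasi_identifiers) for r in records)
    return [r for r in records if counts[group_key(r, quasi_identifiers)] == 1]


# Hypothetical "anonymous" claims: no names, but one cost band is an extreme
# outlier, like the record-priced therapy described above.
claims = [
    {"zip": "1010", "birth_year": 2019, "cost_band": "low"},
    {"zip": "1010", "birth_year": 2019, "cost_band": "low"},
    {"zip": "1020", "birth_year": 2018, "cost_band": "low"},
    {"zip": "1020", "birth_year": 2018, "cost_band": "low"},
    {"zip": "1020", "birth_year": 2018, "cost_band": "extreme"},
]

print(k_anonymity(claims, ["zip", "birth_year"]))               # 2
print(k_anonymity(claims, ["zip", "birth_year", "cost_band"]))  # 1
print(unique_records(claims, ["zip", "birth_year", "cost_band"]))
```

Adding a single extra attribute is enough to single out the patient with the outlier claim. Every additional column that can be linked to an external source makes this more likely.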

Surely, locking health data up and securing the environment where the data is stored is the way to go. Except that most data leaks actually originate with a company's own employees. Usually, there is no malicious intent either. A zero-trust approach helps only to an extent and fails to protect against the mistakes and accidents that are bound to happen. Locking data up also means less data collaboration, smaller sample sizes, less intelligence, higher costs, worse predictions, and ultimately, more suffering.

For example, improving the predictive accuracy of machine learning models for health outcomes can not only save costs for healthcare providers but also reduce suffering. The more granular the data, the better models can predict how certain cohorts of patients will react to treatments and how likely a good outcome is.

In one instance, MOSTLY AI's synthetic data generator was used to synthetically rebalance patient records in order to predict which patients would benefit from a therapy that can come with serious side effects. John Sullivan, MOSTLY AI's Head of Customer Experience, has seen up close how synthetic data generation can transform health predictions.

"We worked on a dataset for a large North American healthcare company and achieved an increase of accuracy in true positives predicted by the down-stream ML model in the range of 7-13% against a target of 5-10%. The improved model performance means potentially hundreds of patients benefit from early identification, and more importantly, early treatment of their illness. It's huge and extremely motivating."

Considering that 85% of machine learning models don't even make it into production, synthetic training data is a massive win for data science teams and patients alike.

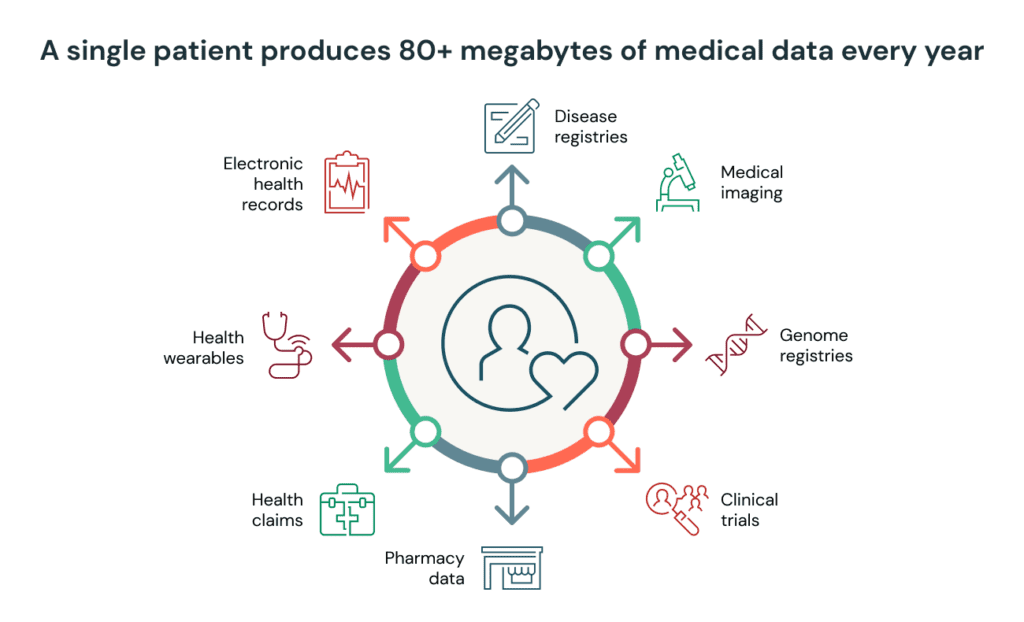

Synthetic healthcare data types

Different data types are present along the patient journey, requiring a wide range of tools to extract intelligence from these data sources at scale. Healthcare data platforms should cover the entire patient journey, providing data consumers with an environment ready for 'in-silico' experiments.

In healthcare, image data in particular receives a lot of attention. From improved breast cancer detection to AI-powered surgical guidance systems, medical science is undergoing a profound transformation. However, the AI revolution doesn't stop at image analyses and computer vision.

Tabular healthcare data is another frontier for new artificial intelligence applications. Synthetic data generated by AI trained on real datasets is one of the most versatile tools with plenty of use cases and a robust track record, allowing researchers to collaborate even across borders and institutions.

Examples of tabular healthcare data include electronic health records (EHR) of patients and populations, electronic medical records (EMR) generated along patient journeys, lab results, and data from monitoring devices. These highly sensitive structured data types can all be synthesized for ethical, patient-protecting usage without utility loss. Synthetic health data can then be stored, shared, analyzed, and used for building AI and machine learning applications without additional consent, speeding up crucial steps in drug development and treatment optimization processes.

Rare disease research suffers the most from a lack of data. Almost always, the only way to produce significant results is to share datasets across national borders and between research teams. This process is sometimes near-impossible and excruciatingly slow at best. Researchers need to work on the same data to reach the same conclusions and validate findings. Synthetic versions of datasets can be shared and merged without compliance issues or privacy risks, allowing rare disease research to advance much faster and at a significantly lower cost. Onboarding researchers and scientists to healthcare data platforms populated with synthetic data is easy, since synthetic versions of datasets do not legally qualify as personal data.

Use cases and benefits for AI-generated synthetic data platforms in healthcare

Let's summarize the most advanced healthcare data platform use cases for AI-generated synthetic data and their benefits. These are based on our experience and direct, up-close observations of the healthcare industry.

Machine learning model development with synthetic data

Most machine learning projects fail because of a lack of high-quality, large-quantity, realistic data. Synthetic data can be safely used in place of real patient data to train and validate machine learning models. Synthetic data generators can shorten time-to-market by unlocking valuable data assets and taking care of data preparation steps such as granular data exploration, data augmentation, and data imputation. Since data has replaced code as the key ingredient, healthcare data platforms have become the most important part of MLOps infrastructures and machine learning product development.

Data synthesis for data privacy and compliance

Protected health information, or PHI, is heavily regulated by both HIPAA and GDPR. PHI can only be accessed by authorized individuals for specific purposes, which makes all secondary use cases and further research practically impossible. Organizations disregarding these rules face heavy fines and erode patient trust. Synthetic data can protect patient privacy while still allowing for data analysis. It preserves the statistical patterns of the sensitive data without containing real patient records.

Testing and simulation with synthetic data generators

Synthetic data helps researchers to forecast the effects of greater sample size and longer follow-up duration on already existing data, thus informing the design of the research methodology. MOSTLY AI's synthetic data generator, in particular, is well suited to carry out on-the-fly explorations, allowing researchers to query what-if scenarios and reason around data effectively.

Data repurposing for research and development

Often, using data for secondary purposes is challenging or downright prohibited by regulators. Synthetic data can overcome these limitations and serve as a drop-in replacement to support research and development efforts. Researchers can also use synthetic data to create a so-called 'synthetic control arm.' According to Meshari F. Alwashmi, a digital health scientist:

"Instead of recruiting patients to sign up for trials to not receive the treatment (being the control group), they can turn to an existing database of patient data. This approach has been effective for interpreting the treatment effects of an investigational product in trials lacking a control group. This approach is particularly relevant to digital health because technology evolves quickly and requires rapid iterative testing and development. Furthermore, data collected from pilot studies can be utilized to create synthetic datasets to forecast the impact of the intervention over time or with a larger sample of patients."

Population health analysis for policy-making

AI-generated synthetic data can be used to model and analyze population health, including disease outbreaks, demographics, and risk factors. According to the European Commission's Joint Research Center, synthetic data is "becoming the key enabler of AI in business and policy applications in Europe." The urgency to provide safe and accessible population-level healthcare data platforms has been growing, especially since the pandemic. Data democratization is no longer a far-away utopia but a strong driver in policy-making.

Data collaborations for innovation

Creating healthcare data platforms by proactively synthesizing and publishing health data is a winning strategy we see at large organizations. Humana, one of the largest health insurance providers in North America, launched a synthetic data exchange platform to facilitate third-party product development. By joining the sandbox, developers can access synthetic, highly granular datasets and create products with laser-sharp personalization that solve real-life problems. In a similar project, Merkur Insurance in Austria uses MOSTLY AI’s synthetic data platform to develop new services and personalized customer experiences. One example is developing machine learning models with privacy-safe and GDPR-compliant training data.

Ethical and explainable AI

Synthetic data generation provides additional benefits to AI and machine learning development. Ethical AI is one area where synthetic data research is advancing rapidly. Fair models and predictions need fair data inputs. Data synthesis allows research teams to explore definitions of fairness and their effects on predictions in fast iterations. Furthermore, the introduction of the first AI regulations is only a matter of time, and with regulations comes the need for explainability. Explainable AI needs synthetic data: a window into the souls and inner workings of algorithms, without which trust can never truly be established.

If you would like to explore what a synthetic healthcare data platform can do for your company or research, contact us and we'll be happy to share our experience and know-how.

According to Gartner, "data and analytics leaders who share data externally generate three times more measurable economic benefit than those who do not." Yet organizations struggle to collaborate on data even within their own walls. No matter the architecture, everyone somehow ends up with rigid silos and uncooperative departments. Why? Because data collaboration is a lot of work.

The data mesh approach to collaboration

Treating data as a product and assigning ownership to people closest to the origins of the particular data stream makes perfect sense. The data mesh architecture attempts to reassign data ownership from a central focal point to decentralized data owners with domain knowledge embedded into teams across the entire organization. But the data mesh is yet to solve the cultural issues. What we see time and time again at large organizations is people becoming overly protective of the data they were entrusted to govern. Understandably so. The zero trust approach is easy to adopt in the world of data, where erring on the side of caution is justified. Data breaches are multimillion-dollar events, damaging reputations on all levels, from organizational to personal. Without trusted tools to automatically embed governance policies into data product development, data owners will always remain reluctant to share and collaborate, no matter the gains interconnecting data products offer.

The synthetic data mesh for data collaboration

Data ecosystems are already built with synthetic data, accelerating AI adoption in the most data-critical industries, such as finance. When talking about accelerating data science in finance, Jochen Papenbrock, Head of Financial Technology at NVIDIA said:

"Synthetic data is a key component for evaluating AI models, and it's also a key component of collaboration in the ecosystem. My personal belief is that as we see a strong growth of AI adoption, and we'll see a strong growth in the adoption of synthetic data at the same speed."

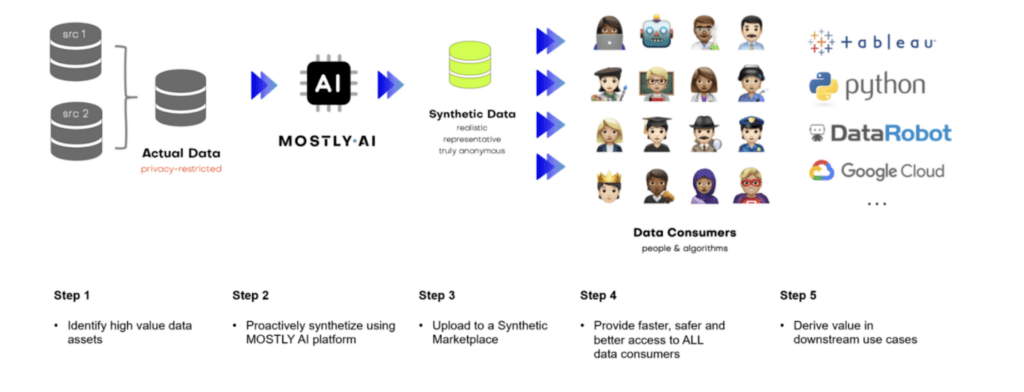

So making synthetic data generation tools readily available for data owners should be considered a critical component of the data mesh. Proactively synthesizing and serving data products across domains is the next step on your journey of weaving the data mesh and scaling data collaborations. Readily available synthetic data repositories create new, unexpected value for data consumers and the business.

Examples of synthetic data products

Accelerating AI innovation is already happening at companies setting the standards for data collaborations. Humana, one of the largest North American health insurance providers, launched a synthetic data exchange to accelerate data-driven collaborations with third-party vendors and developers. Healthcare data platforms populated with realistic, granular and privacy-safe synthetic patient data are mission-critical for accelerating research and product development.

Sometimes data silos are legal requirements, and certain data assets cannot be joined for compliance reasons. Synthetic versions of these datasets serve as drop-in replacements and can interconnect the domain knowledge contained in otherwise separated data silos. In these cases, synthetic data products are the only option for data collaboration.

In other cases, we've seen organizations with a global presence use synthetic data generation for massive HR analytics projects, connecting employee datasets from all over the world in a way that is compliant with the strictest regulations, including GDPR.

The wide adoption of AI-enabled data democratization represents a breakthrough in how data consumers access data and create value. The intelligence contained in data should no longer be locked away in carefully guarded vaults but should flow freely between teams and organizations.

The benefits of data collaborations powered by synthetic data

Shareable synthetic data helps data owners who want to collaborate and share data inside and outside their organizations. It reduces time-to-data and governance costs, enables innovation, democratizes data, and increases data literacy. Unlike legacy data anonymization, it does this without reducing data utility. The reduction in time-to-data alone is significant.

"According to our estimates, creating synthetic data products results in a 90%+ reduction in time-to-consumption in downstream use cases. Less new ideas are left on the cutting room floor, and more data is making an impact in the business.” says John Sullivan, Head of Customer Experience at MOSTLY AI.

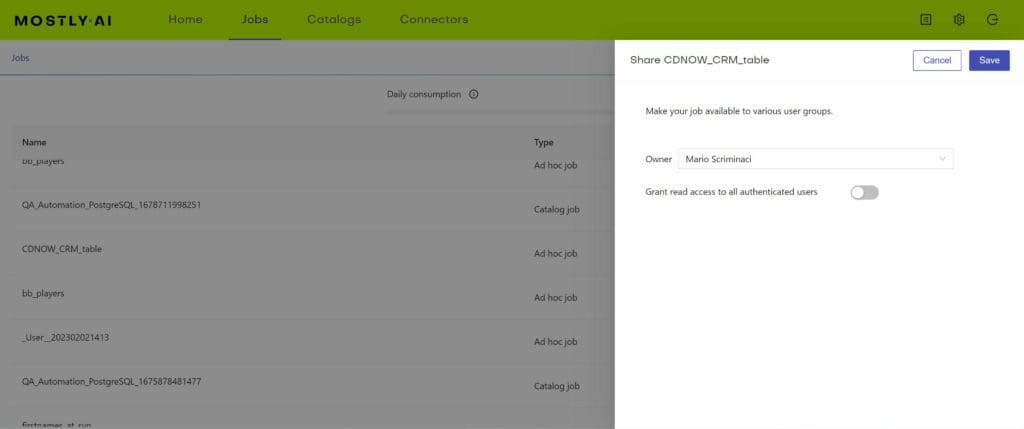

MOSTLY AI's synthetic data platform was created with synthetic data products in mind - synthetic data can be shared directly from the platform together with the automatically generated quality assurance report.

Data mesh vs. data fabric with synthetic data in mind

Mario Scriminaci, MOSTLY AI’s Chief Product Officer, points out that the data mesh and the data fabric are often perceived as antithetical concepts.

“The difference between the two architectures is that the data mesh pushes for de-centralization, while the data fabric tries to aggregate all of the knowledge about metadata. In reality, they are not mutually exclusive. The concepts of the data mesh and the data fabric can be applied simultaneously in big organizations, where the complexity of data architecture calls for a harmonized view of data products. With the data consumption and data discovery initiatives, synthetic data generation will help centralize the knowledge of data and datasets (aka. the data fabric) and, at the same time, will also help customize datasets to domain-specific needs (aka. data mesh).”

In a data mesh architecture, data ownership and privacy are crucial considerations. Synthetic data generation techniques allow organizations to create realistic data that maintains privacy. This enables teams across organizations to produce and share synthetic data products with high utility.

Data mesh architectures promote the idea of domain-oriented, self-serve data teams. Synthetic data allows teams to experiment, develop, and test data pipelines and applications independently, fostering agility and making data democratization an everyday reality.

Synthetic data products also eliminate the need to replicate or move vast volumes of real data across systems, making it easier to scale and optimize data processing pipelines and enabling data collaboration at scale.

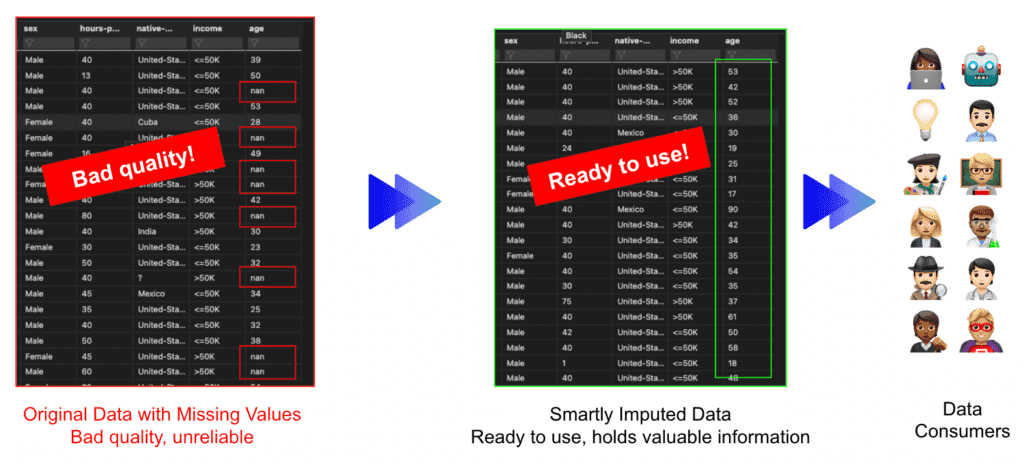

Smart data imputation with AI-generated synthetic data is superior to all other methods out there. Synthetic data generated with MOSTLY AI is highly representative, highly realistic, granular-level data that can be considered ‘as good as real’. While maintaining complete protection of each data subject's privacy, it can be openly processed, used, and shared among your peers. MOSTLY AI’s synthetic data serves various use cases, and the initiatives our customers have achieved so far demonstrate the value our synthetic data platform has to offer.

To date, most of our customers' use cases have centered on generating synthetic data for data sharing while maintaining privacy standards. However, we believe there is more on offer for data consumers around the world, because poor-quality data is a problem for data scientists across all industries.

Real-world datasets have missing information for various reasons. This is one of the most common issues data professionals have to deal with. The latest version of MOSTLY AI's synthetic data generator introduces features that can be utilized by users to interact with their original dataset - the so-called 'data augmentation' features. Among those is our ‘Smart Imputation’ technique which can accurately recreate the original distribution while filling the gaps for the missing values. This is gold for analytical purposes and data exploration!

What is smart data imputation?

Data imputation is the process of replacing missing values in a dataset with non-missing values. This is of particular interest, if the analysis or the machine learning algorithm cannot handle missing values on its own, and would otherwise need to discard partially incomplete records.

Many real-world datasets have missing values. On the one hand, some missing values are meaningful and carry important business information. For instance, a missing value in the ‘Death Date’ column may mean that the customer is still alive, and a missing value in the ‘Income’ column may mean that the customer is unemployed or under-age. On the other hand, missing values are often caused by an organization's inability to capture the information.

Organizations therefore look for ways to impute missing values in the latter case, because these gaps in the data can cause several problems:

- Missing data can bias results: if the remaining data come from a non-representative sample, your findings may not apply directly to situations outside your study.

- Large amounts of missing data distort the dataset: the observed distributions can differ from those found in real-world situations, so the numbers do not accurately reflect reality.

To fill these gaps, data scientists often employ rather simplistic imputation methods that are likely to distort the overall value distribution of their dataset. These strategies include frequent category imputation, mean/median imputation, and arbitrary value imputation. Well-known machine learning libraries such as scikit-learn also offer univariate and multivariate imputation algorithms, such as SimpleImputer and IterativeImputer, respectively.

Another popular technique that has gained considerable attention recently is scikit-learn's KNNImputer class, which fills in missing values using the k-Nearest Neighbors approach.

MOSTLY AI’s smart imputation technique aims to produce accurate synthetic data whose quality is visibly "better" than that of the original dataset. It is important to note that MOSTLY AI is not yet another tool that merely replaces a dataset's missing values. Instead, we give our customers the ability to create entirely new datasets free of missing values. If this piques your curiosity, read on and verify the findings for yourself.

Evaluating smart imputation

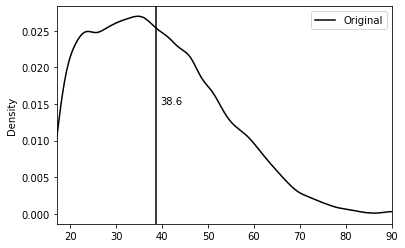

To evaluate MOSTLY AI's smart imputation feature, we devised the technique below, which compares the original target distribution with the synthetic one.

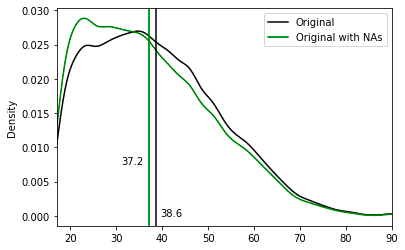

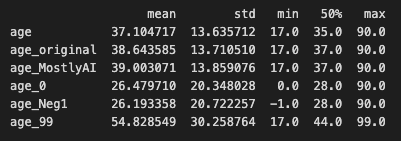

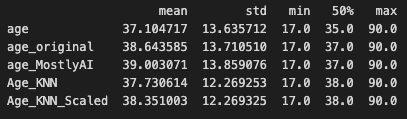

We start with the well-known US-Census dataset and use the column ‘age’ as our target column. The dataset has approximately 50k records and includes 2 numerical and 9 categorical variables. The target column has an average age of 38.6 years, a range of 17 to 90 years, and a standard deviation of 13.7 years.

Our research begins by semi-randomly introducing missing values into the US-Census dataset's "age" column. The goal is to compare the original distribution with the smartly imputed distribution and see whether we can correctly recover the original one.

We applied the following logic to introduce missing values into the original dataset, artificially biasing the non-missing values towards younger age segments. The age attribute was randomly set to missing for:

- 10% of all records

- 60% of records whose education level was ‘Doctorate’, ‘Prof-school’ or ‘Masters’

- 60% of records whose marital status was ‘Widowed’ or ‘Divorced’

- 60% of records whose occupation was ‘Exec-managerial’

It's important to note that, because the values are removed at random within these groups, the algorithm cannot rely on a deterministic rule that reveals where the missing values are located.

As a result, the 'age' column now has values that appear to be missing semi-randomly, and its remaining non-missing values are skewed towards younger people:

- Original age mean: 38.6

- Age mean after introducing missing values: 37.2
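The four missingness rules listed above can be sketched in pandas as follows. This is a minimal sketch, not the script used for the experiment: the column names education, marital_status and occupation, the function name introduce_missing_age, the seed and the toy data are all assumptions. Each rule draws its own random numbers, so a record can be selected by more than one rule.

```python
import numpy as np
import pandas as pd


def introduce_missing_age(df: pd.DataFrame, seed: int = 0) -> pd.DataFrame:
    """Return a copy of df with 'age' set to NaN following the four rules above.

    Column names are assumptions about how the US-Census data is labelled.
    """
    rng = np.random.default_rng(seed)
    out = df.copy()
    out["age"] = out["age"].astype(float)  # float dtype so the column can hold NaN
    n = len(out)

    # Rule 1: 10% of all records, chosen uniformly at random.
    mask = rng.random(n) < 0.10

    # Rules 2-4: 60% of the records in groups that skew towards older people.
    older_groups = [
        out["education"].isin(["Doctorate", "Prof-school", "Masters"]),
        out["marital_status"].isin(["Widowed", "Divorced"]),
        out["occupation"].eq("Exec-managerial"),
    ]
    for in_group in older_groups:
        mask |= in_group.to_numpy() & (rng.random(n) < 0.60)

    out.loc[mask, "age"] = np.nan
    return out


# Toy data standing in for the US-Census table (values are made up).
df = pd.DataFrame({
    "age": [25, 30, 60, 70, 45] * 200,
    "education": ["HS-grad", "Bachelors", "Doctorate", "Masters", "HS-grad"] * 200,
    "marital_status": ["Never-married", "Married-civ-spouse", "Widowed", "Divorced", "Married-civ-spouse"] * 200,
    "occupation": ["Sales", "Tech-support", "Exec-managerial", "Exec-managerial", "Sales"] * 200,
})
biased = introduce_missing_age(df)
print(f"share missing: {biased['age'].isna().mean():.2f}")
print(f"mean before: {df['age'].mean():.1f}, mean of remaining values: {biased['age'].mean():.1f}")
```

On this toy table the older rows are removed far more often than the younger ones, so the mean of the remaining values drops, mirroring the shift from 38.6 to 37.2 described above.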

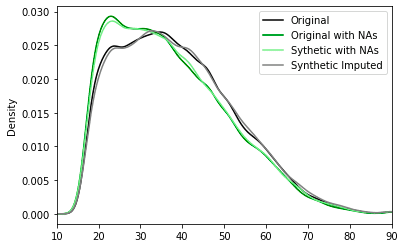

As a next step, we synthesized and smartly imputed the US-Census dataset with the semi-random missing values on the "age" column using the MOSTLY AI synthetic data platform.

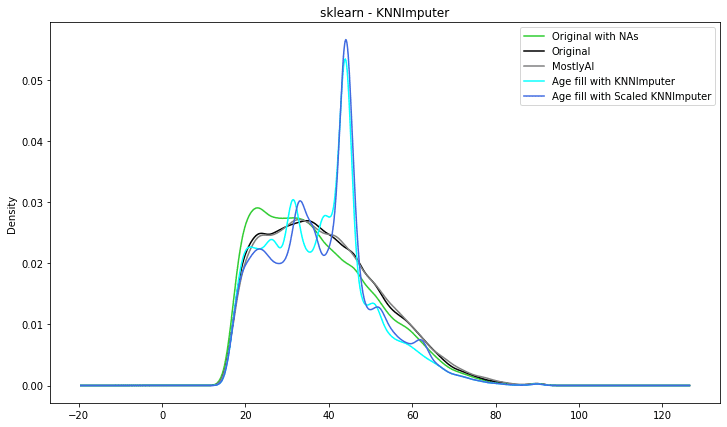

We ran two synthetic data generations. The first generated synthetic data without imputation and, as expected, the synthetic dataset matches the distribution of the data used to train the model (Synthetic with NAs - light green). The second enabled MOSTLY AI’s Smart Imputation feature for the ‘age’ column. As we can see, the smartly imputed synthetic data closely recovers the original distribution!

After adding the missing values to the original dataset, we started with an average age of 37.2 and used the "Smart imputation" technique to reconstruct the "age" column. The initial distribution of the US-Census data, which had an average age of 38.6, is accurately recovered in the reconstructed column, which now has an average age of 39.

These results are great for analytical purposes. Data scientists now have access to a dataset that allows them to operate without being hindered by missing values. Now let's see how the synthetic data generation method compares to other data imputation methods.

Data imputation methods: a comparison

Below we describe six of the main imputation techniques for numerical variables and compare their results with our Smart Imputation algorithm. For each technique, we present general summary statistics of the ‘age’ distribution as well as a visual comparison of the results against MOSTLY AI’s results.
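The comparisons below rely on summary statistics and plots. To put a single number on "closer to the original", one option, which is our assumption and not part of the original analysis, is the one-dimensional Wasserstein distance from SciPy, shown here next to the pandas describe() summary. The function name compare_distributions and the example values are hypothetical.

```python
import pandas as pd
from scipy.stats import wasserstein_distance


def compare_distributions(original: pd.Series, imputed: pd.Series) -> tuple[pd.DataFrame, float]:
    """Side-by-side summary statistics plus the 1D Wasserstein distance."""
    original = original.dropna().astype(float)
    imputed = imputed.dropna().astype(float)
    # count, mean, std, min, quartiles and max for both columns.
    summary = pd.DataFrame({"original": original.describe(), "imputed": imputed.describe()})
    # Average amount of "shifting" needed to turn one distribution into the other,
    # in the units of the column (years, for age); 0 means identical distributions.
    distance = wasserstein_distance(original, imputed)
    return summary, distance


original_age = pd.Series([22, 35, 38, 41, 47, 59, 63])
imputed_age = pd.Series([22, 35, 45, 45, 45, 59, 63])  # made-up imputed column
summary, distance = compare_distributions(original_age, imputed_age)
print(summary.round(1))
print(f"Wasserstein distance: {distance:.2f}")
```

Unlike comparing means alone, the distance also grows when an imputation method piles many values onto a single spike, which is the kind of distortion visible in the plots.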

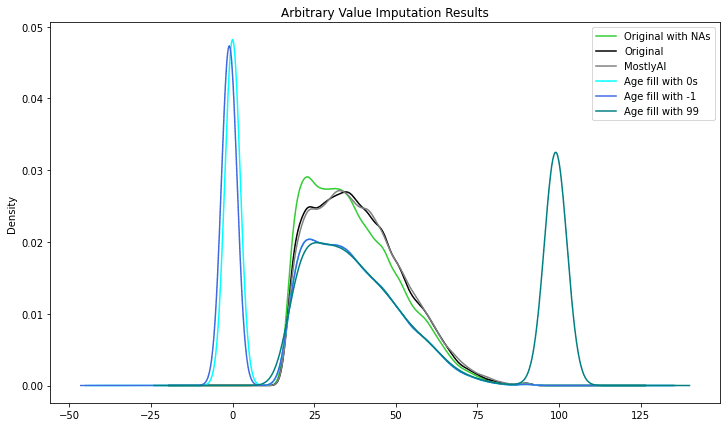

Arbitrary value data imputation

Arbitrary value imputation is a data imputation technique used in machine learning to fill in missing values in datasets. It replaces missing values with a specified arbitrary value, such as 0, 99, 999, or a negative value. Rather than estimating the missing numbers with statistical averages or other methods, the goal is to flag them as missing.

This strategy is quite simple to execute, but it has a number of disadvantages. For starters, if the arbitrary number used is not indicative of the underlying data, it can inject bias into the dataset. For example, filling missing ages with a value such as 99 or 999 produces values that do not reflect the underlying distribution of the data and shifts statistics such as the mean.

Because every missing entry receives the same value, this method also reduces the variety of values in the dataset, making it more difficult for machine learning algorithms to find meaningful patterns in the data. As a result, prediction accuracy and model performance may suffer.
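A minimal pandas sketch of arbitrary value imputation on a toy 'age' column (the ages and the fill value 999 are illustrative). Comparing the means before and after shows how a single out-of-range value distorts the column's statistics.

```python
import numpy as np
import pandas as pd

age = pd.Series([25, np.nan, 40, np.nan, 61])

# Fill every gap with the same out-of-range value so that missing rows stay recognizable.
arbitrary_imputed = age.fillna(999)

print(arbitrary_imputed.tolist())  # [25.0, 999.0, 40.0, 999.0, 61.0]
print(age.mean(), arbitrary_imputed.mean())  # 42.0 424.8
```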

As you can see, the variable was given new peaks, drastically altering the initial distribution.

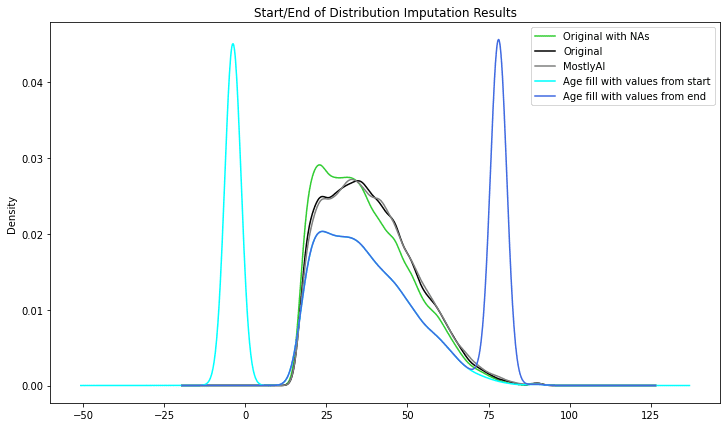

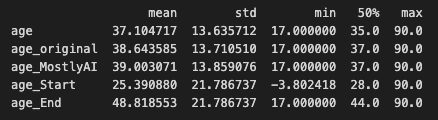

Start/End of Distribution data imputation

Start/End of Distribution data imputation is a technique used to fill in missing values in datasets. It replaces missing values with values at the beginning or end of the distribution of the non-missing values in the dataset.

If the missing values are numeric, for example, the procedure replaces them with the minimum or maximum of the dataset's non-missing values. If the missing values are categorical, the procedure fills in the gaps with the most frequently occurring category (i.e., the mode).

Like the previous technique, it is simple to implement, and ML models can capture the significance of the missing values. The main drawback is that we might end up with a distorted dataset, as the mean and variance of the distribution might change significantly.
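A minimal pandas sketch of the procedure described above, with made-up values. The function name impute_end_of_distribution and its side parameter are hypothetical; for non-numeric columns it falls back to the mode, as the text describes.

```python
import numpy as np
import pandas as pd


def impute_end_of_distribution(values: pd.Series, side: str = "max") -> pd.Series:
    """Fill gaps with the min or max of the observed values; use the mode for categories."""
    if not pd.api.types.is_numeric_dtype(values):
        # Categorical columns: fill with the most frequent category.
        return values.fillna(values.mode().iloc[0])
    if side == "max":
        return values.fillna(values.max())  # max() ignores NaN
    if side == "min":
        return values.fillna(values.min())
    raise ValueError("side must be 'min' or 'max'")


age = pd.Series([25, np.nan, 40, np.nan, 61])
print(impute_end_of_distribution(age, "max").tolist())  # [25.0, 61.0, 40.0, 61.0, 61.0]
print(impute_end_of_distribution(age, "min").tolist())  # [25.0, 25.0, 40.0, 25.0, 61.0]

education = pd.Series(["HS-grad", None, "Bachelors", "HS-grad"])
print(impute_end_of_distribution(education).tolist())  # ['HS-grad', 'HS-grad', 'Bachelors', 'HS-grad']
```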

Similar to the previous technique, the variable was given new peaks, drastically altering the initial distribution.

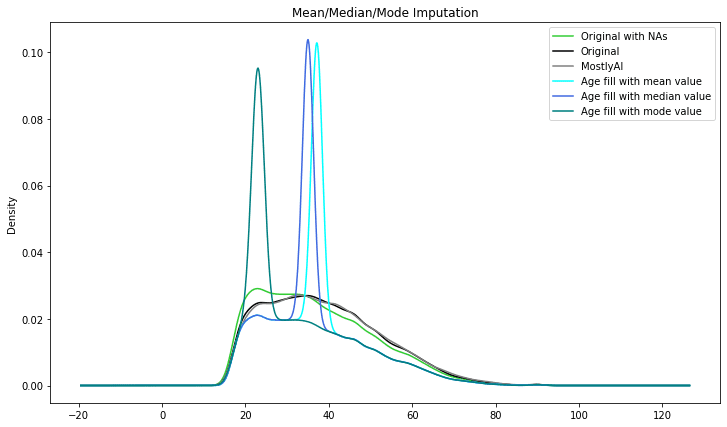

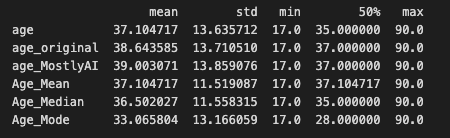

Mean/Median/Mode Imputation

Mean/Median/Mode imputation is probably the most popular data imputation method, at least among beginners. It imputes missing values using statistical averages.

Mean data imputation involves filling the missing values with the mean of the non-missing values in the dataset. Median imputation involves filling the missing values with the median of the non-missing values in the dataset. Mode imputation involves filling the missing values with the mode (i.e., the most frequently occurring value) of the non-missing values in the dataset.

These techniques are straightforward to implement and useful when dealing with missing values in small datasets or datasets with a simple structure. However, if the mean, median, or mode is not indicative of the underlying data, they can introduce bias into the dataset.
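The three variants differ only in the statistic used to fill the gaps. A small pandas sketch with made-up ages follows; a side effect worth knowing is that repeating one value for every missing entry lowers the column's standard deviation, which is one way the distribution gets distorted.

```python
import numpy as np
import pandas as pd

age = pd.Series([20, 30, 30, np.nan, 80, np.nan])

mean_imputed = age.fillna(age.mean())          # observed mean   = 40.0
median_imputed = age.fillna(age.median())      # observed median = 30.0
mode_imputed = age.fillna(age.mode().iloc[0])  # observed mode   = 30.0

# Every gap receives the same value, so the spread of the column shrinks.
print(f"std of observed values: {age.std():.2f}")
print(f"std after mean imputation: {mean_imputed.std():.2f}")
```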

The results look better than those of the previous techniques; however, as the plots show, the imputed distributions are still distorted.

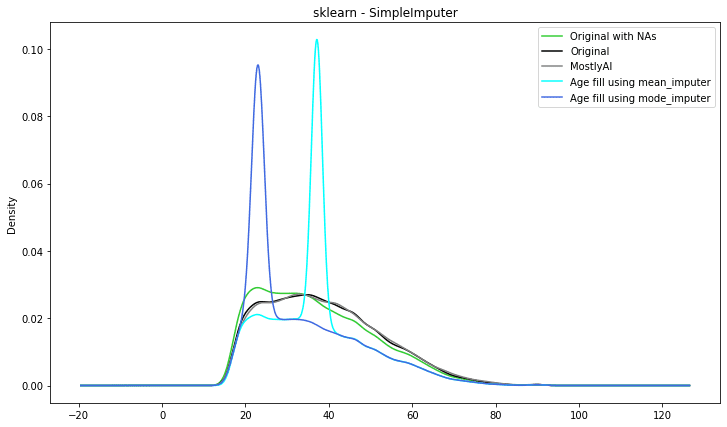

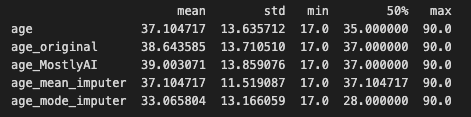

Scikit-learn - SimpleImputer data imputation

Scikit-learn is a well-known Python machine learning library. The SimpleImputer class in its sklearn.impute module provides a simple and efficient way to impute missing values in datasets.

The SimpleImputer class fills in missing values column by column, using one of several strategies: the column's mean, median, most frequent value (mode), or a constant value. SimpleImputer is a univariate imputation algorithm that comes out of the box with the scikit-learn library.
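A minimal sketch of SimpleImputer on a small made-up NumPy array. The fitted statistics_ attribute holds the value used for each column, which makes it easy to see that every gap in a column receives the same number.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Two numerical columns (e.g. age and a 0/1 flag) with missing entries as np.nan.
X = np.array([
    [25.0, 1.0],
    [np.nan, 0.0],
    [40.0, np.nan],
    [61.0, 1.0],
])

# strategy can be "mean", "median", "most_frequent" or "constant" (with fill_value).
imputer = SimpleImputer(strategy="median")
X_imputed = imputer.fit_transform(X)

print(imputer.statistics_)  # per-column medians learned during fit: 40.0 and 1.0
print(X_imputed)
```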

The results below are similar to the results of the previous technique:

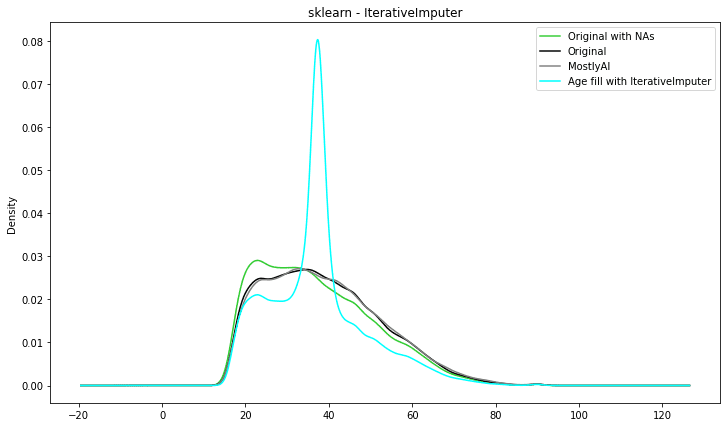

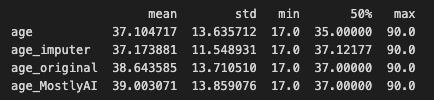

Scikit-learn - IterativeImputer data imputation

Another class in scikit-learn's sklearn.impute module that can be used to impute missing values is IterativeImputer. Unlike SimpleImputer, IterativeImputer uses a model-based imputation strategy: it imputes missing values by modelling the relationships between variables.

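IterativeImputer models each feature with missing values as a function of the other features and estimates the missing values in a round-robin fashion over several iterations. By default it uses Bayesian Ridge regression, but any scikit-learn regressor can be passed as the estimator, including linear regression, k-nearest neighbours regression, decision trees, and random forests.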
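A minimal sketch on made-up data in which age depends on two other numerical columns (the relationship is invented for illustration, not taken from the US-Census data). IterativeImputer is still flagged as experimental in scikit-learn, so the enable_iterative_imputer import must come first. The sketch hides 20% of the ages and compares the imputed values with the hidden ones and with plain mean imputation.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401  (exposes IterativeImputer)
from sklearn.impute import IterativeImputer
from sklearn.linear_model import BayesianRidge

rng = np.random.default_rng(0)
n = 500

# Made-up data in which age is related to two other numerical columns.
education_num = rng.integers(1, 17, size=n).astype(float)
hours_per_week = rng.normal(40, 10, size=n)
age = 18 + 1.5 * education_num + 0.3 * hours_per_week + rng.normal(0, 3, size=n)
X = np.column_stack([age, education_num, hours_per_week])

# Hide 20% of the ages.
missing = rng.random(n) < 0.20
X_missing = X.copy()
X_missing[missing, 0] = np.nan

# BayesianRidge is the default estimator; any scikit-learn regressor can be passed instead.
imputer = IterativeImputer(estimator=BayesianRidge(), max_iter=10, random_state=0)
X_imputed = imputer.fit_transform(X_missing)

# Compare with the hidden true ages and with plain mean imputation.
mae_iterative = np.mean(np.abs(X_imputed[missing, 0] - X[missing, 0]))
mae_mean = np.mean(np.abs(np.nanmean(X_missing[:, 0]) - X[missing, 0]))
print(f"MAE iterative: {mae_iterative:.2f}, MAE mean imputation: {mae_mean:.2f}")
```

Because the other columns carry information about age here, the model-based estimate lands much closer to the hidden values than a single column-wide average.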

Moving on to the more sophisticated techniques, you can see that the imputed distribution gets closer to the original ‘age’ distribution.

Scikit-learn - KNNImputer data imputation

Let’s look at something a little more complex. K-Nearest Neighbors, or KNN, is a straightforward method that bases predictions on a specified number of nearest neighbours. It computes the distances between the instance you want to classify and every other instance in the dataset. Here, classifying means imputing: scikit-learn's KNNImputer fills each missing value with the mean of that feature over the k nearest neighbours.

It is simple to implement and optimize and, compared with the other methods used so far, a little bit ‘smarter’. Unfortunately, it is sensitive to outliers. It works only with numerical variables, so only the numerical columns of the US-Census dataset were used to produce the results below.

The summary statistics are very close to those of the original distribution, but the visual representation is less convincing. Still, the imputed distribution is closer to the original one than with the previous methods, and visually KNNImputer produces the best results so far.
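A minimal KNNImputer sketch on a made-up array with three numerical columns. Because distances are computed across all columns, features on larger scales dominate the neighbour search, so scaling the columns first is common practice; it is omitted here to keep the numbers easy to follow.

```python
import numpy as np
from sklearn.impute import KNNImputer

# Columns: age, education-num, hours-per-week (made-up values).
X = np.array([
    [25.0, 9.0, 40.0],
    [27.0, 10.0, 38.0],
    [np.nan, 9.0, 39.0],
    [60.0, 16.0, 50.0],
    [62.0, 15.0, 55.0],
])

# Each missing value becomes the mean of that column over the 2 nearest rows,
# where distances are computed on the columns both rows have observed.
imputer = KNNImputer(n_neighbors=2)
X_imputed = imputer.fit_transform(X)

print(X_imputed[2, 0])  # 26.0: the mean age of the two closest rows (25 and 27)
```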

Comparison of data imputation methods: conclusion

Six different techniques were used to impute the US-Census ‘age’ column. Starting with the simplest ones, we saw that the distributions are distorted and the utility of the new dataset drops significantly. With the more advanced methods, the imputed distributions start to look like the original ones but are still not perfect.

We have plotted all the imputed distributions against the original as well as the distribution generated by MOSTLY AI’s Smart Imputation feature. We can clearly conclude that AI-powered synthetic data imputation captures the original distribution better.

We at MOSTLY AI are excited about the potential that ‘Smart Imputation’ and the rest of our 'Data Augmentation' and 'Data Diversity' features have to offer our customers. More specifically, we would like to see more organizations across industries use synthetic data to reduce the time spent dealing with missing data - time that data professionals can instead use to produce valuable insights for their organizations.

We are eager to explore these paths further with our customers and support their ML/AI endeavours at a fraction of the time and expense, since the explorations in this blog post have shown the potential to support such a scenario. If you are currently struggling with missing values in your data, check out MOSTLY AI's synthetic data generator and try Smart Imputation yourself.

Learn how to generate high-quality synthetic datasets from real data samples without coding or credit cards in your pocket. Here is everything you need to know to get started.

In this blog post you will learn:

- What do you need to generate synthetic data?

- What is a data sample?

- What are data subjects?

- What is a subject table?

- How and when to synthesize a single subject table?

- What is not a subject table?

- How to launch your first synthetic data generation?

- How and when to synthesize data in two tables?

- What are linked tables?

- How to create linked tables?

- What is the perfect setup for synthetic data generation?

- How to connect subject and linked tables for synthesization?

- What types of synthetic data can you synthesize?

- How to optimize synthetic data generation for speed or accuracy?

- What are the most common synthetic data use cases?

- How to get expert help with synthetic data generation?

What do you need to generate synthetic data?

If you want to know how to generate synthetic data, the good news is that you need absolutely no coding knowledge to synthesize datasets on MOSTLY AI’s synthetic data platform. Even better, you can access the world’s best-quality synthetic data generation for free and generate up to 100K rows daily. No credit card is required, only a suitable sample dataset.

First, you need to register a free synthetic data generation account using your email address. Second, you need a suitable data sample. To generate synthetic data with an AI-powered tool like MOSTLY AI, you need to know how to prepare the sample dataset that the AI algorithm will learn from. This blog post explains what makes a dataset ready for synthesization.

When do you need a synthetic data generation tool?

Generating synthetic data based on real data makes sense in a number of different use cases and data protection is only one of them. Thanks to how the process of synthetic data generation works, you can use an AI-powered synthetic data generator to create bigger, smaller or more balanced, yet realistic versions of your original data. It’s not rocket science. It’s better than that - it’s data science automated.

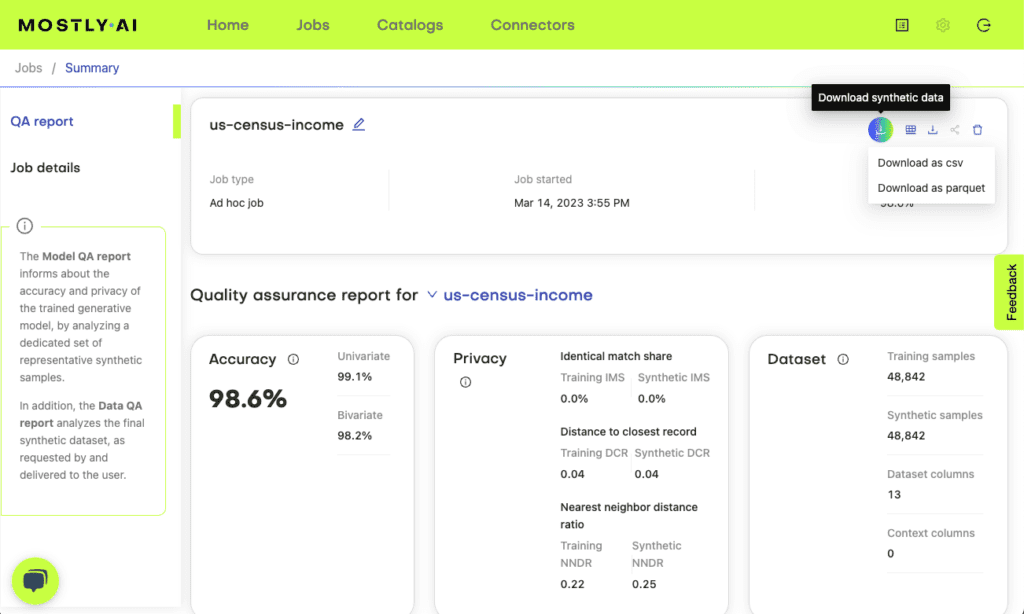

When choosing a synthetic data generation tool, you should consider two very important benchmarks: accuracy and privacy. Some synthetic data generators are better than others, but all synthetic data should be quality assured. MOSTLY AI’s platform generates an automatic privacy and accuracy report for each synthetic dataset. What’s more, MOSTLY AI’s synthetic data is of better quality than synthetic data from open-source generators.

If you know what you are doing, it's really easy to generate realistic and privacy-safe synthetic data alternatives for your structured datasets. MOSTLY AI's synthetic data platform offers a user interface that is easy to navigate and requires absolutely no coding. All you need is sample data and a good understanding of the building blocks of synthetic data generation. Here is what you need to know to generate synthetic data.

What is a data sample?

AI-generated synthetic tabular data is based on real data samples. To create it, you need to provide a sample of your original data to the synthetic data generator, which learns its statistical properties, such as correlations, distributions and hidden patterns.

Ideally, your sample dataset should contain at least 5,000 data subjects (rows of data). If you don't have that much data, that doesn't mean you shouldn’t try - go ahead and see what happens. But don't be too disappointed if the achieved data quality is not satisfactory. Automatic privacy protection mechanisms are in place to protect your data subjects, so you won’t end up with something potentially dangerous in any case.

What are data subjects?

The data subject is the person or entity whose identity you want to protect. Before considering synthetic data generation, always ask yourself whose privacy you want to protect. Do you want to protect the anonymity of the customers in your webshop? Or the employees in your company? Think about whose data is included in the data samples you will use for synthetic data generation. These people or entities will be your data subjects.

The first step of privacy protection is to clearly define the protected entity. Before starting the synthetic data generation process, make sure you know who or what the protected entities of a given synthesization are.

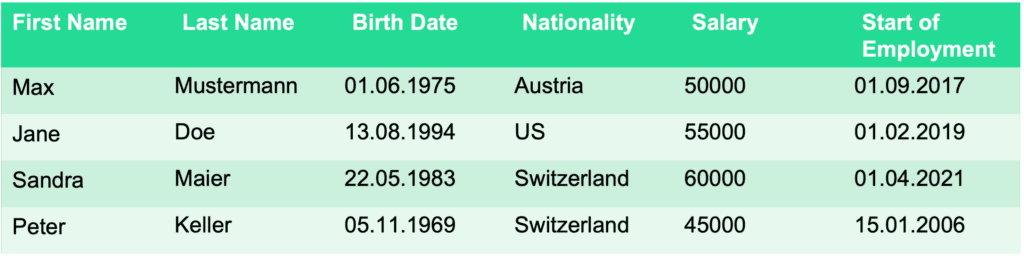

What is a subject table?

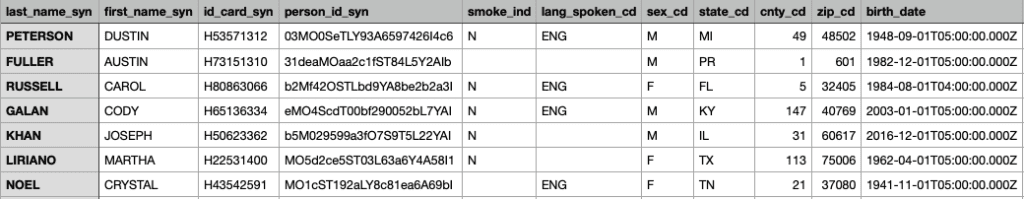

The data subjects are defined in the subject table. The subject table has one very crucial requirement: one row is one data subject. All the information which belongs to a single subject - e.g. a customer, or an employee - needs to be contained in the row that belongs to the specific data subject. In the most basic data synthesization process, there is only one table, the subject table.

This is called single-table synthesization and is commonly used to quickly and efficiently anonymize datasets describing certain populations or entities. Unlike legacy data anonymization techniques such as data masking, aggregation or randomization, synthesization preserves the utility of your data.

How and when to synthesize a single subject table?

Synthesizing a single subject table is the easiest and fastest way to generate highly realistic and privacy-safe synthetic datasets. If you are new to synthetic data generation, a single table should be the first thing you try.

If you want to synthesize a single subject table, your original or sample table needs to meet the following criteria:

- Each subject is a distinct, real-world entity. A customer, a patient, an employee, or any other real entity.

- Each row describes only one subject.

- Each row can be treated independently - the content of one row does not affect the content of other rows. In other words, there are no dependencies between the rows.

- When synthesizing single tables, the order of the rows will not be preserved throughout the synthesization process. For example, alphabetical order will not be maintained. However, you can re-introduce such ordering in post-processing.

Information in the subject table should not be time-dependent. Time-series data should be split into a subject table and one or more linked tables, which we will talk about later.
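Some of these requirements can be checked programmatically before uploading. A minimal sketch under stated assumptions: the function check_subject_table and the column names are hypothetical, the 5,000-row threshold comes from the recommendation in the data sample section, and the checks cover only missing or repeated IDs and table size, not time dependencies or overlapping groups.

```python
import pandas as pd

MIN_RECOMMENDED_SUBJECTS = 5_000  # recommendation from the data sample section


def check_subject_table(df: pd.DataFrame, id_column: str) -> list[str]:
    """Return warnings about basic subject-table requirements (empty list = none found)."""
    found = []
    if df[id_column].isna().any():
        found.append(f"'{id_column}' has missing values; every row needs a subject ID")
    ids = df[id_column].dropna()
    repeated = ids[ids.duplicated(keep=False)]
    if not repeated.empty:
        found.append(f"{repeated.nunique()} subject(s) appear in more than one row; consider a linked table")
    if len(df) < MIN_RECOMMENDED_SUBJECTS:
        found.append(f"only {len(df)} rows; at least {MIN_RECOMMENDED_SUBJECTS} are recommended")
    return found


# Sick-leave records: employee 1 appears twice, so this is not a subject table as-is.
sick_leave = pd.DataFrame({
    "employee_id": [1, 1, 2, 3],
    "start_date": ["2023-01-02", "2023-03-10", "2023-02-01", "2023-04-05"],
    "days": [2, 5, 1, 3],
})
issues = check_subject_table(sick_leave, "employee_id")
for issue in issues:
    print("-", issue)
```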

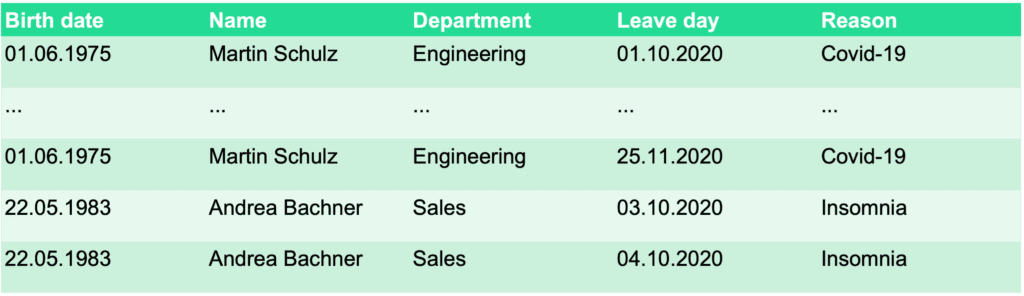

What is not a subject table?

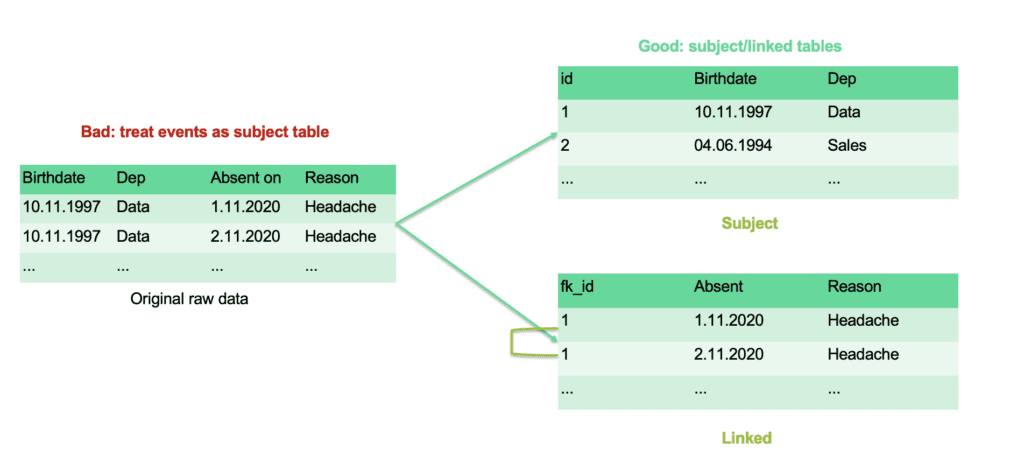

So how can you tell whether the table you would like to synthesize is not a subject table? If the same data subject appears in the table more than once, in different rows, the table cannot be used as a subject table as it is. In the example below, you can see a company’s sick leave records. Since more than one row belongs to the same person, this table would not work as a subject table.

There are other cases in which a table cannot be considered a subject table. For example, when the rows contain overlapping groups, the table cannot be used as a subject table, because the requirement of independent rows is not met.

Datasets that contain behavioral or time-series data are also unsuitable for single-table synthesization, because their rows come with time dependencies. Tables containing data about events need to be synthesized in a two-table setup.

If your dataset is not suitable as a subject table "out of the box" you will need to perform some pre-processing of the data to make it suitable for data synthesization.

It’s time to launch your first synthetic data generation job!

How to generate synthetic data step by step

To get started, register a free synthetic data generation account using your email address and prepare a suitable subject table.

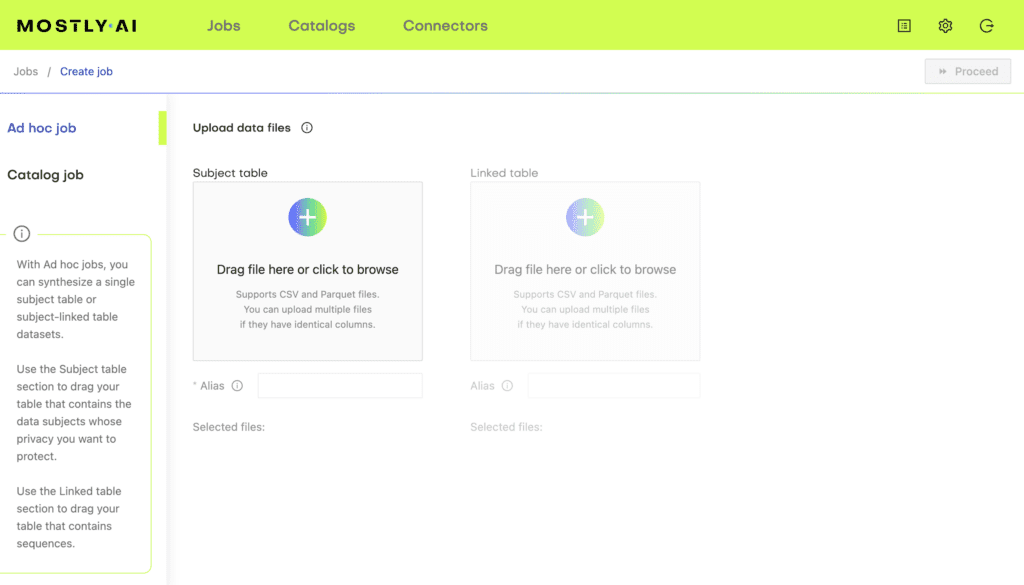

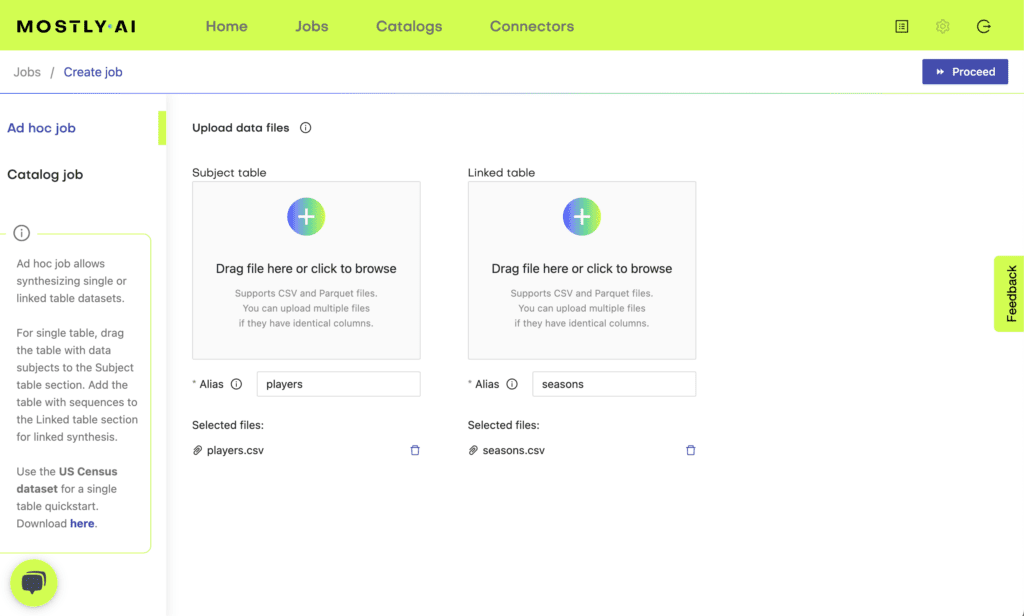

Step 1 - Upload your subject table

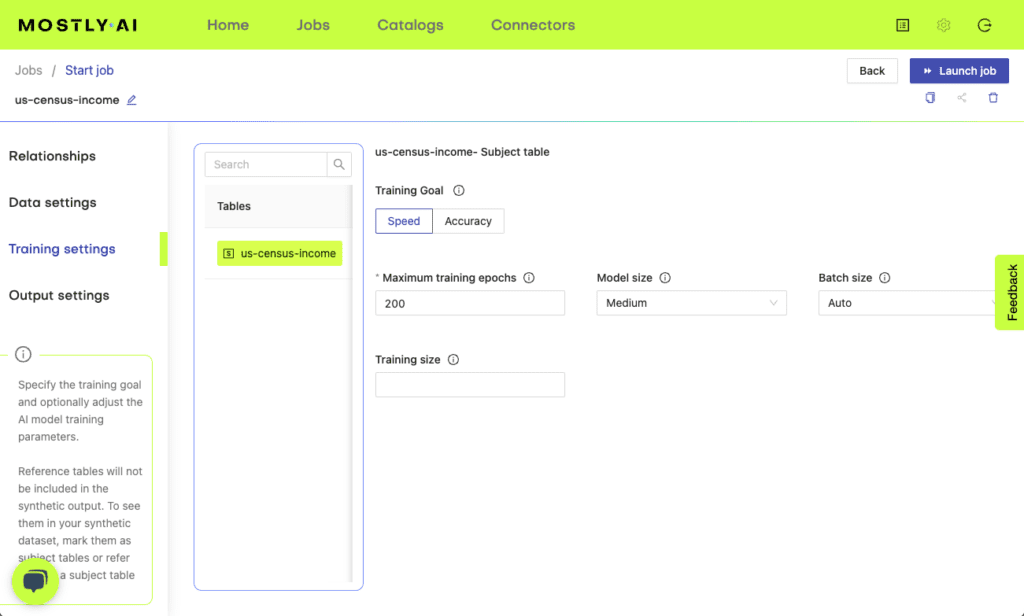

Once you are inside MOSTLY AI’s synthetic data platform, you can upload your subject table. Click on Jobs, then on Launch a new job. Your subject table needs to be in CSV or Parquet format. We recommend using Parquet files.

Feel free to upload your own dataset - it will be automatically deleted once the synthetic data generation has taken place. MOSTLY AI’s synthetic data platform runs in a secure cloud environment, and your data is protected by the strictest data protection laws and policies in the world, since we are a company with roots in the EU.

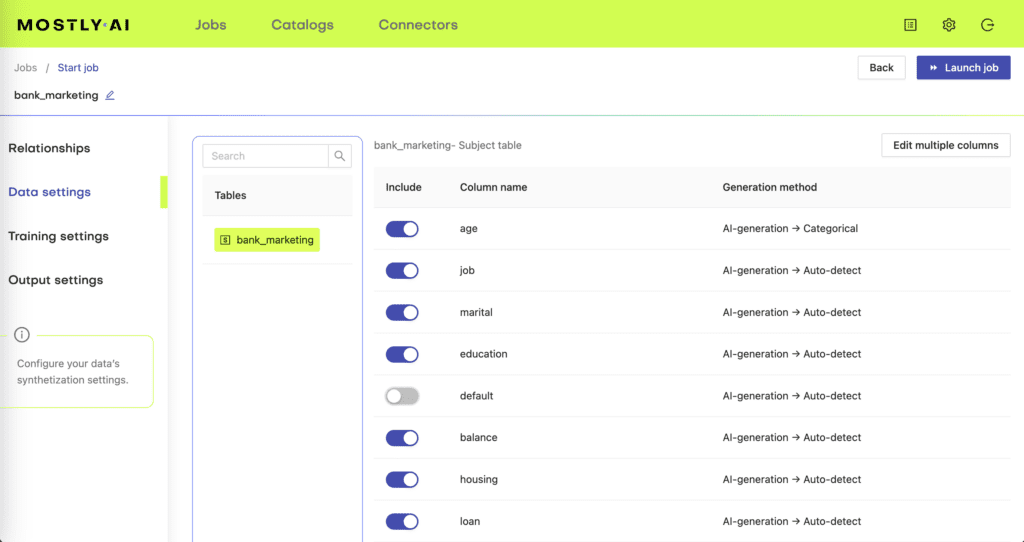

Step 2 - Check column types

Once you upload your subject table, it’s time to check your table’s columns under the Table details tab. MOSTLY AI’s synthetic data platform automatically identifies supported column types, but you can change the detected types if needed. Your columns can be:

- Numerical - any number with up to eight digits after the decimal point

- Datetime - with hours, minutes, seconds or milliseconds if available, but a simple date format with YYYY-MM-DD also works

- Categorical - with defined categories, such as gender, marital status, language or educational level

There are other column types you can use too, for example, text or location coordinates, but these are the main column types automatically detected by the synthetic data generator.

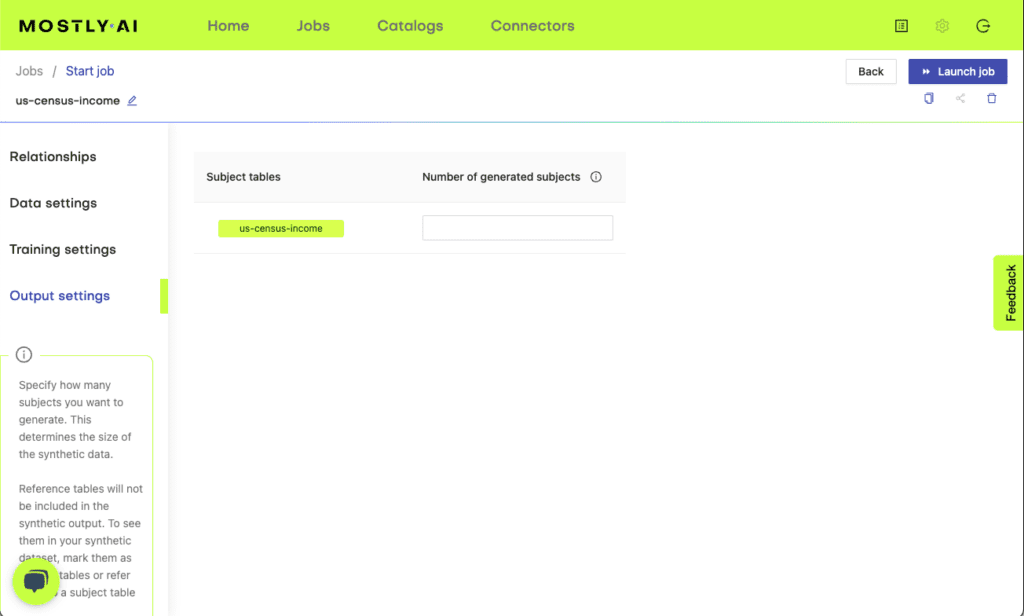

Step 3 - Train and generate

Under the Settings tab, you can change how the synthesization is done. You can specify how many data subjects the synthetic data generator should learn from and how many it should generate. Changing these settings makes sense for different use cases.

For example, if you want to generate synthetic data for software testing, you might choose to downsample your original dataset into smaller, more manageable chunks. You can do this by entering a smaller number of generated subjects under Output settings than there are in your original subject table.

Pro tip from our data scientists: enter a smaller number of training subjects than what your original dataset has to launch a trial synthesization. Let’s say you have 1M rows of data. Use only 10K of the entire data set for a test run. This way you can check for any issues quickly. Once you complete a successful test run, you can use all of your data for training. If you leave the Number of training subjects field empty, the synthetic data generator will use all of the subjects of your original dataset for the synthesization.

Generating more data samples than what was in the original dataset can be useful too. Using synthetic data for machine learning model training can significantly improve model performance. You can simply boost your model with more synthetic samples than what you have in production or upsample minority records with synthetic examples.

You can also optimize for a quicker synthesization by changing the Training goal to Speed from Accuracy.

Once the process of synthesization is complete, you can download your very own synthetic data! Your trained model is saved for future synthetic data generation jobs, so you can always go back and generate more synthetic records based on the same original data. You can also choose to generate more data or to download the Quality Assurance report.

Step 4 - Check the quality of your synthetic data

Each synthetic data set generated on MOSTLY AI’s synthetic data platform comes with an interactive quality assurance report. If you are new to synthetic data generation or less interested in the data science behind generative AI, simply check if the synthetic data set passed your accuracy expectations. If you would like to dive deeper into the specific distributions and correlations, take a closer look at the interactive dashboards of the QA report.

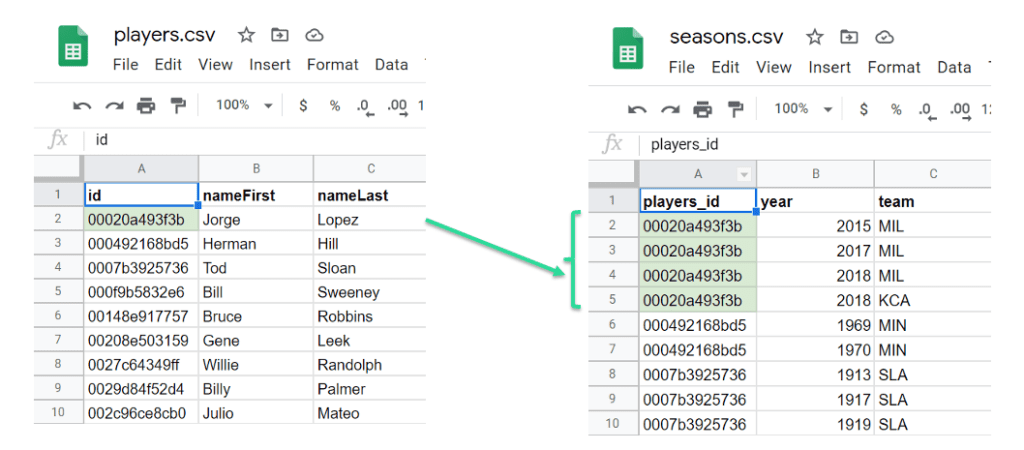

How and when to synthesize data in two tables?

Synthesizing data in two separate tables is necessary when your dataset contains temporal information. In simpler terms, to synthesize events, you need to separate your data subjects (the people or entities to whom the events or behavior belong) from the events themselves. For example, credit card transactions or patient journeys are events that need to be handled differently than descriptive subject tables. This so-called time-series or behavioral data needs to be included in linked tables.

What are linked tables?

Now we are getting to the exciting part of synthetic data generation, which can unlock the most valuable behavioral datasets, like transaction data, CRM data or patient journeys. Linked tables containing rich behavioral data are where AI-powered synthetic data generators really shine, thanks to their ability to pick up on patterns in massive datasets that would otherwise be invisible to the naked eyes of data scientists and BI experts.

These are also among the most sensitive data types, full of extremely valuable (think customer behavior), yet off-limits, personally identifiable, juicy details. Behavioral data is hard to anonymize without destroying its utility. Synthetic behavioral data generation is a great tool for overcoming this so-called privacy-utility trade-off.

How to create linked tables?

The structure of your sample needs to follow the subject table - linked table framework. We already discussed subject tables: the key is to make sure that information about one data subject is contained in one row only. You should move columns that are static to the subject table and model the rest as a linked table.

MOSTLY AI’s algorithm learns statistical patterns distributed in rows, so if you have information that belongs to a single individual across multiple rows, you’ll be creating phantom data subjects. The resulting synthetic data might include phantom behavioral patterns not present in the original data.
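A minimal pandas sketch of this pre-processing step, assuming a flat export where customer attributes are repeated on every transaction row. The function split_subject_and_linked, the column names and the data are hypothetical; the function refuses to treat a column as static if it varies within a subject.

```python
import pandas as pd

# A flat export where customer attributes are repeated on every transaction row.
flat = pd.DataFrame({
    "customer_id": [1, 1, 1, 2, 2],
    "birth_year": [1980, 1980, 1980, 1995, 1995],
    "country": ["AT", "AT", "AT", "DE", "DE"],
    "tx_time": pd.to_datetime(["2023-01-01", "2023-01-05", "2023-02-01", "2023-01-03", "2023-01-04"]),
    "amount": [12.5, 40.0, 7.9, 100.0, 3.2],
})


def split_subject_and_linked(df: pd.DataFrame, id_column: str, static_columns: list[str]):
    """Move static columns to a one-row-per-subject table; keep events in a linked table."""
    # A column only belongs in the subject table if it never varies within a subject.
    varies = df.groupby(id_column)[static_columns].nunique(dropna=False).gt(1).any()
    if varies.any():
        raise ValueError(f"not static within a subject: {list(varies[varies].index)}")
    subjects = df[[id_column, *static_columns]].drop_duplicates(subset=id_column).reset_index(drop=True)
    linked = df.drop(columns=static_columns)
    return subjects, linked


subjects, linked = split_subject_and_linked(flat, "customer_id", ["birth_year", "country"])
print(subjects)
print(linked)
```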

The perfect setup for synthetic data generation

Your ideal synthetic data generation setup is one where the subject table’s IDs refer to the events contained in the linked table. The linked table contains several rows that refer to the same subject ID - these are events that belong to the same individual.

Keeping your subject table and linked table aligned is the most important part of a successful synthetic data generation. Include the ID columns in both tables as primary and foreign keys for establishing the referential relationship.
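A quick way to verify this alignment before uploading is to check that the primary key is unique and that every foreign key in the linked table points to an existing subject. A minimal sketch; the function check_keys and the column names id and customers_id are illustrative assumptions.

```python
import pandas as pd


def check_keys(subjects: pd.DataFrame, linked: pd.DataFrame, primary_key: str, foreign_key: str) -> list[str]:
    """Return referential-integrity problems between a subject table and a linked table."""
    problems = []
    if subjects[primary_key].duplicated().any():
        problems.append("primary key values are not unique in the subject table")
    # Linked rows whose foreign key has no matching subject would become orphaned events.
    orphans = ~linked[foreign_key].isin(subjects[primary_key])
    if orphans.any():
        problems.append(f"{int(orphans.sum())} linked row(s) reference a subject that does not exist")
    return problems


customers = pd.DataFrame({"id": [1, 2, 3], "country": ["AT", "DE", "FR"]})
transactions = pd.DataFrame({"customers_id": [1, 1, 2, 4], "amount": [12.5, 40.0, 7.9, 3.2]})
issues_found = check_keys(customers, transactions, primary_key="id", foreign_key="customers_id")
print(issues_found)  # ['1 linked row(s) reference a subject that does not exist']
```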

How to connect subject and linked tables for synthesization?

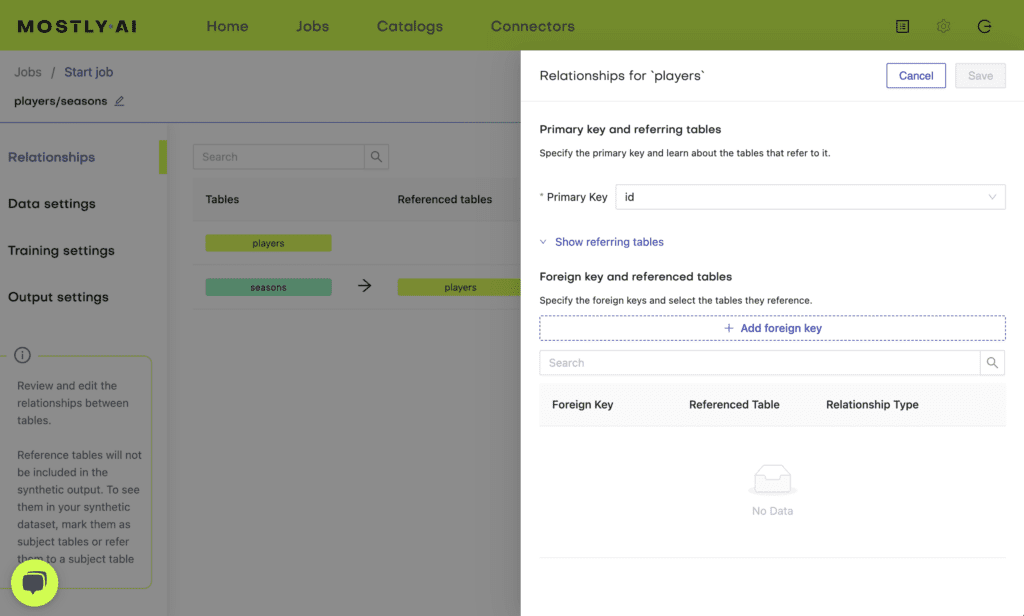

MOSTLY AI’s synthetic data generation platform offers an easy-to-use, no-code interface where tables can be linked and synthesized. Simply upload your subject table and linked tables.

The platform automatically detects primary keys (the id column) and foreign keys (the <subject_table_name>_id column) once the subject table and the linked tables are specified. You can also select these manually. Once you have defined the relationship between your tables, you are ready to launch your synthesization for two tables.

Synthetic data types and what you should know about them

The most common data types - numerical, categorical and datetime - are recognized by MOSTLY AI and handled accordingly. Here is what you should know when generating synthetic data from different types of input data.

Synthetic numeric data

Columns that contain only numbers are automatically treated as numeric columns. Synthetic numeric data keeps all the variable statistics, such as mean, variance and quantiles. N/A values are handled separately, and their proportion is retained in the synthetic data. MOSTLY AI automatically detects missing values and reproduces them in the synthetic data, for example, when the likelihood of N/A depends on other variables. N/A values need to be encoded as empty strings.
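If a source system uses placeholder codes for missing values, they can be turned into real missing values before export so that they end up as empty fields in the CSV. A minimal pandas sketch; the placeholder codes -999, "N/A" and "n/a" are hypothetical examples, and pandas writes NaN as an empty field by default (na_rep="").

```python
import io

import numpy as np
import pandas as pd

raw = pd.DataFrame({
    "income": [52000, -999, 61000, None],
    "status": ["N/A", "employed", "n/a", "retired"],
})

# Hypothetical placeholder codes used by a source system to mean "missing".
PLACEHOLDERS = [-999, "N/A", "n/a"]
clean = raw.replace(PLACEHOLDERS, np.nan)

buffer = io.StringIO()
clean.to_csv(buffer, index=False)  # NaN is written as an empty field
print(buffer.getvalue())
```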

Extreme values in numeric data have a high risk of disclosing sensitive information, for example, by exposing the CEO in a payroll database as the highest earner. MOSTLY AI’s built-in privacy mechanism replaces the smallest and largest outliers with the smallest and largest non-outliers to protect the subjects’ privacy.

If only a few individuals determine the minimum and maximum values, these values can differ in the synthetic data. One more reason to give the CEO’s salary to as many people in your company as possible is to protect his or her privacy - remember this argument next time equal pay comes up. 🙂 Kidding aside, removing these extreme outliers is a necessary step to protect against membership inference attacks. MOSTLY AI’s synthetic data platform does this automatically, so you don’t have to worry about outliers.
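To illustrate the general idea of replacing the most extreme values with the nearest non-extreme ones (often called top- and bottom-coding), here is a small pandas sketch. It is not MOSTLY AI's actual privacy mechanism, whose thresholds are not described in this post; the function clip_extremes and its parameter k are hypothetical, and the salaries are made up.

```python
import pandas as pd


def clip_extremes(values: pd.Series, k: int = 1) -> pd.Series:
    """Replace the k smallest and k largest values with the nearest remaining values.

    Illustrative top/bottom-coding only; not MOSTLY AI's actual mechanism.
    """
    ordered = values.dropna().sort_values()
    if len(ordered) <= 2 * k:
        raise ValueError("need more than 2 * k non-missing values")
    lower = ordered.iloc[k]       # smallest value that is kept as-is
    upper = ordered.iloc[-k - 1]  # largest value that is kept as-is
    return values.clip(lower=lower, upper=upper)


salaries = pd.Series([41_000, 45_000, 47_500, 52_000, 58_000, 61_000, 390_000])
print(clip_extremes(salaries, k=1).tolist())
# [45000, 45000, 47500, 52000, 58000, 61000, 61000]
```

The CEO-style salary of 390,000 disappears from the output, while the bulk of the distribution stays untouched.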

Synthetic datetime data type

Columns in datetime format are automatically treated as datetime columns. Just like synthetic numeric data, extreme datetime values are protected and the distribution of N/As is preserved. In linked tables, using the ITT encoding for inter-transaction time improves the accuracy of your synthetic data on the time between events, for example, when synthesizing e-commerce data with order statuses such as order time, dispatch time and arrival time.
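The platform applies the ITT encoding itself. Purely to illustrate what "time between events" means, here is how the gap between consecutive events of the same subject can be computed in pandas; the order events are made up, and each order plays the role of a subject.

```python
import pandas as pd

# Made-up order events; each order_id plays the role of a subject.
events = pd.DataFrame({
    "order_id": [1, 1, 1, 2, 2],
    "status": ["ordered", "dispatched", "arrived", "ordered", "dispatched"],
    "timestamp": pd.to_datetime([
        "2023-05-01 10:00", "2023-05-02 09:30", "2023-05-04 16:00",
        "2023-05-03 12:00", "2023-05-03 18:00",
    ]),
})

# Sort within each subject, then take the gap to the previous event of the same subject.
events = events.sort_values(["order_id", "timestamp"]).reset_index(drop=True)
events["time_since_previous"] = events.groupby("order_id")["timestamp"].diff()
print(events)
```

The first event of each subject has no predecessor, so its gap is NaT.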

Synthetic categorical data type

Categorical data comes with a fixed number of possible values. For example, marital status, qualifications or gender in a database describing a population of people. Synthetic data retains the probability distribution of the categories, containing only those categories present in the original data. Rare categories are protected independently for each categorical column.

Synthetic location data type

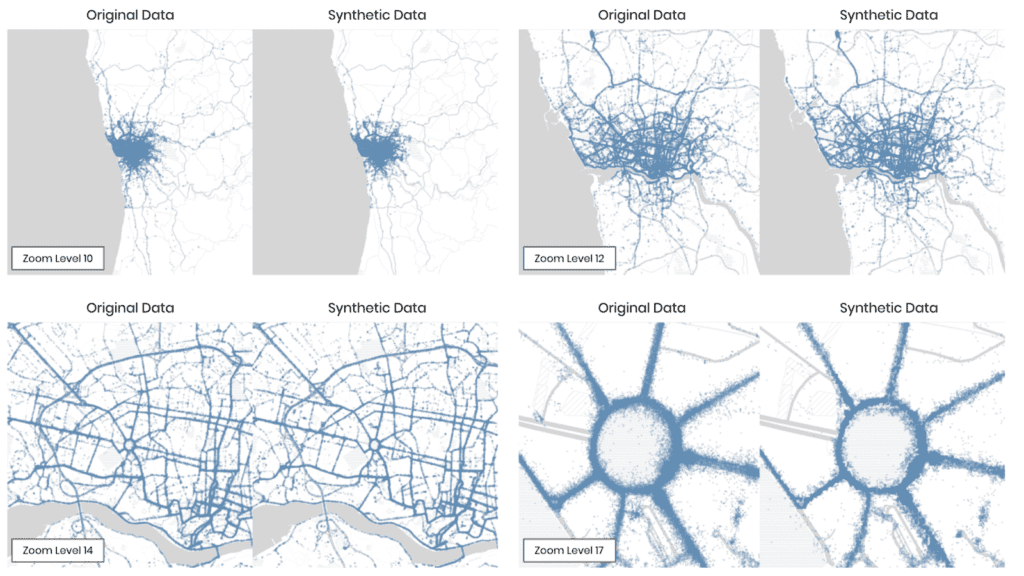

MOSTLY AI’s synthetic data generator can synthesize geolocation coordinates with high accuracy. You need to make sure that latitude and longitude coordinates are in a single field, separated by a comma, like this: 37.311, 173.8998
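If latitude and longitude are stored in separate columns, a small pandas step can merge them into the single comma-separated field described above. The column names latitude, longitude and location are assumptions.

```python
import pandas as pd

stores = pd.DataFrame({"latitude": [37.311, 48.2082], "longitude": [173.8998, 16.3738]})

# Combine both coordinates into one "lat, long" text field and drop the originals.
stores["location"] = stores["latitude"].astype(str) + ", " + stores["longitude"].astype(str)
stores = stores.drop(columns=["latitude", "longitude"])
print(stores["location"].tolist())  # ['37.311, 173.8998', '48.2082, 16.3738']
```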

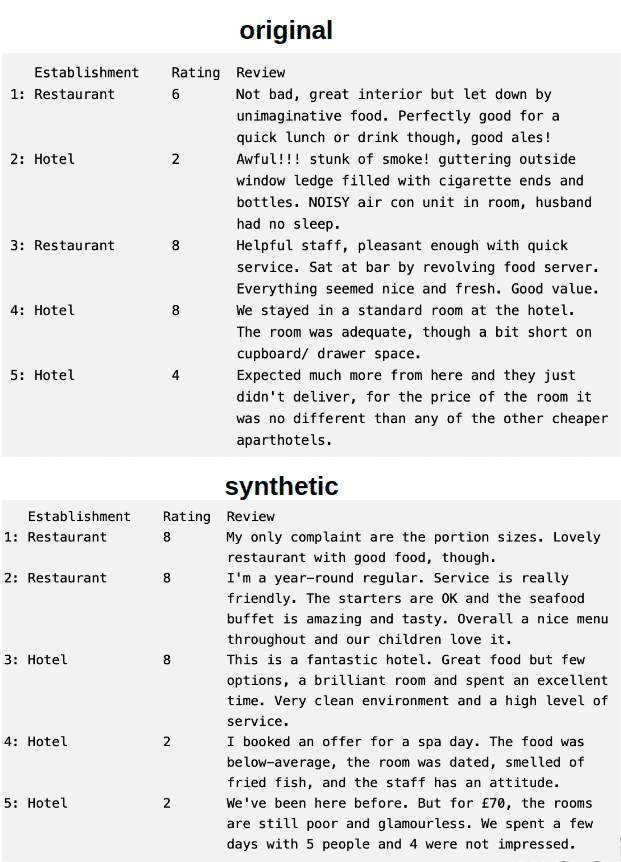

Synthetic text data type

MOSTLY AI’s synthetic data generator can synthesize unstructured texts of up to 1,000 characters. The resulting synthetic text is representative of the terms, tokens, their co-occurrence and the sentiment of the original. Synthetic text is especially useful for machine learning use cases, such as sentiment analysis and named-entity recognition. You can use it to generate synthetic financial transactions, user feedback or even medical assessments. MOSTLY AI is language-agnostic, so the synthetic text is not biased towards any particular language.

If a text column contains values with specific patterns, such as email addresses, phone numbers, transaction IDs or social security numbers, you can improve its quality by changing its type to character sequence.

Configure synthetic data generation model training

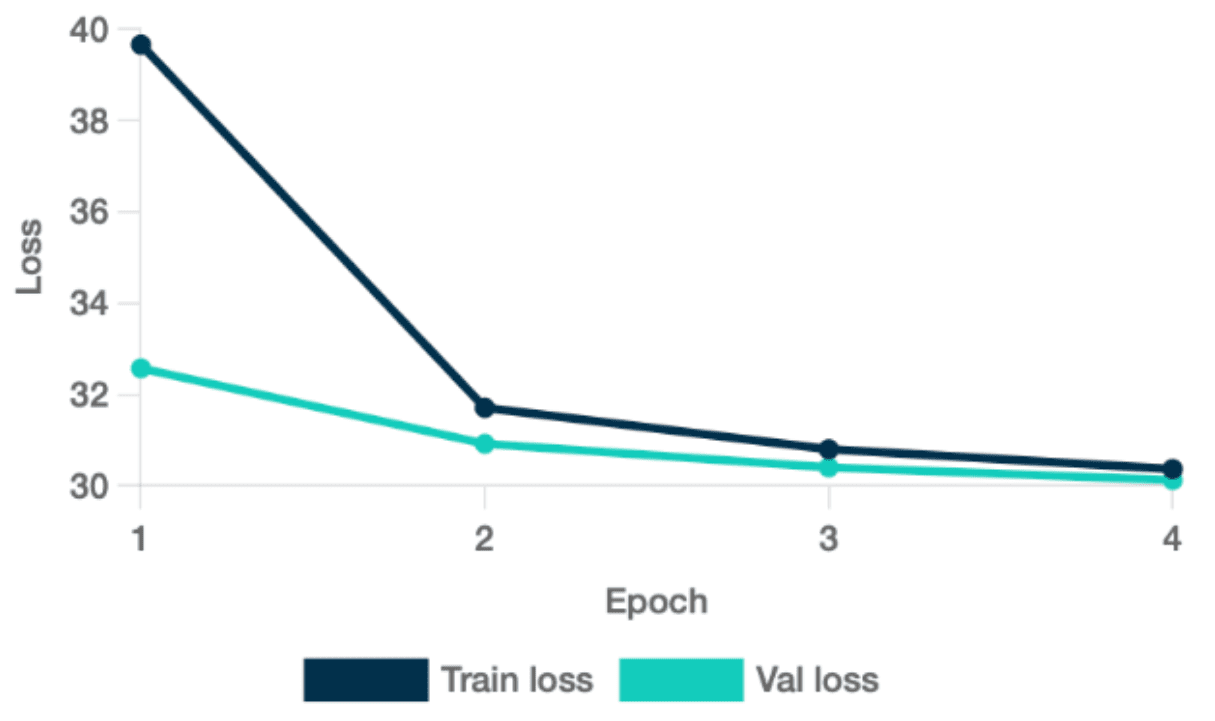

You can optimize the synthetic data generation process for accuracy or speed, depending on what you need most. Since the synthetic data generator learns the main statistical patterns in the first epochs, you can stop training early by selecting the Speed option if you don’t need minority classes and detailed relationships in your synthetic data.

When you optimize for accuracy, the training continues until no further model improvement can be achieved. Optimizing for accuracy is a good idea when you are generating synthetic data for data analytics use cases or outlier detection. If you want to generate synthetic data for software testing, you can optimize for speed, since high statistical accuracy is not an important feature of synthetic test data.
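The platform handles this for you; the sketch below only illustrates the two stopping rules described above. "Speed" stops after a small, fixed number of epochs, while "accuracy" keeps going until the validation loss has stopped improving for `patience` epochs (classic early stopping). The function name, `patience`, `speed_epochs` and the loss values are all assumptions for illustration.

```python
def epochs_to_train(val_losses, mode="accuracy", patience=3, speed_epochs=2):
    """Return the epoch at which training would stop.

    Hypothetical helper: 'speed' stops after speed_epochs; 'accuracy' stops once
    the validation loss has not improved for `patience` consecutive epochs.
    """
    if mode == "speed":
        return min(speed_epochs, len(val_losses))
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses)


losses = [2.0, 1.2, 0.9, 0.85, 0.84, 0.86, 0.85, 0.87, 0.9]  # made-up validation losses
print(epochs_to_train(losses, mode="speed"), epochs_to_train(losses, mode="accuracy"))
```

Most of the loss reduction happens in the first few epochs, which is why stopping early still captures the main statistical patterns.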

Synthetic data use cases from no-brainer to genius

The most common synthetic data use cases range from simple ones, like data sharing, to complex ones, like explainable AI, which is part data sharing and part data simulation.

Another entry-level synthetic data generation project is generating realistic synthetic test data, for example for stress testing and for the delight of your offshore QA teams. As we all know, production data should never, ever see the inside of test environments (right?). However, mock data generators cannot mimic the complexity of production data. Synthetic test data is the perfect solution, combining realism and safety in one.

Synthetic data is also one of the most mature privacy-enhancing technologies. If you want to share your data with third parties safely, it’s a good idea to run it through a synthetic data generator first. And the genius part? Synthetic data is set to become a fundamental part of explainable and fair AI, ready to fix human biases embedded in datasets and to provide a data window into the souls of algorithms.

Expert synthetic data help every step of the way

No matter which use case you decide to tackle first, we are here for you from the first steps to the last and beyond! If you would like to dive deeper into synthetic data generation, feel free to browse and search MOSTLY AI’s Documentation. But most importantly, practice makes perfect, so register your free-forever account and launch your first synthetic data generation job now.

✅ Data prep checklist for synthetic data generation

1. SPLIT SINGLE SEQUENTIAL DATASETS INTO SUBJECT AND LINKED TABLES

If your raw data contains events and sits in a single table, you need to split it into a subject table and a linked table by moving the sequential event data points into a separate table. Make sure that the new table is linked to the subject table by a foreign key that references the subject table's primary key. That is, the linked table refers to each individual or entity in the subject table by the relevant ID.
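Here is a minimal sketch of that split using plain Python rows, assuming you already know which columns are static. The function `split_subject_and_linked` and the banking column names are hypothetical. The ID column is kept in both outputs, so it serves as the primary key in the subject table and as the foreign key in the linked table.

```python
def split_subject_and_linked(rows, id_column, static_columns):
    """Split one event-level table into a subject table and a linked table.

    Hypothetical helper: static columns go to the subject table (one row per ID);
    all other columns, plus id_column as the foreign key, stay in the linked table.
    """
    subjects = {}
    linked = []
    for row in rows:
        subject_id = row[id_column]
        subject_row = {id_column: subject_id}
        subject_row.update({col: row[col] for col in static_columns})
        existing = subjects.setdefault(subject_id, subject_row)
        if existing != subject_row:
            raise ValueError(f"static columns differ for subject {subject_id!r}")
        linked.append({col: val for col, val in row.items() if col not in static_columns})
    return list(subjects.values()), linked


transactions = [
    {"account_id": "A1", "account_type": "savings", "amount": 120.0, "booked_at": "2023-01-03"},
    {"account_id": "A1", "account_type": "savings", "amount": -40.0, "booked_at": "2023-01-09"},
    {"account_id": "B7", "account_type": "checking", "amount": 15.5, "booked_at": "2023-01-04"},
]
subject_table, linked_table = split_subject_and_linked(transactions, "account_id", ["account_type"])
print(subject_table)
print(linked_table)
```

Raising an error when a "static" column varies within one ID is deliberate: it signals that the column actually belongs in the linked table.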

How sequential data is structured also matters. If your events are stored in columns, reshape them into rows so that each row describes a separate event.
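As an illustration, the sketch below turns an order table with one timestamp column per status into one row per event, sorted by time within each order. The function and the `status`/`timestamp` output columns are hypothetical names. It assumes timestamps are ISO 8601 strings, which sort chronologically as plain text, and it skips events that have not happened yet (`None`).

```python
def columns_to_rows(wide_rows, id_column, event_columns):
    """Reshape events stored in columns into one time-ordered row per event."""
    long_rows = []
    for row in wide_rows:
        for column in event_columns:
            timestamp = row.get(column)
            if timestamp is not None:  # skip events that haven't happened yet
                long_rows.append(
                    {id_column: row[id_column], "status": column, "timestamp": timestamp}
                )
    # ISO 8601 strings sort chronologically, so this orders events within each ID
    long_rows.sort(key=lambda r: (r[id_column], r["timestamp"]))
    return long_rows


orders = [
    {"order_id": 1, "ordered": "2023-03-01T09:15",
     "dispatched": "2023-03-02T14:00", "arrived": "2023-03-04T11:30"},
    {"order_id": 2, "ordered": "2023-03-03T18:40",
     "dispatched": "2023-03-05T08:05", "arrived": None},
]
events = columns_to_rows(orders, "order_id", ["ordered", "dispatched", "arrived"])
for event in events:
    print(event)
```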

Typical datasets synthesized for a wide variety of use cases include different types of time series data, such as patient journeys, where a list of medical events is linked to individual patients. Synthetic data in banking is often created from transaction datasets, where the subject table contains accounts and the linked table contains the transactions belonging to the accounts referenced in the subject table. These are all time-dependent, sequential datasets where chronological order is an important part of the data’s intelligence.

When preparing your datasets for synthesization, always consider the following list of requirements:

| Subject table | Linked table |
| --- | --- |
| Each row belongs to a different individual | Several rows belong to the same individual |
| The subject ID (primary key in SQL) must be unique | Each row needs to be linked to one of the unique IDs in the subject table (foreign key in SQL) |
| Rows should be treated independently | Several rows can be interrelated |
| Includes only static information | Includes only dynamic information, where sequences must be time-ordered if available |
| The focus is on columns | The focus is on rows and columns |
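Before uploading, you could check the key requirements from the table with a quick script like the hypothetical `check_tables` below: unique subject IDs, no linked rows pointing at a missing subject, and time-ordered events within each subject. It is a sketch under the assumption that both tables are lists of dicts and that time values are comparable (for example ISO 8601 strings); it is not part of MOSTLY AI's tooling.

```python
from collections import Counter


def check_tables(subject_rows, linked_rows, key, foreign_key, time_column=None):
    """Return a list of violations of the subject/linked table requirements."""
    problems = []

    id_counts = Counter(row[key] for row in subject_rows)
    duplicates = sorted((k for k, n in id_counts.items() if n > 1), key=str)
    if duplicates:
        problems.append(f"duplicate subject IDs: {', '.join(map(str, duplicates))}")

    orphans = sorted({row[foreign_key] for row in linked_rows
                      if row[foreign_key] not in id_counts}, key=str)
    if orphans:
        problems.append(f"linked rows without a subject: {', '.join(map(str, orphans))}")

    if time_column is not None:
        last_seen, unordered = {}, set()
        for row in linked_rows:
            subject, moment = row[foreign_key], row[time_column]
            if subject in last_seen and moment < last_seen[subject]:
                unordered.add(subject)
            last_seen[subject] = moment
        if unordered:
            problems.append(f"events not time-ordered for: {', '.join(sorted(map(str, unordered)))}")
    return problems


subjects = [{"patient_id": "P1"}, {"patient_id": "P2"}]
events_ok = [
    {"patient_id": "P1", "event": "admission", "date": "2023-01-02"},
    {"patient_id": "P1", "event": "discharge", "date": "2023-01-05"},
    {"patient_id": "P2", "event": "admission", "date": "2023-02-10"},
]
print(check_tables(subjects, events_ok, "patient_id", "patient_id", "date"))
```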

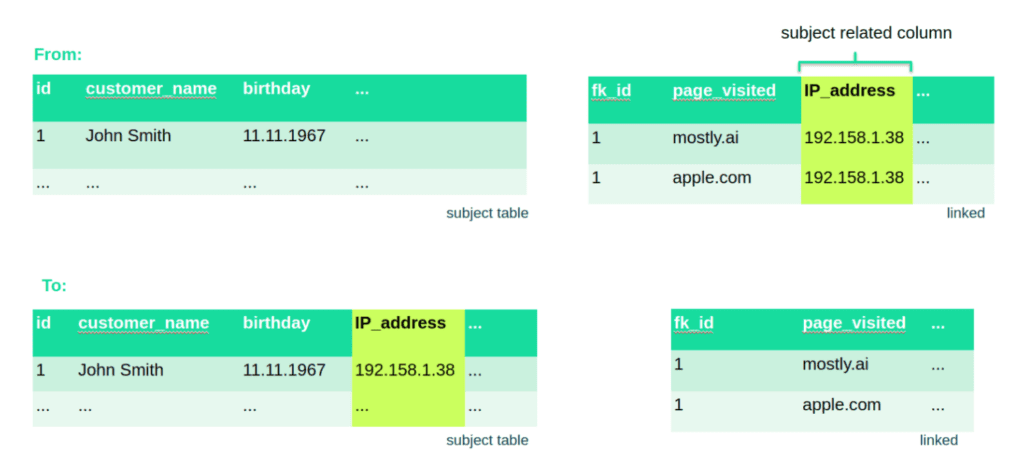

2. MOVE ALL STATIC DATA TO THE SUBJECT TABLE

Check your linked table containing events. If it holds static information that describes the subject, move that column to the subject table. A good example is a list of page visits, where each page visit is an event that belongs to a certain user. A user's IP address is the same across all of their events: it's static and describes the user, not the event. In this case, the IP_address column needs to be moved over to the subject table.
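To find such columns, you can test which linked-table columns never change within a subject. The helper `find_static_columns` and the page-visit data are hypothetical. Note one caveat of this simple approach: if every subject has only a single event, every column looks static, so review the result before moving anything.

```python
def find_static_columns(linked_rows, foreign_key):
    """List linked-table columns whose value never changes within a subject."""
    columns = sorted({col for row in linked_rows for col in row} - {foreign_key})
    static = []
    for column in columns:
        first_value = {}  # first value seen for this column, per subject
        is_static = True
        for row in linked_rows:
            value = row.get(column)
            if first_value.setdefault(row[foreign_key], value) != value:
                is_static = False
                break
        if is_static:
            static.append(column)
    return static


page_visits = [
    {"user_id": "u1", "ip_address": "203.0.113.7", "page": "/home", "visited_at": "2023-05-01T10:00"},
    {"user_id": "u1", "ip_address": "203.0.113.7", "page": "/pricing", "visited_at": "2023-05-01T10:02"},
    {"user_id": "u2", "ip_address": "198.51.100.23", "page": "/home", "visited_at": "2023-05-02T08:30"},
    {"user_id": "u2", "ip_address": "198.51.100.23", "page": "/docs", "visited_at": "2023-05-02T08:31"},
]
print(find_static_columns(page_visits, "user_id"))
```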

3. CHECK AND CONFIGURE DATA TYPES

The most common data types (numerical, categorical and datetime) are automatically detected by MOSTLY AI’s synthetic data platform. Check whether the data types were detected correctly and change the encoding where needed. If the automatically detected data types don’t match your expectations, double-check the input data. Chances are that a formatting error is leading detection astray, and you might want to fix that before synthesization. Here are the cases when you should check and manually adjust data types:

- If your categories are encoded as numbers, they will be automatically detected as digit columns, so change them to categorical

- If your column format is not correct or if empty data is encoded as non-empty string/token, your digit/datetime column might be treated as a categorical column
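A quick pre-upload check can flag both cases in a column of raw string values. In the hypothetical sketch below, `PLACEHOLDER_TOKENS` is an assumed list of "empty" markers and `max_codes` is an assumed cut-off for how many distinct numbers still look like category codes; tune both to your data.

```python
PLACEHOLDER_TOKENS = {"", "n/a", "na", "null", "none", "-", "?"}  # assumed "empty" markers


def is_number(text):
    try:
        float(text)
        return True
    except ValueError:
        return False


def suggest_type_fix(values, max_codes=10):
    """Flag numeric columns polluted by placeholder tokens or made of category codes."""
    present = [v for v in values if v.strip().lower() not in PLACEHOLDER_TOKENS]
    if not present or not all(is_number(v) for v in present):
        return None  # genuinely non-numeric (or entirely empty) column
    if len(present) < len(values):
        return "replace_placeholders"  # would otherwise be read as categorical
    if len(set(present)) <= max_codes:
        return "maybe_categorical"  # few distinct numbers: possibly category codes
    return None


print(suggest_type_fix(["12.5", "N/A", "7.0"]))
print(suggest_type_fix(["1", "2", "3", "1", "2"]))
```

Replacing tokens like `N/A` with real empty values lets the column be detected as numeric, while the distribution of missing values is still preserved.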

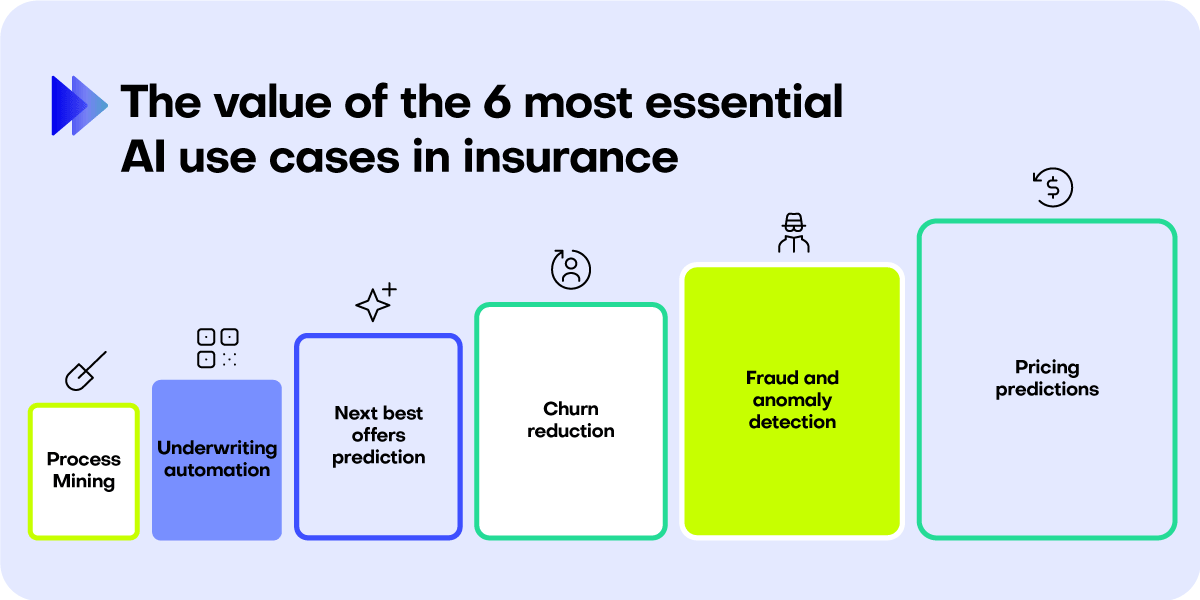

Data intelligence is locked up. Machine learning and AI in insurance are hard to scale, and legal obligations make the job of data scientists and actuaries extremely difficult. How can you still innovate under these circumstances? The insurance industry is being disrupted as we speak by agile, fast-moving insurtech start-ups and by new AI-enabled services from forward-thinking insurance companies.

According to McKinsey, AI will “increase productivity in insurance processes and reduce operational expenses by up to 40% by 2030”. At the same time, old-school insurance companies struggle to make use of the vast troves of data they are sitting on, and sooner or later, ambitious insurtechs will turn their B2B products into business-to-consumer offerings, ready to take sizeable market shares. Although traditional insurance companies’ drive to become data-driven and AI-enabled is strong, organizational challenges are hindering their efforts.

Laggards have a lot to lose. If they are to stay competitive, insurance companies need to redesign their data and analytics processes and treat the development of data-centric AI in insurance with urgency.

The bird's-eye view on data in the insurance industry

Data has always been the bread and butter of insurers, and data-driven decisions in the industry even predate the appearance of computers. Business-critical metrics have been the guiding force in everything insurance companies do, from pricing to risk assessment.

But even today, most of these metrics are hand-crafted with traditional, rule-based tools that lack dynamism, speed and contextual intelligence. The scene is ripe for an AI revolution.

The insurance industry relies heavily on push sales techniques. Next best products and personalized offers need to be data-driven. For cross-selling and upselling activities to succeed, an uninterrupted data flow across the organization is paramount, even though Life and Non-Life business lines are often completely separated. Missed data opportunities are everywhere and require a commitment to change the status quo.

Interestingly, data sharing is both forbidden and required by law, depending on the line of business and the properties of the data assets in question. Regulations are plentiful and vary across the globe, making it difficult to pursue ambitious global strategies and turning compliance into a costly and tedious business. Privacy-enhancing technologies (PETs) can help, and a modern data stack cannot do without them. Again, insurance companies should carefully consider how they build PETs into their data pipelines for maximum effect.

The fragmented, siloed nature of insurance companies' data architecture can benefit hugely from using PETs, like synthetic data generators, enabling cloud adoption, data democratization and the creation of a consolidated data intelligence across the organization.

Figure (labels): Structured data; Semi-structured data (wearables, smart home IoT, etc.); Unstructured data; External data sources; Intelligent automation; Data science/analytics; Data sharing

Data Sharing

Insurtech companies willing to break with traditional ways and adopt AI in insurance in earnest have been stealing the show left, right and center. As far back as 2017, Lemonade, a US insurtech company, announced that it had paid out the fastest claim in the history of the insurance industry: in three seconds.

If insurance companies are to stay competitive, they need to turn their thinking around and start redesigning their business with sophisticated algorithms and data in mind. Instead of programming the data, the data should program AI and machine learning algorithms. That is the only way to make truly data-driven decisions; everything else is smoke and mirrors. Some are wary of artificial intelligence and would prefer to stick to the old ways. That is simply no longer possible.

The shift in how things get done in the insurance industry is already well underway. Understanding how AI systems work in insurance, and what their potential is in terms of best practices, standards and use cases, is what will make this transition safe and painless, promising larger profits, better services and frictionless products throughout the insurance market.

What drives you? The three ways AI can have an impact

AI and machine learning can typically have an impact in three ways.

1. Increase profits

Examples include campaign optimization, targeting, next best offer/action, and best rider based on contract and payment history. According to the AI in insurance 2031 report by Allied Market Research, “the global AI in the insurance industry generated $2.74 billion in 2021, and is anticipated to generate $45.74 billion by 2031.”

2. Decrease costs

Examples of cost reduction include lower claims costs, which are made up of two elements: the claim amount and the claim survey cost. According to KPMG, “investment in AI in insurance is expected to save auto, property, life and health insurers almost US$1.3 billion while also reducing the time to settle claims and improving customer loyalty.”

3. Increase customer satisfaction

AI-supported customer service and quality assurance systems can optimize claim processing and customer experience. Even small tweaks optimized by AI algorithms can have massive effects. According to an IBM report, “claimants are more satisfied if they receive 80% of the requested compensation after 3 days, than receiving 100% after 3 weeks.”

What stops you? Five data challenges when building AI/ML models in traditional insurance companies

We spoke to insurance data practitioners about their day-to-day challenges regarding data consumption. There is plenty of room for improvement and for new tools making data more accessible, safer and compliant throughout the data product life-cycle. AI in insurance suffers from a host of problems, not entirely unrelated to each other. Here is a list of their five most pressing concerns.

1. Not enough data

Contrary to popular belief, insurance companies aren’t drowning in a sea of customer data. Since insurance companies have far fewer interactions with customers than banks or telcos do, there is less data available for making important decisions, health insurance being the notable exception. Typically, the only time customers interact with insurance providers is when the contract is signed, and if everything goes well, the company never hears from them again.

External data sources, like credit scoring data, won’t give you a competitive edge either; after all, it’s what all your competitors are looking at too. Also, the less data there is, the more important architecture, data quality and the ability to augment existing data assets become. Hence, investment in data engineering tools and capabilities should be at the top of the agenda for insurance companies. AI in insurance cannot be made a reality without meaningful, high-touch data assets.

2. Data assets are fragmented

To make things even more complicated, data sits in different systems. In some countries, insurance companies are prevented by law from merging different datasets or even managing them within the same system. For example, property-related and life-related insurance data often have to be kept separate. Data integration is therefore extremely challenging.

Cloud solutions could solve some of these issues. However, due to the sensitive nature of customer data, moving to the cloud is often impossible, and a lot of traditional insurers still keep all their data assets on premise. As a result, a well-designed data architecture is mission-critical for making data consumable. Today, there are often no data integrations in place, and a consolidated view across all the different systems is hard to create.

Access authorizations and curated datasets are in place, but accessing any data that is not part of usual business intelligence activities is a very slow and cumbersome process, keeping data scientists away from what they do best: coming up with new insights.

3. Cybersecurity is a growing problem

Very often, cybersecurity is thought of as the task of protecting perimeters from outside attacks. But did you know that 59% of privacy incidents originate with an organization’s own employees? No amount of security training can guarantee that mistakes won’t be made, so the next frontier for cybersecurity efforts should tackle the data itself.

How can data assets themselves be made less hazardous? Can old-school data masking techniques withstand a privacy attack? New types of attacks are popping up that use AI-powered tools to try to re-identify individuals based on their behavioral patterns.

These AI-based re-identification attacks are yet another reason to ditch data masking and opt for new-age privacy-enhancing technologies instead. Minimizing the amount of production data in use should be another goal added to cybersecurity professionals’ already long list.

4. Insurance data is regulated to the extreme

Since insurance is a strategically important business for governments, insurers are subject to extremely high levels of regulation. While in some instances insurance companies are required by law to share their data with competitors, in others they are prevented by law from using certain parts of their datasets, such as gender.