
What is data bias?

Data bias is the systematic error introduced into data workflows and machine learning (ML) models by inaccurate or missing data points that fail to represent the population accurately. Data bias in AI systems can lead to poor decision-making, costly compliance issues, and drastic societal consequences. Amazon’s gender-biased HR model and Google’s racially biased hate speech detector are well-known examples of data bias with significant real-world repercussions. It is no surprise, then, that 54% of top-level business leaders in the AI industry say they are “very to extremely concerned about data bias”.

With the massive new wave of interest and investment in Large Language Models (LLMs) and Generative AI, it is crucial to understand how data bias can affect the quality of these applications and the strategies you can use to mitigate this problem.

In this article, we will dive into the nuances of data bias. You will learn all about the different types of data bias, explore real-world examples involving LLMs and Generative AI applications, and learn about effective strategies for mitigation and the crucial role of synthetic data.

Data bias types and examples

There are many different types of data bias that you will want to watch out for in your LLM or Generative AI projects. This comprehensive Wikipedia list contains over 100 different types, each covering a very particular instance of biased data. For this discussion, we will focus on 5 types of data bias that are highly relevant to LLMs and Generative AI applications.

- Selection bias

- Automation bias

- Temporal bias

- Implicit bias

- Social bias

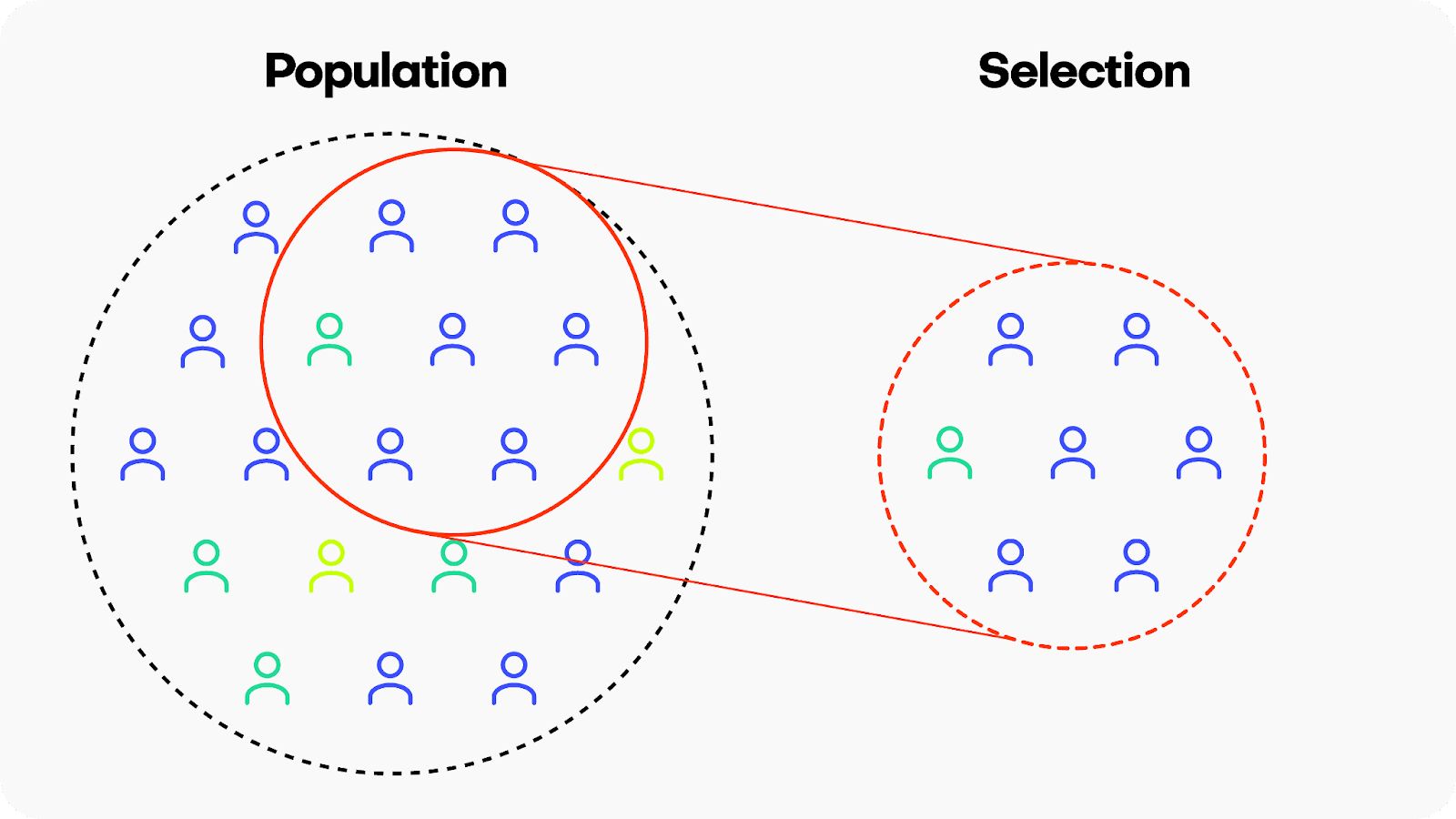

Selection bias

Selection bias occurs when the data used for training a machine learning model is not representative of the population it is intended to generalize to. This means that certain groups or types of data are either overrepresented or underrepresented, leading the model to learn patterns that may not accurately reflect the broader population. There are many different kinds of selection bias, such as sampling bias, participation bias and coverage bias.

Example: Google’s hate-speech detection algorithm Perspective is reported to exhibit bias against black American speech patterns, among other groups. Because the training data did not include sufficient examples of the linguistic patterns typical of the black American community, the model ended up flagging common slang used by black Americans as toxic. Leading generative AI companies like OpenAI, Anthropic and others are using Perspective daily at massive scale to determine the toxicity of their LLMs, potentially perpetuating these biased predictions.

Solution: Invest in high-quality, diverse data sources. When your data still has missing values or imbalanced categories, consider using synthetic data with rebalancing and smart imputation methods.

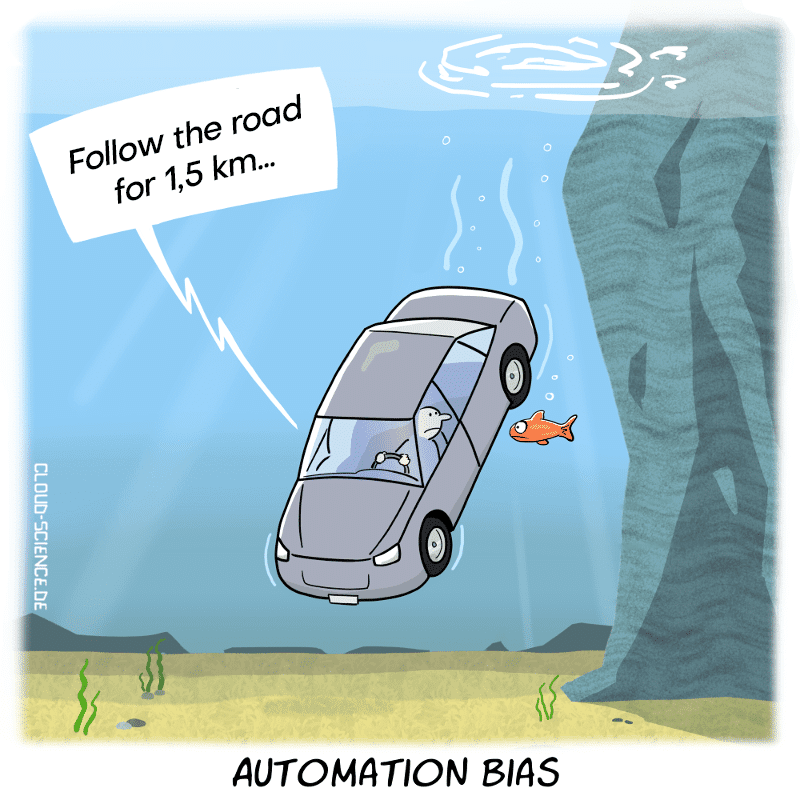

Automation bias

Automation bias is the tendency to favor results generated by automated systems over those generated by non-automated systems, irrespective of the relative quality of their outputs. This type of bias is becoming increasingly relevant as people, including top-level business leaders, rush to implement AI applications on the assumption that output from the latest, most popular tech is inherently more trustworthy or performant.

Example: In a somewhat ironic overlap of generative technologies, a 2023 study found that some Mechanical Turk workers were using LLMs to generate the data which they were being paid to generate themselves. Later studies have since shown that training generative models on generated data can create a negative loop, also called “the curse of recursion”, which can significantly reduce output quality.

Solution: Include human supervision safeguards in any mission-critical AI application.

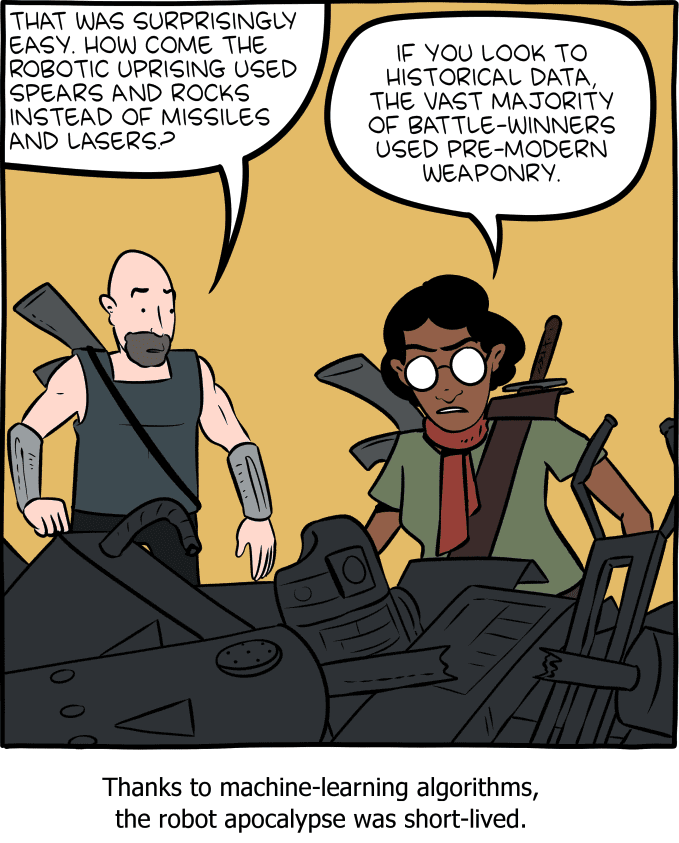

Temporal or historical bias

Temporal or historical bias arises when the training data is not representative of the current context in terms of time. Imagine a language model trained on a dataset from a specific time period, adopting outdated language or perspectives. This temporal bias can limit the model's ability to generate content that aligns with current information.

Example: ChatGPT’s long-standing September 2021 cut-off date is a clear example of a temporal bias that we have probably all encountered. Until recently, the LLM could not access training data after this date, severely limiting its applicability for use cases that required up-to-date data. Fortunately, in most cases the LLM was aware of its own bias and communicated it clearly with responses like "I'm sorry, but I cannot provide real-time information".

Solution: Invest in high-quality, up-to-date data sources. If you are still lacking data records, it may be possible to simulate them using synthetic data’s conditional generation feature.

Implicit bias

Implicit bias can happen when the humans involved in ML building or testing operate based on unconscious assumptions or preexisting judgments that do not accurately match the real world. Implicit biases are typically ingrained in individuals based on societal and cultural influences and can impact perceptions and behaviors without conscious awareness. Implicit biases operate involuntarily and can influence judgments and actions even when an individual consciously holds no biased beliefs. Because of the implied nature of this bias, it is a particularly challenging type of bias to address.

Example: LLMs and generative AI applications require huge amounts of labeled data. This labeling or annotation is largely done by human workers. These workers may operate with implicit biases. For example, in assigning a toxicity score for specific language prompts, a human annotation worker may assign an overly cautious or liberal score depending on personal experiences related to that specific word or phrase.

Solution: Invest in fairness and data bias training for your team. Whenever possible, involve multiple, diverse individuals in important data processing tasks to balance possible implicit biases.
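One way to put the "multiple, diverse individuals" advice into practice is to combine several annotators' scores and flag items where they disagree strongly. The sketch below is illustrative only: the function name, the 0 to 1 toxicity scale, and the disagreement threshold are assumptions, not part of any specific labeling tool.

```python
from statistics import median, pstdev

def aggregate_scores(scores_by_annotator, disagreement_threshold=0.25):
    """Combine toxicity scores (assumed to lie in 0..1) from several annotators.

    The median limits the influence of a single outlying annotator; a large
    spread marks the item for a second look by a human reviewer.
    """
    scores = list(scores_by_annotator.values())
    return {
        "score": median(scores),
        "needs_review": pstdev(scores) > disagreement_threshold,
    }

# Hypothetical annotations for two phrases.
contested = aggregate_scores({"ann_1": 0.1, "ann_2": 0.2, "ann_3": 0.9})
agreed = aggregate_scores({"ann_1": 0.1, "ann_2": 0.2, "ann_3": 0.15})
print(contested, agreed)
```

Using the median rather than the mean is a design choice here: one annotator's strong personal reaction shifts the final score less, while the disagreement flag still surfaces the item.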

Social bias

Social bias occurs when machine learning models reinforce existing social stereotypes present in the training data, such as negative racial, gender or age-dependent biases. Generative AI applications can inadvertently perpetuate biased views if their training data includes data that reflects societal prejudices. This can result in responses that reinforce harmful societal narratives. As ex-Google researcher Timnit Gebru and colleagues cautioned in their 2021 paper: “In accepting large amounts of web text as ‘representative’ of ‘all’ of humanity [LLMs] risk perpetuating dominant viewpoints, increasing power imbalances and further reifying inequality.”

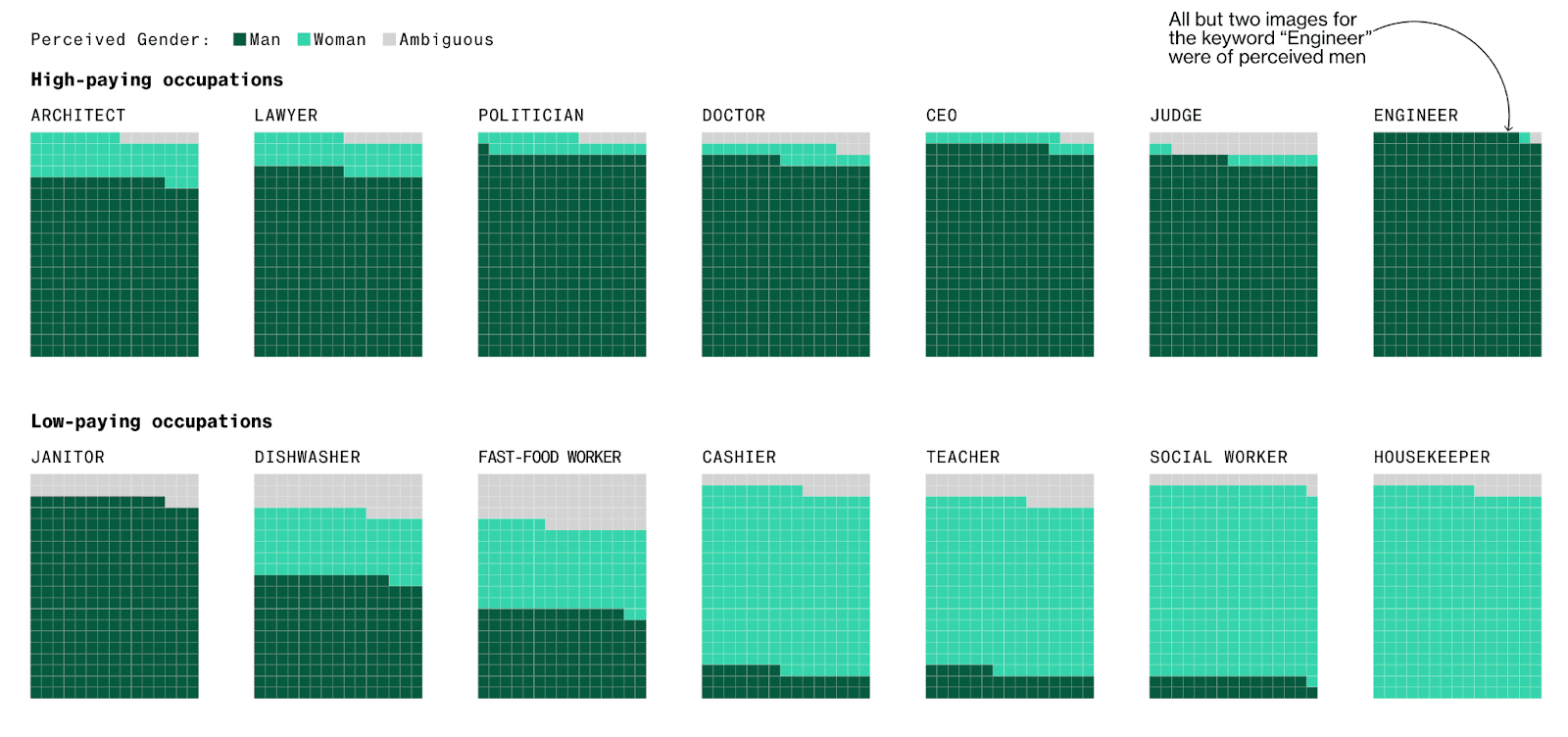

Example: Stable Diffusion and other generative AI models have been reported to exhibit socially biased behavior due to the quality of their training datasets. One study reported that Stable Diffusion tends to underrepresent women in images of high-performing occupations and overrepresent darker-skinned people in images of low-wage workers and criminals. Part of the problem here seems to be the size of the training data. Generative AI models require massive amounts of training data, and to reach this volume, selection controls are often relaxed, leading to poorer-quality (i.e. more biased) input data.

Solution: Invest in high-quality, diverse data sources as well as data bias training for your team. It may also be possible to build automated safeguarding checks that will spot social bias in model outputs.

Perhaps more than any other type of data bias, social bias shows us the importance of the quality of the data you start with. You may build the perfect generative AI model but if your training data contains implicit social biases (simply because these biases existed in the subjects who generated the data) then your final model will most likely reproduce or even amplify these biases. For this reason, it’s crucial to invest in high-quality training data that is fair and unbiased.

Strategies for reducing data bias

Recognizing and acknowledging data bias is of course just the first step. Once you have identified data bias in your project you will also want to take concrete action to mitigate it. Sometimes, identifying data bias while your project is ongoing is already too late; for this reason it’s important to consider preventive strategies as well.

To mitigate data bias in the complex landscape of AI applications, consider:

- Investing in dataset diversity and data collection quality assurances.

- Performing regular algorithmic auditing to identify and rectify bias.

- Including humans in the loop for supervision.

- Investing in model explainability and transparency.

Let’s dive into more detail for each strategy.

Diverse dataset curation

There is no way around the old adage: “garbage in, garbage out”. Because of this, the cornerstone of combating bias is curating high-quality, diverse datasets. In the case of LLMs, this involves exposing the model to a wide array of linguistic styles, contexts, and cultural nuances. For Generative AI models more generally, it means ensuring to the best of your ability that training data sets are sourced from as varied a population as possible and actively working to identify and rectify any implicit social biases. If, after this, your data still has missing values or imbalanced categories, consider using synthetic data with rebalancing and smart imputation methods.

Algorithmic auditing

Regular audits of machine learning algorithms are crucial for identifying and rectifying bias. For both LLMs and generative AI applications in general, auditing involves continuous monitoring of model outputs for potential biases and adjusting the training data and/or the model’s architecture accordingly.
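As a rough illustration of what monitoring model outputs for bias can look like, the sketch below compares how often outputs are flagged for each group in an audit log. The group labels, the log format, and the choice of "largest gap in flag rate" as the metric are assumptions made for this example; a real audit would use metrics suited to the application.

```python
from collections import defaultdict

def flag_rate_by_group(records):
    """Return the share of flagged outputs for each group."""
    flagged = defaultdict(int)
    total = defaultdict(int)
    for group, is_flagged in records:
        total[group] += 1
        flagged[group] += int(is_flagged)
    return {group: flagged[group] / total[group] for group in total}

def max_rate_gap(rates):
    """Largest difference in flag rate between any two groups."""
    return max(rates.values()) - min(rates.values())

# Hypothetical audit log: (group label, was the output flagged as toxic?)
audit_log = [
    ("group_a", True), ("group_a", False), ("group_a", False), ("group_a", False),
    ("group_b", True), ("group_b", True), ("group_b", False), ("group_b", False),
]

rates = flag_rate_by_group(audit_log)
gap = max_rate_gap(rates)
print(rates, gap)
```

A gap that grows from one audit to the next is a signal to revisit the training data or the model, as described above.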

Humans in the loop

When combating data bias it is ironically easy to fall into the trap of automation bias by letting programs do all the work and trusting them blindly to recognize bias when it occurs. This is the core of the problem with the widespread use of Google’s Perspective to avoid toxic LLM output. Because the bias detector in this case is not foolproof, its output cannot be taken at face value. This is why the builders of Perspective strongly recommend continuing to include human supervision in the loop.

Explainability and transparency

Some degree of data bias is unavoidable. For this reason, it is crucial to invest in the explainability and transparency of your LLMs and Generative AI models. For LLMs, providing explanations and sources for generated text can offer insights into the model's decision-making process. When done right, model explainability and transparency will give users more context on the generated output and allow them to understand and potentially contest biased outputs.

Synthetic data reduces data bias

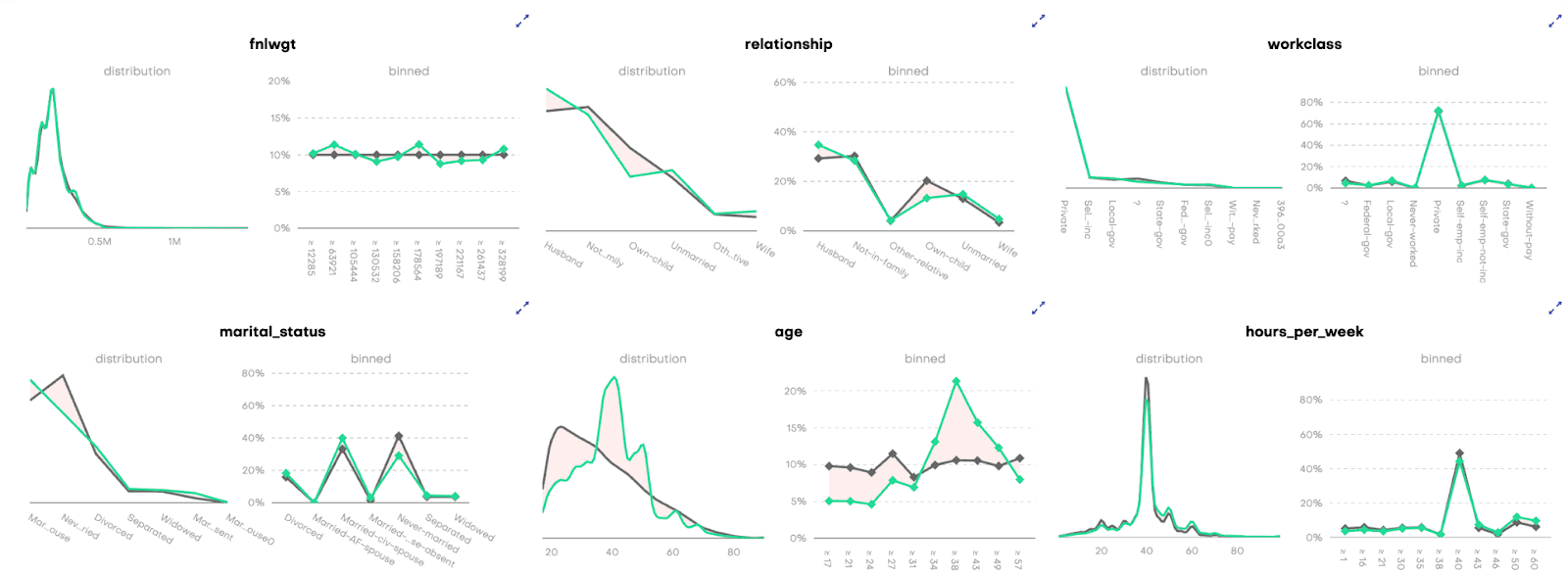

Synthetic data can help you mitigate data bias. During the data synthesis process, it is possible to introduce different kinds of constraints, such as fairness. The result is fair synthetic data in which the targeted bias is corrected. You can also use synthetic data to improve model explainability and transparency by removing privacy concerns and significantly expanding the group of users you can share the training data with.

More specifically, you can mitigate the following types of data bias using synthetic data:

Selection Bias

If you are dealing with imbalanced datasets due to selection bias, you can use synthetic data to rebalance your datasets to include more samples of the minority population. For example, you can use this feature to provide more nuanced responses for polarizing topics (e.g. book reviews, which generally tend to be overly positive or negative) to train your LLM app.
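To make the idea of rebalancing concrete, here is a minimal sketch that uses plain random oversampling, a much simpler stand-in for synthetic rebalancing: it duplicates existing minority rows instead of generating new, statistically representative ones. The function name, the `label` field, and the sample reviews are hypothetical.

```python
import random
from collections import Counter

def oversample_minority(rows, label_key, seed=0):
    """Duplicate rows of smaller classes until every class matches the largest."""
    rng = random.Random(seed)
    by_label = {}
    for row in rows:
        by_label.setdefault(row[label_key], []).append(row)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Draw the shortfall with replacement from the existing rows.
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced

# Hypothetical book reviews: polarized opinions dominate, nuanced ones are rare.
reviews = (
    [{"text": f"loved it {i}", "label": "positive"} for i in range(4)]
    + [{"text": f"hated it {i}", "label": "negative"} for i in range(4)]
    + [{"text": "good plot, weak ending", "label": "mixed"}]
)
balanced = oversample_minority(reviews, "label")
label_counts = Counter(row["label"] for row in balanced)
print(label_counts)
```

Because the duplicated rows are identical copies, this approach adds no new variety to the minority class, which is the gap that generated synthetic records are meant to fill.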

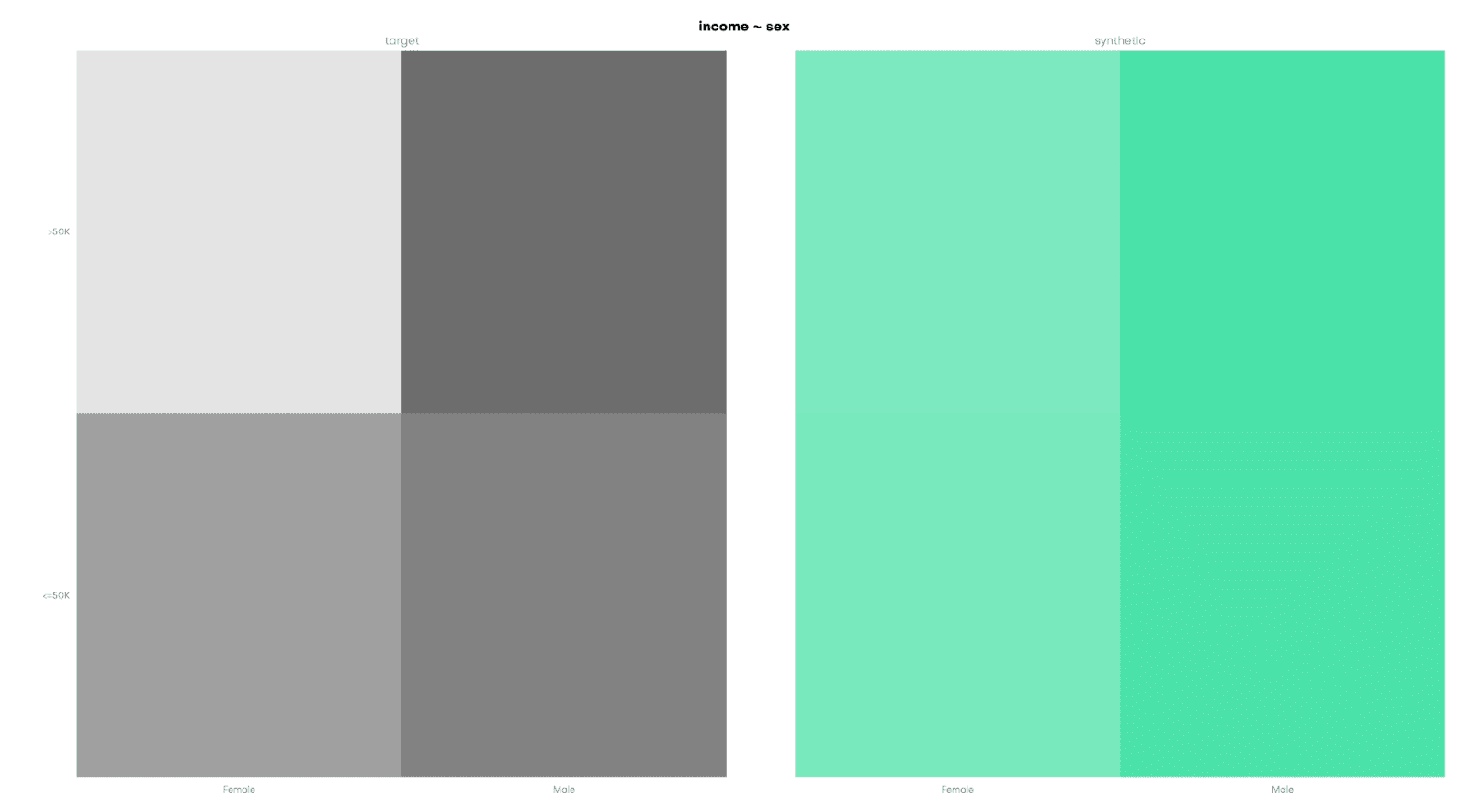

Social Bias

Conditional data generation enables you to take a gender- or racially-biased dataset and simulate what it would look like without the biases included. For example, you can simulate what the UCI Adult Income dataset would look like without a gender income gap. This can be a powerful tool in combating social biases.

Reporting or Participation Bias

If you are dealing with missing data points due to reporting or participation bias, you can use smart imputation to impute the missing values in a high-quality, statistically representative manner. This allows you to avoid data loss by allowing you to use all the records available. Using MOSTLY AI’s Smart Imputation feature it is possible to recover the original population distribution which means you can continue to use the dataset as if there were no missing values to begin with.

Mitigating data bias in LLM and generative AI applications

Data bias is a pervasive and multi-faceted problem that can have significant negative impacts if not dealt with appropriately. The real-world examples you have seen in this article show clearly that even the biggest players in the field of AI struggle to get this right. With tightening government regulations and increasing social pressure to ensure fair and responsible AI applications, the urgency to identify and rectify data bias at all points of the LLM and Generative AI lifecycle is only becoming stronger.

In this article you have learned how to recognize the different kinds of data bias that can affect your LLM or Generative AI applications. You have explored the impact of data bias through real-world examples and learned about some of the most effective strategies for mitigating data bias. You have also seen the role synthetic data can play in addressing this problem.

If you’d like to put this new knowledge to use directly, take a look at our hands-on coding tutorials on conditional data generation, rebalancing, and smart imputation. MOSTLY AI's free, state-of-the-art synthetic data generator allows you to try these advanced data augmentation techniques without the need to code.

For a more in-depth study on the importance of fairness in AI and the role that synthetic data can play, read our series on fair synthetic data.

What is time series data?

Time series data is a sequence of data points that are collected or recorded at intervals over a period of time. What makes a time series dataset unique is the sequence or order in which these data points occur. This ordering is vital to understanding any trends, patterns, or seasonal variations that may be present in the data.

In a time series, data points are often correlated and dependent on previous values in the series. For example, when a financial stock price moves every fraction of a second, its movements are based on previous positions and trends. Time series data becomes a valuable asset in predicting future values based on these past patterns, a process known as forecasting.

Time series forecasting employs specialized statistical techniques to effectively model and generate future predictions. It is commonly used in business, finance, environmental science, and many other areas for decision-making and strategic planning.

Types of time series data

Time series data can be categorized in various ways, each with its own characteristics and analytical approaches.

Metric-based time series data

When measurements are taken at regular intervals, these are known as time series metrics. Metrics are crucial for observing trends, detecting anomalies, and forecasting future values based on historical patterns.

This type of time series data is commonly seen in financial datasets, where stock prices are recorded at consistent intervals, or in environmental monitoring, where temperature, pressure, or humidity data is collected periodically.


Event-based time series

Event-based time series data captures occurrences that happen at specific points in time, but not necessarily at regular intervals. While this data can still be aggregated into snapshots over traditional periods, the event-based time series data forms a more complex series of related activities.


Examples include system logging in IT networks, where each entry records an event like a system error or a transaction. Electronic health records capture patient interactions with doctors, with medical devices capturing complex health telemetry over time. City-wide sensor networks capture the telemetry from millions of individual transport journeys, including bus, subway, and taxi routes.

Event-based data is vital to understanding the sequences and relationships between occurrences that help drive decision-making in cybersecurity, customer behavioral analysis, and many other domains.
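The aggregation of irregular events into snapshots over regular periods can be sketched as follows. This is a minimal standard-library example; the function name and the sample timestamps are made up for illustration.

```python
from collections import Counter
from datetime import datetime

def hourly_counts(timestamps):
    """Count events per hour by truncating each timestamp to the start of its hour."""
    buckets = Counter(
        ts.replace(minute=0, second=0, microsecond=0) for ts in timestamps
    )
    return dict(sorted(buckets.items()))

# Hypothetical system log events arriving at irregular times.
events = [
    datetime(2024, 1, 1, 9, 5),
    datetime(2024, 1, 1, 9, 47),
    datetime(2024, 1, 1, 11, 30),
]
counts = hourly_counts(events)
for hour, count in counts.items():
    print(hour.isoformat(), count)
```

Note that hours without any events (10:00 in this sample) simply do not appear in the result, so a regular series built this way may still contain gaps.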

Linear time series data

Time series data can also be categorized based on how the patterns within the time series behave over time. Linear time series data is more straightforward to model and forecast, with consistent behavior from one time period to the next.

Stock prices are a classic example of a linear time series. The value of a company’s shares is recorded at regular intervals, reflecting the latest market valuation. Analyzing this data over extended periods helps investors make informed decisions about buying and selling stocks based on historical performance and predicted trends.

Non-linear time series data

In contrast, non-linear time series data is often more complex, with changes that do not follow a predictable pattern. Such time series are often found in more dynamic systems when external factors force changes in behavior that may be short-lived.

For example, short-term demand modeling for public transport after an event or incident will likely follow a complex pattern that combines the time of day, geolocation information, and other factors, making reliable predictions more complicated. With IoT wearables for health, athletes are constantly monitored for early warning signals of injury or fatigue. These data points do not follow a traditional linear time series model; instead, they require a broader range of inputs to assess and predict areas of concern.

Behavioral time series data

Capturing time series data around user interactions or consumer patterns produces behavioral datasets that can provide insights into habits, preferences, or individual decisions. Behavioral time series data is becoming increasingly important to social scientists, designers, and marketers to better understand and predict human behavior in various contexts.

From measuring whether daily yoga practice can impact device screen time habits to analyzing over 285 million user events from an eCommerce website, behavioral time series data can exist as either metrics- or event-based time series datasets.

Metrics-based behavioral analytics are widespread in financial services, where customer activity over an extended period is used to assess suitability for loans or other services. Event-based behavioral analytics are often deployed as prescriptive analytics against sequences of events that represent transactions, visits, clicks, or other actions.

Organizations use behavioral analytics at scale to provide customers visiting websites, applications, or even brick-and-mortar stores with a “next best action” that will add value to their experience.

Despite the immense growth of behavioral data captured through digital transformation and investment programs, there are still major challenges to driving value from this largely untapped data asset class.

Since behavioral data typically stores thousands of data points per customer, individuals are increasingly likely to be re-identified, resulting in privacy breaches. Legacy data anonymization techniques, such as data masking, fail to provide strong enough privacy controls or remove so much from the data that it loses its utility for analytics altogether.

Examples of time series data

Let’s explore some common examples of time series data from public sources.

From the US Federal Reserve, a data platform known as Federal Reserve Economic Data (FRED) collects time series data related to populations, employment, socioeconomic indicators, and many more categories.

Some of FRED’s most popular time series datasets include:

| Category | Source | Frequency | Data Since |
|---|---|---|---|
| Population | US Bureau of Economic Analysis | Monthly | 1959 |
| Employment (Nonfarm Private Payroll) | Automatic Data Processing, Inc. | Weekly | 2010 |
| National Accounts (Federal Debt) | US Department of the Treasury | Quarterly | 1966 |
| Environmental (Jet Fuel CO2 Emissions) | US Energy Information Administration | Annually | 1973 |

Beyond socioeconomic and political indicators, time series data plays a critical role in the decision-making processes behind financial services, especially banking activities such as trading, asset management, and risk analysis.

| Category | Source | Frequency | Data Since |
|---|---|---|---|
| Interest Rates (e.g., 3-Month Treasury Bill Secondary Market Rates) | Federal Reserve | Daily | 2018 |
| Exchange Rates (e.g., USD to EUR Spot Exchange Rate) | Federal Reserve | Daily | 2018 |
| Consumer Behavior (e.g., Large Bank Consumer Credit Card Balances) | Federal Reserve Bank of Philadelphia | Quarterly | 2012 |
| Markets Data (e.g., commodities, futures, equities, etc.) | Bloomberg, Reuters, Refinitiv, and many others | Real-Time | N/A |

The website kaggle.com provides an extensive repository of publicly available datasets, many recorded as time series.

| Category | Source | Frequency | Data Range |
|---|---|---|---|
| Environmental (Jena Climate Dataset) | Max Planck Institute for Biogeochemistry | Every 10 minutes | 2009-2016 |
| Transportation (NYC Yellow Taxi Trip Data) | NYC Taxi & Limousine Commission (TLC) | Monthly updates, with individual trip records | 2009- |
| Public Health (COVID-19) | World Health Organization | Daily | 2020- |

An emerging category of time series data relates to the growing use of Internet of Things (IoT) devices that capture and transmit information for storage and processing. IoT devices, such as smart energy meters, have become extremely popular in both industrial applications (e.g., manufacturing sensors) and commercial use.

| Category | Source | Frequency | Data Range |
|---|---|---|---|
| IoT Consumer Energy (Smart Meter Telemetry) | Jaganadh Gopinadhan (Kaggle) | Minute | 12-month period |
| IoT Temperature Measurements | Atul Anand (Kaggle) | Second | 12-month period |

How to store time series data

Once time series data has been captured, there are several popular options for storing, processing, and querying these datasets using standard components in a modern data stack or via more specialist technologies.

File formats for time series data

Storing time series data in file formats like CSV, JSON, and XML is common due to their simplicity and broad compatibility. CSV files in particular are ideal for smaller datasets, where ease of use and portability are critical.

Formats such as Parquet have become increasingly popular for storing large-scale time series datasets, offering efficient compression and high performance for analysis. However, Parquet can be more complex and resource-intensive than simpler file formats, and managing large numbers of Parquet files, especially in a rapidly changing time series context, can become challenging.

When more complex data structures are involved, JSON and XML formats provide a structured way to store time series data, complete with associated metadata, especially when using APIs to transfer information between systems. JSON and XML typically require additional processing to “flatten” the data for analysis and are not ideal for large datasets.

For most time series stored in files, it’s recommended to use the more straightforward CSV format where possible, switching to Parquet when data volumes affect storage efficiency and read/write speeds, typically at the gigabyte or terabyte scale. Likewise, a synthetically generated time series can be easily exported to tabular CSV or Parquet format for downstream analysis in various tools.
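A small sketch of the CSV option, using only Python's standard library: timestamps are written in ISO 8601 form so they can be parsed back unambiguously. The column names and readings are invented for this example, and an in-memory buffer stands in for a file on disk.

```python
import csv
import io
from datetime import datetime

# Hypothetical sensor readings taken every 10 minutes.
readings = [
    (datetime(2024, 1, 1, 0, 0), 21.5),
    (datetime(2024, 1, 1, 0, 10), 21.7),
]

buffer = io.StringIO(newline="")  # stands in for open("readings.csv", "w", newline="")
writer = csv.writer(buffer)
writer.writerow(["timestamp", "temperature_c"])
for ts, value in readings:
    writer.writerow([ts.isoformat(), value])

# Read the data back, restoring the original types.
buffer.seek(0)
loaded = [
    (datetime.fromisoformat(row["timestamp"]), float(row["temperature_c"]))
    for row in csv.DictReader(buffer)
]
print(loaded == readings)
```

CSV stores everything as text, so types must be restored on every read. Columnar formats such as Parquet keep the schema with the data, which is one reason they scale better.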

Time series databases

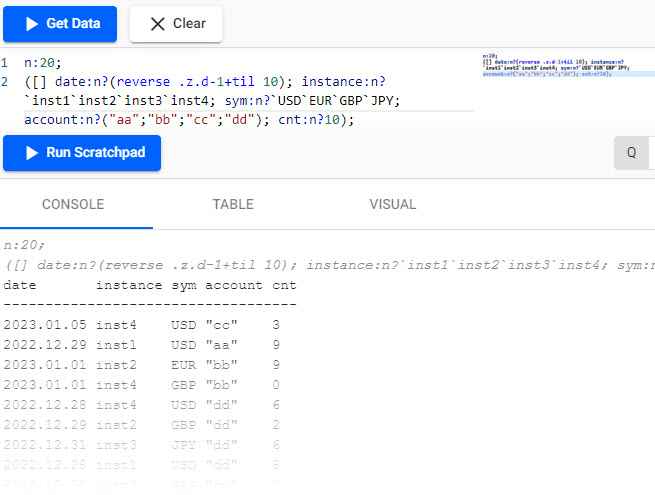

Dedicated time series databases, such as kdb+ from Kx Systems, are specifically designed to manage and analyze sequences of data points indexed over time. These databases are optimized for handling large volumes of data that are constantly changing or being updated, making them ideal for applications such as high-frequency trading in financial markets, IoT sensor data, or real-time monitoring.

Source: Kx query

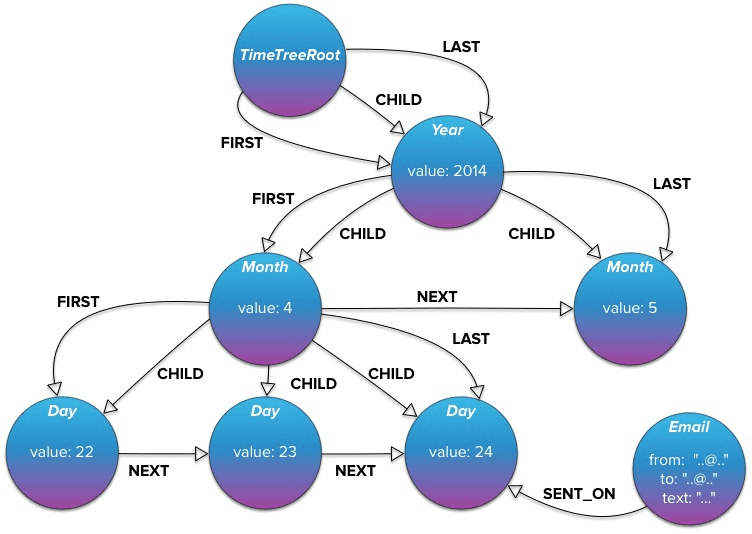

Graph databases for time series

Graph databases like Neo4j offer a unique approach to storing time series data by representing it as a network of interconnected nodes and relationships. Graph databases allow for the modeling of complex relationships, providing insights that might be difficult to extract from traditional relational data models.

The ability to explore relationships efficiently in graph databases makes them suitable for analyses that require a deep understanding of interactions over time, adding a rich layer of context to the time series data.

In the example below, Neo4j can create a “TimeTree” graph data model that captures events used in risk and compliance analysis. Exploring emails sent at different times to different parties and any associated events from that period becomes possible.

Source: Neo4j time-tree

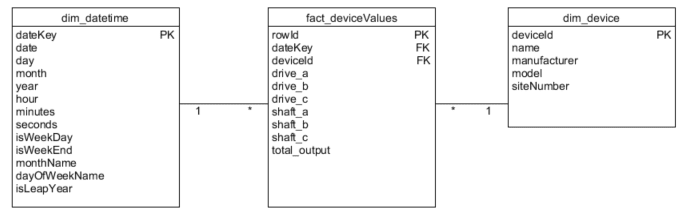

Relational databases for time series

For decades, traditional relational database management systems (RDBMS) like Snowflake, Postgres, or Redshift have been used to store, process, and analyze time series data. One of the most popular relational data models for time series analysis is known as the star schema, where a central fact table (containing the time series data such as events, transactions, or behaviors) is connected to several dimension tables (e.g., customer, store, product, etc.) that provide rich analytical context.

By capturing events at a granular level, the time series data can be sliced and diced in many different ways, giving analysts a great deal of flexibility to answer questions and explore business performance. Usually, a date dimension table contains all the relevant context for a time series analysis, with attributes such as day of the week, month, and quarter, as well as valuable references to prior periods for comparison.
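To illustrate how a date dimension adds context to a fact table, here is a minimal in-memory sketch. In a real warehouse both would be database tables joined in SQL; the attribute names and sales figures below are assumptions for the example.

```python
from datetime import date, timedelta

def build_date_dimension(start, end):
    """One row per calendar day, keyed by date, with common analytical attributes."""
    rows = {}
    current = start
    while current <= end:
        rows[current] = {
            "day_of_week": current.isoweekday(),  # 1 = Monday ... 7 = Sunday
            "month": current.month,
            "quarter": (current.month - 1) // 3 + 1,
            "same_day_prior_week": current - timedelta(days=7),
        }
        current += timedelta(days=1)
    return rows

date_dim = build_date_dimension(date(2024, 1, 1), date(2024, 12, 31))

# Hypothetical fact table: one row per sales event.
sales_facts = [
    {"date": date(2024, 4, 1), "amount": 120.0},
    {"date": date(2024, 4, 2), "amount": 80.0},
    {"date": date(2024, 7, 15), "amount": 50.0},
]

# "Join" each fact to the date dimension and aggregate by quarter.
sales_by_quarter = {}
for fact in sales_facts:
    quarter = date_dim[fact["date"]]["quarter"]
    sales_by_quarter[quarter] = sales_by_quarter.get(quarter, 0.0) + fact["amount"]
print(sales_by_quarter)
```

Because the calendar logic lives in the dimension, the fact rows stay narrow, and the same events can be regrouped by weekday, month, or quarter without changing them.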

In a well-designed star schema model, the number of dimensions associated with a transactional fact table generally ranges between six and 15. These dimensions, which provide the contextual details necessary to understand and analyze the facts, depend on the specific analysis needs and the complexity of the business domain. MOSTLY AI can generate highly realistic synthetic data that fully retains the correlations from the original dimensions and fact tables across star schema data models with three or more entities.

Source: Stack Exchange

How to analyze time series data

Before analyzing a time series model, there are several essential terms and concepts to review.

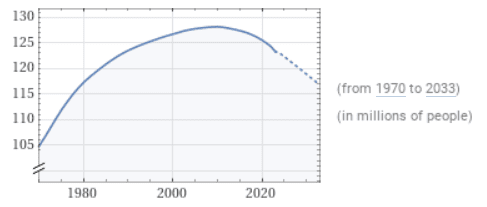

Trend

A trend is a long-term increase or decrease in the values of a time series. Trends do not have to be linear and may reverse direction over time.

Source: WolframAlpha

Seasonality

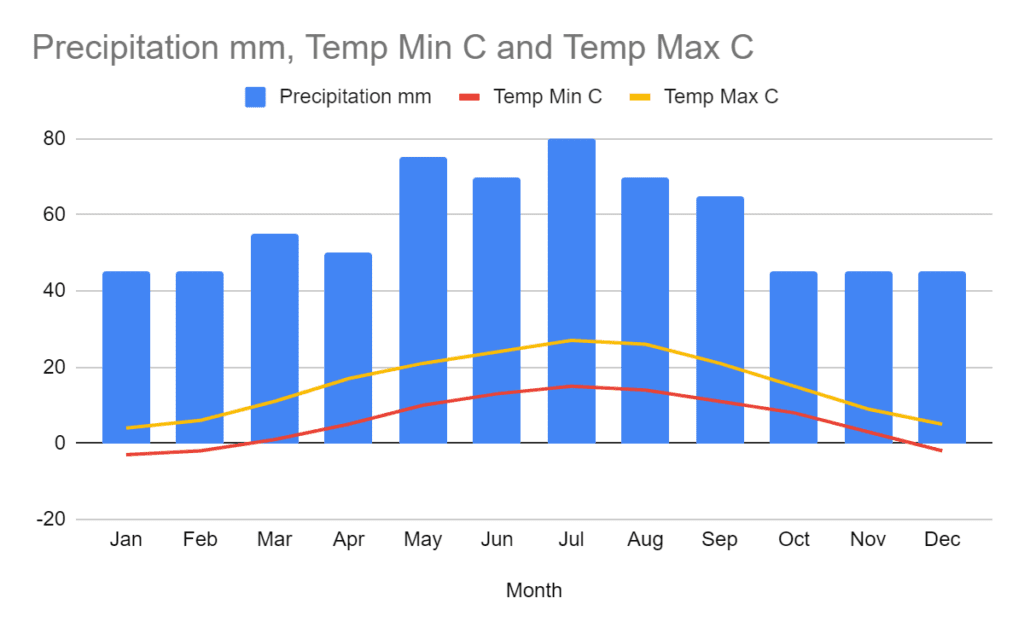

Seasonality is a pattern that occurs in a time series dataset at a fixed interval, such as the time of year or day of the week. Most commonly associated with physical properties such as temperature or rainfall, seasonality is also applied to consumer behavior driven by public holidays or promotional events.

Data retention over extended periods allows analysts to observe long-term patterns and variations. This historical perspective is essential for distinguishing between one-time anomalies and consistent seasonal fluctuations, providing valuable insights for forecasting and strategic planning.

Adapted from: Climates to Travel

Cyclicity

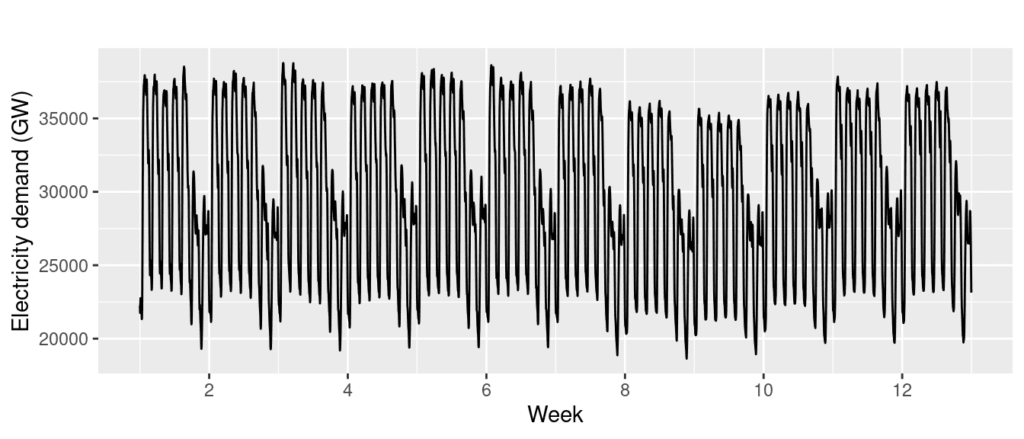

A cyclic pattern occurs when observations rise and fall at non-fixed frequencies. Cycles often last for multiple years, and their duration usually cannot be determined in advance.

Source: Rob J. Hyndman

Random noise

The final component of a time series is random noise: the variation that remains once any trends, seasonality, or cyclic signals have been accounted for. Any time series that contains too much random noise will be challenging to forecast or analyze.

Preparing data for time series analysis: Fill the gaps

Once a time series dataset has been collected, it is vital to ensure that no dates are missing from the sequence. Review the granularity of the dataset and impute any missing elements to ensure a smooth sequence. The imputation approach will vary depending on the dataset, but a common approach is to fill each gap with the average of the nearest data points.
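The nearest-neighbor averaging described here can be sketched for a daily series as follows. The function name and sample values are illustrative, and the sketch assumes daily granularity with gaps only between known observations.

```python
from datetime import date, timedelta

def fill_daily_gaps(series):
    """Fill missing days with the mean of the nearest known values on either side.

    `series` maps dates to values. Days before the first or after the last
    observation are not extrapolated.
    """
    days = sorted(series)
    filled = dict(series)
    for prev_day, next_day in zip(days, days[1:]):
        gap_value = (series[prev_day] + series[next_day]) / 2
        missing = prev_day + timedelta(days=1)
        while missing < next_day:
            filled[missing] = gap_value
            missing += timedelta(days=1)
    return dict(sorted(filled.items()))

# Hypothetical daily measurements with January 3 and 4 missing.
observed = {
    date(2024, 1, 1): 10.0,
    date(2024, 1, 2): 12.0,
    date(2024, 1, 5): 18.0,
}
complete = fill_daily_gaps(observed)
for day, value in complete.items():
    print(day, value)
```

Every day inside a gap receives the same value here. Linear interpolation, which moves gradually from one neighbor to the other, is a common alternative for longer gaps.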

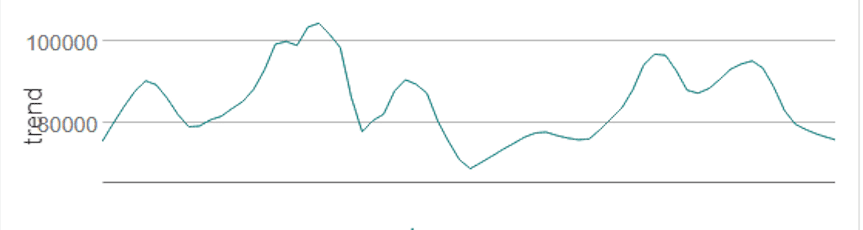

Exploring the signals within a time series with decomposition plots

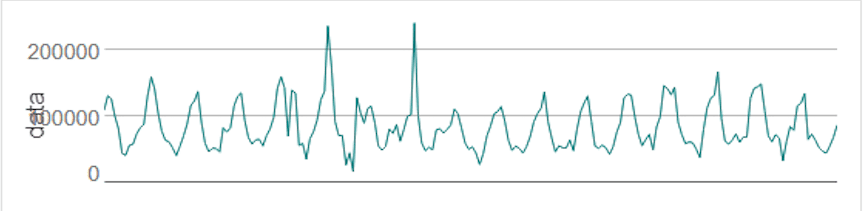

The next step in time series analysis is to explore different univariate plots of the data to determine how to develop a forecasting model.

A time series plot can help assess whether the original time series data needs to be transformed or whether any outliers are present.

Source: Alteryx

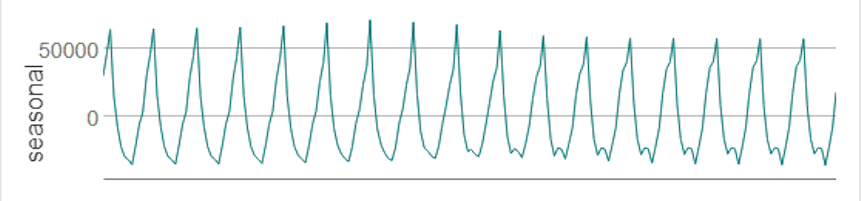

A seasonal plot helps analysts explore whether seasonality exists within the dataset, its frequency, and cyclic behaviors.

Source: Alteryx

A trend analysis can explore the magnitude of the change that is identified during the time series and is used in conjunction with the seasonality chart to explore areas of interest in the data.

Source: Alteryx

Finally, a residual analysis shows any information remaining once seasonality and trend have been taken into account.

Source: Alteryx

Time series decomposition plots of this type are available in most data science environments, including R and Python.
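To show what such a decomposition computes, here is a minimal sketch of a classical additive decomposition (value = trend + seasonal + residual) in plain Python. It assumes an odd seasonal period so that a simple centered moving average can be used; the function name and the sample series are made up, and library implementations handle more cases.

```python
def decompose_additive(values, period):
    """Classical additive decomposition for an odd seasonal period."""
    half = period // 2
    n = len(values)
    # Trend: centered moving average, undefined (None) near the edges.
    trend = [None] * n
    for i in range(half, n - half):
        trend[i] = sum(values[i - half:i + half + 1]) / period
    # Seasonal: mean detrended value per position in the cycle, centered on zero.
    detrended = [[] for _ in range(period)]
    for i in range(n):
        if trend[i] is not None:
            detrended[i % period].append(values[i] - trend[i])
    means = [sum(d) / len(d) for d in detrended]
    offset = sum(means) / period
    seasonal = [means[i % period] - offset for i in range(n)]
    # Residual: what trend and seasonality leave unexplained.
    residual = [
        None if trend[i] is None else values[i] - trend[i] - seasonal[i]
        for i in range(n)
    ]
    return trend, seasonal, residual

# Hypothetical daily series: a steady upward trend plus a weekly pattern.
weekly_pattern = [3, -1, -2, 0, 1, -3, 2]
series = [t + weekly_pattern[t % 7] for t in range(28)]
trend, seasonal, residual = decompose_additive(series, period=7)
print(trend[3:6], seasonal[:7])
```

Since the sample series is built from exactly a linear trend and a repeating pattern, the residual is zero wherever the trend is defined. Real data would leave noise in that component.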

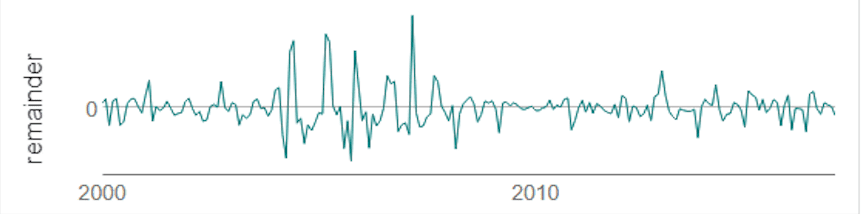

Exploring relationships between points in a time series: Autocorrelation

As explored previously, time series records have strong relationships with previous points in the data. The strength of these relationships can be measured through a statistical tool called autocorrelation.

An autocorrelation function (ACF) measures how much current data points in a time series are correlated to previous ones over different periods. It’s a method to understand how past values in the series influence current values.
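As a minimal sketch, the sample autocorrelation at a given lag can be computed like this. It uses the common estimator that divides the lagged covariance by the overall variance; the function name and test series are illustrative.

```python
def autocorrelation(values, lag):
    """Sample autocorrelation of `values` at the given non-negative lag."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values)
    covariance = sum(
        (values[t] - mean) * (values[t - lag] - mean) for t in range(lag, n)
    )
    return covariance / variance

# A series that flips sign at every step: strongly negative correlation
# at lag 1, strongly positive at lag 2.
alternating = [1, -1] * 10
acf_values = [autocorrelation(alternating, lag) for lag in range(3)]
print(acf_values)
```

Plotting these values for increasing lags gives the familiar ACF chart, where spikes at regular lags hint at seasonality.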

Source: Alteryx

When generating synthetic data, it’s important to preserve these underlying patterns and correlations. Accurate synthetic datasets can mimic these patterns, successfully retaining both the statistical properties as well as the time-lagged behavior of the original time series.

Building predictive time series forecasts: ARIMA

Once the exploration of a time series is complete, analysts can use their findings to build predictive models against the dataset to forecast future values.

ARIMA, AutoRegressive Integrated Moving Average, is a popular statistical method effective for time series data showing patterns or trends over time. It combines three key components:

- Autoregression (AR): Using the relationship between a current observation and several lagged observations.

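- Integration (I): Differencing the series, that is, replacing each observation with its change from the previous one, to remove trends and render the data “stationary.” Removing seasonality requires an additional seasonal differencing step.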

- Moving Average (MA): Calculates the relationship between a current observation and the errors from previous predictions, helping to smooth out random noise in the data.
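The first two components can be sketched in a few lines of plain Python. The example below differences a series once and fits a one-lag autoregression to the result by least squares, which corresponds to an ARIMA(1,1,0) model. It deliberately leaves out the moving average part, and the function names and sample data are assumptions for illustration; a forecasting library would estimate all three components together.

```python
def difference(values):
    """The 'I' step: replace each value with its change from the previous one."""
    return [b - a for a, b in zip(values, values[1:])]

def fit_ar1(values):
    """Least-squares estimate of c and phi in x[t] = c + phi * x[t-1]."""
    x, y = values[:-1], values[1:]
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    phi = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / sum(
        (a - mean_x) ** 2 for a in x
    )
    return mean_y - phi * mean_x, phi

def forecast_next(values):
    """One-step forecast: predict the next change, then add it to the last value."""
    diffs = difference(values)
    c, phi = fit_ar1(diffs)
    return values[-1] + c + phi * diffs[-1]

# Hypothetical series whose growth slows over time.
series = [10, 14, 17, 19.5, 21.75, 23.875]
c, phi = fit_ar1(difference(series))
next_value = forecast_next(series)
print(c, phi, next_value)
```

Adding the predicted change back onto the last observed value undoes the differencing, which is why the forecast is on the scale of the original series.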

Building predictive time series forecasts: ETS

An alternative approach is to use a method known as Error, Trend, Seasonality (ETS) that focuses on decomposing a time series into its error, trend, and seasonal components to predict future values:

- Error: As seen in the decomposition plot above, the error component captures randomness or noise in the data.

- Trend: Captures the long-term progression in the time series.

- Seasonality: Captures any systematic or calendar-related patterns.
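To make the level and trend components concrete, here is a minimal sketch of Holt's linear exponential smoothing, a member of the ETS family with additive trend. The seasonal component is left out to keep the example short, and the function name, the smoothing parameters `alpha` and `beta`, and the initialization from the first two points are assumptions chosen for illustration.

```python
def holt_forecast(series, alpha=0.5, beta=0.5, horizon=1):
    """Forecast `horizon` steps ahead using smoothed level and trend."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    # Simple initialization: first value as level, first difference as trend
    level = series[0]
    trend = series[1] - series[0]
    for y in series[1:]:
        previous_level = level
        # Blend the new observation with the previous one-step-ahead estimate
        level = alpha * y + (1 - alpha) * (previous_level + trend)
        # Blend the latest change in level with the previous trend
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return level + horizon * trend


# Hypothetical, perfectly linear series
forecast = holt_forecast([2, 4, 6, 8, 10])
```

On a perfectly linear series the smoothed trend stays equal to the true slope, so the one-step forecast simply continues the line; the difference between observed values and such forecasts on real data is what the error component captures.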

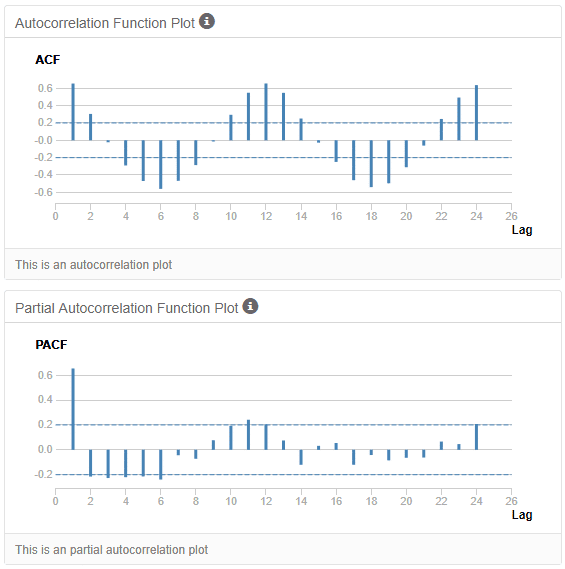

Reviewing forecasts: Visualization and statistics

Once one or more models have been created, they can be visualized alongside historical data to inspect how closely each forecast follows the pattern of the existing time series data.

Source: phData

A quantitative approach to evaluating time series forecasting models often employs either the AIC (Akaike Information Criterion) or AICc (Corrected Akaike Information Criterion), defined as follows:

- AIC: Balances a model’s fit to the data against the complexity of the model, with a lower AIC score indicating a better model. AIC is often used when the sample size is significantly larger than the number of model parameters.

- AICc: This approach prevents overfitting in time series forecasting models with a smaller sample size by adding a correction term to the AIC calculation.
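Both criteria can be computed from a model's maximized log-likelihood, its number of estimated parameters `k`, and the sample size `n`. The sketch below uses the standard definitions, AIC = 2k - 2 ln(L) and AICc = AIC + (2k² + 2k) / (n - k - 1); the function names and the example numbers are hypothetical.

```python
def aic(log_likelihood, k):
    """Akaike Information Criterion: lower values indicate a better trade-off."""
    return 2 * k - 2 * log_likelihood


def aicc(log_likelihood, k, n):
    """AIC with a small-sample correction that grows as n approaches k."""
    if n - k - 1 <= 0:
        raise ValueError("sample size must exceed the number of parameters plus one")
    return aic(log_likelihood, k) + (2 * k * k + 2 * k) / (n - k - 1)


# Hypothetical model: log-likelihood of -100 with 3 parameters on 20 observations
score_aic = aic(-100, 3)
score_aicc = aicc(-100, 3, 20)
```

The correction term shrinks toward zero as the sample size grows, which is why the two criteria agree on large datasets and diverge on small ones.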

How to anonymize a time series with synthetic data

Anonymizing time series data is notoriously difficult, and legacy anonymization approaches fail at this challenge.

But there is an effective alternative: synthetic data, which offers a solution to these privacy concerns.

Synthesizing time series data makes a lot of sense when dealing with behavioral data. Understanding the key concepts of data subjects is a crucial step in learning how to generate synthetic data in a privacy-preserving manner.

A subject is an entity or individual whose privacy you will protect. Behavioral event data must be prepared in advance so that the subjects in the dataset (e.g., customers, website visitors, hospital patients, etc.) are stored in a dedicated table, one row per subject, each with a unique identifier. These subjects can have additional reference information stored in separate columns, ideally attributes that don’t change during the captured events.

For data practitioners, the concept of the subject table is similar to a “dimension” table in a data warehouse, where common attributes related to the subjects are provided for context and further analysis.

The behavioral event data is prepared and stored in a separate linked table referencing a unique subject. In this way, one subject will have zero, one, or (likely) many events captured in this linked table.

Records in the linked table must be pre-sorted in chronological order for each subject to capture the time-sensitive nature of the original data. This model suits various types of event-based data, including insurance claims, patient health, eCommerce, and financial transactions.

In the example of a customer journey, our tables may look like this.

We see customers stored in our subject table with their associated demographic attributes.

| ID | ZONE | STATE | GENDER | AGE_CAT | AGE |
|---|---|---|---|---|---|
| 1 | Pacific | Oregon | M | Young | 26 |
| 2 | Eastern | New Jersey | M | Medium | 36 |
| 3 | Central | Minnesota | M | Young | 17 |
| 4 | Eastern | Michigan | M | Medium | 56 |
| 5 | Eastern | New Jersey | M | Medium | 46 |
| 6 | Mountain | New Mexico | M | Medium | 35 |

In the corresponding linked table, we have captured events relating to the purchasing behavior of each of our subjects.

| USER_ID | DATE | NUM_CDS | AMT |
|---|---|---|---|
| 1 | 1997-01-01 | 1 | 11.77 |
| 2 | 1997-01-12 | 1 | 12 |
| 2 | 1997-01-12 | 5 | 77 |

In this example, user 1 visited the website on January 1st, 1997, purchasing 1 CD for $11.77. User 2 visited the website twice on January 12th, 1997, purchasing six CDs over these two visits for a total of $89.
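The subject and linked tables can be sketched as plain Python structures, using a few rows from the tables above. The helper name `events_by_subject` is hypothetical; it groups the linked records per subject and sorts them chronologically, which is the preparation step described earlier.

```python
from datetime import date

# Subject table: one row per customer, keyed by a unique ID
subjects = [
    {"ID": 1, "ZONE": "Pacific", "STATE": "Oregon", "AGE": 26},
    {"ID": 2, "ZONE": "Eastern", "STATE": "New Jersey", "AGE": 36},
    {"ID": 3, "ZONE": "Central", "STATE": "Minnesota", "AGE": 17},
]

# Linked table: purchase events referencing a subject via USER_ID
# (deliberately listed out of order to show the sorting step)
events = [
    {"USER_ID": 2, "DATE": date(1997, 1, 12), "NUM_CDS": 1, "AMT": 12.0},
    {"USER_ID": 2, "DATE": date(1997, 1, 12), "NUM_CDS": 5, "AMT": 77.0},
    {"USER_ID": 1, "DATE": date(1997, 1, 1), "NUM_CDS": 1, "AMT": 11.77},
]


def events_by_subject(subjects, events):
    """Group events per subject, sorted chronologically within each subject."""
    grouped = {s["ID"]: [] for s in subjects}
    # sorted() is stable, so events on the same date keep their input order
    for event in sorted(events, key=lambda e: (e["USER_ID"], e["DATE"])):
        grouped[event["USER_ID"]].append(event)
    return grouped


grouped = events_by_subject(subjects, events)
user2_cds = sum(e["NUM_CDS"] for e in grouped[2])
user2_amount = sum(e["AMT"] for e in grouped[2])
```

Subject 3 ends up with an empty list, illustrating that a subject may have zero events in the linked table.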

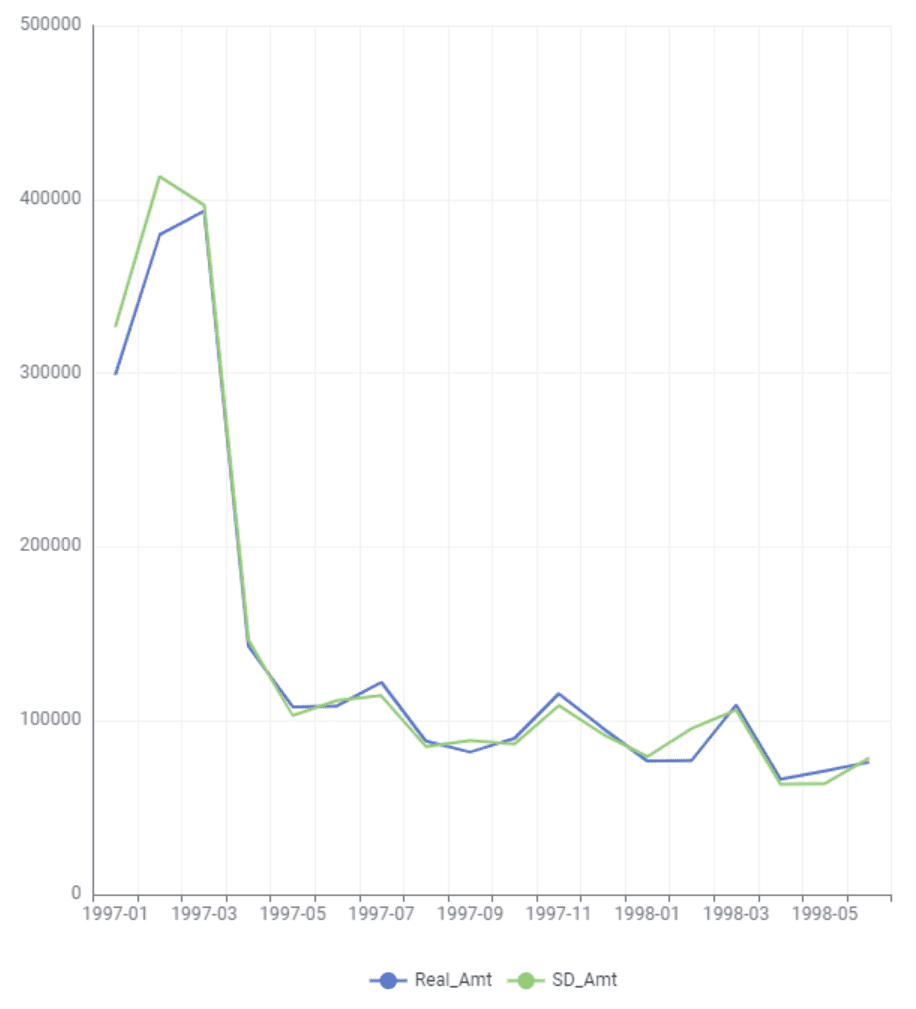

These consumer buying behaviors can be aggregated into standard metrics-based time series, such as purchases per week, month, or quarter, revealing general buying trends over time. Alternatively, the behavioral data in the linked table can be treated as discrete purchasing events happening at specific intervals in time.
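As a rough illustration of the first option, the purchase events from the example can be rolled up into a monthly metric. The function name `monthly_totals` is hypothetical, and dates are kept as ISO strings so that the month can be taken from the first seven characters.

```python
from collections import defaultdict

# (date, number of CDs, amount) tuples taken from the linked table above
purchases = [
    ("1997-01-01", 1, 11.77),
    ("1997-01-12", 1, 12.0),
    ("1997-01-12", 5, 77.0),
]


def monthly_totals(purchases):
    """Aggregate discrete purchase events into per-month counts and revenue."""
    totals = defaultdict(lambda: {"cds": 0, "amount": 0.0})
    for day, num_cds, amount in purchases:
        month = day[:7]  # "YYYY-MM" prefix of an ISO date
        totals[month]["cds"] += num_cds
        totals[month]["amount"] += amount
    return dict(totals)


totals = monthly_totals(purchases)
```

The same pattern extends to weekly or quarterly buckets by changing how the bucket key is derived from the date.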

Customer-centric organizations obsess over the behaviors that drive revenue and retention, looking beyond simple statistics. Analysts constantly ask questions about customer return rates, spending habits, and overall customer lifetime value.

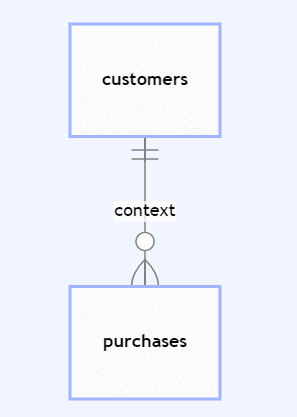

Synthetic data modeling: Relationships between subjects and linked data

Defining the relationship between customers and their purchases is an essential first step in synthetic data modeling. Ensuring that primary and foreign keys are identified between subject and linked tables enables synthetic data generation platforms to understand the context of each behavioral record (e.g., purchases) in terms of the subject (e.g., customers).

Additional configurations, such as smart imputation, dataset rebalancing, or rare category protection, can be defined at this stage.
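Before modeling, it can help to check that the primary and foreign keys actually line up. The following is a generic sketch, not the validation performed by any particular synthetic data platform; the function name `find_orphan_events` and the sample rows (including the unknown `USER_ID` 99) are hypothetical.

```python
def find_orphan_events(subject_ids, events, foreign_key="USER_ID"):
    """Return events whose foreign key matches no subject in the subject table."""
    known = set(subject_ids)
    if len(known) != len(subject_ids):
        raise ValueError("primary key values must be unique")
    return [event for event in events if event[foreign_key] not in known]


subject_ids = [1, 2, 3]
events = [
    {"USER_ID": 1, "AMT": 11.77},
    {"USER_ID": 2, "AMT": 12.0},
    {"USER_ID": 99, "AMT": 5.0},  # hypothetical record with no matching subject
]
orphans = find_orphan_events(subject_ids, events)
```

An empty result means every behavioral record can be placed in the context of a known subject.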

Synthetic data modeling: Sequence lengths and continuation

A time series sequence refers to a captured set of data over time for a subject within the dataset. For synthetic data models, generating the next element in a sequence given a previous set of features is a critical capability known as sequence continuation.

Defining sequence lengths in synthetic data models involves specifying the number of time steps or data points to be considered in each sequence within the dataset. This decision determines how much historical data the synthetic model will use to predict or generate the next element in the sequence.

The choice of sequence length depends significantly on the nature of the data and the specific application. Longer sequence lengths can capture more long-term patterns and dependencies but also require more computational resources and may be less responsive to recent changes. Conversely, a shorter sequence length is more sensitive to recent trends but might overlook longer-term patterns.

In synthetic modeling, selecting a sequence length that strikes a balance between capturing sufficient historical or behavioral context and maintaining computational efficiency and performance is essential.
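The effect of the sequence length can be sketched with a simple windowing function that turns one subject's ordered events into (context, next element) pairs. This is a generic illustration of sequence continuation, not a description of how any specific synthetic data generator is implemented; the function name `continuation_pairs` and the example sequence are hypothetical.

```python
def continuation_pairs(sequence, sequence_length):
    """Pair each element with at most `sequence_length` preceding elements."""
    if sequence_length < 1:
        raise ValueError("sequence_length must be at least 1")
    pairs = []
    for i in range(1, len(sequence)):
        # Keep only the most recent `sequence_length` elements as context
        context = sequence[max(0, i - sequence_length):i]
        pairs.append((context, sequence[i]))
    return pairs


# Hypothetical ordered events for a single subject
events = [1, 2, 3, 4, 5]
short_context = continuation_pairs(events, sequence_length=2)
long_context = continuation_pairs(events, sequence_length=4)
```

With a length of 2 the final pair sees only the last two events, while a length of 4 exposes the full history at the cost of larger inputs, which mirrors the trade-off described above.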

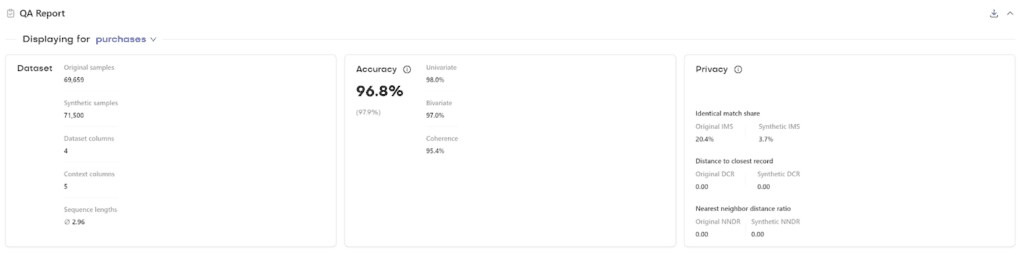

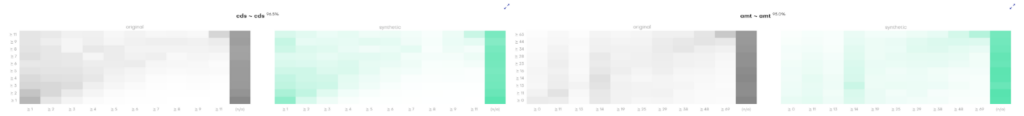

Synthetic data generation: Accurate and privacy-safe results

Synthetic data generation can produce realistic and representative behavioral time series data that mimics the original distribution found in the source data without the possibility of re-identification. With privacy-safe behavioral data, it’s possible to democratize access to datasets such as these, developing more sophisticated behavioral models and deeper insights beyond basic metrics, “average” customers, and crude segmentation methods.

Synthetic data is quickly becoming a critical tool for organizations to unlock the value of sensitive customer data while keeping the privacy of their customers protected and in compliance with data protection regulations such as GDPR and CCPA. It can be generated quickly in abundance and has been proven to drastically improve machine learning performance. As a result, it is often used for advanced analytics and AI training, such as predictive algorithms, fraud detection and pricing models.

According to Gartner, by 2024, 60% of the data used for the development of AI and analytics projects will be synthetically generated.

MOSTLY AI pioneered the creation of synthetic data for AI model development and software testing. With things moving so quickly in this space, here are three trends that we see happening in AI and synthetic data in 2022:

1. Bias in AI will get worse before it gets better.

Most of the machine learning and AI algorithms currently in production, interacting with customers and making decisions about people, have never been audited for fairness and discrimination, and their training data has never been augmented to fix embedded biases. It is only through massive scandals that companies are learning the hard way that they need to pay more attention to biased data and use fair synthetic data instead.

2. Companies’ data assets will freeze up due to regulations and declining customer consent.

Regulations all over the world are getting stricter every day; many countries have a personal data protection policy in place by now. Using customer data is getting increasingly difficult for a number of other reasons too: people are more privacy-conscious and are increasingly likely to refuse consent to using their data for analytics purposes. As a result, companies are running out of relevant and usable data assets. Companies will come to understand that synthetic data is the way out of this dilemma.

3. Synthetic data will be standardized with globally recognized benchmarks for privacy and accuracy.

Not all synthetic data is created equal. To start off with, there is a world of difference between what we call structured and unstructured synthetic data. Unstructured data means images and text for example, while structured data is mainly tabular in nature. There are lots of open source and proprietary synthetic data providers out there for both kinds of synthetic data and the quality of their generators varies widely. It’s high time to establish a synthetic data standard to make sure that synthetic data users get consistently high-quality synthetic data. We are already working on structured synthetic data standards.


2021 has passed in the blink of an eye, yet MOSTLY AI can be proud as this was a revolutionary year of many extraordinary achievements. While we are already excited for what 2022 holds for us, we are taking a step back to look at the highlights and major milestones we have accomplished in 2021.

Synthetic data revolution

Our developers had a busy start to the year with the new upgrade of our category-leading synthetic data generator, MOSTLY AI 1.5. Alongside many shiny new features, the big buzz was about our synthetic data generator now supporting the synthesis of geolocation data with latitude and longitude encoding types. Say goodbye to harmful digital footprints and hello to privacy-safe synthetic geodata!

This was not enough for our very ambitious team; so in the second half of the year, they pushed the boundaries even further by truly revolutionizing software testing. With this new version of our platform, MOSTLY AI 2.0 became the first synthetic data platform that can automatically synthesize complex data structures, making it ideal for software testing. By expanding the capabilities to multi-table data structures, MOSTLY AI now enables anyone – not just data scientists – to create synthetic data from databases, automatically. This improves security and compliance and accelerates time to data. Our team truly deserves a toast for this!

The Data Democratization Podcast

We’ll soon be celebrating the first birthday of “The Data Democratization Podcast”, which we started back in January 2021. With over 2000 downloads in 2021, the podcast was an absolute hit! Our listeners had the opportunity to gain many insights from knowledgeable AI and privacy experts working in top-notch companies who shared their experiences, advice, and real-life case studies. We are entering the new year with even more enthusiasm and are preparing some special surprise guests for you. Stay tuned!

Synthetic data training for superusers

In 2021 we also launched our professional services and training program intended to help create the next generation of synthetic data superusers within enterprises. Several clients have already leveraged this first-of-its-kind program to kickstart their synthetic data journeys, with very positive results. As synthetic data pioneers, we have the most experienced team in the world. Our top engineers, architects, consultants, and data scientists have seen it all. They know what makes or breaks a company's synthetic data adoption, no matter the use case. From scaling ethical and explainable AI to providing on-demand, privacy-safe test data, the know-how is here.

Synthetic data talks

Despite COVID-19, we managed to attend multiple conferences. While most of them happened virtually, we participated in Slush 2021 in person! Our Co-Founder & Chief Strategy Officer Michael Platzer rocked the stage presenting at this year's event in Helsinki, Finland. We are proud to have been invited to present our synthetic data solution to the world and, while staying safe, connect and exchange ideas with some of the most brilliant minds.

The only synthetic data provider to achieve SOC2 and ISO certifications

With data privacy and information security at the heart of everything we do, our efforts to ensure the privacy and integrity of our customers’ sensitive data by following strict security policies and procedures were officially recognized this year. In March, we received the SOC 2 Type 2 certification, an audit report capturing how a company safeguards customer data and how well its internal controls are operating. Later, in November, we were awarded the ISO 27001 certification, a globally recognized information security standard.

Thanks to both SOC2 and ISO certifications, our customers and partners can now speed up vetting processes and immediately get a clear picture of the advanced level of information security standards we hold.

Growing the Order of Mostlies

All this wouldn’t be possible without MOSTLY AI’s most important asset – our team (or Mostlies as we like to call them). In 2021, we welcomed quite a few new Mostlies to the team - amongst them new executives to strengthen our product, marketing and sales activities.

The first one to join the team this year was Andreas Ponikiewicz as our Vice President of Global Sales, who took the lead for MOSTLY AI's international sales team across Europe, North America and Asia and has brought our communication with the clients to the next level. Shortly afterward, we welcomed our new CTO, Kerem Erdem, onboard. As a true captain, he is leading us on the way to accelerate our tech performance and enable organizations to thrive in an ethical, responsible way with smart and safe synthetic data. To help get the word out, in early May, Sabine Klisch joined the team as VP Global Marketing and is now leading our creative marketing team on our journey to position MOSTLY AI as the global leader for smart synthetic data. And to spice up the story even more, we have added a special Italian ingredient – Mario Scriminaci, our new CPO who is making sure our synthetic data platform is the number one solution and provides our customers with better-than-real data.

The Best Employer Award

As already mentioned, Mostlies are the most important part of MOSTLY AI and it seems we are doing something right since we made it to the top 3 of Great Place to Work and received Austria's Best Employers 2021 award.

The MOSTLY AI team is truly diverse, with more than 15 different nationalities represented. Almost 40 members strong, we are organized in several teams, including data science, engineering, product, marketing, sales, and operations. The majority of us are based at our headquarters in Vienna, but an increasing number are working remotely, spread across the entire world. What started as a necessity because of COVID-19 has now become an integral part of our company culture.

Looking back, we can say this year has exceeded our expectations by far. One team of devoted professionals all united with the same vision – to empower people with data and build a smarter and fairer future together.

What’s next? 2022 is said to be the year of synthetic data. According to Gartner, by 2024, 60% of the data used for the development of AI and analytics projects will be synthetically generated. 2022 will also be the year of MOSTLY AI and we will have exciting news to share with you very soon.
