What is time series data?

Time series data is a sequence of data points that are collected or recorded at intervals over a period of time. What makes a time series dataset unique is the sequence or order in which these data points occur. This ordering is vital to understanding any trends, patterns, or seasonal variations that may be present in the data.

In a time series, data points are often correlated and dependent on previous values in the series. For example, when a financial stock price moves every fraction of a second, its movements are based on previous positions and trends. Time series data becomes a valuable asset in predicting future values based on these past patterns, a process known as forecasting.

Time series forecasting employs specialized statistical techniques to effectively model and generate future predictions. It is commonly used in business, finance, environmental science, and many other areas for decision-making and strategic planning.

Types of time series data

Time series data can be categorized in various ways, each with its own characteristics and analytical approaches.

Metric-based time series data

When measurements are taken at regular intervals, these are known as time series metrics. Metrics are crucial for observing trends, detecting anomalies, and forecasting future values based on historical patterns.

This type of time series data is commonly seen in financial datasets, where stock prices are recorded at consistent intervals, or in environmental monitoring, where temperature, pressure, or humidity data is collected periodically.


Event-based time series

Event-based time series data captures occurrences that happen at specific points in time, but not necessarily at regular intervals. While this data can still be aggregated into snapshots over traditional periods, the event-based time series data forms a more complex series of related activities.


Examples include system logging in IT networks, where each entry records an event like a system error or a transaction. Electronic health records capture patient interactions with doctors, with medical devices capturing complex health telemetry over time. City-wide sensor networks capture the telemetry from millions of individual transport journeys, including bus, subway, and taxi routes.

Event-based data is vital to understanding the sequences and relationships between occurrences that help drive decision-making in cybersecurity, customer behavioral analysis, and many other domains.
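To make the link between event-based and metric-based data concrete, here is a minimal sketch of rolling irregular events up into an hourly count. The event log and the `events_per_hour` helper are hypothetical, invented purely for illustration.

```python
from collections import Counter
from datetime import datetime

# Hypothetical event log: (timestamp, event type), arriving at irregular times.
events = [
    ("2024-03-01T09:02:11", "login"),
    ("2024-03-01T09:47:30", "error"),
    ("2024-03-01T11:15:05", "login"),
    ("2024-03-01T11:15:59", "purchase"),
    ("2024-03-01T11:58:42", "error"),
]


def events_per_hour(rows):
    """Count events in each hourly bucket, turning events into a metric."""
    counts = Counter()
    for ts, _event_type in rows:
        # Truncate the timestamp to the start of its hour.
        bucket = datetime.fromisoformat(ts).replace(minute=0, second=0)
        counts[bucket] += 1
    return dict(sorted(counts.items()))


hourly = events_per_hour(events)
for bucket, n in hourly.items():
    print(bucket.isoformat(), n)
```

Note that the 10:00 hour has no events and therefore no bucket at all. An aggregated metric may still contain gaps that need to be filled before analysis.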

Linear time series data

Time series data can also be categorized based on how the patterns within the time series behave over time. Linear time series data is more straightforward to model and forecast, with consistent behavior from one time period to the next.

Stock prices are a classic example of a linear time series. The value of a company’s shares is recorded at regular intervals, reflecting the latest market valuation. Analyzing this data over extended periods helps investors make informed decisions about buying and selling stocks based on historical performance and predicted trends.

Non-linear time series data

In contrast, non-linear time series data is often more complex, with changes that do not follow a predictable pattern. Such time series are often found in more dynamic systems when external factors force changes in behavior that may be short-lived.

For example, short-term demand modeling for public transport after an event or incident will likely follow a complex pattern that combines the time of day, geolocation information, and other factors, making reliable predictions more complicated. With IoT wearables for health, athletes are constantly monitored for early warning signals of injury or fatigue. These data points do not follow a traditional linear time series model; instead, they require a broader range of inputs to assess and predict areas of concern.

Behavioral time series data

Capturing time series data around user interactions or consumer patterns produces behavioral datasets that can provide insights into habits, preferences, or individual decisions. Behavioral time series data is becoming increasingly important to social scientists, designers, and marketers to better understand and predict human behavior in various contexts.

From measuring whether daily yoga practice can impact device screen time habits to analyzing over 285 million user events from an eCommerce website, behavioral time series data can exist as either metrics- or event-based time series datasets.

Metrics-based behavioral analytics are widespread in financial services, where customer activity over an extended period is used to assess suitability for loans or other services. Event-based behavioral analytics are often deployed as prescriptive analytics against sequences of events that represent transactions, visits, clicks, or other actions.

Organizations use behavioral analytics at scale to provide customers visiting websites, applications, or even brick-and-mortar stores with a “next best action” that will add value to their experience.

Despite the immense growth of behavioral data captured through digital transformation and investment programs, there are still major challenges to driving value from this largely untapped data asset class.

Since behavioral data typically stores thousands of data points per customer, individuals are increasingly likely to be re-identified, resulting in privacy breaches. Legacy data anonymization techniques, such as data masking, fail to provide strong enough privacy controls or remove so much from the data that it loses its utility for analytics altogether.

Examples of time series data

Let’s explore some common examples of time series data from public sources.

The US Federal Reserve's data platform, Federal Reserve Economic Data (FRED), collects time series data related to populations, employment, socioeconomic indicators, and many more categories.

Some of FRED’s most popular time series datasets include:

| Category | Source | Frequency | Data Since |
|---|---|---|---|
| Population | US Bureau of Economic Analysis | Monthly | 1959 |
| Employment (Nonfarm Private Payroll) | Automatic Data Processing, Inc. | Weekly | 2010 |
| National Accounts (Federal Debt) | US Department of the Treasury | Quarterly | 1966 |
| Environmental (Jet Fuel CO2 Emissions) | US Energy Information Administration | Annually | 1973 |

Beyond socioeconomic and political indicators, time series data plays a critical role in the decision-making processes behind financial services, especially banking activities such as trading, asset management, and risk analysis.

| Category | Source | Frequency | Data Since |
|---|---|---|---|
| Interest Rates (e.g., 3-Month Treasury Bill Secondary Market Rates) | Federal Reserve | Daily | 2018 |
| Exchange Rates (e.g., USD to EUR Spot Exchange Rate) | Federal Reserve | Daily | 2018 |
| Consumer Behavior (e.g., Large Bank Consumer Credit Card Balances) | Federal Reserve Bank of Philadelphia | Quarterly | 2012 |
| Markets Data (e.g., commodities, futures, equities, etc.) | Bloomberg, Reuters, Refinitiv, and many others | Real-Time | N/A |

The website kaggle.com provides an extensive repository of publicly available datasets, many recorded as time series.

| Category | Source | Frequency | Data Range |
|---|---|---|---|
| Environmental (Jena Climate Dataset) | Max Planck Institute for Biogeochemistry | Every 10 minutes | 2009-2016 |
| Transportation (NYC Yellow Taxi Trip Data) | NYC Taxi & Limousine Commission (TLC) | Monthly updates, with individual trip records | 2009- |
| Public Health (COVID-19) | World Health Organization | Daily | 2020- |

An emerging category of time series data relates to the growing use of Internet of Things (IoT) devices that capture and transmit information for storage and processing. IoT devices, such as smart energy meters, have become extremely popular in both industrial applications (e.g., manufacturing sensors) and commercial use.

| Category | Source | Frequency | Data Range |
|---|---|---|---|
| IoT Consumer Energy (Smart Meter Telemetry) | Jaganadh Gopinadhan (Kaggle) | Minute | 12-month period |
| IoT Temperature Measurements | Atul Anand (Kaggle) | Second | 12-month period |

How to store time series data

Once time series data has been captured, there are several popular options for storing, processing, and querying these datasets using standard components in a modern data stack or via more specialist technologies.

File formats for time series data

Storing time series data in file formats like CSV, JSON, and XML is common due to their simplicity and broad compatibility. CSV files in particular are ideal for smaller datasets, where ease of use and portability are critical.

Formats such as Parquet have become increasingly popular for storing large-scale time series datasets, offering efficient compression and high performance for analysis. However, Parquet can be more complex and resource-intensive than simpler file formats, and managing large numbers of Parquet files, especially in a rapidly changing time series context, can become challenging.

When more complex data structures are involved, JSON and XML formats provide a structured way to store time series data, complete with associated metadata, especially when using APIs to transfer information between systems. JSON and XML typically require additional processing to “flatten” the data for analysis and are not ideal for large datasets.

For most time series stored in files, it’s recommended to use the more straightforward CSV format where possible, switching to Parquet when data volumes affect storage efficiency and read/write speeds, typically at the gigabyte or terabyte scale. Likewise, a synthetically generated time series can be easily exported to tabular CSV or Parquet format for downstream analysis in various tools.
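As a rough illustration of the “flattening” step mentioned above, the sketch below converts a nested JSON payload into flat CSV rows using only the Python standard library. The payload layout and its field names (`sensor_id`, `unit`, `readings`, `ts`, `value`) are assumptions made up for this example, not a standard format.

```python
import csv
import io
import json

# Hypothetical API payload: series-level metadata plus nested readings.
payload = json.loads("""
{
  "sensor_id": "s-17",
  "unit": "celsius",
  "readings": [
    {"ts": "2024-03-01T00:00:00", "value": 20.5},
    {"ts": "2024-03-01T00:10:00", "value": 20.7}
  ]
}
""")


def flatten(doc):
    """Repeat the series-level metadata on every reading to get flat rows."""
    return [
        {
            "sensor_id": doc["sensor_id"],
            "unit": doc["unit"],
            "ts": reading["ts"],
            "value": reading["value"],
        }
        for reading in doc["readings"]
    ]


rows = flatten(payload)

# Write the flat rows as CSV (to an in-memory buffer here, instead of a file).
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["sensor_id", "unit", "ts", "value"])
writer.writeheader()
writer.writerows(rows)
csv_text = buffer.getvalue()
print(csv_text)
```

The trade-off is visible even in this tiny case: the CSV is easy to load into any tool, but the metadata is now repeated on every row.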

Time series databases

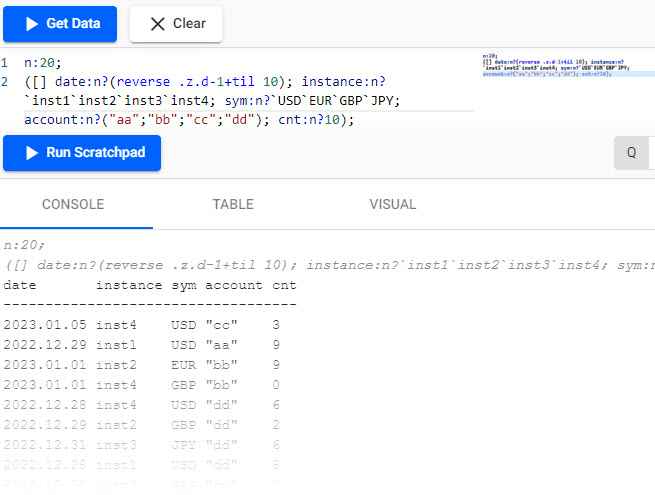

Dedicated time series databases, such as kdb+ from Kx Systems, are specifically designed to manage and analyze sequences of data points indexed over time. These databases are optimized for handling large volumes of data that are constantly changing or being updated, making them ideal for applications such as high-frequency trading in financial markets, IoT sensor data, or real-time monitoring.

Source: Kx query

Graph databases for time series

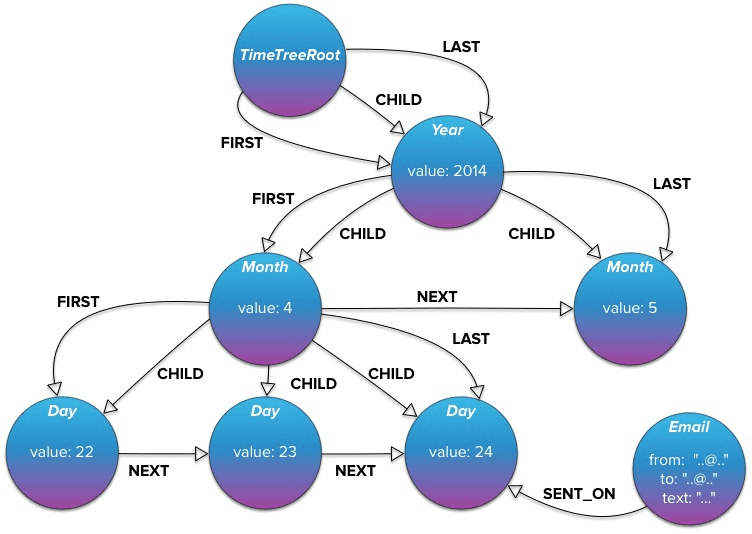

Graph databases like Neo4j offer a unique approach to storing time series data by representing it as a network of interconnected nodes and relationships. Graph databases allow for the modeling of complex relationships, providing insights that might be difficult to extract from traditional relational data models.

The ability to explore relationships efficiently in graph databases makes them suitable for analyses that require a deep understanding of interactions over time, adding a rich layer of context to the time series data.

In the example below, Neo4j can create a “TimeTree” graph data model that captures events used in risk and compliance analysis. Exploring emails sent at different times to different parties and any associated events from that period becomes possible.

Source: Neo4j time-tree

Relational databases for time series

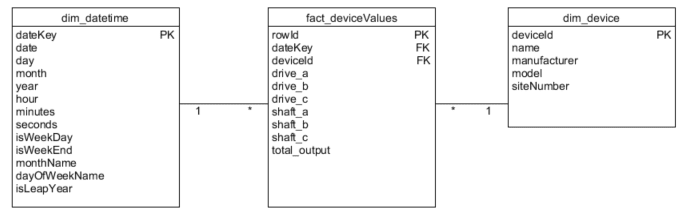

For decades, traditional relational database management systems (RDBMS) like Snowflake, Postgres, or Redshift have been used to store, process, and analyze time series data. One of the most popular relational data models for time series analysis is known as the star schema, where a central fact table (containing the time series data such as events, transactions, or behaviors) is connected to several dimension tables (e.g., customer, store, product, etc.) that provide rich analytical context.

By capturing events at a granular level, the time series data can be sliced and diced in many different ways, giving analysts a great deal of flexibility to answer questions and explore business performance. Usually, a date dimension table contains all the relevant context for a time series analysis, with attributes such as day of the week, month, and quarter, as well as valuable references to prior periods for comparison.
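The following is a minimal sketch of the star schema idea, using Python's built-in `sqlite3` module with an in-memory database. The table and column names (`fact_sales`, `dim_date`, `date_key`, and so on) are hypothetical, and a real warehouse would have several more dimensions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Date dimension: one row per calendar day with analytical attributes.
CREATE TABLE dim_date (
    date_key    TEXT PRIMARY KEY,   -- ISO date
    day_of_week TEXT,
    quarter     TEXT
);
-- Fact table: one row per event, pointing at the date dimension.
CREATE TABLE fact_sales (
    sale_id  INTEGER PRIMARY KEY,
    date_key TEXT REFERENCES dim_date(date_key),
    amount   REAL
);
INSERT INTO dim_date VALUES
    ('2024-03-29', 'Friday',   'Q1'),
    ('2024-03-30', 'Saturday', 'Q1'),
    ('2024-04-01', 'Monday',   'Q2');
INSERT INTO fact_sales (date_key, amount) VALUES
    ('2024-03-29', 10.0),
    ('2024-03-30', 25.0),
    ('2024-03-30', 5.0),
    ('2024-04-01', 40.0);
""")

# Slice the granular facts by an attribute that lives on the dimension.
sales_by_quarter = conn.execute("""
    SELECT d.quarter, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_date AS d ON d.date_key = f.date_key
    GROUP BY d.quarter
    ORDER BY d.quarter
""").fetchall()
print(sales_by_quarter)
```

Swapping `d.quarter` for `d.day_of_week` in the query regroups the same facts without touching the fact table, which is the flexibility the star schema is designed to provide.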

In a well-designed star schema model, the number of dimensions associated with a transactional fact table generally ranges between six and 15. These dimensions, which provide the contextual details necessary to understand and analyze the facts, depend on the specific analysis needs and the complexity of the business domain. MOSTLY AI can generate highly realistic synthetic data that fully retains the correlations from the original dimensions and fact tables across star schema data models with three or more entities.

Source: Stack Exchange

How to analyze time series data

Before analyzing a time series, there are several essential terms and concepts to review.

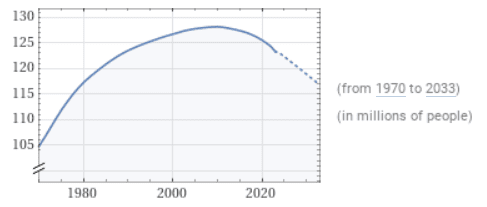

Trend

A trend is a long-term value increase or decrease within a time series. Trends do not have to be linear and may reverse direction over time.

Source: WolframAlpha

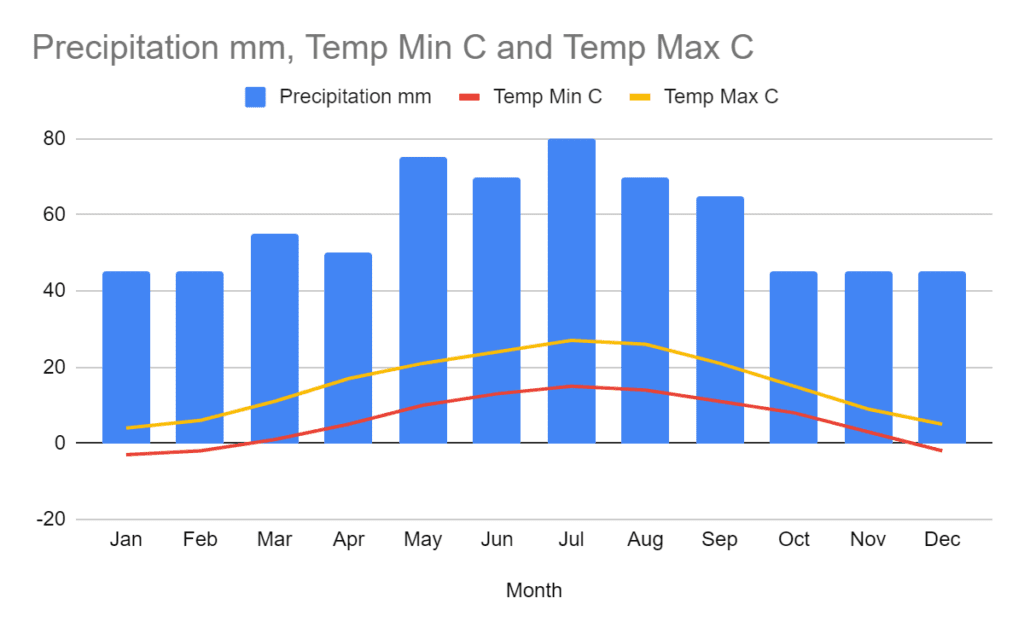

Seasonality

Seasonality is a pattern that occurs in a time series dataset at a fixed interval, such as the time of year or day of the week. Most commonly associated with physical properties such as temperature or rainfall, seasonality is also applied to consumer behavior driven by public holidays or promotional events.

Data retention over extended periods allows analysts to observe long-term patterns and variations. This historical perspective is essential for distinguishing between one-time anomalies and consistent seasonal fluctuations, providing valuable insights for forecasting and strategic planning.

Adapted from: Climates to Travel

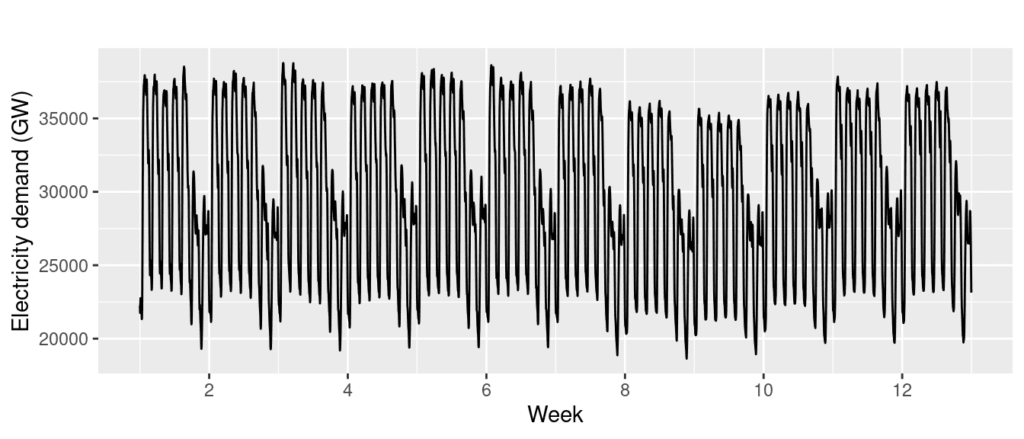

Cyclicity

A cyclic pattern occurs when observations rise and fall at non-fixed frequencies. Cycles often last for multiple years, and their duration usually cannot be determined in advance.

Source: Rob J. Hyndman

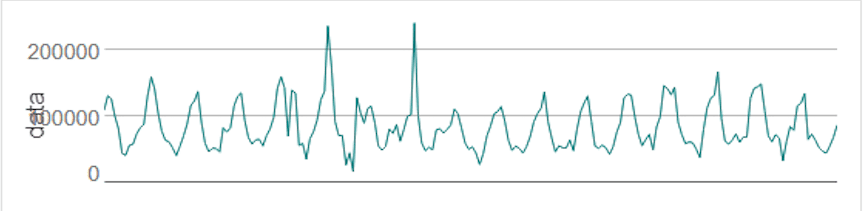

Random noise

The final component of a time series is random noise: whatever remains once any trend, seasonality, or cyclic signals have been accounted for. A time series that contains too much random noise will be challenging to forecast or analyze.

Preparing data for time series analysis: Fill the gaps

Once a time series dataset has been collected, it is vital to ensure that no dates are missing from the sequence. Review the granularity of the dataset and impute any missing elements to ensure a smooth sequence. The imputation approach will vary depending on the dataset, but a common approach is to fill each gap with an average of the nearest data points.
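Here is a minimal sketch of that nearest-neighbor averaging approach for a daily series. The `observed` values and the `fill_daily_gaps` helper are hypothetical. It assumes daily granularity and that the first and last days are present.

```python
from datetime import date, timedelta

# Hypothetical daily observations with one missing day (3 March).
observed = {
    date(2024, 3, 1): 10.0,
    date(2024, 3, 2): 12.0,
    date(2024, 3, 4): 16.0,
    date(2024, 3, 5): 15.0,
}


def fill_daily_gaps(series):
    """Return a complete daily series, imputing each missing day as the
    mean of the nearest observed values before and after it."""
    days = sorted(series)
    filled = {}
    current = days[0]
    while current <= days[-1]:
        if current in series:
            filled[current] = series[current]
        else:
            before = max(d for d in days if d < current)
            after = min(d for d in days if d > current)
            filled[current] = (series[before] + series[after]) / 2
        current += timedelta(days=1)
    return filled


complete = fill_daily_gaps(observed)
for day, value in complete.items():
    print(day.isoformat(), value)
```

Averaging neighbors is only one option. For long gaps or strongly seasonal data, other imputation strategies may be more appropriate.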

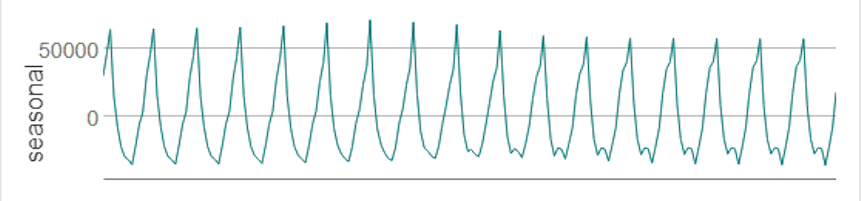

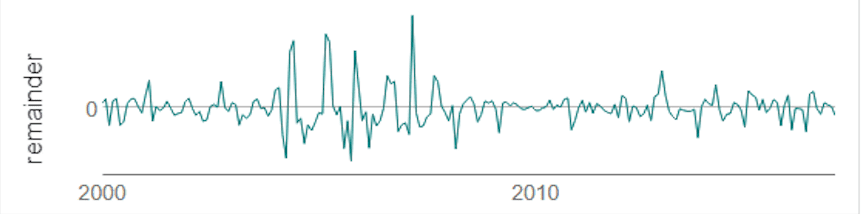

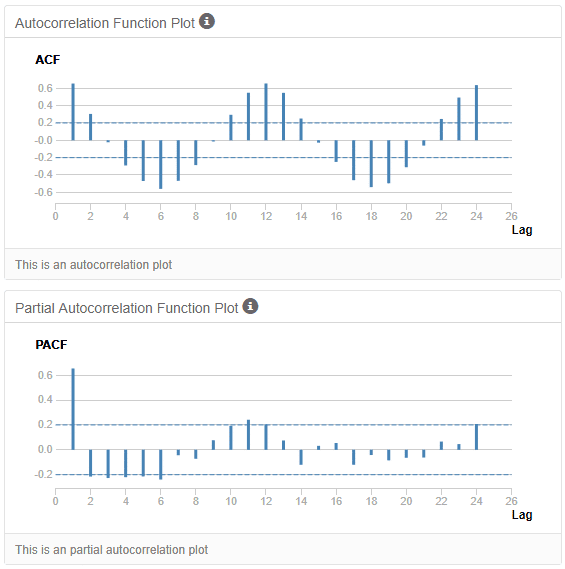

Exploring the signals within a time series with decomposition plots

The next step in time series analysis is to explore different univariate plots of the data to determine how to develop a forecasting model.

A time series plot can help assess whether the original time series data needs to be transformed or whether any outliers are present.

Source: Alteryx

A seasonal plot helps analysts explore whether seasonality exists within the dataset, its frequency, and cyclic behaviors.

Source: Alteryx

A trend analysis can explore the magnitude of the change that is identified during the time series and is used in conjunction with the seasonality chart to explore areas of interest in the data.

Source: Alteryx

Finally, a residual analysis shows any information remaining once seasonality and trend have been taken into account.

Source: Alteryx

Time series decomposition plots of this type are available in most data science environments, including R and Python.
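To show what such a decomposition computes underneath the plots, here is a simplified sketch of a classical additive decomposition in plain Python. It is an illustrative assumption of how the split can be done (a centered moving average for the trend, per-position averages for seasonality), not the exact algorithm used by any particular tool. The series itself is synthetic.

```python
def centered_moving_average(values, period):
    """Trend estimate; None where the window does not fit."""
    half = period // 2
    out = [None] * len(values)
    for i in range(half, len(values) - half):
        window = values[i - half:i + half + 1]
        if period % 2:
            out[i] = sum(window) / period
        else:
            # Even period: give the two end points half weight each.
            inner = sum(window[1:-1])
            out[i] = (0.5 * window[0] + inner + 0.5 * window[-1]) / period
    return out


def decompose_additive(values, period):
    """Split values into trend + seasonal + residual components."""
    trend = centered_moving_average(values, period)
    detrended = [v - t if t is not None else None for v, t in zip(values, trend)]
    # Average the detrended values at each position within the cycle.
    means = []
    for pos in range(period):
        vals = [d for i, d in enumerate(detrended)
                if d is not None and i % period == pos]
        means.append(sum(vals) / len(vals))
    offset = sum(means) / period  # center the seasonal effects on zero
    means = [m - offset for m in means]
    seasonal = [means[i % period] for i in range(len(values))]
    residual = [v - t - s if t is not None else None
                for v, t, s in zip(values, trend, seasonal)]
    return trend, seasonal, residual


# Synthetic series: linear trend plus a repeating pattern of length 4.
pattern = [3.0, -1.0, -2.0, 0.0]
series = [10 + 0.5 * i + pattern[i % 4] for i in range(16)]
trend, seasonal, residual = decompose_additive(series, period=4)
print([round(s, 2) for s in seasonal[:4]])
```

Because the synthetic series contains no noise, the residual component is essentially zero here. On real data, the residual is what remains for further inspection.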

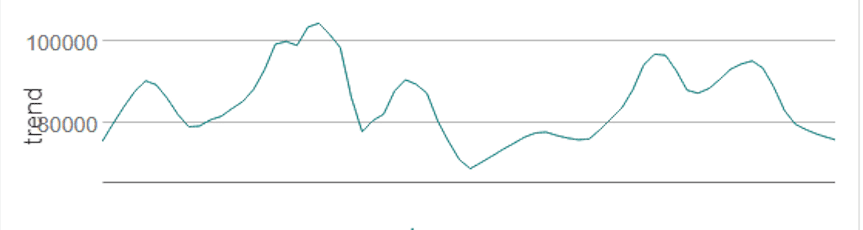

Exploring relationships between points in a time series: Autocorrelation

As explored previously, time series records have strong relationships with previous points in the data. The strength of these relationships can be measured through a statistical tool called autocorrelation.

An autocorrelation function (ACF) measures how much current data points in a time series are correlated to previous ones over different periods. It’s a method to understand how past values in the series influence current values.
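A minimal sketch of the sample autocorrelation at a given lag is shown below. The `autocorrelation` helper and the toy series are illustrative only.

```python
def autocorrelation(values, lag):
    """Sample autocorrelation of a series with itself shifted by `lag` steps."""
    n = len(values)
    mean = sum(values) / n
    denom = sum((v - mean) ** 2 for v in values)
    num = sum((values[t] - mean) * (values[t - lag] - mean)
              for t in range(lag, n))
    return num / denom


# Toy series that repeats every 4 steps.
series = [0, 1, 0, -1] * 6

for lag in range(5):
    print(lag, round(autocorrelation(series, lag), 3))
```

For this toy series the correlation is strongly negative at lag 2 (half a cycle) and strongly positive at lag 4 (a full cycle), which is the kind of signature an ACF plot makes visible.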

Source: Alteryx

When generating synthetic data, it’s important to preserve these underlying patterns and correlations. Accurate synthetic datasets can mimic these patterns, successfully retaining both the statistical properties as well as the time-lagged behavior of the original time series.

Building predictive time series forecasts: ARIMA

Once the exploration of a time series is complete, analysts can use their findings to build predictive models against the dataset to forecast future values.

ARIMA, AutoRegressive Integrated Moving Average, is a popular statistical method effective for time series data showing patterns or trends over time. It combines three key components:

- Autoregression (AR): Using the relationship between a current observation and several lagged observations.

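- Integration (I): Differencing the observations (subtracting the previous value from each value) to remove trends from the data, effectively rendering it “stationary.” Seasonal patterns are typically handled with an additional seasonal differencing step.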

- Moving Average (MA): Calculates the relationship between a current observation and the errors from previous predictions, helping to smooth out random noise in the data.
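To make the components above more tangible, here is a deliberately simplified sketch that combines only the first two: one round of differencing (the “I”) followed by a single-lag autoregression (the “AR”) fitted by least squares. It omits the moving-average term entirely, so it is not a full ARIMA implementation. In practice a statistics library would be used. All names and data are hypothetical.

```python
def difference(values):
    """First-order differencing: change between consecutive observations."""
    return [b - a for a, b in zip(values, values[1:])]


def fit_ar1(values):
    """Least-squares fit of x[t] = c + phi * x[t-1]."""
    x, y = values[:-1], values[1:]
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    phi = sxy / sxx
    c = mean_y - phi * mean_x
    return c, phi


def forecast_next(values):
    """One-step forecast: model the differences, then add back the last level."""
    diffs = difference(values)
    c, phi = fit_ar1(diffs)
    next_diff = c + phi * diffs[-1]
    return values[-1] + next_diff


# Toy series whose increments shrink toward 2 over time.
levels = [100.0, 104.0, 107.0, 109.5, 111.75, 113.875, 115.9375]
c, phi = fit_ar1(difference(levels))
prediction = forecast_next(levels)
print(round(c, 3), round(phi, 3), round(prediction, 3))
```

The toy increments were constructed to follow `1 + 0.5 * previous increment` exactly, so the fit recovers those two coefficients. Real data would leave errors behind, which is where the moving-average term comes in.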

Building predictive time series forecasts: ETS

An alternative approach is to use a method known as Error, Trend, Seasonality (ETS) that focuses on decomposing a time series into its error, trend, and seasonal components to predict future values:

- Error: As seen in the decomposition plot above, the error component captures randomness or noise in the data.

- Trend: Captures the long-term progression in the time series.

- Seasonality: Captures any systematic or calendar-related patterns.
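As a rough illustration, the sketch below implements Holt's linear method, a member of the exponential smoothing family that tracks a level and a trend. The seasonal component is left out to keep the example short, and the smoothing parameters `alpha` and `beta` are arbitrary values chosen for the demonstration rather than fitted ones.

```python
def holt_forecast(values, alpha, beta, horizon):
    """Holt's linear method: exponentially smoothed level and trend.

    alpha smooths the level, beta smooths the trend (both between 0 and 1).
    """
    level = values[0]
    trend = values[1] - values[0]
    for value in values[1:]:
        previous_level = level
        # Blend the new observation with the previous one-step forecast.
        level = alpha * value + (1 - alpha) * (level + trend)
        # Blend the latest change in level with the previous trend.
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return [level + step * trend for step in range(1, horizon + 1)]


history = [10.0, 12.0, 14.0, 16.0]
forecast = holt_forecast(history, alpha=0.5, beta=0.3, horizon=2)
print([round(f, 2) for f in forecast])
```

On this perfectly linear history the forecast simply continues the line. With noisy data, `alpha` and `beta` control how quickly the level and trend react to new observations.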

Reviewing forecasts: Visualization and statistics

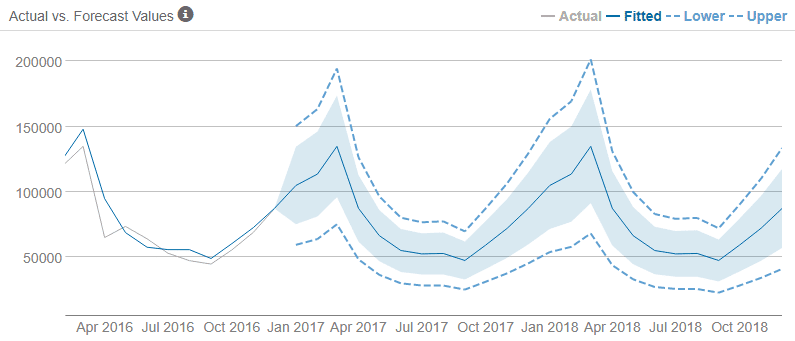

Once a model (or several models) has been created, the forecasts can be visualized alongside historical data to inspect how closely they follow the pattern of the existing time series data.

Source: phData

A quantitative approach to comparing time series forecasting models often employs either the AIC (Akaike Information Criterion) or AICc (Corrected Akaike Information Criterion), defined as follows:

- AIC: Balances a model’s fit to the data against the complexity of the model, with a lower AIC score indicating a better model. AIC is often used when the sample size is significantly larger than the number of model parameters.

- AICc: This approach prevents overfitting in time series forecasting models with a smaller sample size by adding a correction term to the AIC calculation.
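Both criteria can be written in a few lines. The sketch below uses the standard formulas, where `k` is the number of estimated parameters, `n` is the number of observations, and `log_likelihood` is the maximized log-likelihood of the fitted model. The example values are made up.

```python
def aic(log_likelihood, k):
    """Akaike Information Criterion: 2k - 2 ln(L). Lower is better."""
    return 2 * k - 2 * log_likelihood


def aicc(log_likelihood, k, n):
    """AIC plus a small-sample correction; requires n > k + 1."""
    return aic(log_likelihood, k) + (2 * k * (k + 1)) / (n - k - 1)


# Hypothetical model: log-likelihood of -120 with 3 parameters.
print(aic(-120.0, 3))
print(round(aicc(-120.0, 3, n=30), 3))    # small sample: visible correction
print(round(aicc(-120.0, 3, n=3000), 3))  # large sample: almost equal to AIC
```

The correction term shrinks toward zero as the sample grows, which is why AIC and AICc tend to agree on large datasets.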

How to anonymize a time series with synthetic data

Anonymizing time series data is notoriously difficult, and legacy anonymization approaches fail at this challenge.

But there is an effective alternative: synthetic data, which offers a solution to these privacy concerns.

Synthesizing time series data makes a lot of sense when dealing with behavioral data. Understanding the key concepts of data subjects is a crucial step in learning how to generate synthetic data in a privacy-preserving manner.

A subject is an entity or individual whose privacy you will protect. Behavioral event data must be prepared in advance so that each subject in the dataset (e.g., a customer, website visitor, hospital patient, etc.) is stored in a dedicated table, each with a unique row identifier. These subjects can have additional reference information stored in separate columns, including attributes that ideally don’t change during the captured events.

For data practitioners, the concept of the subject table is similar to a “dimension” table in a data warehouse, where common attributes related to the subjects are provided for context and further analysis.

The behavioral event data is prepared and stored in a separate linked table referencing a unique subject. In this way, one subject will have zero, one, or (likely) many events captured in this linked table.

Records in the linked table must be pre-sorted in chronological order for each subject to capture the time-sensitive nature of the original data. This model suits various types of event-based data, including insurance claims, patient health, eCommerce, and financial transactions.

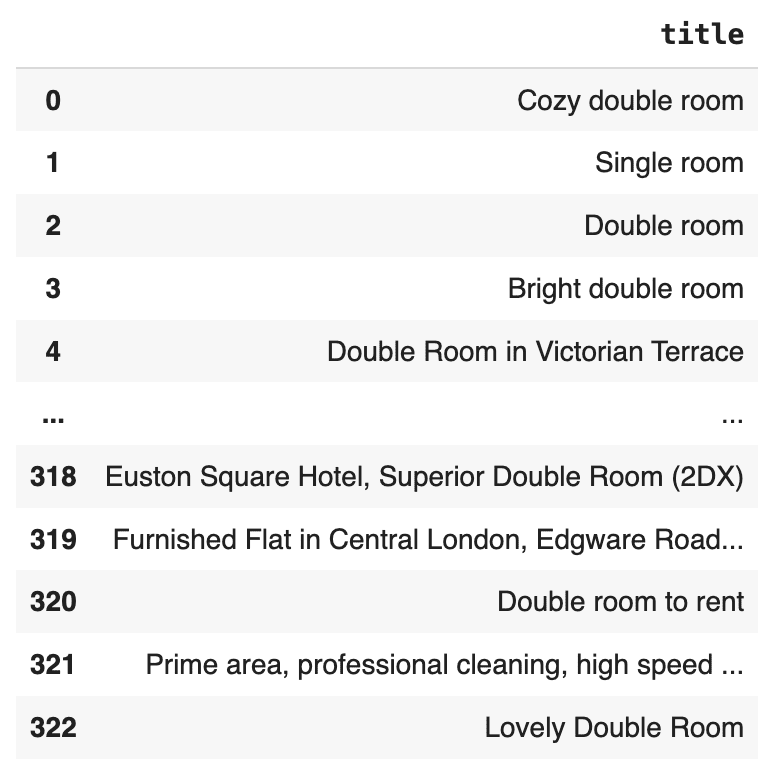

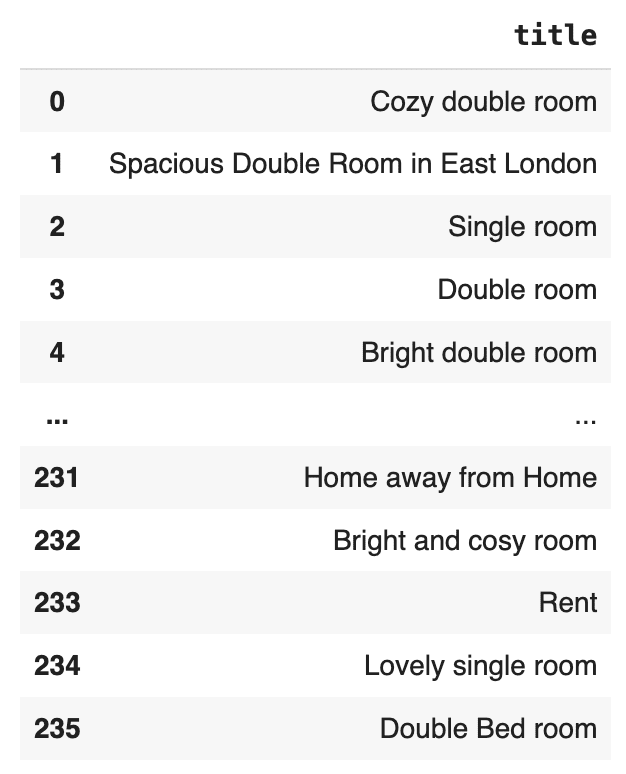

In the example of a customer journey, our tables may look like this.

We see customers stored in our subject table with their associated demographic attributes.

| ID | ZONE | STATE | GENDER | AGE_CAT | AGE |
|---|---|---|---|---|---|
| 1 | Pacific | Oregon | M | Young | 26 |
| 2 | Eastern | New Jersey | M | Medium | 36 |
| 3 | Central | Minnesota | M | Young | 17 |
| 4 | Eastern | Michigan | M | Medium | 56 |
| 5 | Eastern | New Jersey | M | Medium | 46 |
| 6 | Mountain | New Mexico | M | Medium | 35 |

In the corresponding linked table, we have captured events relating to the purchasing behavior of each of our subjects.

| USER_ID | DATE | NUM_CDS | AMT |
|---|---|---|---|
| 1 | 1997-01-01 | 1 | 11.77 |
| 2 | 1997-01-12 | 1 | 12 |
| 2 | 1997-01-12 | 5 | 77 |

In this example, user 1 visited the website on January 1st, 1997, purchasing 1 CD for $11.77. User 2 visited the website twice on January 12th, 1997, purchasing six CDs across these visits for a total of $89.
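The same reading of the linked table can be reproduced in a few lines of Python. This is a minimal sketch using the three purchase rows from the example. The helper name `sequences_by_subject` is hypothetical.

```python
from collections import defaultdict

# Linked table rows copied from the purchase example.
purchases = [
    {"USER_ID": 1, "DATE": "1997-01-01", "NUM_CDS": 1, "AMT": 11.77},
    {"USER_ID": 2, "DATE": "1997-01-12", "NUM_CDS": 1, "AMT": 12.0},
    {"USER_ID": 2, "DATE": "1997-01-12", "NUM_CDS": 5, "AMT": 77.0},
]


def sequences_by_subject(rows):
    """Group events per subject, sorted chronologically within each subject."""
    grouped = defaultdict(list)
    # ISO-formatted dates sort correctly as plain strings.
    for row in sorted(rows, key=lambda r: (r["USER_ID"], r["DATE"])):
        grouped[row["USER_ID"]].append(row)
    return dict(grouped)


sequences = sequences_by_subject(purchases)
summary = {
    user: {
        "events": len(seq),
        "cds": sum(r["NUM_CDS"] for r in seq),
        "amount": sum(r["AMT"] for r in seq),
    }
    for user, seq in sequences.items()
}
print(summary)
```

Subjects without any purchases simply do not appear in `sequences`, which matches the “zero, one, or many events” relationship between the subject table and the linked table.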

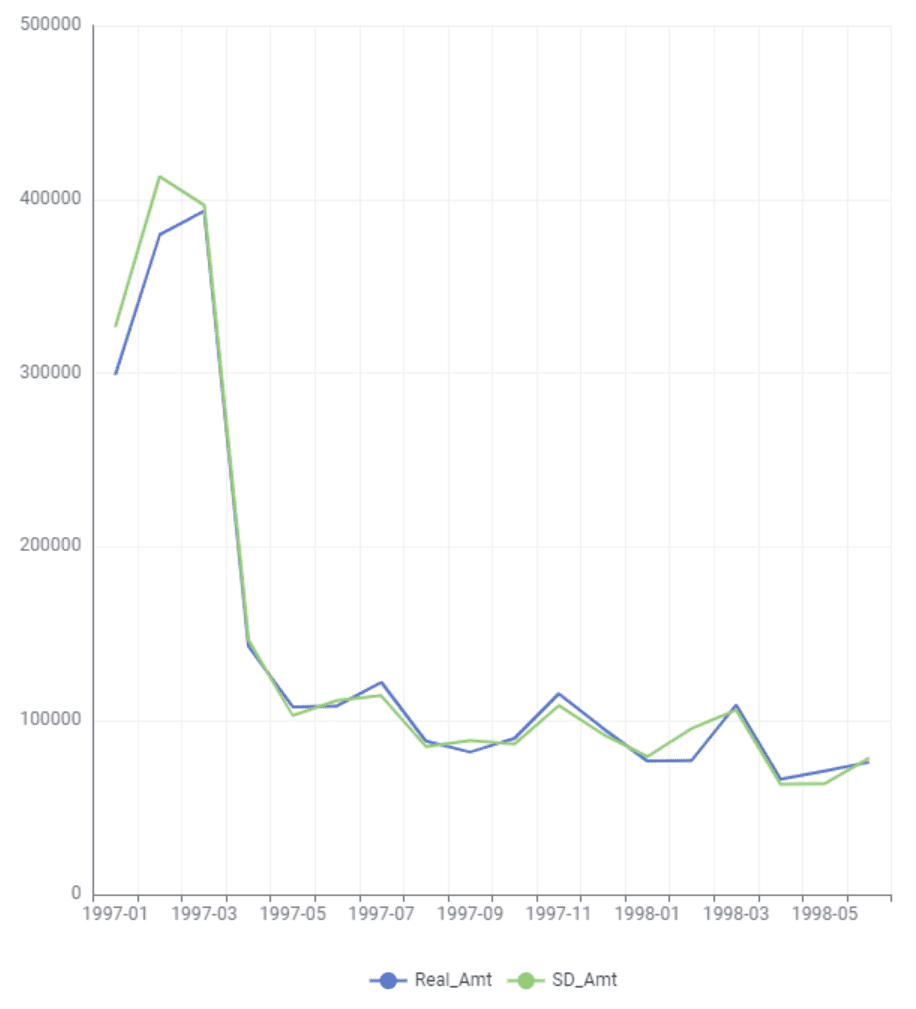

These consumer buying behaviors can be aggregated into standard metrics-based time series, such as purchases per week, month, or quarter, revealing general buying trends over time. Alternatively, the behavioral data in the linked table can be treated as discrete purchasing events happening at specific intervals in time.

Customer-centric organizations obsess over behaviors that drive revenue and retention beyond simple statistics. Analysts constantly ask questions about customer return rates, spending habits, and overall customer lifetime value.

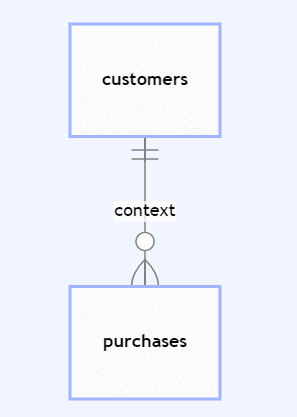

Synthetic data modeling: Relationships between subjects and linked data

Defining the relationship between customers and their purchases is an essential first step in synthetic data modeling. Ensuring that primary and foreign keys are identified between subject and linked tables enables synthetic data generation platforms to understand the context of each behavioral record (e.g., purchases) in terms of the subject (e.g., customers).
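Before handing tables to any synthetic data tool, it can help to sanity-check the keys yourself. The sketch below is a generic, hypothetical check (not part of any specific platform's API) that looks for duplicate primary keys in the subject table and for linked rows that reference a subject that does not exist.

```python
# Hypothetical subject IDs and linked purchase rows.
subject_ids = [1, 2, 3, 4, 5, 6]
purchases = [
    {"USER_ID": 1, "DATE": "1997-01-01"},
    {"USER_ID": 2, "DATE": "1997-01-12"},
    {"USER_ID": 9, "DATE": "1997-02-03"},  # no matching subject
]


def check_keys(primary_keys, linked_rows, foreign_key):
    """Return duplicated primary keys and linked rows with an orphaned foreign key."""
    seen, duplicates = set(), set()
    for key in primary_keys:
        if key in seen:
            duplicates.add(key)
        seen.add(key)
    orphans = [row for row in linked_rows if row[foreign_key] not in seen]
    return duplicates, orphans


duplicates, orphans = check_keys(subject_ids, purchases, "USER_ID")
print("duplicate subject IDs:", duplicates)
print("orphaned purchases:", orphans)
```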

Additional configurations, such as smart imputation, dataset rebalancing, or rare category protection, can be defined at this stage.

Synthetic data modeling: Sequence lengths and continuation

A time series sequence refers to a captured set of data over time for a subject within the dataset. For synthetic data models, generating the next element in a sequence given a previous set of features is a critical capability known as sequence continuation.

Defining sequence lengths in synthetic data models involves specifying the number of time steps or data points to be considered in each sequence within the dataset. This decision determines how much historical data the synthetic model will use to predict or generate the next element in the sequence.

The choice of sequence length depends significantly on the nature of the data and the specific application. Longer sequence lengths can capture more long-term patterns and dependencies but will also require more computational resources and may be less responsive to recent changes. Conversely, a shorter sequence length is more sensitive to recent trends but might overlook longer-term patterns.

In synthetic modeling, selecting a sequence length that strikes a balance between capturing sufficient historical or behavioral context and maintaining computational efficiency and performance is essential.
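One common way to picture the effect of sequence length is a sliding window: each training example pairs the previous `length` values with the value that follows them. The sketch below is a generic illustration of that idea, not a description of how any particular synthetic data model is implemented. The sequence values are made up.

```python
def make_windows(sequence, length):
    """Pair each run of `length` consecutive values with the value that follows."""
    return [
        (sequence[i:i + length], sequence[i + length])
        for i in range(len(sequence) - length)
    ]


# Hypothetical per-subject sequence, e.g. items bought on successive visits.
sequence = [5, 7, 6, 9, 8, 11]

short_context = make_windows(sequence, length=2)
long_context = make_windows(sequence, length=3)

print(len(short_context), short_context[0])
print(len(long_context), long_context[0])
```

A longer window gives each example more history to learn from but produces fewer examples from the same sequence, which is one face of the trade-off described above.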

Synthetic data generation: Accurate and privacy-safe results

Synthetic data generation can produce realistic and representative behavioral time series data that mimics the original distribution found in the source data without the possibility of re-identification. With privacy-safe behavioral data, it’s possible to democratize access to datasets such as these, developing more sophisticated behavioral models and deeper insights beyond basic metrics, “average” customers, and crude segmentation methods.


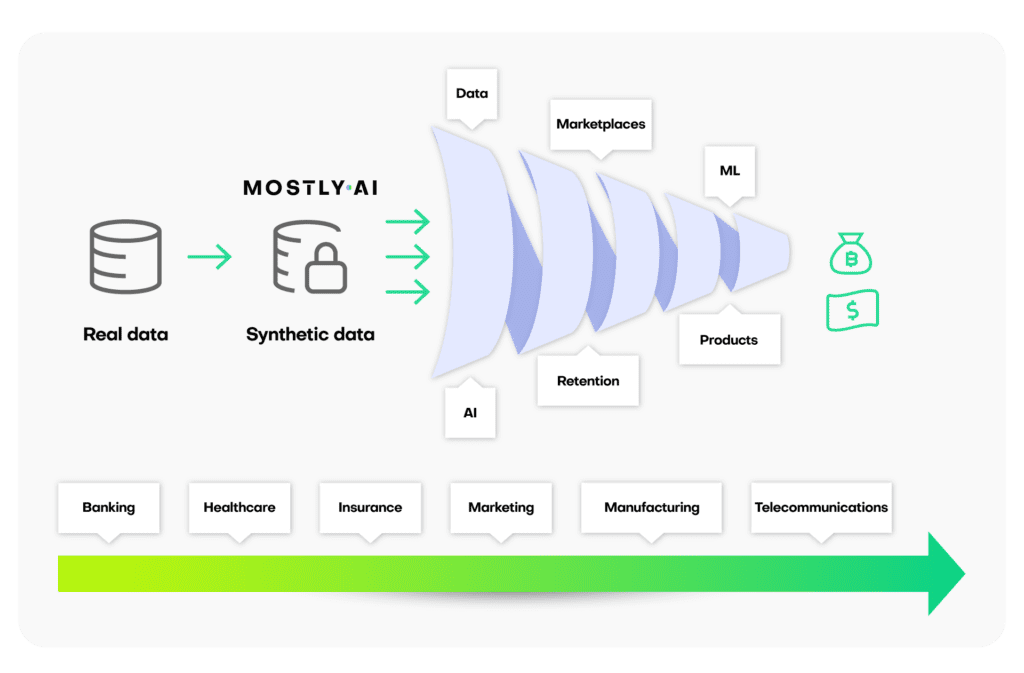

The definition of data monetization

Data monetization refers to converting data assets into revenue or value for an organization. The strategic practice of data monetization involves the collection, analysis, and sale of data to generate profits, improve decision-making, or enhance customer experiences. We can distinguish between internal and external data monetization, depending on where data consumption takes place. Businesses can acquire a competitive advantage in the digital world by exploiting insights and information from data. Data monetization is critical in today's economy, allowing businesses to maximize the value of their data resources while adhering to privacy and security policies and regulations.

Motivation for data monetization: Data as an asset

The idea that data is an asset is becoming more and more prevalent, changing how businesses function and develop. Data is now more than simply a byproduct of operations; it's a product itself, a useful tool that can be used to improve decision-making, generate new income streams, and gain a competitive edge. This paradigm shift raises a fundamental question: why not include data as an asset on a company's balance sheet, just like other tangible and intangible assets?

The recognition of data as an asset is not a theoretical proposition but a practical reality. Companies have come to understand that the enormous amounts of data they generate, gather, and retain may contain a wealth of information about consumer preferences, market trends, and insights. By evaluating this data, organizations can improve operations, create more successful marketing campaigns, and make better decisions. The outcome? Increased effectiveness, financial savings, and — above all — the generation of fresh revenue streams.

Take online giants like Amazon and Netflix as an example. They have transformed their business models by utilizing data. They increase revenue and customer retention by offering personalized suggestions based on an analysis of consumer behavior. They also generate extra revenue by selling insights to outside parties, which is another way they monetize data. They have been able to disrupt established markets and prosper in the digital age because of their data-centric strategy.

However, the potential of data as an asset extends far beyond these tech giants. All organizations, no matter how large or small, produce and gather data. Data is essential for improving goods and services, finding new market possibilities, and optimizing operations in a variety of industries, including manufacturing, financial services, and healthcare. Acknowledging data on balance sheets as an asset is a natural first step towards appreciating its actual value. This action may result in a more complete and transparent representation of a business's worth, giving stakeholders a greater understanding of its potential for expansion and data-driven capabilities.

How can synthetic data help?

When it comes to data monetization techniques, synthetic data can be a useful tool. It helps businesses maximize their data resources and opens up new revenue streams. How?

- Protecting sensitive information

By using synthetic data, businesses can profit from their data assets and get insightful knowledge without disclosing private or sensitive information. This is particularly important in sectors like healthcare and finance that are governed by stringent privacy laws, since actual data frequently contains sensitive or personal information. Legacy data anonymization tools can destroy data quality and decrease the value of data assets.

- Creating synthetic data marketplaces

It is possible to create data marketplaces using synthetic datasets, in which companies can provide insightful information to researchers, data scientists, and other enterprises. By selling or licensing these artificially generated datasets, new revenue streams may be generated.

- Enhancing data products

Synthetic data may be used by organizations that offer data services and products to improve and expand their product offerings. They can combine real and synthetic data to generate more comprehensive datasets that satisfy a wider range of customer expectations.

- Improving machine learning performance

Synthetic data offers a great upsampling method. It can be used in addition to real data to train machine learning models, increasing the models' accuracy and robustness. This improved model performance can be quite helpful when offering predictive analytics services to clients.

- Risk mitigation

By using synthetic data for data monetization, the dangers of sharing real data can be reduced. Potential clients or partners may be more inclined to work together and invest in data-driven solutions as a result.

- Reducing data acquisition costs

Organizations can use synthetic data to address the needs of data-hungry applications and analytics while lowering expenses associated with data collection and storage, as opposed to continually collecting and storing real data.

- Enabling data retention

Internal data retention policies and external legal requirements can make data retention impossible in the long run. However, the value of retaining data as an asset lies in its potential to provide historical insights, support trend analysis, and facilitate future decision-making. Retaining synthetic versions of datasets that must be deleted is a compliant and data-friendly solution, ensuring that the organizational knowledge base remains intact for strategic planning and informed decision-making while adhering to privacy and regulatory standards.

For the aforementioned reasons, synthetic data is an invaluable enabler that helps businesses maximize their data assets while resolving privacy issues and cutting expenses. It provides more assurance over data security and privacy compliance when entering into partnerships for data sharing, developing innovative data products, and expanding revenue streams.

Data monetization use cases

Synthetic data makes granular-level information accessible without requiring individual consent to share. This makes it possible for businesses to profit from their data assets while upholding data security and privacy, adhering to legal obligations, and making use of data-driven insights to improve decision-making and open up new revenue streams. This section will examine particular use cases from a variety of sectors, demonstrating how synthetic data can revolutionize data monetization by providing a competitive advantage and ensuring compliance with data protection laws.

- Data monetization in financial services

Financial institutions can use synthetic data in various ways — for example, to create credit scoring models and assess the risk of lending to individuals or businesses. By having access to granular-level information, they can make more informed decisions without violating data privacy regulations. Synthetic data can emulate the behavior of real customers without needing their consent. Banks can monetize this synthetic data by offering their advanced credit scoring and risk assessment models to other financial institutions or third-party vendors. These models, based on synthetic data, can provide valuable insights into creditworthiness and risk assessment. By licensing or selling these models, financial institutions can create an additional revenue stream while maintaining data privacy and security, making it a win-win scenario for both the bank and its partners.

- Marketing

Marketing firms can use synthetic data to segment customer profiles and create personalized marketing campaigns. This data can be shared with clients or partners without the need for individual customer consent. For example, banks can use synthetic data to optimize product recommendations for specific customer segments, creating personalized customer experiences and increasing customer satisfaction.

Marketing firms can monetize this valuable resource by offering their expertise in customer segmentation and their personalized marketing strategies based on synthetic data to other businesses in need of such services. By providing data-driven marketing solutions, marketing agencies can not only enhance their client offerings but also establish additional revenue streams through consulting and licensing agreements. This approach drives business growth and maintains the privacy and security of customer data, ensuring compliance with data protection regulations.

- Telecommunications

Telecommunications businesses can profit from synthetic data for network optimization. Network traffic and usage patterns can be simulated with synthetic data, which helps with infrastructure design and resource allocation. This may result in lower infrastructure costs and better service quality.

Telecommunication companies can monetize this expertise, which is driven by synthetic data, by offering it as a paid service to other providers or to companies looking to improve their network performance. By delivering data-driven network solutions based on synthetic data, telecom firms can add value-added products to their offerings and diversify their revenue streams.

Conclusion

In this era of data-driven innovation, the power of synthetic data in data monetization cannot be overstated. It allows organizations to tap into the wealth of insights hidden within their data while upholding stringent privacy regulations and ensuring data security. From financial institutions fine-tuning credit risk models to marketing firms creating personalized campaigns and telecommunication companies optimizing networks, synthetic data is the key to unlocking new revenue streams.

For those seeking a private and secure synthetic data generation solution, MOSTLY AI's platform stands as a beacon. Our “private by default” synthetic data ensures that sensitive information remains protected while still enabling organizations to make the most of their data assets.

Ready to experience the benefits of synthetic data monetization with MOSTLY AI? Get started today by registering for our free version, and don't hesitate to contact us for more details. Your journey towards smarter, more profitable data-driven decisions begins here.

Data governance is a data management framework that ensures data is accurate, accessible, consistent, and protected. For Chief Information Officers (CIOs), it’s also the strategic blueprint that communicates how data is handled and protected across the organization.

Governance ensures that data can be used effectively, ethically, and in compliance with regulations while implementing policies to safeguard against breaches or misuse. As a CIO, mastering data governance requires a delicate balance between maintaining trust, privacy, and control of valuable data assets while investing in innovation and long-term business growth.

Ultimately, successful business innovation depends on data innovation. CIOs can build a collaborative, innovative culture based on data governance where every executive stakeholder feels a closer ownership of digital initiatives.

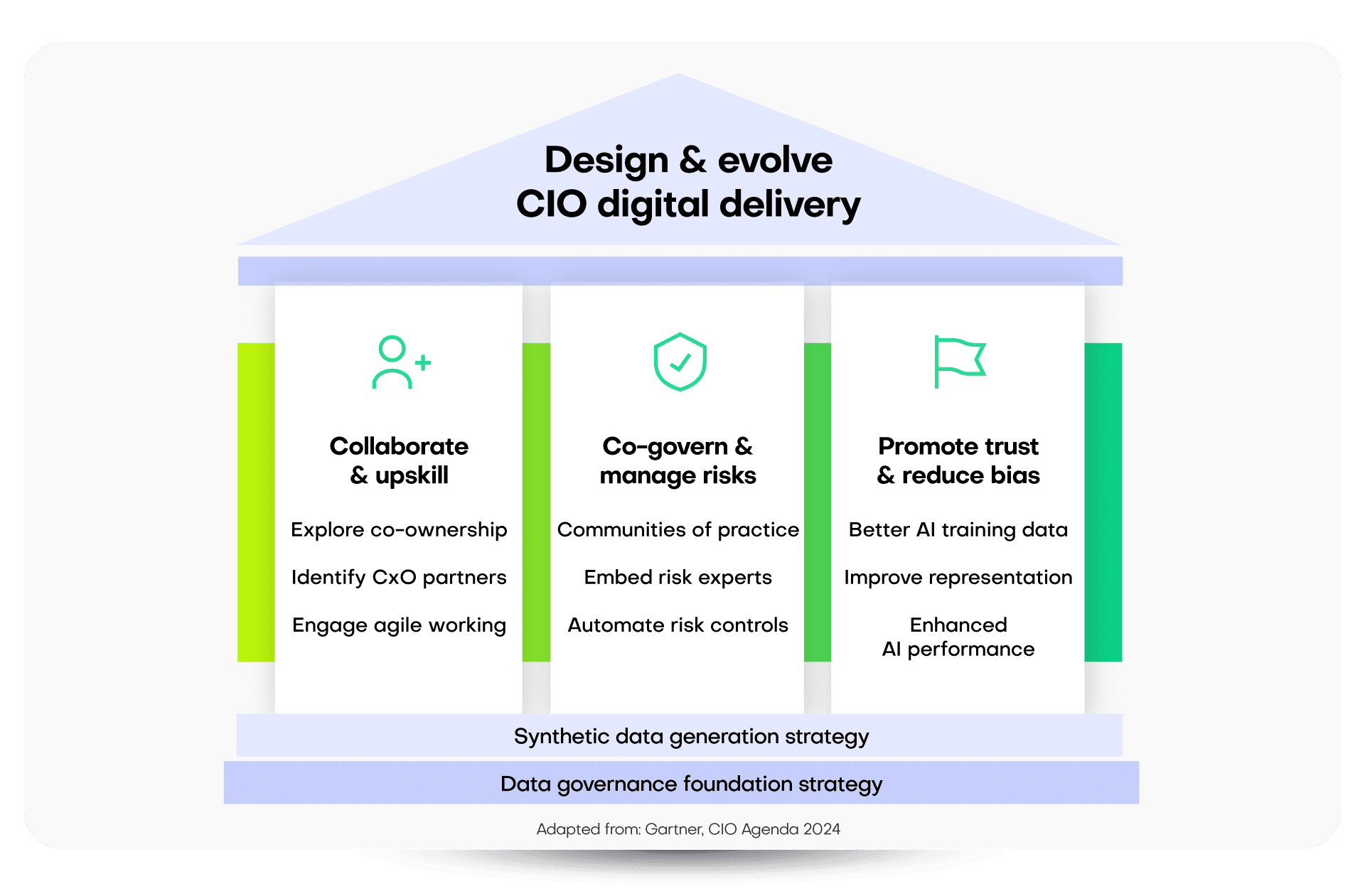

Partnerships for data governance: the CIO priority in 2024

Gartner forecasts that in 2024 CIOs will increasingly be asked to do more with less, with tighter budgets and a watchful eye on efficiency needed to meet growing demands around digital leadership, delivery, and governance.

CIOs find themselves at a critical moment that requires them to manage a complex digital landscape and operating model while taking responsibility and ownership for technology-enabled innovation around data, analytics, and artificial intelligence (AI).

These emerging priorities demand a new approach to data governance. CIOs and other C-suite executives must rethink their collaboration strategies. By tapping into domain expertise and building communities of practice, organizations can cut costs and reduce operational risks. This transformation helps reposition CIOs as vital strategic partners within their organizations.

Among the newest tools for CIOs is AI-generated synthetic data, which stands out as a game-changer. It sparks innovation and fosters trusted relationships between business divisions, providing a secure and versatile base for data-driven initiatives. Synthetic data is fast becoming an everyday data governance tool used by AI-savvy CIOs.

What is AI-generated synthetic data?

Synthetic data is created using AI. It replicates the statistical properties of real-world data but keeps sensitive details private. Synthetic data can be safely used instead of the original data — for example, as training data for building machine learning models and as privacy-safe versions of datasets for data sharing.

This characteristic is invaluable for CIOs who manage risk and cybersecurity, work to contract with external parties for innovation or outsourced development, or lead teams to deploy data analytics, AI, or digital platforms. This makes synthetic data a tool to transform data governance throughout organizations.

Synthetic data use cases are far-reaching, particularly in enhancing AI models, improving application testing, and reducing regulatory risk. With a synthetic data approach, CIOs and data leaders can drive data democratization and digital delivery without the logistical issues of data procurement, data privacy, and traditionally restrictive governance controls.

Synthetic data is an artificial version of your real data

Synthetic data is NOT mock data

Synthetic data is smarter than data anonymization

Three CIO strategies for improved delivery with synthetic data

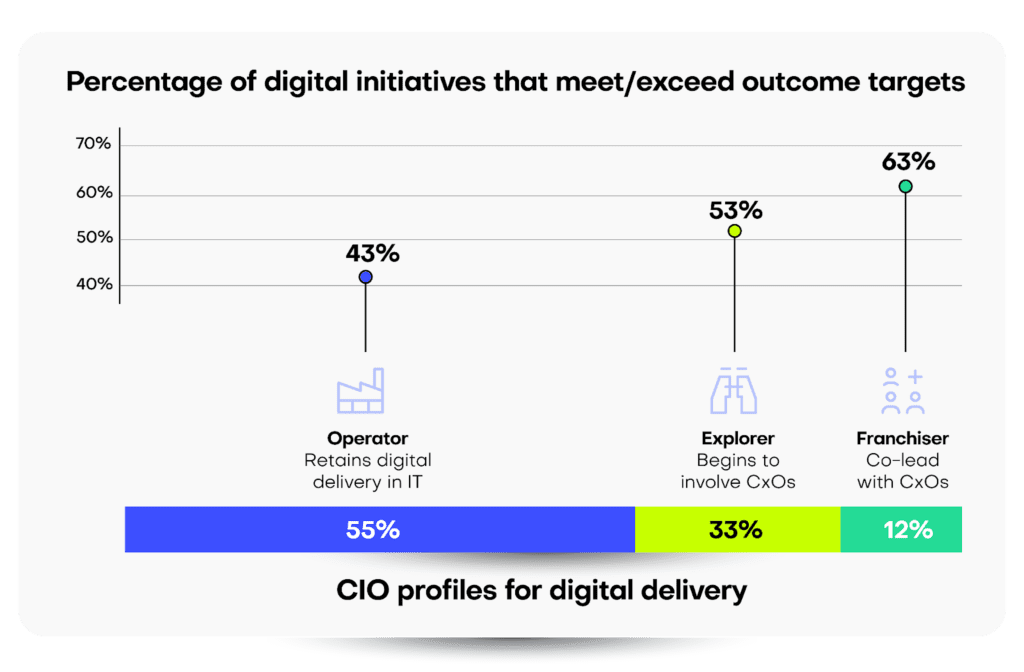

To understand the impact of synthetic data on data governance, consider the evolving role of CIOs:

- Traditional Role: 55% of CIOs surveyed maintain complete control over their digital and technical domains.

- Shift to Partnership: Conversely, 12% of CIOs now share responsibilities for digital delivery, embracing equal partnerships with their business counterparts.

The real surprise from Gartner’s findings comes from the actual outcomes of these reported digital projects, with a stark, inverse relationship between pure CIO ownership and the ability to meet or exceed project targets. Indeed, only 43% of digital initiatives meet this threshold when CIOs are in complete control, versus 63% for projects where the CIO co-leads delivery with other CxO executives.

So, how can CIOs leverage synthetic data to drive successful business innovation across the enterprise? What steps should a traditional Chief Information Officer take to move from legacy practices to an operating model that shares leadership, delivery, and data governance?

Strategy 1: Upskilling with synthetic data

Enhancing collaboration between CIOs and business leaders

To educate and equip business leaders with the skills they need to co-lead digital initiatives, there are shared challenges to overcome. Pain points are no longer simply an “IT issue,” and new, agile ways of working are needed that share responsibility between previously siloed teams.

In these first steps, synthetic data can feel like uncharted territory, with great opportunities to democratize data across the organization and recreate real-world scenarios without compromising privacy or confidentiality.

As business and technology teams work more closely together to develop digital solutions, synthetic data can bridge the gap between these groups, offering safe and effective development and testing environments for new platforms or applications or providing domain experts with valuable insights without risking the underlying data assets.

Strategy 2: Risk management through synthetic data

Creating safe, collaborative spaces for cross-functional teams

A shared responsibility model democratizes digital technology delivery, with synthetic data serving as common ground for various CxOs to collaborate on business initiatives in a safe, versatile environment.

Communities of practice (CoPs) ensure that autonomy is balanced with a collective feedback structure that can collect and centralize best practices from domain experts without overburdening a project with excessive bureaucracy.

This approach can also extend beyond traditional organizational boundaries—for example, contracting with external parties. Synthetic data alleviates the risk typically associated with sharing sensitive information (especially outside of company walls) while enabling partners access to the depth of data required for meaningful innovation and delivery.

Strategy 3: Cultural shifts in AI delivery

Using synthetic data to promote ethical AI practices

Business demand for AI far outstrips supply on CIOs’ 2024 roadmaps. Synthetic data addresses this challenge by:

- Promoting Trust: Synthetic data allows precise control over its generation process. This enables analysts to investigate outlier data points thoroughly. Additionally, it aids data scientists in explaining and stress-testing AI models to guarantee fair representation and consistent reliability for diverse use cases.

- Reducing Bias: Synthetic data generation can rebalance data distribution across data categories without losing integrity or detail.

- Enhanced Performance: Feeding AI models better training data leads to more precise personalization, robust fraud detection capabilities, and improved customer experiences.

However, adopting synthetic data in co-led teams isn’t just a technical approach; it’s cultural, too. CIOs can lead from the front and underscore their commitment to social responsibility by promoting ethical data practices to challenge data bias or representation issues. Shlomit Yanisky-Ravid, a professor at Fordham University’s School of Law, says that it’s imperative to understand what an AI model is really doing; otherwise, we won’t be able to think about the ethical issues around it. “CIOs should be engaging with the ethical questions around AI right now,” she says. “If you wait, it’s too late.”

The data governance journey for CIOs: From technology steward to C-suite partner

A successful digital delivery operating model requires a rethink in the ownership and control of traditional CIO responsibilities. Who should lead an initiative? Who should deliver key functionality? Who should govern the resulting business platform?

The results from Gartner’s survey provide some answers to these questions. They point clearly to the effectiveness of shared responsibility — working across C-suite executives for delivery versus a more siloed approach.

Here’s how CIOs can navigate this journey:

- Encourage experimentation: Build a culture that embraces synthetic data to achieve rapid prototyping and iterative feedback for digital delivery.

- Bridge the IT-Business divide: Use synthetic data to democratize data and share digital delivery responsibilities across the C-suite.

- Expand collaboration: When data privacy or intellectual property concerns restrict data sharing, synthetic data can enable collaboration, allowing for innovation or external partnerships without increased risks.

Synthetic data enables different departments to innovate without direct reliance on overburdened IT departments. When real-world data is slow to provision and often loaded with ethical or privacy restrictions, synthetic data offers a new approach to collaboration and digital delivery.

With these strategic initiatives on the corporate agenda for 2024 and beyond, CIOs must find new and efficient ways to deliver value through partnerships while building stronger strategic relationships between executives.

Advancing data governance initiatives with synthetic data

For CIOs ready to take the next step, embracing synthetic data within a data governance strategy is just the beginning. Discover more about how synthetic data can revolutionize your enterprise!

Get in touch for a personalized demo

In this tutorial, you will learn how to tackle the problem of missing data in an efficient and statistically representative way using smart, synthetic data imputation. You will use MOSTLY AI’s Smart Imputation feature to uncover the original distribution for a dataset with a significant percentage of missing values. This skill will give you the confidence to tackle missing data in any data analysis project.

Dealing with datasets that contain missing values can be a challenge. The presence of missing values can make the rest of your dataset non-representative, which means that it provides a distorted, biased picture of the overall population you are trying to study. Missing values can also be a problem for your machine learning applications, specifically when downstream models are not able to handle missing values. In all cases, it is crucial that you address the missing values as accurately as possible and in a statistically representative way to avoid losing valuable data.

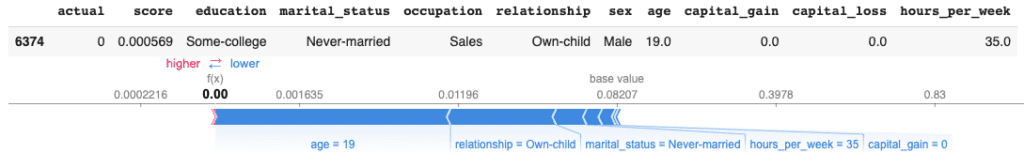

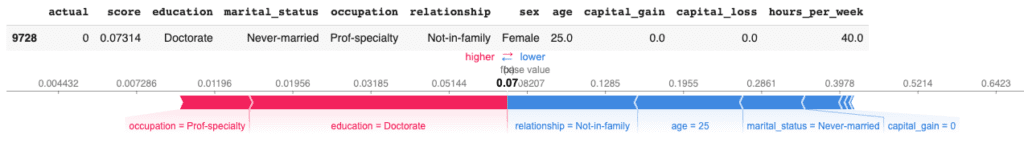

To tackle the problem of missing data, you will use the data imputation capability of MOSTLY AI’s free synthetic data generator. You will start with a modified version of the UCI Adult Income dataset that has a significant portion (~30%) of missing values for the age column. These missing values have been intentionally inserted at random, with a specific bias for the older segments of the population. You will synthesize this dataset and enable the Smart Imputation feature, which will generate a synthetic dataset that does not contain any missing values. With this smartly imputed synthetic dataset, it is then straightforward to accurately analyze the population as if all values were present in the first place.

The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

Dealing with missing data

Missing data is a common problem in the world of data analysis and there are many different ways to deal with it. While some may choose to simply disregard the records with missing values, this is generally not advised as it causes you to lose valuable data for the other columns that may not have missing data. Instead, most analysts will choose to impute the missing values.

This can be a simple data imputation by, for example, calculating the mean or median value of the column and using this to fill in the missing values. There are also more advanced data imputation methods available. Read the article comparing data imputation methods to explore some of these techniques and learn how MOSTLY AI’s Smart Imputation feature outperforms other data imputation techniques.
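As a minimal sketch of the simple approach described above, the snippet below fills missing ages with the column mean and the column median using pandas. The toy `age` values are invented for illustration and are not taken from the tutorial dataset.

```python
import numpy as np
import pandas as pd

# toy data, invented for illustration (not the tutorial dataset)
toy = pd.DataFrame({"age": [22, 35, np.nan, 41, np.nan, 58, 29, np.nan, 63, 47]})

# simple imputation: replace every missing value with one summary statistic
mean_imputed = toy["age"].fillna(toy["age"].mean())
median_imputed = toy["age"].fillna(toy["age"].median())

print(f"missing before: {toy['age'].isna().sum()}")
print(f"missing after:  {mean_imputed.isna().sum()}")

# every gap receives the same value, so the spread of the column shrinks
print(f"std of observed values: {toy['age'].std():.2f}")
print(f"std after mean fill:    {mean_imputed.std():.2f}")
```

Because every gap is filled with the same number, this approach removes the missing values but also narrows the distribution, which is one reason more advanced methods exist.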

Explore the original data

Let’s start by taking a look at our original dataset. This is the modified version of the UCI Adult Income dataset with ~30% missing values for the age column.

If you’re following along in Google Colab, you can run the code below directly. If you are running the code locally, make sure to set the repo variable to the correct path.

import pandas as pd

import numpy as np

# let's load the original data file, that includes missing values

try:

from google.colab import files # check whether we are in Google colab

repo = "https://github.com/mostly-ai/mostly-tutorials/raw/dev/smart-imputation"

except:

repo = "."

# load the original data, with missing values in place

tgt = pd.read_csv(f"{repo}/census-with-missings.csv")

print(

f"read original data with {tgt.shape[0]:,} records and {tgt.shape[1]} attributes"

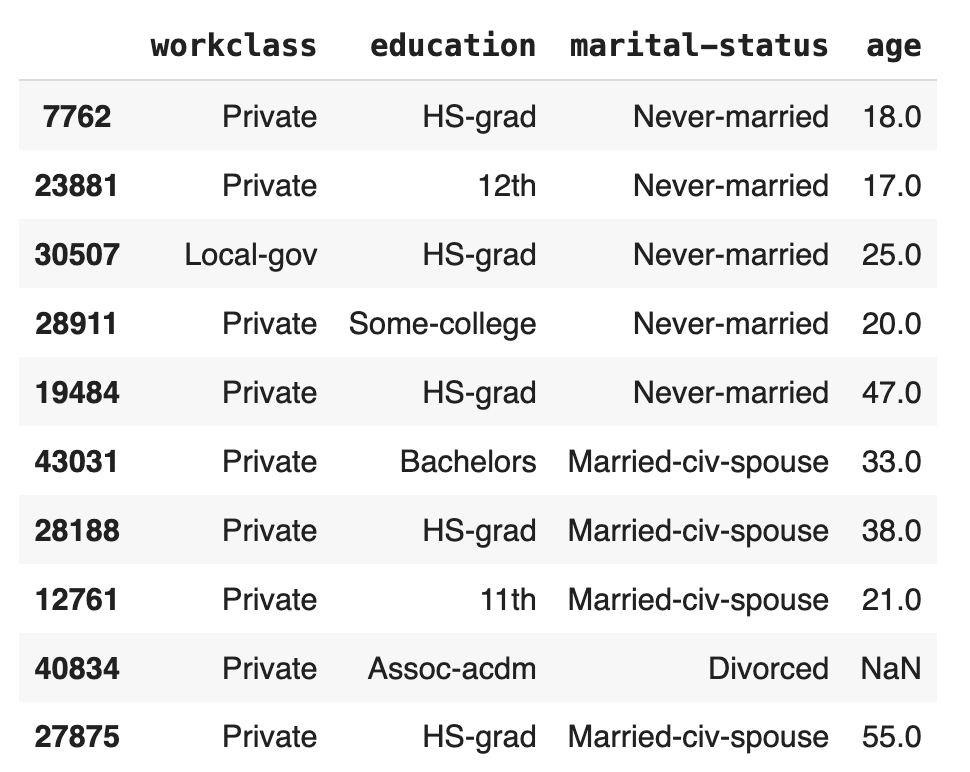

)Let’s take a look at 10 random samples:

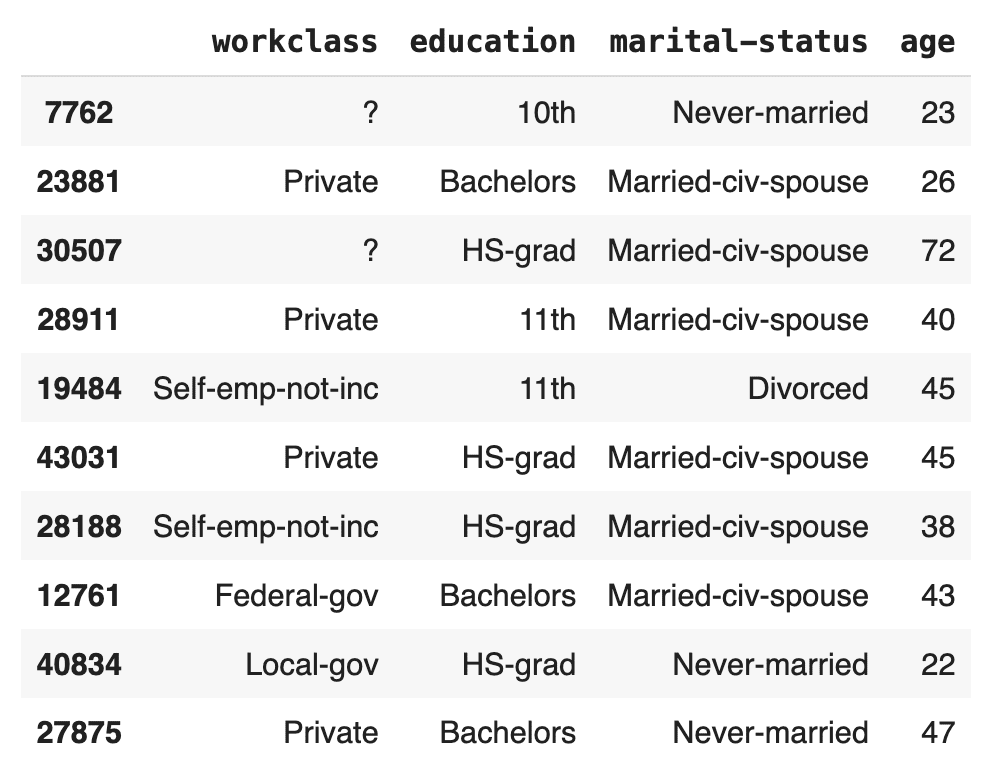

# let's show some samples
tgt[["workclass", "education", "marital-status", "age"]].sample(
    n=10, random_state=42
)

We can already spot 1 missing value in the age column.

Let’s confirm how many missing values we are actually dealing with:

# report share of missing values for column `age`
print(f"{tgt['age'].isna().mean():.1%} of values for column `age` are missing")

32.7% of values for column `age` are missing

Almost one-third of the age column contains missing values. This is a significant amount of relevant data – you would not want to discard these records from your analysis.

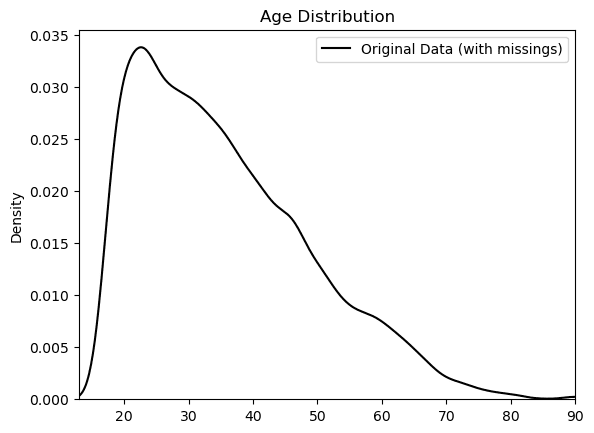

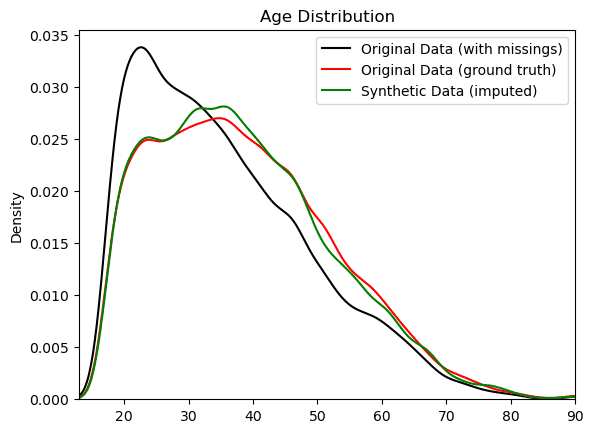

Let’s also take a look to see what the distribution of the age column looks like with these missing values.

# plot distribution of column `age`
import matplotlib.pyplot as plt

tgt.age.plot(kind="kde", label="Original Data (with missings)", color="black")
_ = plt.legend(loc="upper right")
_ = plt.title("Age Distribution")
_ = plt.xlim(13, 90)
_ = plt.ylim(0, None)

This might look reasonable, but remember that this distribution is showing you only two-thirds of the actual population. If you were to analyze or build machine learning models on this dataset as it is, chances are high that you would be working with a significantly distorted view of the population, which would lead to poor analysis results and decisions downstream.
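To see why missingness that depends on the value itself distorts the picture, here is a small simulation under assumed conditions: ages are drawn from a made-up uniform distribution, and the probability of a value going missing rises with age. The distribution, the seed, and the missingness rule are assumptions for illustration, not a description of how the tutorial dataset was produced.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)

# hypothetical population: 10,000 ages between 17 and 90 (inclusive)
true_age = pd.Series(rng.integers(17, 91, size=10_000), name="age").astype(float)

# assumed rule: the older the person, the more likely the value is missing
p_missing = (true_age - 17) / (90 - 17) * 0.6  # 0% at age 17, 60% at age 90
is_missing = rng.random(true_age.size) < p_missing
observed_age = true_age.mask(is_missing)  # set the selected values to NaN

print(f"share missing:     {observed_age.isna().mean():.1%}")
print(f"true mean age:     {true_age.mean():.1f}")
print(f"observed mean age: {observed_age.mean():.1f}")  # NaN values are skipped
```

In this setup the observed mean comes out lower than the true mean, because older people are under-represented among the values that survive.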

Let’s take a look at how we can use MOSTLY AI’s Smart Imputation method to address the missing values and improve the quality of your analysis.

Synthesize data with smart data imputation

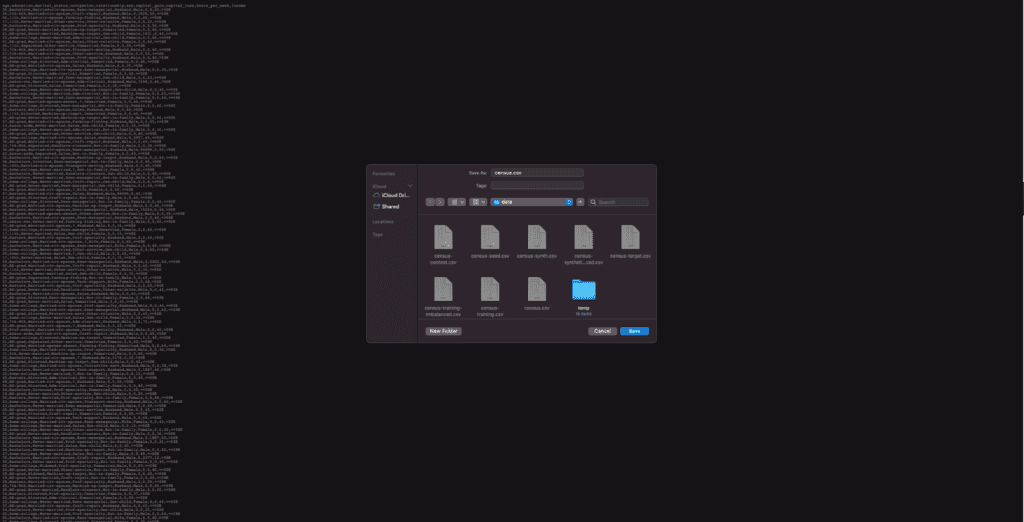

Follow the steps below to download the dataset and synthesize it using MOSTLY AI. For a step-by-step walkthrough, you can also watch the video tutorial.

- Download

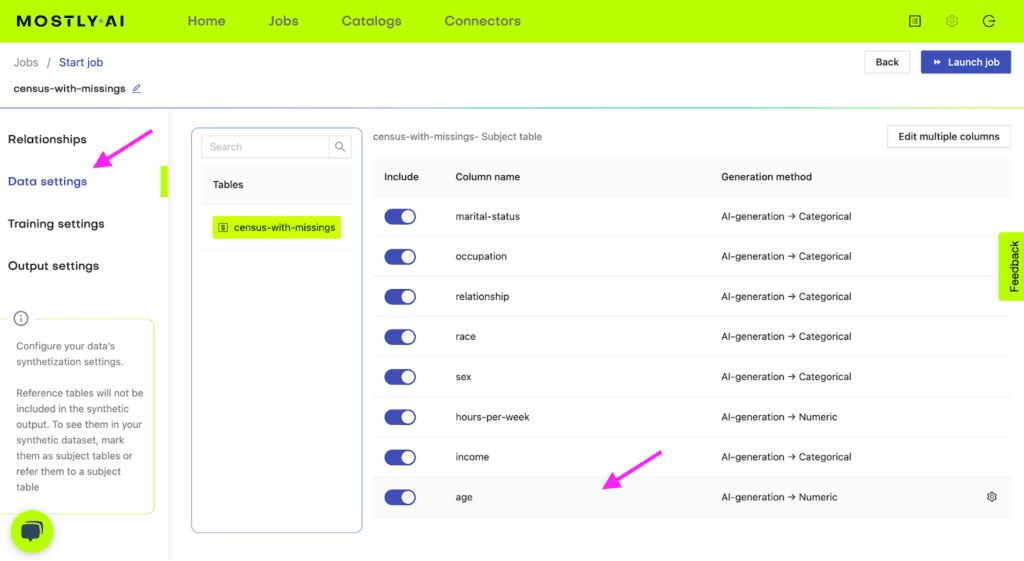

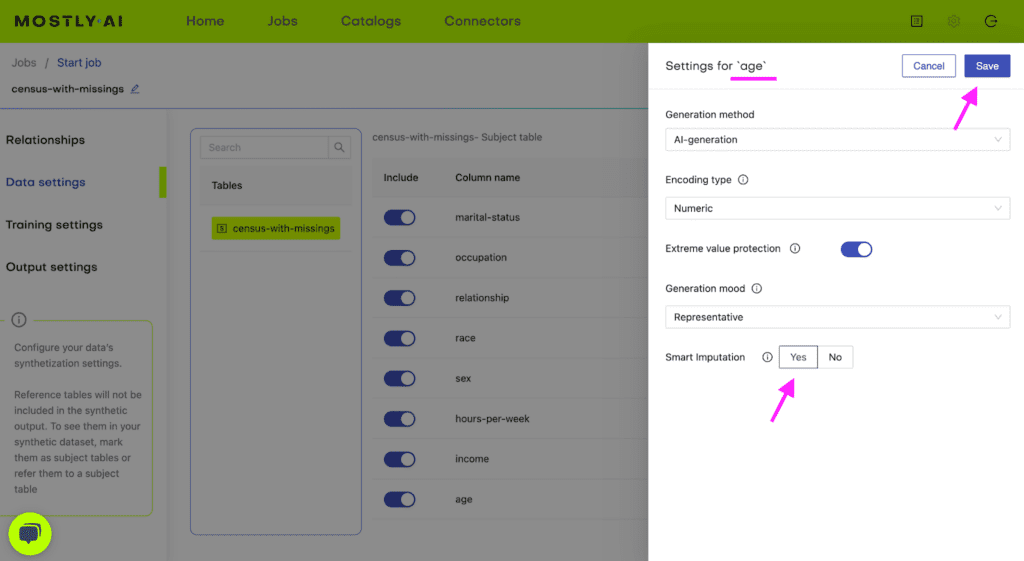

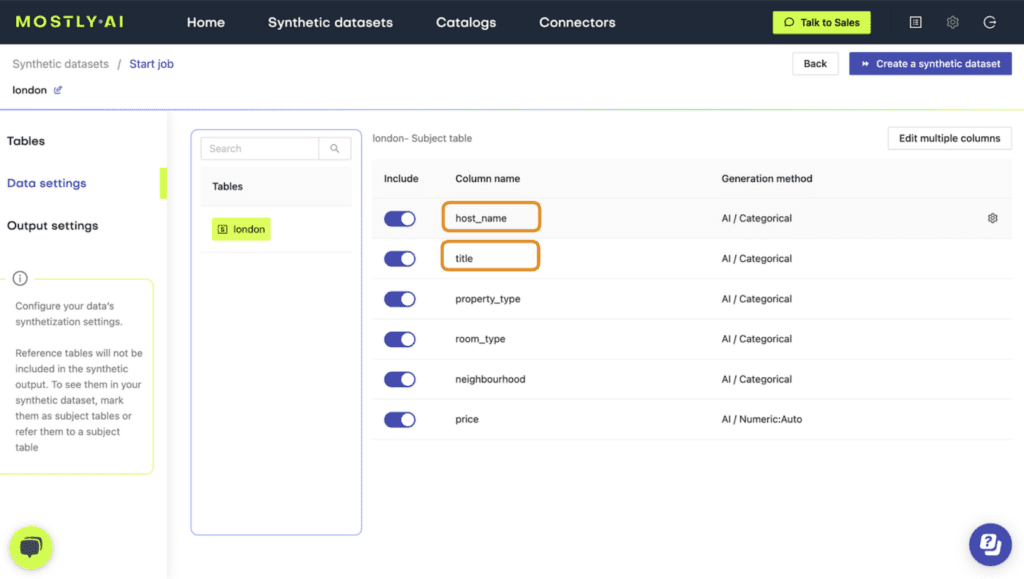

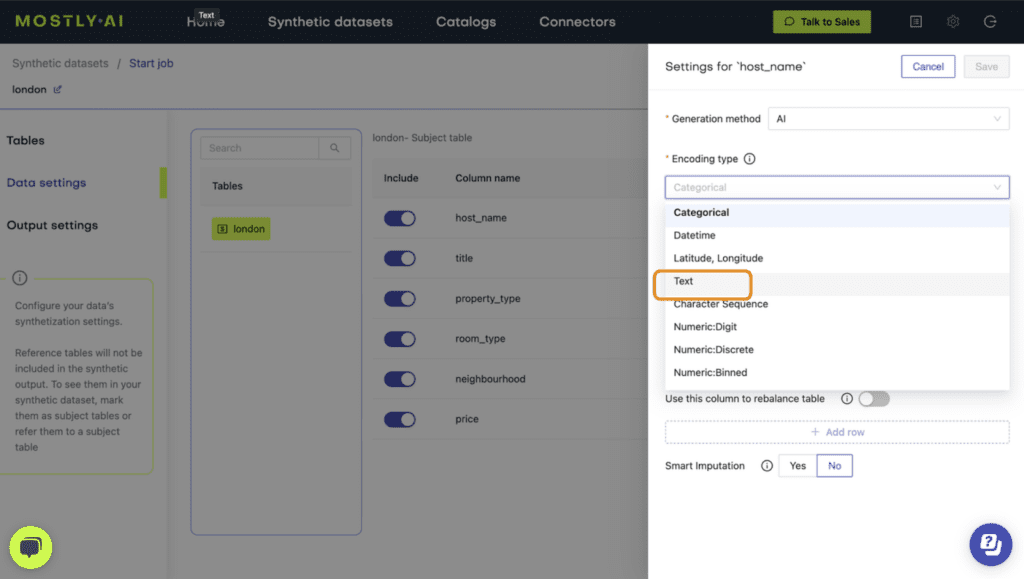

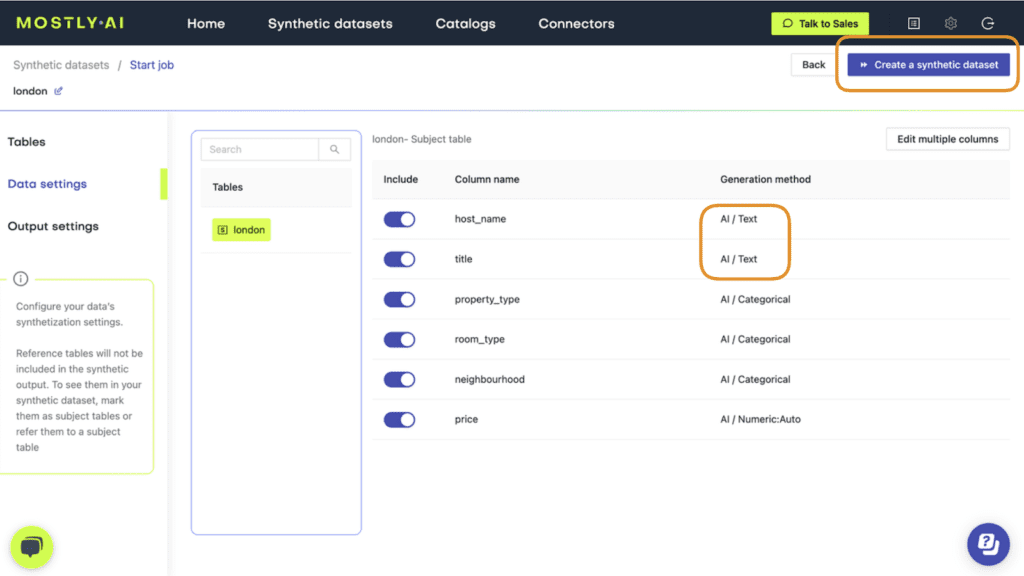

census-with-missings.csvby clicking here and pressing either Ctrl+S or Cmd+S to save the file locally. - Synthesize

census-with-missings.csvvia MOSTLY AI. Leave all settings at their default, but activate the Smart Imputation for the age column.

Fig 1 - Navigate to Data Setting and select the age column.

Fig 2 - Enable the Smart Imputation feature for the age column.

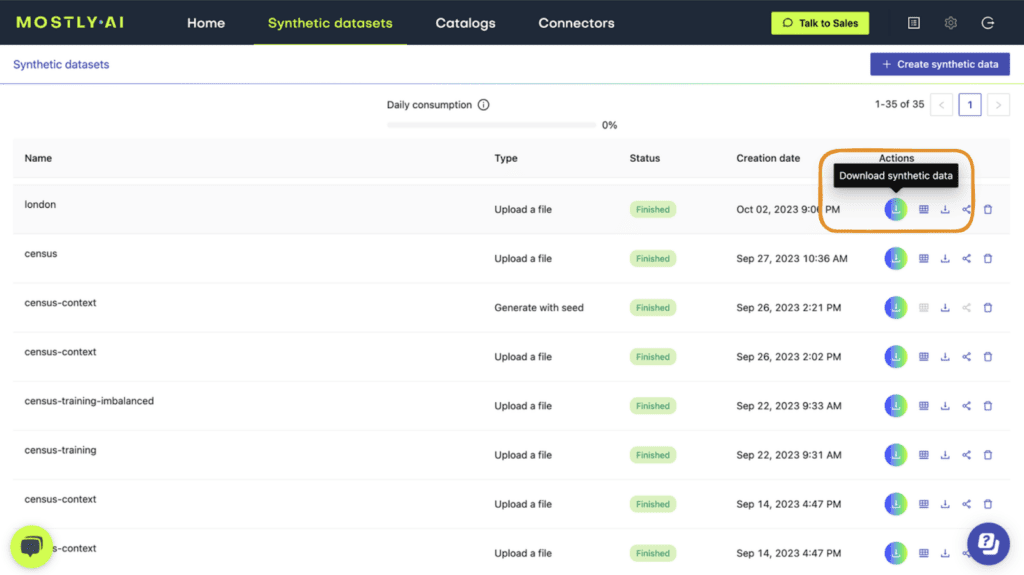

- Once the job has finished, download the generated synthetic data as a CSV file to your computer and rename it to census-synthetic-imputed.csv. Optionally, you can also download a previously synthesized version here.

- Use the following code to upload the synthesized data if you’re running in Google Colab or to access it from disk if you are working locally:

# upload synthetic dataset
import pandas as pd

try:
    # check whether we are in Google colab
    from google.colab import files
    import io

    uploaded = files.upload()
    syn = pd.read_csv(io.BytesIO(list(uploaded.values())[0]))
    print(
        f"uploaded synthetic data with {syn.shape[0]:,} records and {syn.shape[1]:,} attributes"
    )
except Exception:
    # not in Colab (or the upload failed): read the file from `repo` instead
    syn_file_path = f"{repo}/census-synthetic-imputed.csv"
    print(f"read synthetic data from {syn_file_path}")
    syn = pd.read_csv(syn_file_path)
    print(
        f"read synthetic data with {syn.shape[0]:,} records and {syn.shape[1]:,} attributes"
    )

Now that we’ve had an overall look at the distributions of the underlying model and the generated synthetic data, let’s dive a little deeper into the synthetic data you’ve generated.

Like before, let’s sample 10 random records to see if we can spot any missing values:

# show some synthetic samples
syn[["workclass", "education", "marital-status", "age"]].sample(
    n=10, random_state=42
)

There are no missing values for the age column in this random sample. Let’s verify the percentage of missing values for the entire column:

# report share of missing values for column `age`
print(f"{syn['age'].isna().mean():.1%} of values for column `age` are missing")

0.0% of values for column `age` are missing

We can confirm that all the missing values have been imputed and that we no longer have any missing values for the age column in our dataset.

This is great, but only half of the story. Filling the gaps in our missing data is the easy part: we could do this by simply imputing the mean or the median, for example. It’s filling the gaps in a statistically representative manner that is the challenge.

In the next sections, we will inspect the quality of the generated synthetic data to see how well the Smart Imputation feature performs in terms of imputing values that are statistically representative of the actual population.

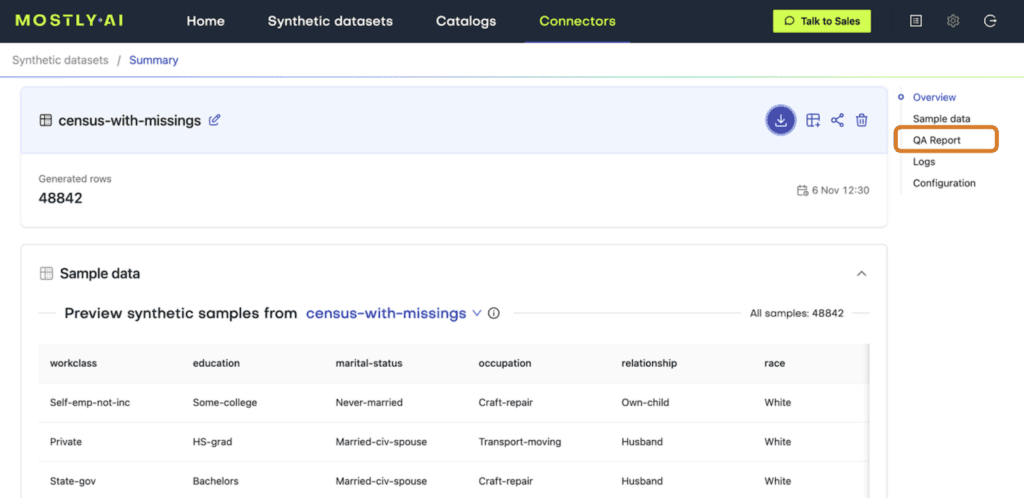

Inspecting the synthetic data quality reports

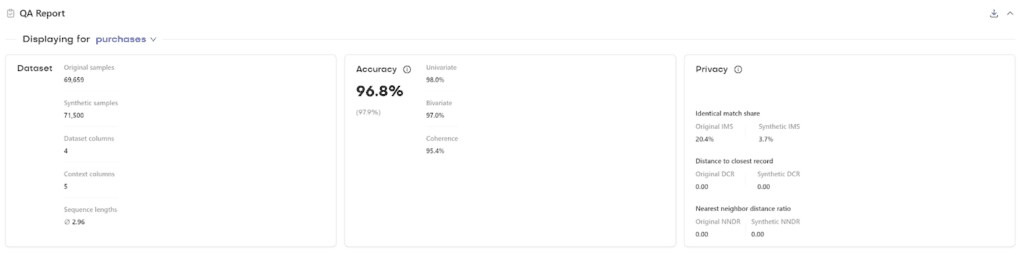

MOSTLY AI provides two Quality Assurance (QA) reports for every synthetic data generation job: a Model QA and a Data QA report. You can access these by clicking on a completed generation job and selecting the Model QA tab.

Fig 3 - Access the QA Reports in your MOSTLY AI account.

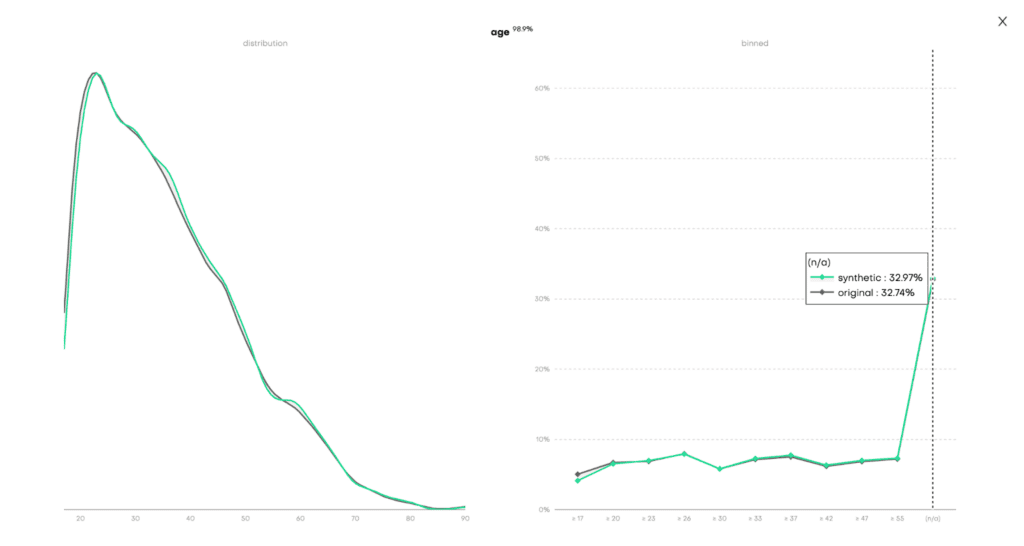

The Model QA reports on the accuracy and privacy of the trained Generative AI model. As you can see, the distributions of the training dataset are faithfully learned and also include the right share of missing values:

Fig 4 - Model QA report for the univariate distribution of the age column.

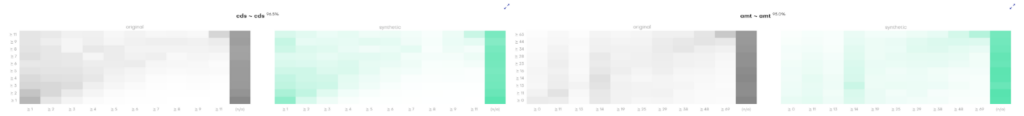

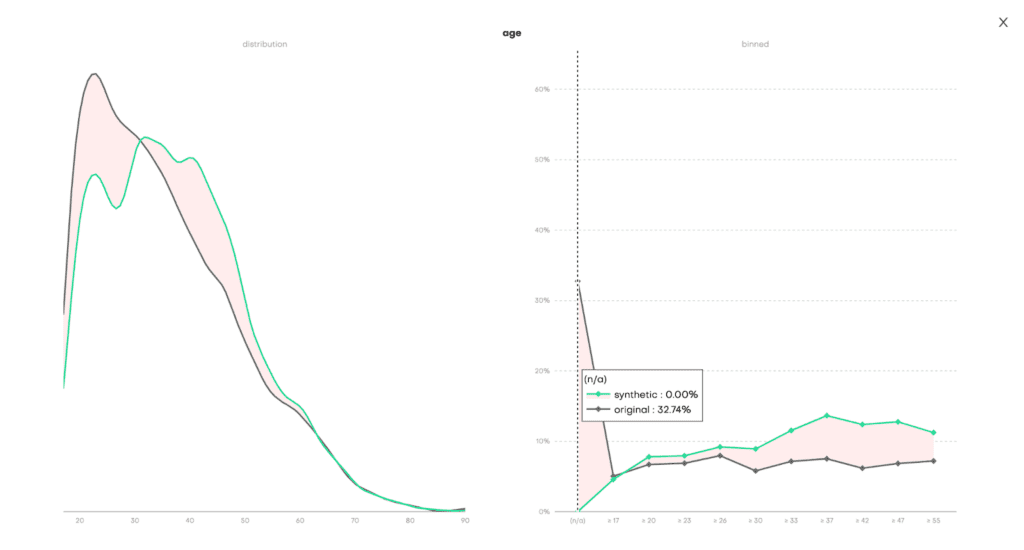

The Data QA, on the other hand, visualizes the distributions not of the underlying model but of the outputted synthetic dataset. Since we enabled the Smart Imputation feature, we expect to see no missing values in our generated dataset. Indeed, here we can see that the share of missing values (N/A) has dropped to 0% in the synthetic dataset (vs. 32.74% in the original) and that the distribution has been shifted towards older age buckets:

Fig 5 - Data QA report for the univariate distribution of the age column.

The QA reports give us a first indication of the quality of our generated synthetic data. But the ultimate test of our synthetic data quality will be to see how well the synthetic distribution of the age column compares to the actual population: the ground truth UCI Adult Income dataset without any missing values.

Let’s plot side-by-side the distributions of the age column for:

- The original training dataset (incl. ~33% missing values),

- The smartly imputed synthetic dataset, and

- The ground truth dataset (before the values were removed).

Use the code below to create this visualization:

raw = pd.read_csv(f"{repo}/census-ground-truth.csv")

# plot side-by-side
import matplotlib.pyplot as plt

tgt.age.plot(kind="kde", label="Original Data (with missings)", color="black")
raw.age.plot(kind="kde", label="Original Data (ground truth)", color="red")
syn.age.plot(kind="kde", label="Synthetic Data (imputed)", color="green")
_ = plt.title("Age Distribution")
_ = plt.legend(loc="upper right")
_ = plt.xlim(13, 90)
_ = plt.ylim(0, None)

As you can see, the smartly imputed synthetic data is able to recover the original, suppressed distribution of the ground truth dataset. As an analyst, you can now proceed with the exploratory and descriptive analysis as if the values were present in the first place.
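The visual comparison can be complemented with a number. One common option is the two-sample Kolmogorov-Smirnov statistic: the largest gap between two empirical cumulative distribution functions, where 0 means identical and 1 means fully separated. This check is not part of the original tutorial. The helper name `ks_statistic` is hypothetical, and the three arrays are simulated stand-ins for `raw.age`, `tgt.age`, and `syn.age`.

```python
import numpy as np


def ks_statistic(a, b):
    """Largest absolute gap between the empirical CDFs of two samples."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # ignore missing values, then sort so searchsorted can count values <= x
    a = np.sort(a[~np.isnan(a)])
    b = np.sort(b[~np.isnan(b)])
    grid = np.concatenate([a, b])  # the maximum gap occurs at a data point
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))


# simulated stand-ins, invented for illustration
rng = np.random.default_rng(1)
ground_truth = rng.normal(40, 13, size=5_000)    # plays the role of raw.age
with_missings = ground_truth[ground_truth < 45]  # older values lost entirely
well_imputed = rng.normal(40, 13, size=5_000)    # plays the role of syn.age

print(f"with missings vs ground truth: {ks_statistic(with_missings, ground_truth):.3f}")
print(f"imputed vs ground truth:       {ks_statistic(well_imputed, ground_truth):.3f}")
```

With the tutorial's DataFrames loaded, the same helper could be called as `ks_statistic(syn.age, raw.age)` and `ks_statistic(tgt.age, raw.age)`; a smaller value for the synthetic data would support what the plot shows.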

Tackling missing data with MOSTLY AI's data imputation feature

In this tutorial, you have learned how to tackle the problem of missing values in your dataset in an efficient and highly accurate way. Using MOSTLY AI’s Smart Imputation feature, you were able to uncover the original distribution of the population. You can now use your smartly imputed synthetic dataset to analyze and reason about the population with high confidence in the quality of both the data and your conclusions.

If you’re interested in learning more about imputation techniques and how the performance of MOSTLY AI’s Smart Imputation feature compares to other imputation methods, check out our smart data imputation article.

You can also head straight to the other synthetic data tutorials:

- Generate synthetic text data

- Explainable AI with synthetic data

- Perform conditional data generation

- Rebalancing your data for ML classification problems

- Optimize your training sample size for synthetic data accuracy

- Build a “fake vs real” ML classifier

As we wrap up 2023, the corporate world is abuzz with the next technological wave: Enterprise AI. Over the past few years, AI has taken center stage in conversations across newsfeeds and boardrooms alike. From the inception of neural networks to the emergence of companies like DeepMind at Google and the proliferation of deepfake videos, AI's influence has been undeniable.

While some are captivated by the potential of these advancements, others approach them with caution, emphasizing the need for clear boundaries and ethical guidelines. Tech visionaries, including Elon Musk, have voiced concerns about AI's ethical complexities and potential dangers when deployed without stringent rules and best practices. Others who have voiced concerns include Timnit Gebru, one of the first people to sound alarms at Google; Dr. Hinton, the godfather of AI; Sam Altman, CEO of OpenAI; and the renowned historian Yuval Harari.

Now, Enterprise AI is knocking on the doors of corporate boardrooms, presenting executives with a familiar challenge: the eternal dilemma of adopting new technology. Adopt too early, and you risk venturing into the unknown, potentially inviting reputational and financial damage. Adopt too late, and you might miss the efficiency and cost-cutting opportunities that Enterprise AI promises.

What is Enterprise AI?

Enterprise AI, also known as Enterprise Artificial Intelligence, refers to the application of artificial intelligence (AI) technologies and techniques within large organizations or enterprises to improve various aspects of their operations, decision-making processes, and customer interactions. It involves leveraging AI tools, machine learning algorithms, natural language processing, and other AI-related technologies to address specific business challenges and enhance overall efficiency, productivity, and competitiveness.

In a world where failing to adapt has led to the downfall of organizations, as witnessed in the recent history of the banking industry with companies like Credit Suisse, the pressure to reduce operational costs and stay competitive is more pressing than ever. Add to this mix the current macroeconomic landscape, with higher-than-ideal inflation and lending rates, and the urgency for companies not to be left behind becomes palpable.

Moreover, many corporate leaders still find themselves grappling with the pros and cons of integrating the previous wave of technologies, such as blockchain. Just as the dust was settling on blockchain's implementation, AI burst into the spotlight. A limited understanding of how technologies like neural networks function, coupled with a general lack of comprehension regarding their potential business applications, has left corporate leaders facing a perfect storm of FOMO (Fear of Missing Out) and FOCICAD (Fear of Causing Irreversible Chaos and Damage).

So, how can industry leaders navigate the world of Enterprise AI without losing their footing and potentially harming their organizations? The answer lies in combining traditional business processes and quality management with cutting-edge auxiliary technologies to mitigate the risks surrounding AI and its outputs. Here are the questions that QuantumBlack, AI by McKinsey, suggests boards ask about generative AI.

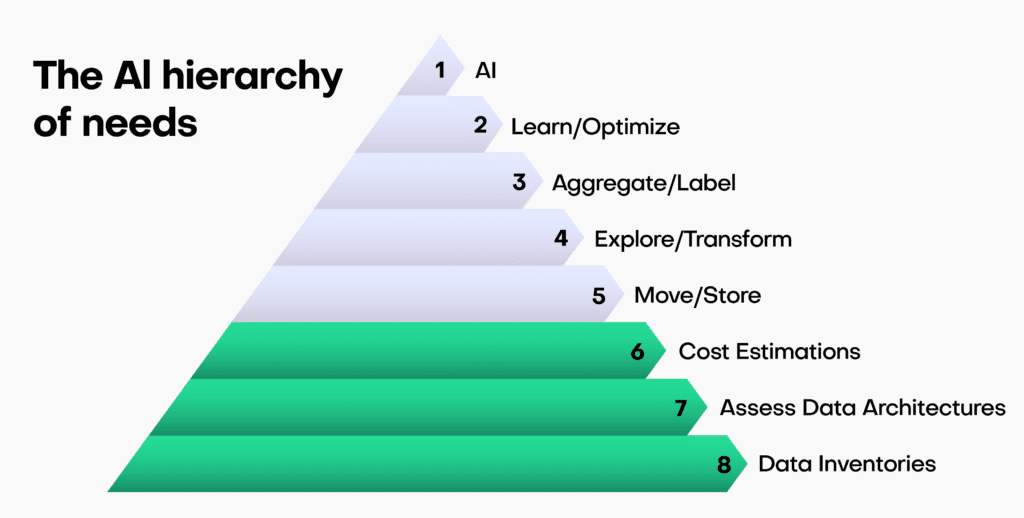

Laying the foundation for Enterprise AI adoption

To embark on a successful Enterprise AI journey, companies need to build a strong foundation. This involves several crucial steps:

- Data Inventories: Conduct thorough data inventories to gain a comprehensive understanding of your organization's data landscape. This step helps identify the type of data available, its quality, and its relevance to AI initiatives.

- Assess Data Architectures: Evaluate your existing data structures and systems to determine their compatibility with AI integration. Consider whether any modifications or updates are necessary to ensure smooth data flow and accessibility.

- Cost Estimation: Calculate the costs associated with adopting AI, including labor for data preparation and model development, technology investments, and change management expenses. This step provides a realistic budget for your AI initiatives.

By following these steps, organizations can lay the groundwork for a successful AI adoption strategy. It helps in avoiding common pitfalls related to data quality and infrastructure readiness.
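As one possible starting point for the data inventory step, the sketch below profiles a single table with pandas, reporting each column's type, share of missing values, and number of distinct values. The function name `inventory` and the example table are hypothetical; a real inventory would span many sources and would also record things like ownership and sensitivity.

```python
import pandas as pd


def inventory(df: pd.DataFrame) -> pd.DataFrame:
    """Summarize type, completeness, and cardinality for each column."""
    return pd.DataFrame(
        {
            "dtype": df.dtypes.astype(str),
            "missing_share": df.isna().mean(),
            "distinct_values": df.nunique(dropna=True),
        }
    )


# hypothetical customer table, invented for illustration
customers = pd.DataFrame(
    {
        "customer_id": [1, 2, 3, 4],
        "segment": ["retail", "retail", None, "business"],
        "balance": [1200.5, None, 310.0, 98000.0],
    }
)

print(inventory(customers))
```

A summary like this gives a quick view of data types and quality per column, which can feed into the architecture assessment and cost estimation steps.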

Leveraging auxiliary technologies in Enterprise AI

In a recent survey by a large telecommunications company, half of all respondents said they wait up to one month for privacy approvals before they can proceed with their data processing and analytics activities. Data Processing Agreements (DPAs), Secure by Design processes, and further approvals are the main reasons behind these high lead times.

The demand for quicker, more accessible, and statistically representative data makes the case that real and mock data just aren't good enough to meet these (somewhat basic) requirements.

On the other side, however, The Wall Street Journal has recently reported that big tech companies such as Microsoft, Google, and Adobe are struggling to make AI technology profitable as they attempt to integrate it into their existing products.

The dichotomy we see here can put decision-makers into a state of paralysis: the need to act is imminent, but the price of poor action is high. Trustworthy and competent guidance, along with a sound strategy, is the only way out of the AI rabbit hole and towards AI-based solutions that can be monetized and thereby target and alleviate corporate pain points.

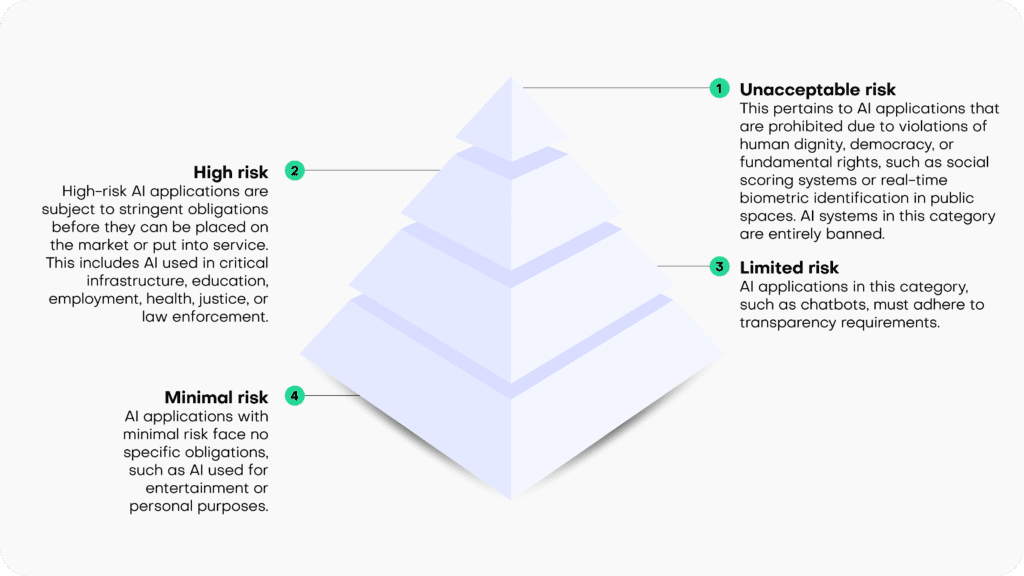

One of the key strategies to mitigate the risks associated with AI adoption is to leverage auxiliary technologies. These technologies act as force multipliers, enhancing the efficiency and safety of AI implementations. Recently, European lawmakers specifically included synthetic data in the draft of the EU's upcoming AI Act, as a data type explicitly suitable for building AI systems.

In this context, MOSTLY AI's Synthetic Data Platform emerges as a powerful ally. This innovative platform offers synthetic data generation capabilities that can significantly aid in AI development and deployment. Here's how it can benefit your organization:

- Enhancing Data Privacy: Synthetic data allows organizations to work with data that resembles their real data but contains no personally identifiable information (PII). This ensures compliance with data privacy regulations, such as GDPR and HIPAA.

- Reducing Data Bias: The platform can generate synthetic data that is rebalanced to reduce biases present in real data. This helps in building fairer AI models and reduces the risk of discrimination.

- Accelerating AI Development: Synthetic data accelerates AI development by providing a diverse dataset that can be used for training and testing models. It reduces the time and effort required to collect and clean real data.

- Testing AI Systems Safely: Organizations can use synthetic data to simulate various scenarios and test AI systems without exposing sensitive or confidential information.

- Cost Efficiency: Synthetic data reduces the need to invest in expensive data collection and storage processes, making AI adoption more cost-effective.

By incorporating MOSTLY AI's Synthetic Data Platform into your AI strategy, you can significantly reduce the complexities and uncertainties associated with data privacy, bias, and development timelines.

Enterprise AI example: ChatGPT's Code Interpreter

To illustrate the practical application of auxiliary technologies, let's consider a concrete example: ChatGPT's Code Interpreter in conjunction with MOSTLY AI’s Synthetic Data Platform. Used together, the two tools help companies harness the power of AI while maintaining safety and compliance. Business teams can feed statistically meaningful synthetic data into their corporate ChatGPT instead of real corporate data and thereby meet both data accuracy and privacy objectives.

Defining guidelines and best practices for Enterprise AI

Before diving into Enterprise AI implementation, it's essential to set clear guidelines and best practices. This involves:

- Scope and Planning Strategy: Define the scope of your AI implementation, aligning it with your organization's strategic objectives. Create a comprehensive plan that outlines the steps, timelines, and resources required for a successful AI deployment.

Embracing auxiliary technologies

In the context of auxiliary technologies, MOSTLY AI's Synthetic Data Platform is an invaluable resource. This platform provides organizations with the ability to generate synthetic data that closely mimics their real data, without compromising privacy or security.

Insight: The combination of setting clear guidelines and leveraging auxiliary technologies like MOSTLY AI's Synthetic Data Platform ensures a smoother and safer AI journey for organizations, where innovation can thrive without fear of adverse consequences.

The transformative force of Enterprise AI

In summary, Enterprise AI is no longer a distant concept but a transformative force reshaping the corporate landscape. The challenges it presents are real, as are the opportunities.

We've explored the delicate balance executives must strike when considering AI adoption, the ethical concerns that underscore this technology, and a structured approach to navigate these challenges. Auxiliary technologies like MOSTLY AI's Synthetic Data Platform serve as indispensable tools, allowing organizations to harness the full potential of AI while safeguarding against risks.

As you embark on your Enterprise AI journey, remember that the right tools and strategies can make all the difference. Explore MOSTLY AI's Synthetic Data Platform to discover how it can enhance your AI initiatives and keep your organization on the path to success. With a solid foundation and the right auxiliary technologies, the future of Enterprise AI holds boundless possibilities.

If you would like to know more about synthetic data, we suggest trying MOSTLY AI's free synthetic data generator with one of the sample datasets provided within the app, or reaching out to us for a personalized demo!

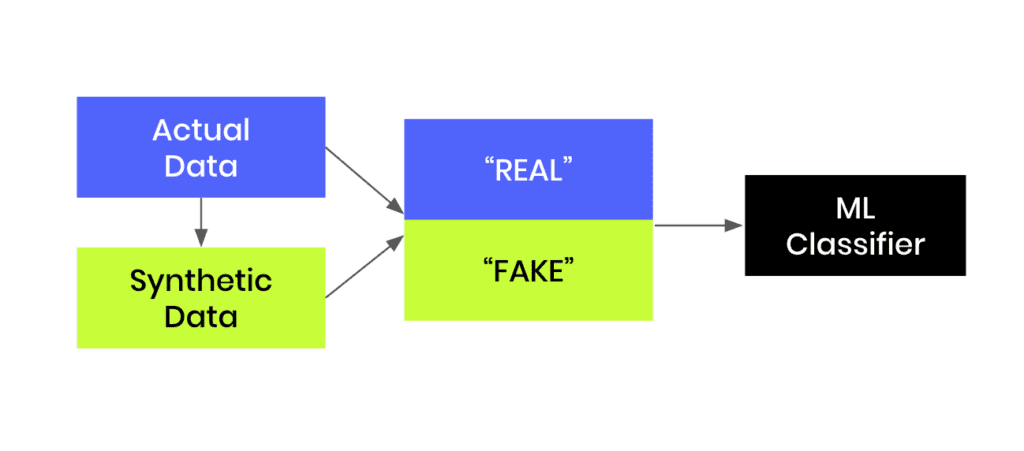

In this tutorial, you will learn how to build a machine-learning model that is trained to distinguish between synthetic (fake) and real data records. This can be a helpful tool when you are given a hybrid dataset containing both real and fake records and want to be able to distinguish between them. Moreover, this model can serve as a quality evaluation tool for any synthetic data you generate. The higher the quality of your synthetic data records, the harder it will be for your ML discriminator to tell these fake records apart from the real ones.

You will be working with the UCI Adult Income dataset. The first step will be to synthesize the original dataset. We will start by intentionally creating synthetic data of lower quality in order to make it easier for our “Fake vs. Real” ML classifier to detect a signal and tell the two apart. We will then compare this against a synthetic dataset generated using MOSTLY AI's default high-quality settings to see whether the ML model can tell the fake records apart from the real ones.

The Python code for this tutorial is publicly available and runnable in this Google Colab notebook.

Fig 1 - Generate synthetic data and join this to the original dataset in order to train an ML classifier.

Create synthetic training data

Let’s start by creating our synthetic data:

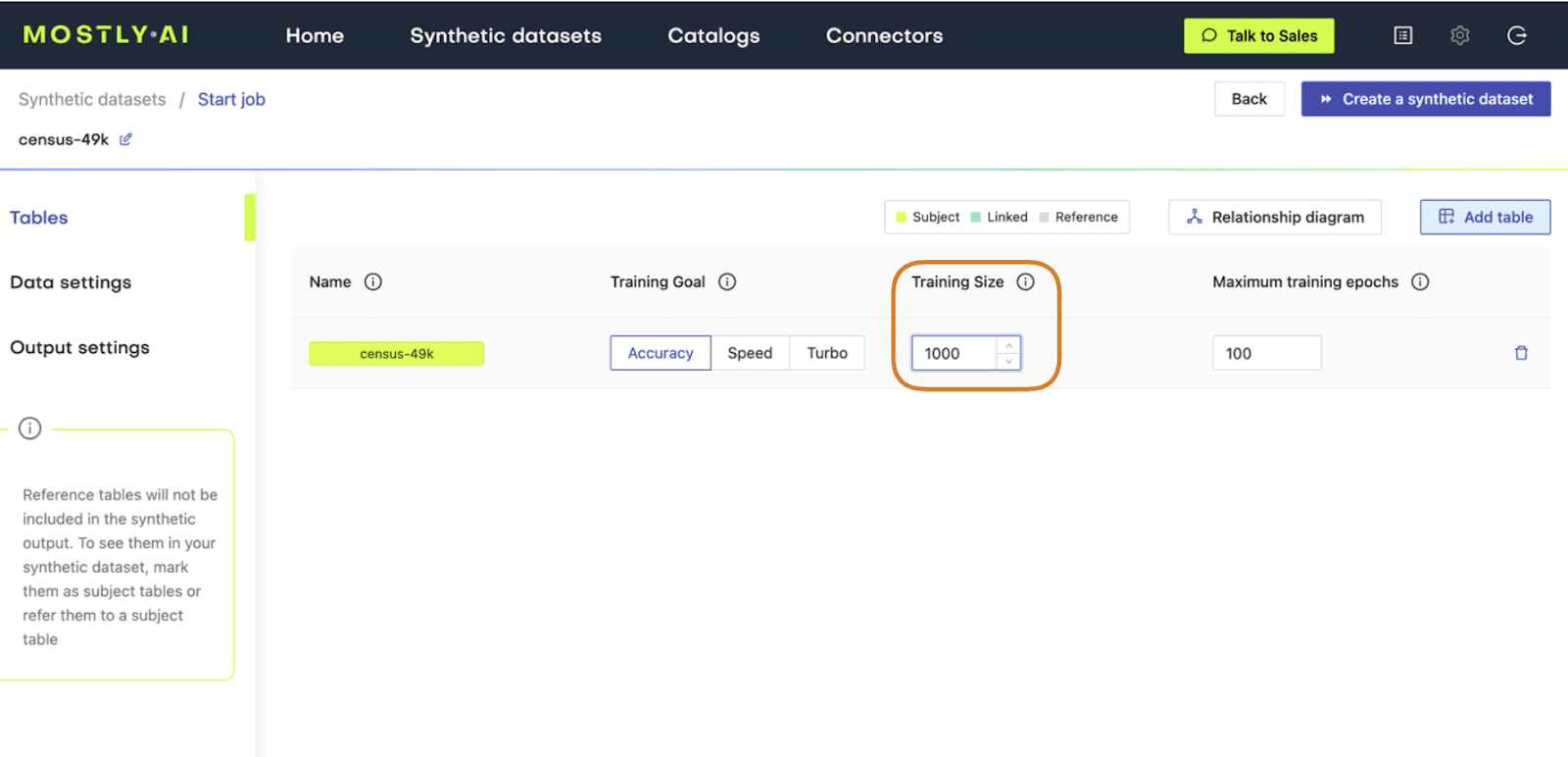

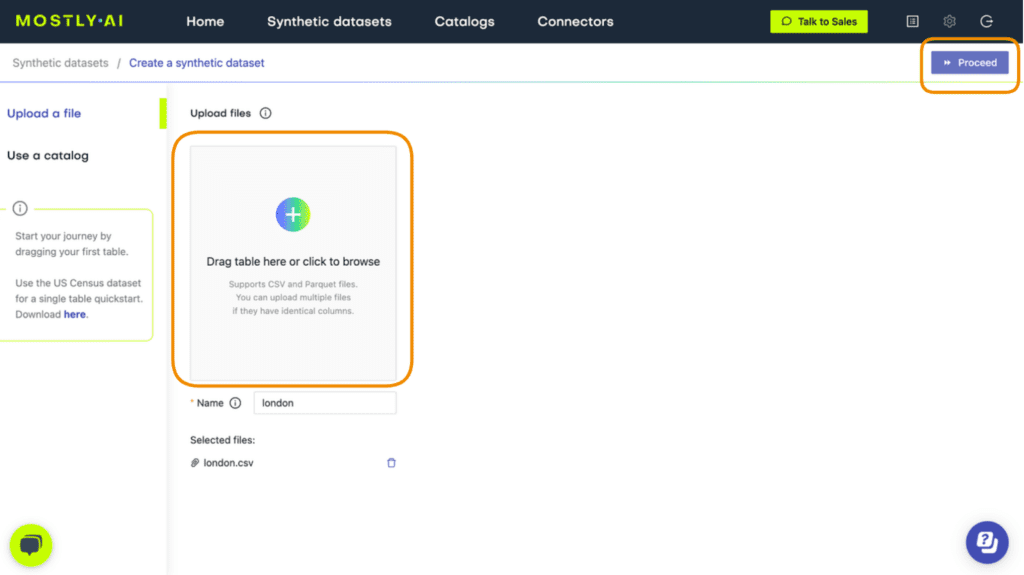

- Download the original dataset here. Depending on your operating system, use either Ctrl+S or Cmd+S to save the file locally.

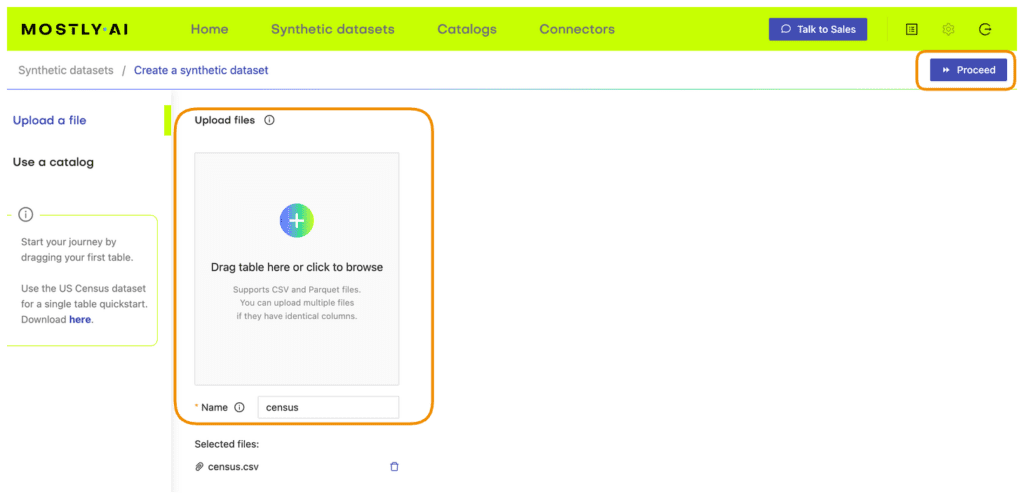

- Go to your MOSTLY AI account and navigate to “Synthetic Datasets”. Upload the CSV file you downloaded in the previous step and click “Proceed”.

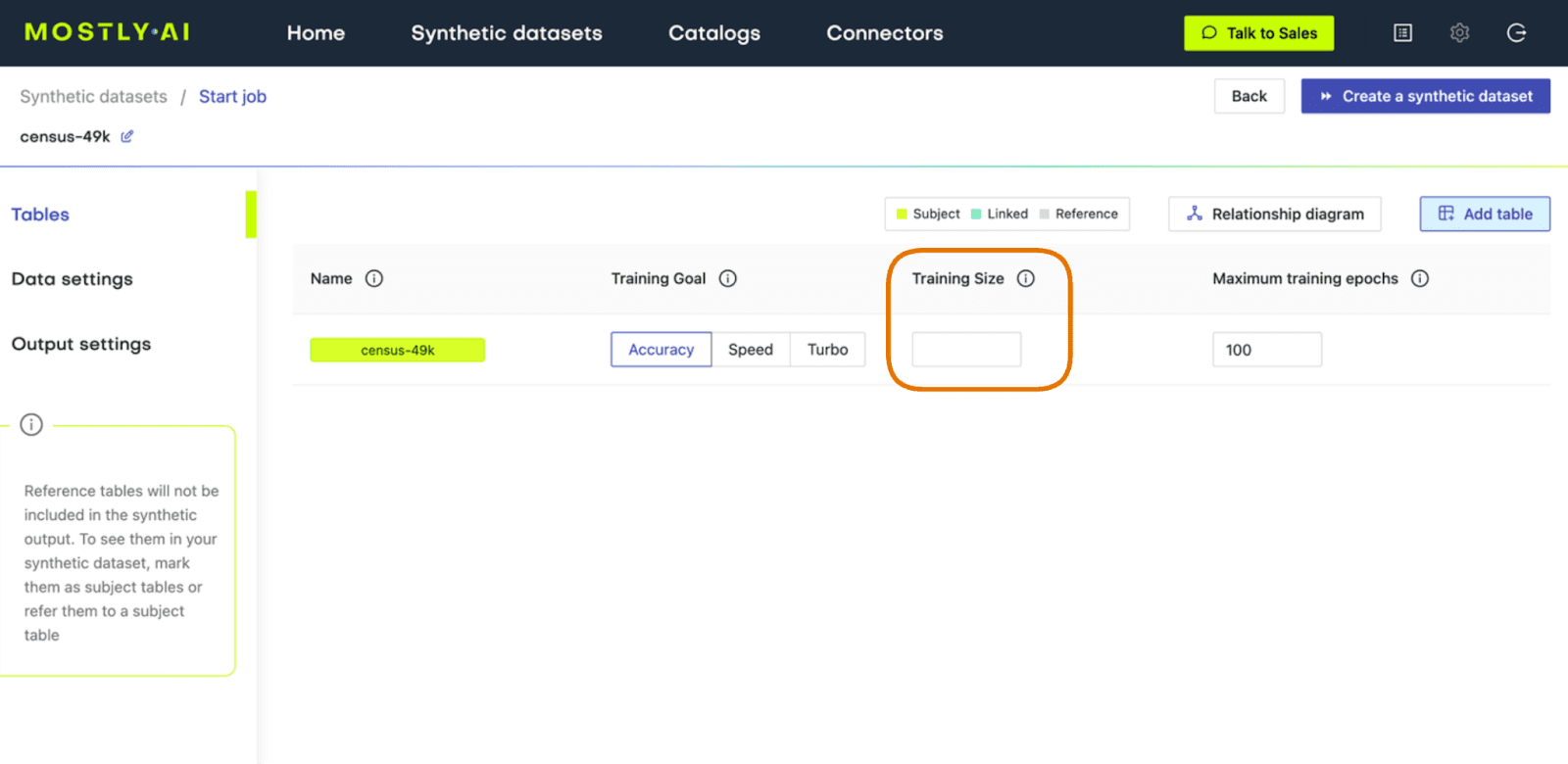

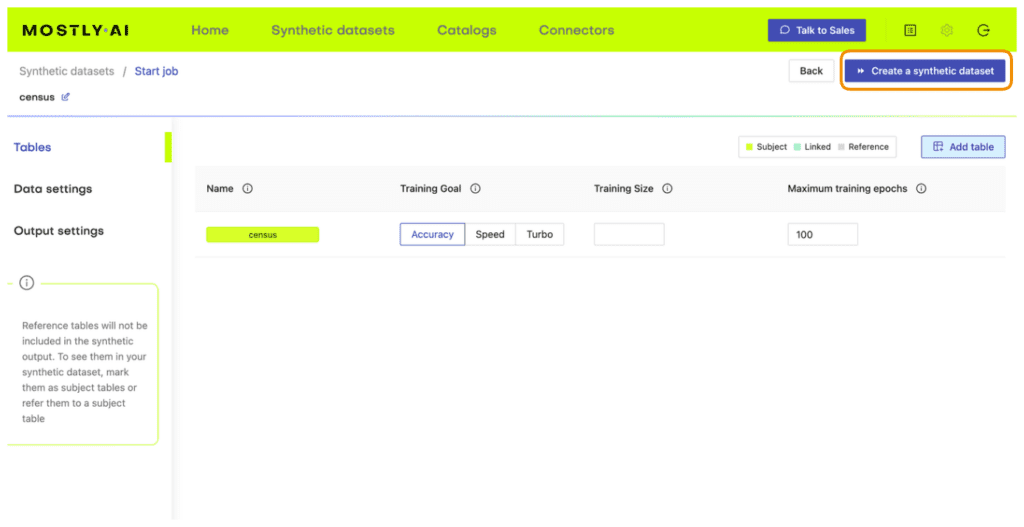

- Set the Training Size to 1000. This will intentionally lower the quality of the resulting synthetic data. Click “Create a synthetic dataset” to launch the job.

Fig 2 - Set the Training Size to 1000.

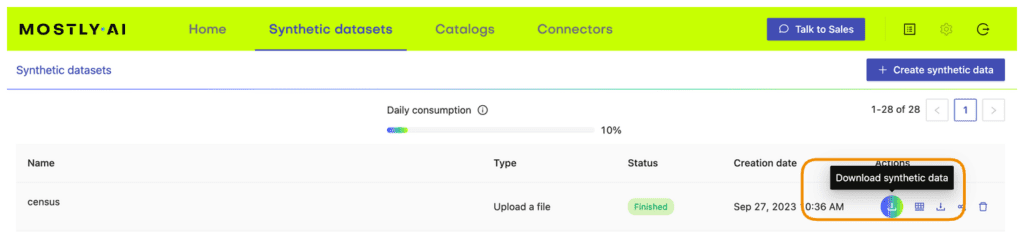

- Once the synthetic data is ready, download it to disk as CSV and use the following code to upload it if you’re running in Google Colab or to access it from disk if you are working locally:

# upload synthetic dataset
import io

import pandas as pd

try:
    # check whether we are in Google Colab
    from google.colab import files

    print("running in COLAB mode")
    repo = "https://github.com/mostly-ai/mostly-tutorials/raw/dev/fake-or-real"
    uploaded = files.upload()
    syn = pd.read_csv(io.BytesIO(list(uploaded.values())[0]))
    print(
        f"uploaded synthetic data with {syn.shape[0]:,} records"
        f" and {syn.shape[1]:,} attributes"
    )
except ImportError:
    # google.colab is not available, so read the file from local disk instead
    print("running in LOCAL mode")
    repo = "."
    print("adapt `syn_file_path` to point to your generated synthetic data file")
    syn_file_path = "./census-synthetic-1k.csv"
    syn = pd.read_csv(syn_file_path)
    print(
        f"read synthetic data with {syn.shape[0]:,} records"
        f" and {syn.shape[1]:,} attributes"
    )

Train your “fake vs real” ML classifier

Now that we have our low-quality synthetic data, let’s use it together with the original dataset to train a LightGBM classifier.

The first step will be to concatenate the original and synthetic datasets together into one large dataset. We will also create a split column to label the records: the original records will be labeled as REAL and the synthetic records as FAKE.

# concatenate FAKE and REAL data together
tgt = pd.read_csv(f"{repo}/census-49k.csv")
df = pd.concat(
    [
        tgt.assign(split="REAL"),
        syn.assign(split="FAKE"),
    ],
    axis=0,
)

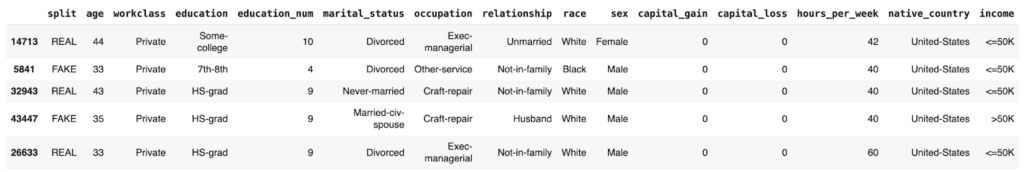

df.insert(0, "split", df.pop("split"))

Sample some records to take a look at the complete dataset:

df.sample(n=5)

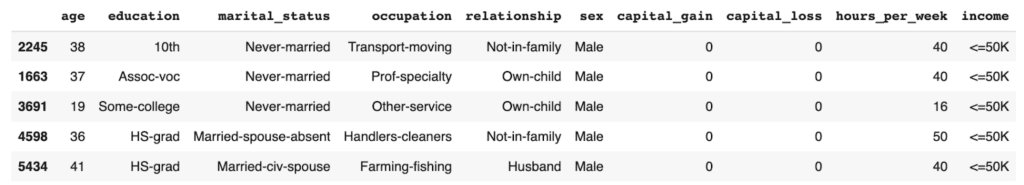

We see that the dataset contains both REAL and FAKE records.

By grouping by the split column and verifying the size, we can confirm that we have an even split of synthetic and original records:

df.groupby('split').size()

split
FAKE    48842
REAL    48842
dtype: int64

The next step will be to train your LightGBM model on this complete dataset. The following code contains two helper functions to preprocess the data and train your model:

import lightgbm as lgb
from lightgbm import early_stopping
from sklearn.model_selection import train_test_split


def prepare_xy(df, target_col, target_val):
    """Split `df` into features X and a binary target y (1 where target_col == target_val)."""
    # split target variable `y`
    y = (df[target_col] == target_val).astype(int)
    # convert strings to categoricals, and all others to floats
    str_cols = [
        col
        for col in df.select_dtypes(["object", "string"]).columns
        if col != target_col
    ]
    for col in str_cols:
        df[col] = pd.Categorical(df[col])
    cat_cols = [
        col
        for col in df.select_dtypes("category").columns
        if col != target_col
    ]
    num_cols = [
        col for col in df.select_dtypes("number").columns if col != target_col
    ]
    for col in num_cols:
        df[col] = df[col].astype("float")
    X = df[cat_cols + num_cols]
    return X, y


def train_model(X, y):
    """Train a binary LightGBM classifier with early stopping on a 20% validation split."""
    cat_cols = list(X.select_dtypes("category").columns)
    X_trn, X_val, y_trn, y_val = train_test_split(
        X, y, test_size=0.2, random_state=1
    )
    ds_trn = lgb.Dataset(
        X_trn, label=y_trn, categorical_feature=cat_cols, free_raw_data=False
    )
    ds_val = lgb.Dataset(
        X_val, label=y_val, categorical_feature=cat_cols, free_raw_data=False
    )
    model = lgb.train(
        params={"verbose": -1, "metric": "auc", "objective": "binary"},
        train_set=ds_trn,
        valid_sets=[ds_val],
        callbacks=[early_stopping(5)],
    )
    return model

Before training, make sure to set aside a holdout dataset for evaluation. Let’s reserve 20% of the records for this:

trn, hol = train_test_split(df, test_size=0.2, random_state=1)

Now train your LightGBM classifier on the remaining 80% of the combined original and synthetic data:

X_trn, y_trn = prepare_xy(trn, 'split', 'FAKE')
model = train_model(X_trn, y_trn)

Training until validation scores don't improve for 5 rounds
Early stopping, best iteration is:
[30] valid_0's auc: 0.594648

Next, score the model’s performance on the holdout dataset. We will include the model’s predicted probability for each record. A score of 1.0 indicates that the model is fully certain that the record is FAKE. A score of 0.0 means the model is certain the record is REAL.
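The scoring code itself is not shown in the text. The sketch below is one way the step might look, assuming the `prepare_xy` helper and the trained `model` from above. The names `score_holdout`, `StubModel`, and `stub_prepare_xy` are hypothetical and exist only so that the example runs on its own.

```python
import pandas as pd


def score_holdout(hol, model, prepare_xy, target_col="split", target_val="FAKE"):
    """Attach the model's probability of being FAKE to each holdout record.

    `model` is anything with a `.predict(X)` method that returns one
    probability per row; `prepare_xy` is the preprocessing helper.
    """
    hol = hol.copy()  # work on a copy so the caller's frame is not modified
    X_hol, y_hol = prepare_xy(hol, target_col, target_val)
    hol["is_fake"] = model.predict(X_hol)
    return hol, y_hol


# --- tiny stand-ins so the sketch is runnable (hypothetical, not the tutorial's objects) ---
class StubModel:
    def predict(self, X):
        # pretend that unusually high `hours` values look synthetic
        return [0.8 if h > 60 else 0.1 for h in X["hours"]]


def stub_prepare_xy(df, target_col, target_val):
    y = (df[target_col] == target_val).astype(int)
    return df.drop(columns=[target_col]), y


demo = pd.DataFrame({"split": ["REAL", "FAKE", "REAL"], "hours": [40, 75, 38]})
scored, y_demo = score_holdout(demo, StubModel(), stub_prepare_xy)
print(scored)
```

With the tutorial's own objects, the call would presumably be `hol, y_hol = score_holdout(hol, model, prepare_xy)`, which provides the `is_fake` column and the `y_hol` labels used in the evaluation code further down.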

Let’s sample some random records to take a look:

hol.sample(n=5)

We see that the model assigns varying levels of probability to the REAL and FAKE records. In some cases it is not able to predict with much confidence (scores around 0.5) and in others it is quite confident and also correct: see the 0.0727 for a REAL record and 0.8006 for a FAKE record.

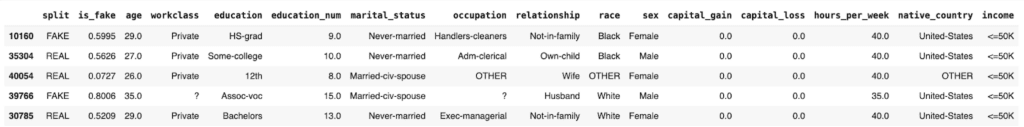

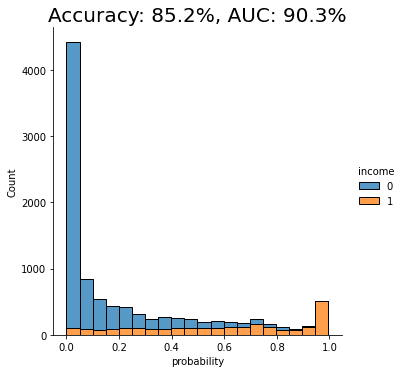

Let’s visualize the model’s overall performance by calculating the AUC and Accuracy scores and plotting the probability scores:

import seaborn as sns
import matplotlib.pyplot as plt
from sklearn.metrics import roc_auc_score, accuracy_score

auc = roc_auc_score(y_hol, hol.is_fake)
acc = accuracy_score(y_hol, (hol.is_fake > 0.5).astype(int))
probs_df = pd.concat(
    [
        pd.Series(hol.is_fake, name="probability").reset_index(drop=True),
        pd.Series(y_hol, name="target").reset_index(drop=True),
    ],
    axis=1,
)
fig = sns.displot(
    data=probs_df, x="probability", hue="target", bins=20, multiple="stack"
)
plt.title(f"Accuracy: {acc:.1%}, AUC: {auc:.1%}")
plt.show()

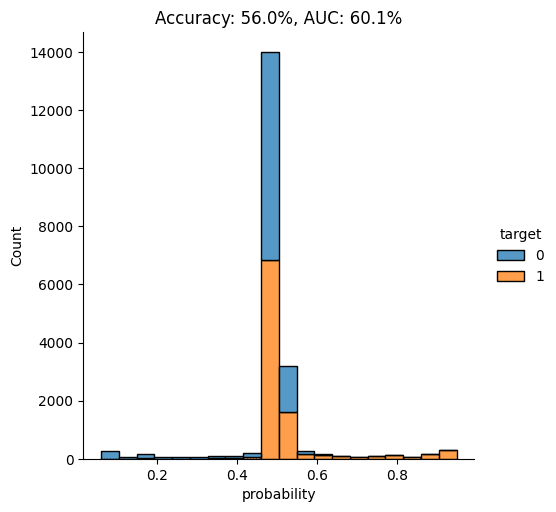

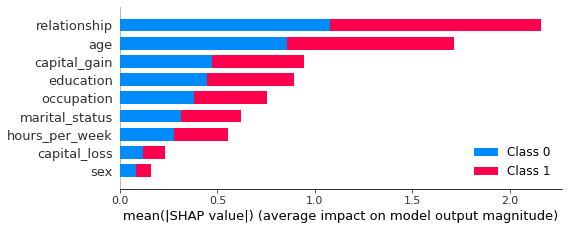

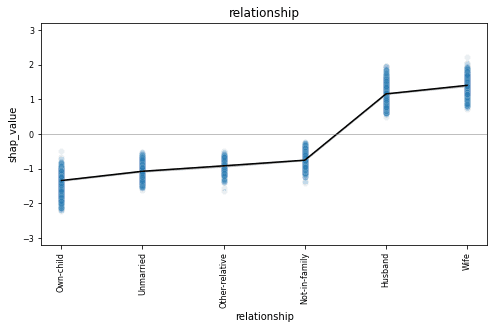

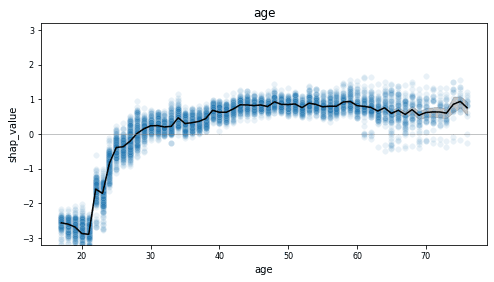

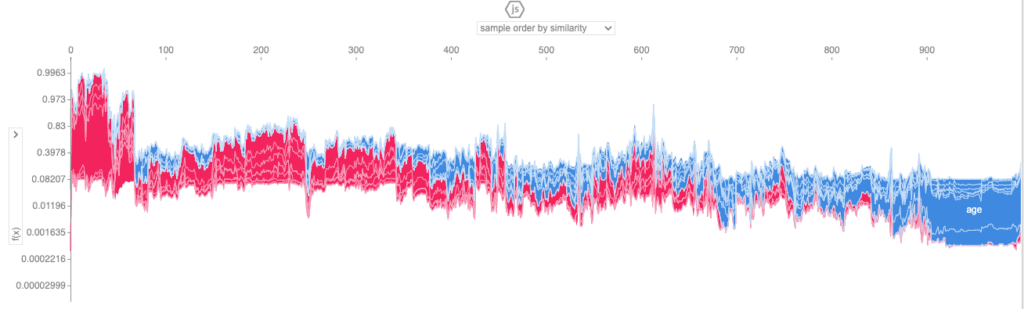

As the chart above shows, the discriminator has learned to pick up some signals that allow it to determine, with varying levels of confidence, whether a record is FAKE or REAL. The AUC can be interpreted as the percentage of cases in which the discriminator correctly identifies the FAKE record when presented with a pair consisting of one FAKE and one REAL record.
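The pairwise reading of AUC can be checked by hand on a few scores. The following is a minimal sketch in plain Python; the function name `pairwise_auc` and the probabilities are made up for illustration and are not taken from the tutorial's results.

```python
from itertools import product


def pairwise_auc(fake_scores, real_scores):
    """Share of (FAKE, REAL) pairs in which the FAKE record gets the higher score.

    Ties count as half a win, which matches the usual definition of ROC AUC.
    """
    wins = 0.0
    for f, r in product(fake_scores, real_scores):
        if f > r:
            wins += 1.0
        elif f == r:
            wins += 0.5
    return wins / (len(fake_scores) * len(real_scores))


# made-up probabilities of being FAKE, for illustration only
fake_scores = [0.80, 0.55, 0.47]
real_scores = [0.07, 0.50, 0.62]

print(f"pairwise AUC: {pairwise_auc(fake_scores, real_scores):.3f}")
```

Here the FAKE record scores higher in 6 of the 9 possible pairs, so the AUC is about 0.667. On the full holdout set, `roc_auc_score(y_hol, hol.is_fake)` computes the same quantity far more efficiently.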

Let’s dig a little deeper by looking specifically at records that seem very fake and records that seem very real. This will give us a better understanding of the type of signals the model is learning.

Go ahead and sample some random records which the model has assigned a particularly high probability of being FAKE:

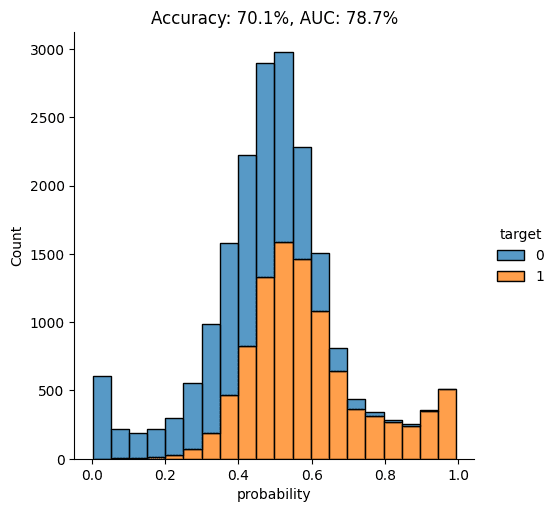

hol.sort_values('is_fake').tail(n=100).sample(n=5)

In these cases, it seems to be the mismatch between the education and education_num columns that gives away the fact that these are synthetic records. In the original data, these two columns have a 1:1 mapping of numerical to textual values. For example, the education value Some-college is always mapped to the numerical education_num value 10.0. In this poor-quality synthetic data, we see that there are multiple numerical values for the Some-college value, thereby giving away the fact that these records are fake.
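A mismatch like this can also be detected programmatically rather than by eye. The sketch below uses plain Python and a handful of made-up rows. Only the column names `education` and `education_num` come from the dataset, and `mapping_violations` is a hypothetical helper name.

```python
from collections import defaultdict


def mapping_violations(records, key="education", value="education_num"):
    """Return {key_value: sorted distinct values} for keys that map to more than one value."""
    seen = defaultdict(set)
    for rec in records:
        seen[rec[key]].add(rec[value])
    return {k: sorted(v) for k, v in seen.items() if len(v) > 1}


# made-up rows for illustration only
real_rows = [
    {"education": "Some-college", "education_num": 10.0},
    {"education": "Bachelors", "education_num": 13.0},
    {"education": "Some-college", "education_num": 10.0},
]
fake_rows = [
    {"education": "Some-college", "education_num": 10.0},
    {"education": "Some-college", "education_num": 9.0},
    {"education": "Bachelors", "education_num": 13.0},
]

print(mapping_violations(real_rows))  # empty: every level maps to one number
print(mapping_violations(fake_rows))  # Some-college maps to two numbers
```

On the combined DataFrame, a pandas equivalent would be `df[df.split == "FAKE"].groupby("education")["education_num"].nunique()`, where any count above 1 indicates a broken mapping.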

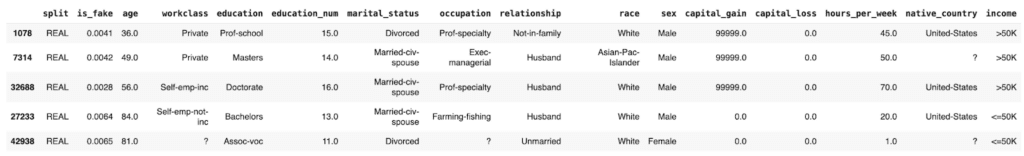

Now let’s take a closer look at records which the model is especially certain are REAL:

hol.sort_values('is_fake').head(n=100).sample(n=5)

These “obviously real” records are types of records that the synthesizer has apparently failed to create. Because they are absent from the synthetic data, the discriminator recognizes them as REAL.
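One possible way to probe this explanation is to list value combinations that occur in the original data but never in the synthetic data. The following is a rough sketch under that assumption. The helper name `uncovered_combinations`, the choice of columns, and the rows are all made up for illustration.

```python
def uncovered_combinations(real_rows, fake_rows, columns):
    """Value combinations that occur in the real rows but never in the synthetic rows."""
    real_combos = {tuple(row[c] for c in columns) for row in real_rows}
    fake_combos = {tuple(row[c] for c in columns) for row in fake_rows}
    return sorted(real_combos - fake_combos)


# made-up rows for illustration only
real_rows = [
    {"workclass": "Private", "occupation": "Sales"},
    {"workclass": "Private", "occupation": "Tech-support"},
    {"workclass": "Never-worked", "occupation": "?"},
]
fake_rows = [
    {"workclass": "Private", "occupation": "Sales"},
    {"workclass": "Private", "occupation": "Tech-support"},
]
columns = ["workclass", "occupation"]

print(uncovered_combinations(real_rows, fake_rows, columns))
```

Combinations returned by such a check are candidates for the rare record types that a synthesizer trained on only 1,000 records may not have learned to reproduce.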

Generate high-quality synthetic data with MOSTLY AI

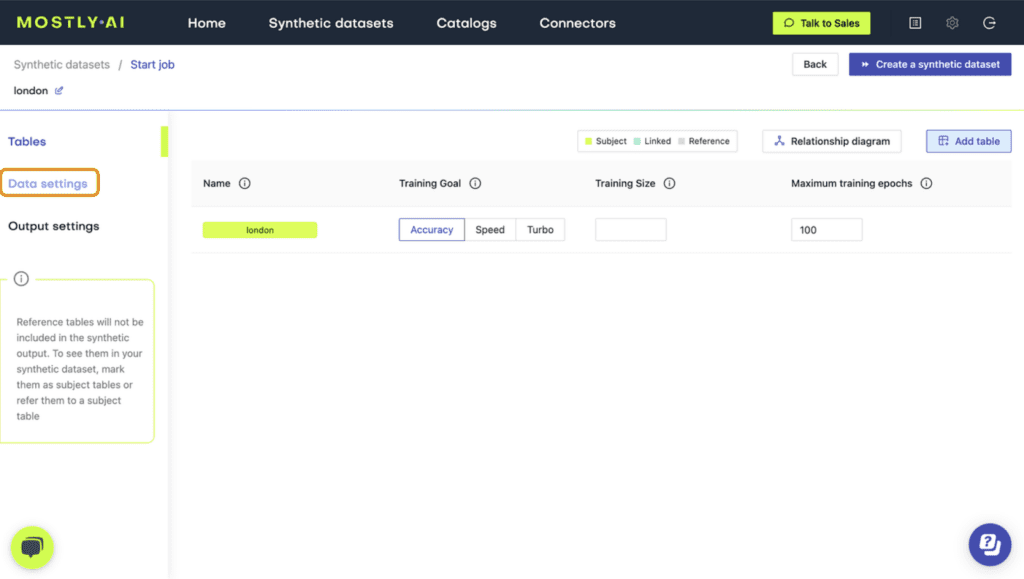

Now, let’s synthesize the original dataset again, this time using MOSTLY AI’s default settings for high-quality synthetic data. Follow the same steps as before, but leave the Training Size field blank. This uses all the records for model training, which yields the highest-quality synthetic data.

Fig 3 - Leave the Training Size blank to train on all available records.

Once the job has completed, download the high-quality data as CSV and then upload it to wherever you are running your code.

Make sure that the syn variable now contains the new, high-quality synthesized data. Then re-run the code you ran earlier to concatenate the synthetic and original data together, train a new LightGBM model on the complete dataset, and evaluate its ability to tell the REAL records from FAKE.

Again, let’s visualize the model’s performance by calculating the AUC and Accuracy scores and by plotting the probability scores:

This time, we see that the model’s performance has dropped significantly. The model is not really able to pick up any meaningful signal from the combined data and assigns the largest share of records a probability around the 0.5 mark, which is essentially the equivalent of flipping a coin.
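To make the coin-flip comparison concrete, the sketch below scores a balanced set of labels with random numbers that carry no information and measures accuracy at the 0.5 threshold. It is an illustration in plain Python with simulated values, not output from the tutorial, and `accuracy_at_threshold` is a hypothetical helper name.

```python
import random


def accuracy_at_threshold(scores, labels, threshold=0.5):
    """Share of records whose thresholded score matches the 0/1 label."""
    hits = sum(int(s > threshold) == y for s, y in zip(scores, labels))
    return hits / len(labels)


rng = random.Random(1)  # fixed seed so the run is repeatable
labels = [i % 2 for i in range(10_000)]  # balanced: 1 = FAKE, 0 = REAL
scores = [rng.random() for _ in labels]  # scores unrelated to the labels

print(f"accuracy of an uninformed scorer: {accuracy_at_threshold(scores, labels):.1%}")
```

An uninformed scorer lands close to 50% accuracy on balanced data, which is the baseline against which the discriminator's result should be read.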

This means that the data you have generated using MOSTLY AI’s default high-quality settings is so similar to the original, real records that it is almost impossible for the model to tell them apart.

Classifying “fake vs real” records with MOSTLY AI