Speed up training

Depending on the size and complexity of your original table data, AI model training can take a long time. You can shorten training by selecting a faster configuration preset, reducing the training sample size, the maximum training time, or the number of training epochs, selecting a smaller model, increasing the batch size, or decreasing the max sequence window.

The actual training time depends mainly on your data, so there is no "one-size-fits-all" configuration that reduces it. Test the configuration of your models and try the options suggested in the sections below. Treat the values in the examples as illustrations, not as recommendations.

Defaults related to training time

The options that affect training speed, with their default values for each model, are listed below:

- Max sample size: 100%

- Max training time: 10 min

- Max training epochs: 100

- Model: MOSTLY_AI/Medium

- Batch size: Auto

- Max sequence window (linked tables and Text models only): 100 rows

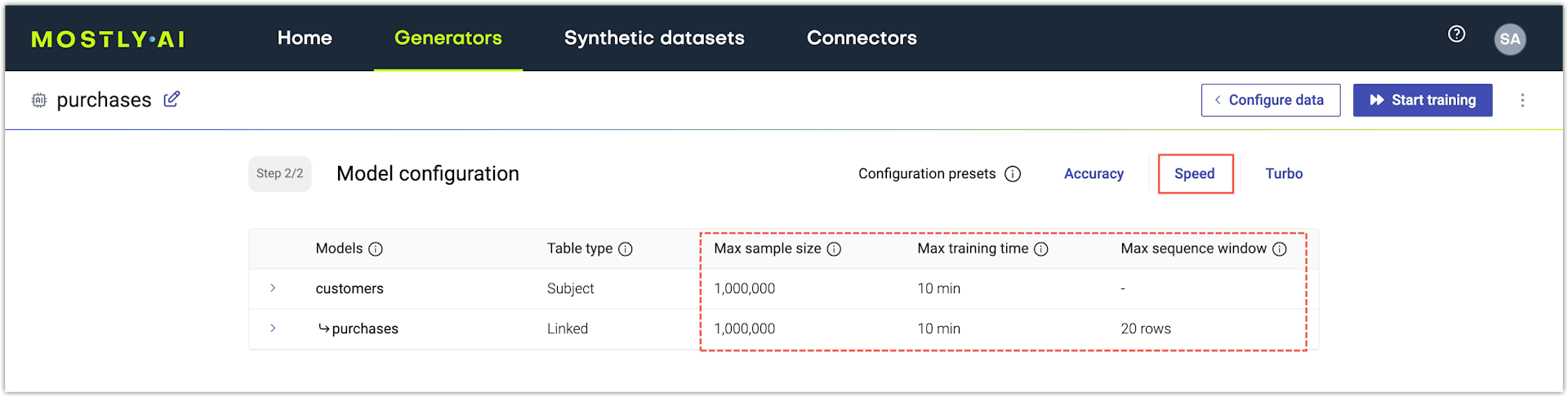

Use the Speed or Turbo presets

Select the Speed configuration preset for faster AI model training without compromising accuracy. The Speed preset applies the following configuration:

- Max sample size: 100%

- Max training time: 10 min

- Max sequence window (linked tables and Text models only): 20 rows

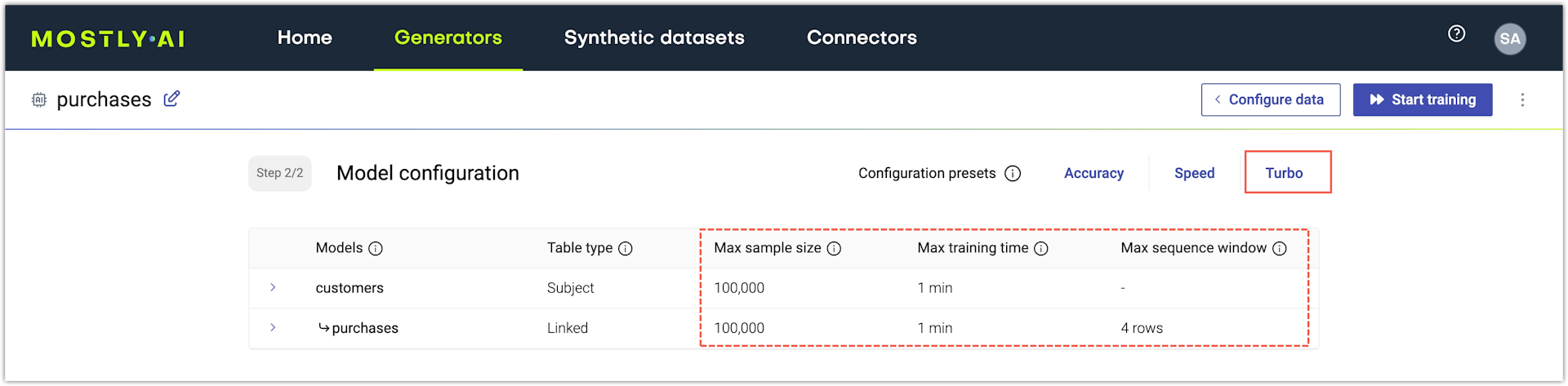

Select the Turbo configuration preset to complete AI model training as quickly as possible at the cost of accuracy. This preset is best for quick sanity checks. The Turbo preset applies the following configuration:

- Max sample size: 100%

- Max training time: 1 min

- Max sequence window (linked tables and Text models only): 4 rows

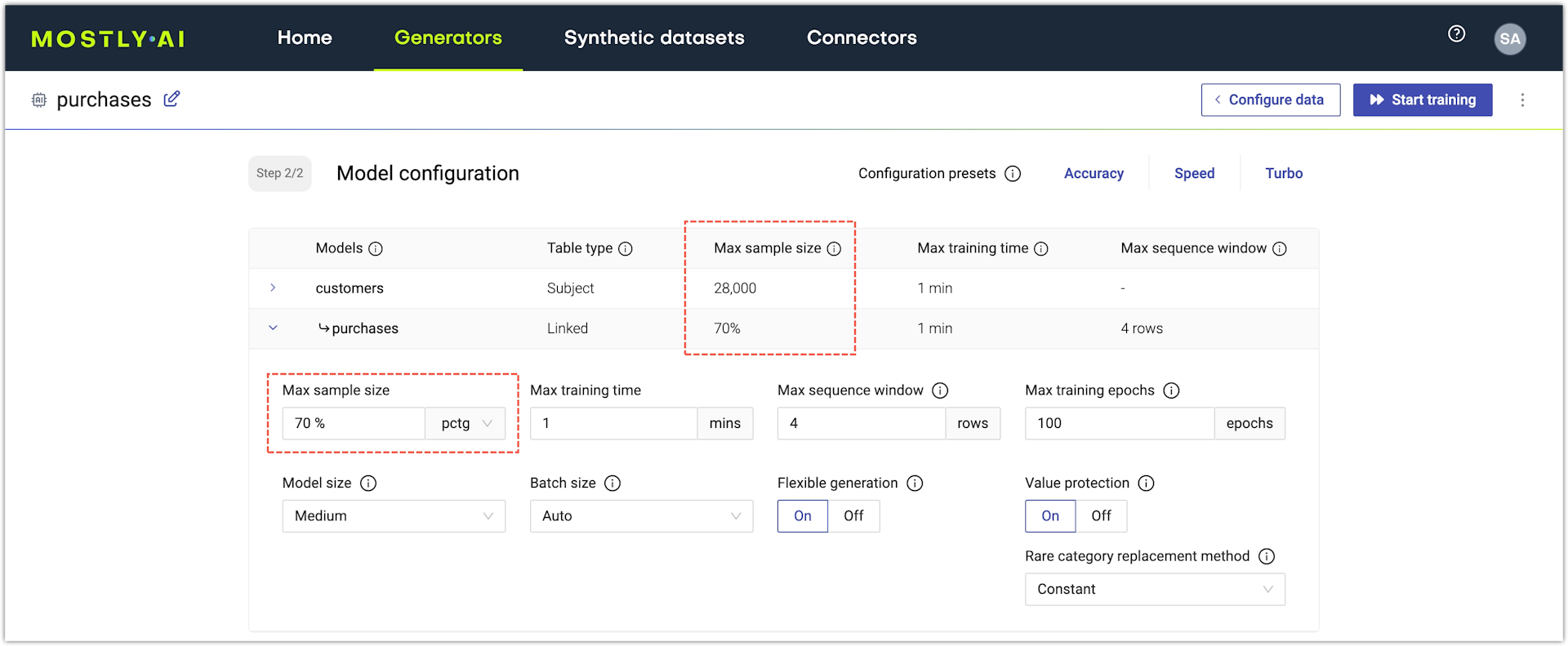
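The effect of each preset can be sketched as an overlay on the defaults. The snippet below is only an illustration: the dictionary keys and helper functions are hypothetical names (not part of any MOSTLY AI API), the values are copied from the lists above, and it assumes that any setting a preset does not list stays at its default.

```python
# Defaults as listed under "Defaults related to training time".
# Key names are hypothetical and chosen for this illustration only.
DEFAULTS = {
    "max_sample_size": "100%",
    "max_training_time_min": 10,
    "max_epochs": 100,
    "model": "MOSTLY_AI/Medium",
    "batch_size": "Auto",
    "max_sequence_window": 100,
}

# Values that each preset applies, as listed in this section.
PRESETS = {
    "Speed": {
        "max_sample_size": "100%",
        "max_training_time_min": 10,
        "max_sequence_window": 20,
    },
    "Turbo": {
        "max_sample_size": "100%",
        "max_training_time_min": 1,
        "max_sequence_window": 4,
    },
}


def apply_preset(name):
    """Return the defaults with the named preset's values laid on top."""
    return {**DEFAULTS, **PRESETS[name]}


def changed_settings(name):
    """Return only the settings whose value differs from the default."""
    config = apply_preset(name)
    return {key: value for key, value in config.items() if DEFAULTS[key] != value}


if __name__ == "__main__":
    for preset in PRESETS:
        print(preset, changed_settings(preset))
```

Comparing the result with the defaults makes it easy to see which settings a preset actually changes before you start training.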

Decrease training sample size

By default, MOSTLY AI uses all records in a table to train the Generative AI model for that table. Decrease the training sample size if you want to speed up model training.

If you use the web application, you can configure the training sample size for each table from the Model configuration page of a generator.

Steps

- With an untrained generator open, go to the Model configuration page by clicking Configure models.

- Click a table to expand its model settings.

- Set the Max sample size as a number of rows.

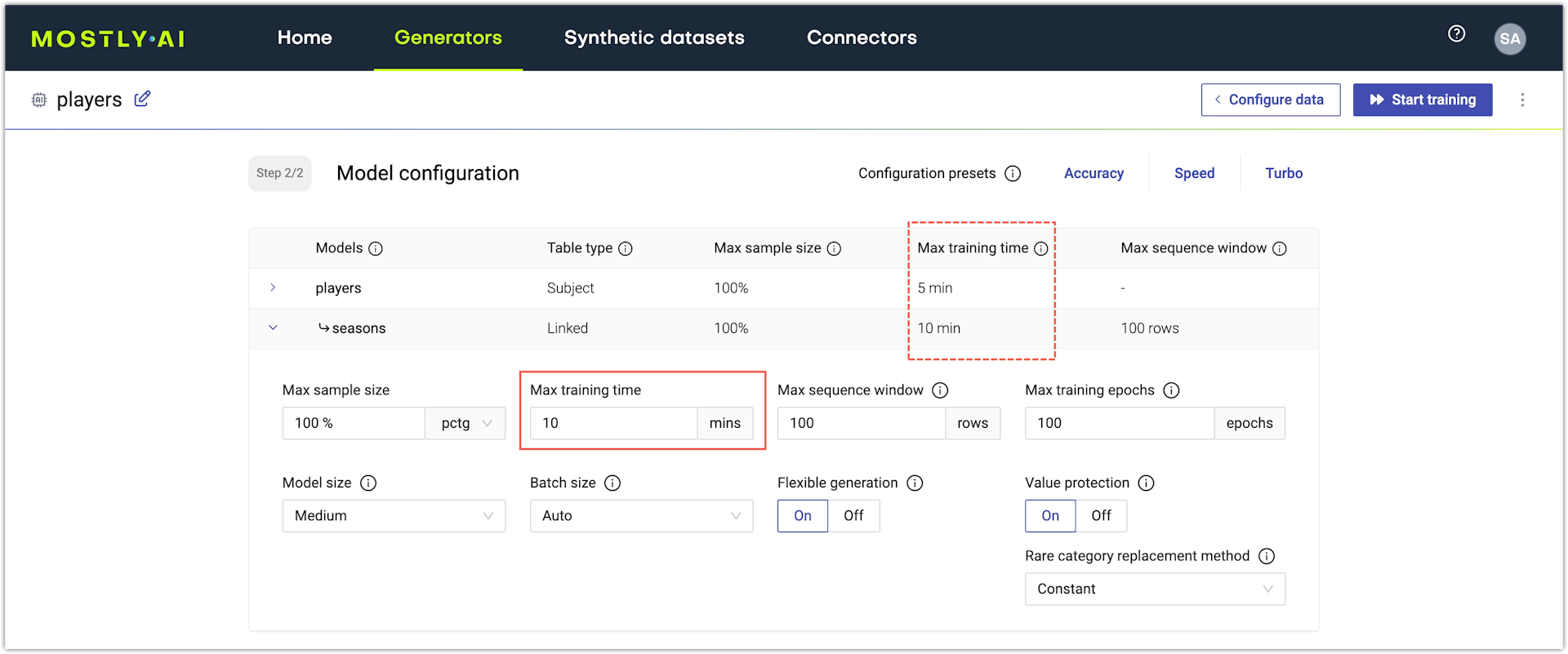

Decrease training time

MOSTLY AI applies a default maximum training time to each AI model (see Defaults related to training time). Decrease it to speed up training.

If you use the web application, you can configure the maximum training time from the Model configuration page of a generator.

Steps

- With an untrained generator open, go to the Model configuration page by clicking Configure models.

- Click a table to expand its model settings.

- Set the Max training time in minutes.

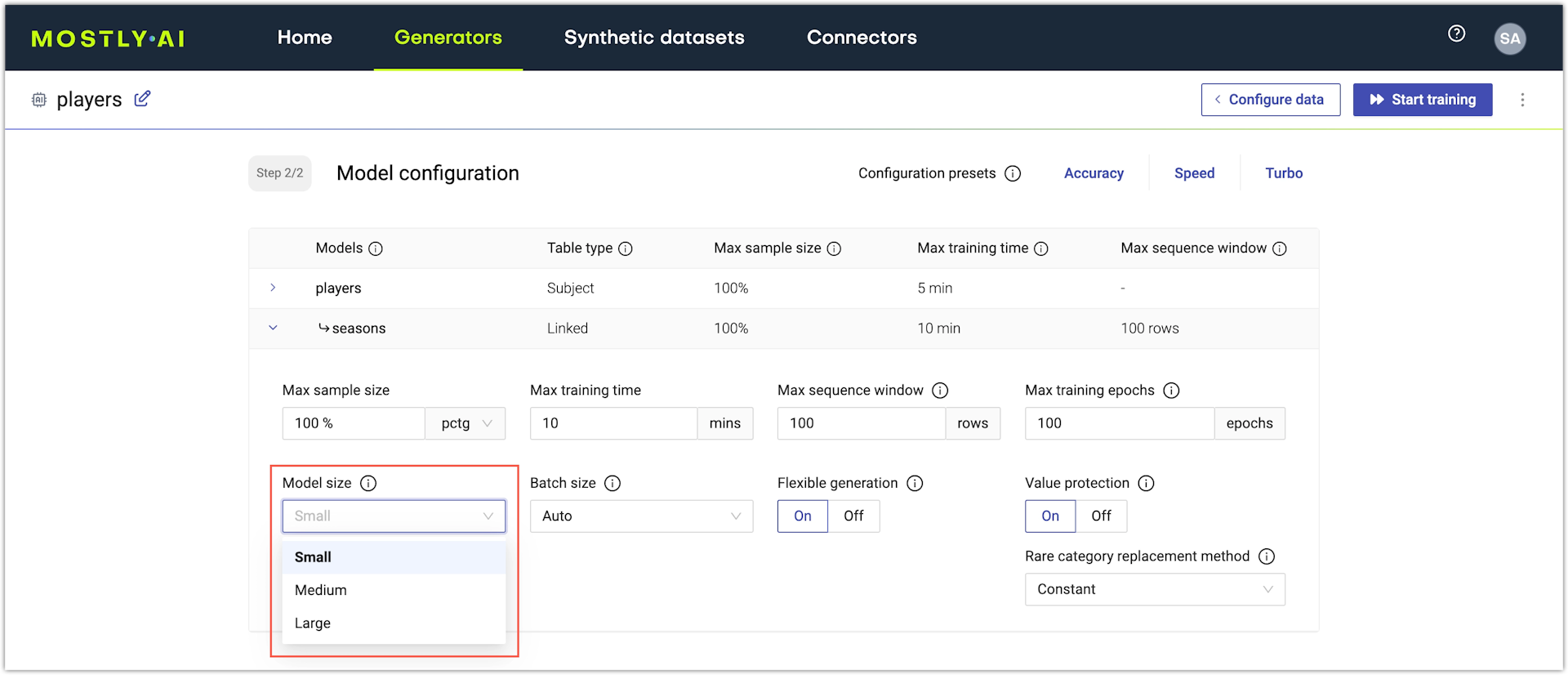
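To get a feel for how a lower maximum training time limits training, the following sketch estimates how many full epochs fit within the limits. It is a simplification under stated assumptions: training stops at whichever limit is reached first, every epoch takes the same time, and any other stopping criteria are ignored. The function name and its parameters are hypothetical, and the seconds-per-epoch values are made up for illustration.

```python
def epochs_within_limits(seconds_per_epoch, max_training_minutes, max_epochs):
    """Estimate the number of full epochs that fit within both limits.

    Assumes constant epoch duration and that training stops at whichever
    limit (time or epochs) is reached first.
    """
    if seconds_per_epoch <= 0:
        raise ValueError("seconds_per_epoch must be positive")
    time_budget_seconds = max_training_minutes * 60
    epochs_by_time = int(time_budget_seconds // seconds_per_epoch)
    return min(max_epochs, epochs_by_time)


if __name__ == "__main__":
    # A slow table: the time limit is reached long before 100 epochs.
    print(epochs_within_limits(45, max_training_minutes=10, max_epochs=100))
    # The same table with a 1-minute limit.
    print(epochs_within_limits(45, max_training_minutes=1, max_epochs=100))
    # A fast table: the epoch limit is reached first.
    print(epochs_within_limits(2, max_training_minutes=10, max_epochs=100))
```

If the estimate shows that only a handful of epochs fit into the time limit, expect a larger effect on accuracy than when the epoch limit is reached first.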

Select a smaller model

The model size defines the number of internal parameters that the AI model uses to learn from your data. A smaller model uses fewer parameters to analyze and train on your data. MOSTLY AI provides models in three sizes.

- MOSTLY_AI/Small uses fewer parameters and takes less memory and time, at the cost of accuracy.

- MOSTLY_AI/Medium balances accuracy against memory and training time and is best for most use cases.

- MOSTLY_AI/Large maximizes accuracy with more parameters but requires extra memory and time to complete training.

In most cases, the MOSTLY_AI/Medium model is the best choice. Select the MOSTLY_AI/Small model if you can compromise on accuracy and want to speed up training.

If you use the web application, you can configure the model from the Model configuration page of a generator.

Steps

- With an untrained generator open, go to the Model configuration page by clicking Configure models.

- Click a table to expand its model settings.

- For Model, select MOSTLY_AI/Small.

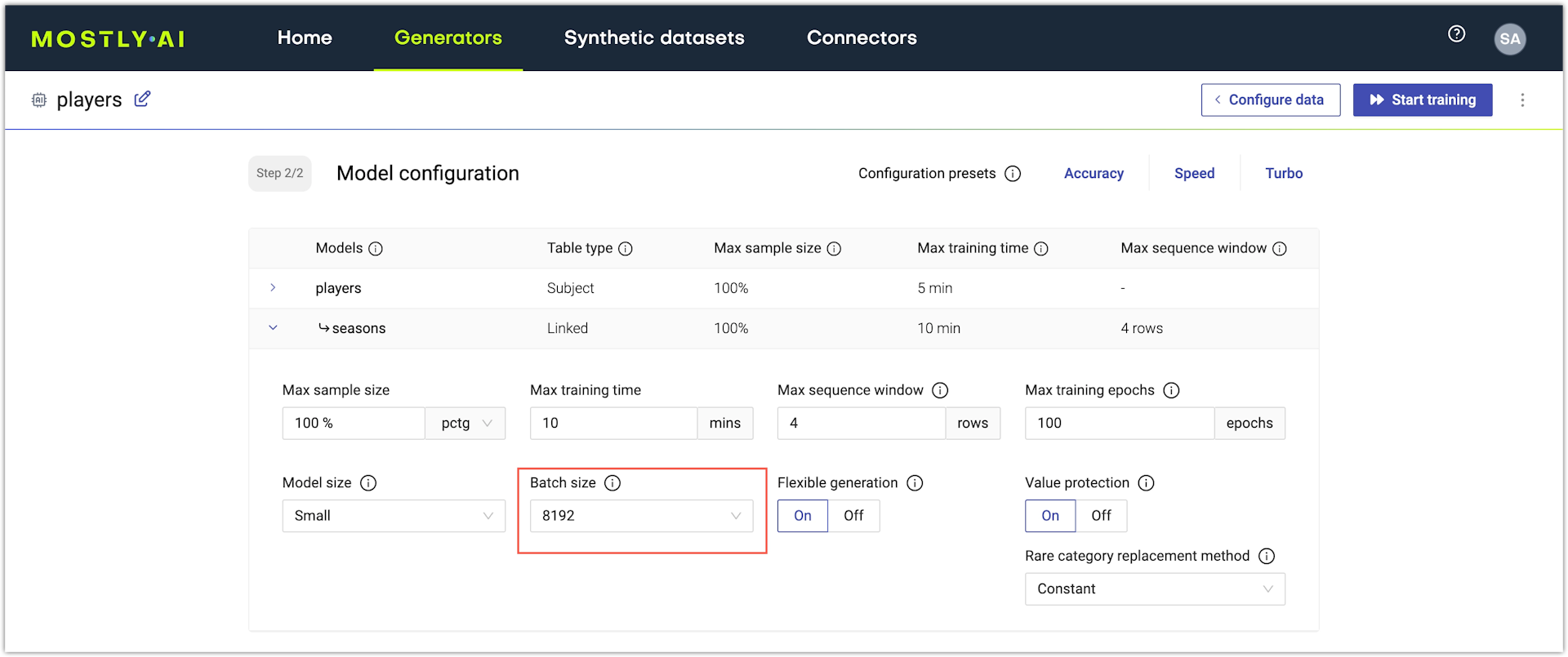

Increase batch size

Batch size refers to the number of records used to complete one training pass of the AI model. An epoch completes when all records have gone through AI model training. Depending on the batch size and the number of records in a table, more than one pass might be necessary to complete an epoch.

By default, the batch size is set to Auto and MOSTLY AI determines a batch size that is appropriate for your data. Set a larger batch size for a model to speed up model training when accuracy is not a priority.
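The relationship between batch size and the number of passes in one epoch is simple arithmetic: the number of records divided by the batch size, rounded up. The sketch below illustrates it with a hypothetical helper function and made-up table and batch sizes. It is not how MOSTLY AI selects the Auto batch size.

```python
def passes_per_epoch(num_records, batch_size):
    """Return the number of training passes needed to see every record once."""
    if num_records < 0 or batch_size <= 0:
        raise ValueError("num_records must be >= 0 and batch_size must be > 0")
    # Integer ceiling division: the last pass may hold a partial batch.
    return (num_records + batch_size - 1) // batch_size


if __name__ == "__main__":
    num_records = 100_000  # hypothetical table size
    for batch_size in (32, 256, 2048):
        print(batch_size, passes_per_epoch(num_records, batch_size))
```

A larger batch size means fewer passes per epoch, which is why it tends to shorten training.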

If you use the web application, you can configure the batch size from the Model configuration page of a generator.

Steps

- With an untrained generator open, go to the Model configuration page by clicking Configure models.

- Click a table to expand its model settings.

- Adjust the Batch size.

  - In most cases, keep Auto so that MOSTLY AI determines the optimal batch size for your table data.

  - Otherwise, select one of the listed options, each of which is a power of 2.

Decrease max sequence window

If your generator has linked table models (with time-series and events data), you can speed up their training by decreasing the Max sequence window setting. Depending on your data, this can decrease the accuracy of the model.

For more information, see Configure AI models for time-series and events data.