Use Apache Hive for synthetic data

MOSTLY AI can use Apache Hive as a data source for original data as well as a destination for synthetic data. To do so, you need to create Apache Hive connectors.

Create an Apache Hive connector

For each Apache Hive data source or destination, you need a separate connector.

Prerequisites

Obtain the following Apache Hive connection details. If you want to use Kerberos, see Use Kerberos for authentication.

- host

- port

- credentials (username and password)

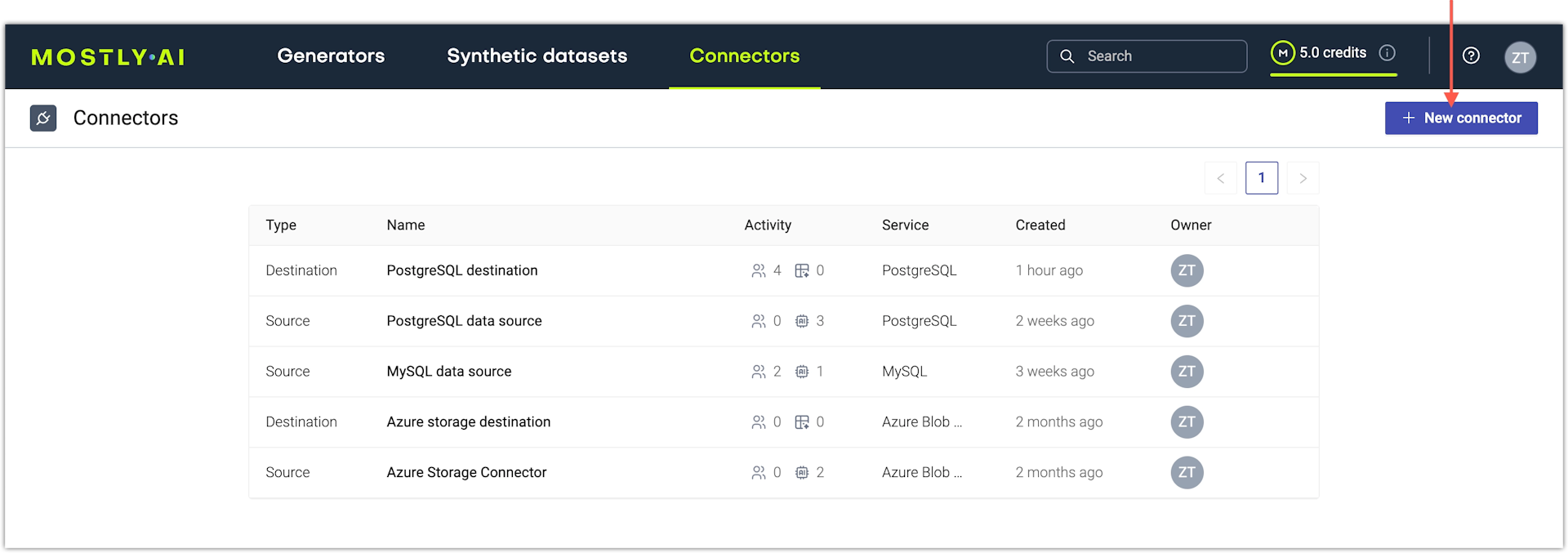

If you use the web application, create a new Apache Hive connector from the Connectors page.

Steps

- From the Connectors page, click New connector.

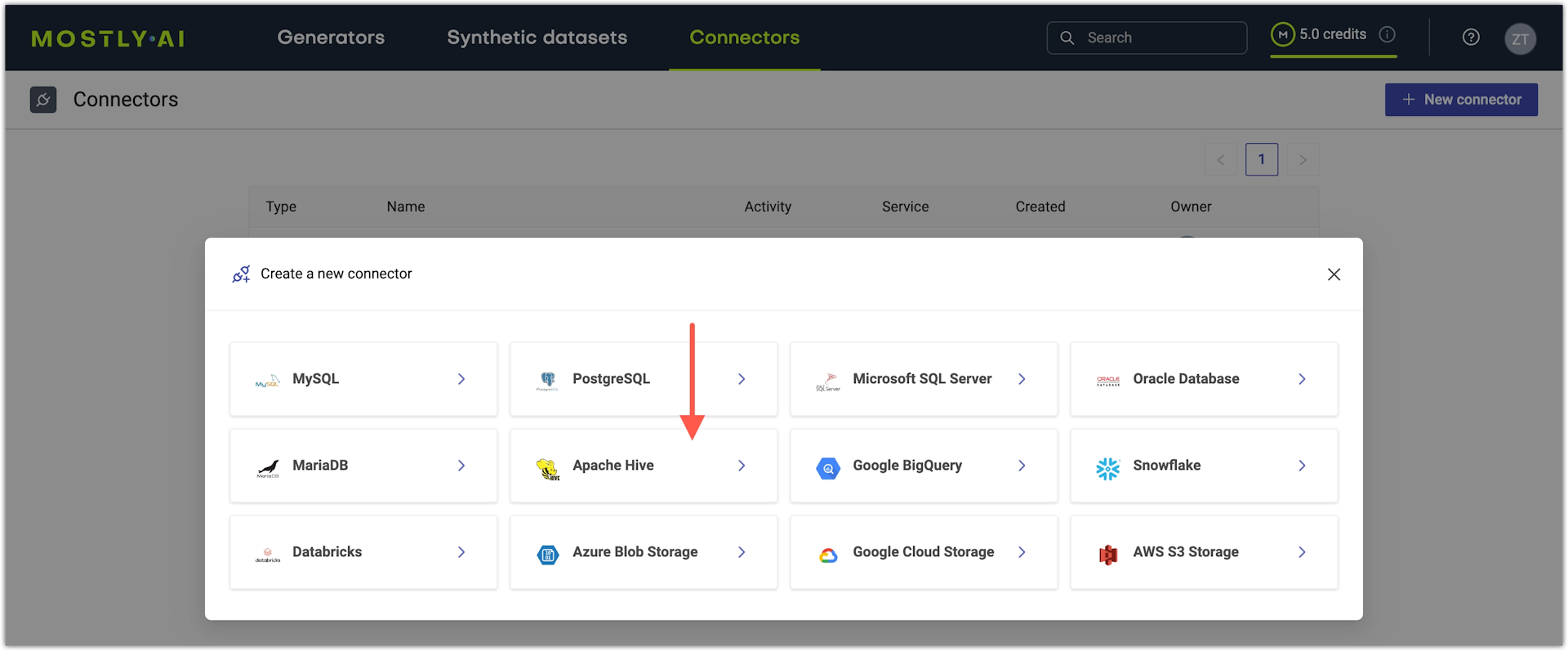

- From the Create a new connector window, select Apache Hive.

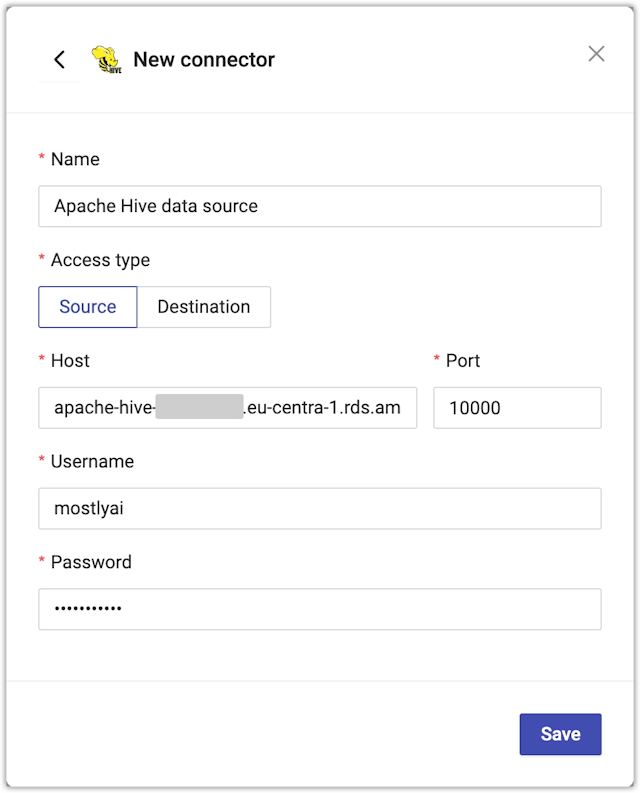

- From the New connector page, configure the connector.

- For Name, enter a name that you can distinguish from other connectors.

- For Access type, select whether you want to use the connector as a source or destination.

💡

If you plan to use Apache Hive as a destination for synthetic data, MOSTLY AI recommends that you load the data into Apache Hive using Parquet files. For more information, see Considerations for Apache Hive as a destination.

- For Host, enter the Apache Hive hostname.

- For Port, enter the port number.

The default port for Apache Hive (HiveServer2) is 10000.

- For Username and Password, enter your Apache Hive credentials.

- Click Save to save your new Apache Hive connector.

MOSTLY AI tests the connection. If you see an error, check the connection details, update them, and click Save again.

To save the connector despite any errors, click Save anyway.
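Before you save the connector, you may want to check the same host, port, and credentials outside MOSTLY AI. The following is a minimal sketch. It assumes that the Beeline CLI that ships with Apache Hive is installed on a machine that can reach the Hive server, and that HiveServer2 accepts JDBC connections at `jdbc:hive2://HOST:PORT/DATABASE`. It covers plain username and password authentication only. The function `hive_jdbc_url`, the `HIVE_*` environment variables, and the `default` database are hypothetical placeholders, not part of MOSTLY AI.

```shell
#!/bin/sh
# Hypothetical helper: build a HiveServer2 JDBC URL from the Host and Port values
# you enter in the connector. Port defaults to 10000 and database to "default".
hive_jdbc_url() {
  host="$1"
  port="${2:-10000}"
  database="${3:-default}"
  printf 'jdbc:hive2://%s:%s/%s\n' "$host" "$port" "$database"
}

# Placeholder variables: HIVE_HOST, HIVE_PORT, HIVE_USER, HIVE_PASSWORD.
# The test query runs only when HIVE_HOST is set and Beeline is on the PATH.
if [ -n "${HIVE_HOST:-}" ] && command -v beeline >/dev/null 2>&1; then
  beeline -u "$(hive_jdbc_url "$HIVE_HOST" "${HIVE_PORT:-10000}")" \
    -n "${HIVE_USER:-}" -p "${HIVE_PASSWORD:-}" \
    -e 'SHOW DATABASES;'
fi
```

On shared machines, avoid passing the password with `-p`, because command-line arguments can be visible to other users through tools such as `ps`.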

Use Kerberos for authentication

To create an Apache Hive connector with Kerberos authentication, contact your Kerberos system administrator to obtain the information listed below.

- Kerberos principal. A unique identity to which Kerberos can assign tickets.

- Kerberos krb5.conf configuration file. The contents of a krb5.conf configuration file, such as the one listed below. For more information, see krb5.conf in the MIT Kerberos Documentation.

```
[libdefaults]
  default_realm = INTERNAL
  dns_lookup_realm = false
  dns_lookup_kdc = false
  forwardable = true
  rdns = false

[realms]
  INTERNAL = {
    kdc = ip-172-***-***-222
    kdc = ip-172-***-***-222.hive-kerberized.domain.com
    kdc = 172.***.***.222
    kdc = 3.***.***.111
    kdc = hive-kerberized.domain.com
    admin_server = hive-kerberized.domain.com
  }

[domain_realm]
  .hive-kerberized.domain.com = INTERNAL
  hive-kerberized.domain.com = INTERNAL
```

- Kerberos keytab. Short for “key table”, keytab files are used in Kerberos authentication to store keys needed to log in to Kerberos-aware services. Keytab files allow automated processes (scripts and service authentication) to authenticate using Kerberos without requiring a human to enter a password.

- CA certificate. If you have access to the keystore file that contains the CA certificate, you can extract the CA certificate in PEM format with the following command:

```shell
keytool -exportcert -alias ALIAS -keystore KEYSTORE_FILE -storepass STORE_PASSWORD -file ca-certificate.pem -rfc
```

For example:

```shell
keytool -exportcert -alias hivessl-ss -keystore /usr/lib/jvm/java-8-openjdk-amd64/jre/lib/security/hivessl-ss.p12 -storepass 123456 -file /tmp/ca-cert.pem -rfc
```
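A common cause of Kerberos errors is a principal whose realm (the part after `@`) does not match the realm configured in krb5.conf. The following minimal sketch checks a principal against the `default_realm` of a krb5.conf file such as the example above. It uses a simplified parser that only reads `key = value` lines in the `[libdefaults]` section. If your principal intentionally uses a non-default realm listed under `[realms]`, this check does not apply. The function names and the sample principal `hive/hive-kerberized.domain.com@INTERNAL` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the realm of a Kerberos principal (text after the last '@').
principal_realm() {
  case "$1" in
    *@*) printf '%s\n' "${1##*@}" ;;
    *) return 1 ;;  # the principal has no realm part
  esac
}

# Hypothetical helper: print default_realm from the [libdefaults] section of a krb5.conf file.
# Simplified: assumes "key = value" lines and ignores includes and trailing comments.
krb5_default_realm() {
  awk -F '=' '
    /^[ \t]*\[/ { in_libdefaults = ($0 ~ /\[libdefaults\]/) }
    in_libdefaults && $1 ~ /^[ \t]*default_realm[ \t]*$/ {
      gsub(/[ \t]/, "", $2); print $2; exit
    }
  ' "$1"
}

# Succeeds only if the principal's realm equals default_realm in the given krb5.conf.
check_principal_realm() {
  realm="$(principal_realm "$1")" || { echo "principal has no realm: $1" >&2; return 1; }
  default_realm="$(krb5_default_realm "$2")"
  if [ "$realm" = "$default_realm" ]; then
    echo "OK: $1 uses default realm $default_realm"
  else
    echo "Mismatch: principal realm '$realm', default_realm '$default_realm'" >&2
    return 1
  fi
}
```

For example, `check_principal_realm 'hive/hive-kerberized.domain.com@INTERNAL' krb5.conf` succeeds for the krb5.conf example above.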

If you use the web application, enable the Authenticate with Kerberos checkbox in your Apache Hive connector and provide the required information.

Steps

- To use Kerberos for authentication in your Apache Hive connector, select the Authenticate with Kerberos checkbox.

- Provide the Kerberos authentication details.

- For Kerberos principal, type or paste the Kerberos principal.

- For Kerberos krb5 config, paste the contents of a krb5.conf file.

- For Kerberos keytab, click the Upload button and select a Kerberos keytab file from your local file system.

- Enable SSL and provide the CA certificate.

- Click Save.
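Because `keytool -exportcert` with `-rfc` writes the certificate in PEM format, you can quickly confirm that the file you provide as the CA certificate contains PEM certificate markers. The following minimal sketch performs a structural check only and does not validate the certificate itself. The function name `looks_like_pem_cert` is hypothetical, and `/tmp/ca-cert.pem` is the output path from the keytool example.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the file has PEM certificate BEGIN and END markers.
# This only checks the format; it does not verify the certificate's contents or validity.
looks_like_pem_cert() {
  [ -r "$1" ] &&
    grep -q -- '-----BEGIN CERTIFICATE-----' "$1" &&
    grep -q -- '-----END CERTIFICATE-----' "$1"
}

# Example: check the certificate exported with keytool, if the file exists.
if [ -f /tmp/ca-cert.pem ]; then
  if looks_like_pem_cert /tmp/ca-cert.pem; then
    echo "ca-cert.pem looks like a PEM certificate"
  else
    echo "ca-cert.pem is not in PEM format; re-export it with keytool -rfc" >&2
  fi
fi
```

If OpenSSL is installed, `openssl x509 -in /tmp/ca-cert.pem -noout -subject -enddate` shows the certificate's subject and expiry date. With MIT Kerberos, `klist -k -t hive.keytab` (where `hive.keytab` is a placeholder file name) lists the principals stored in a keytab, which helps confirm that the keytab matches the Kerberos principal you enter.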

What’s next

Depending on whether you created a source or a destination connector, you can use the connector as:

- a data source for a new generator

- a data destination for a new synthetic dataset

Considerations for Apache Hive as a destination

The Apache Hive connector uses a database interface to connect to your Apache Hive instance. This interface performs well for read operations, such as pulling original data for synthesis, but loading synthetic data through it in batches can be too slow. For that reason, it is not recommended for delivering high volumes of synthetic data.

The fastest way to load data into Apache Hive is to save the synthetic data as Parquet files to an S3 storage bucket and then load those files into Apache Hive.

For example:

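```sql
-- Replace the column list with the schema of your synthetic data.
CREATE TABLE table_name (column_name data_type, ...)
STORED AS PARQUET;

LOAD DATA INPATH 's3://bucket-name/path/to/parquet-file' INTO TABLE table_name;
```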
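To repeat these statements for several tables, you can generate them with a small script and run the result with Beeline's `-f` option. The following minimal sketch uses a hypothetical function, `hive_parquet_load_sql`, along with placeholder values for the table name, column list, S3 path, and the `HIVE_JDBC_URL` variable. It assumes that your Hive deployment is configured to read from the S3 location. The sketch uses `CREATE TABLE IF NOT EXISTS` so that a rerun does not fail on an existing table.

```shell
#!/bin/sh
# Hypothetical helper: print HiveQL that creates a Parquet-backed table
# and loads Parquet files from an S3 path into it.
# Arguments: table name, column definitions, S3 path.
hive_parquet_load_sql() {
  table="$1"
  columns="$2"
  s3_path="$3"
  cat <<EOF
CREATE TABLE IF NOT EXISTS ${table} (${columns})
STORED AS PARQUET;

LOAD DATA INPATH '${s3_path}' INTO TABLE ${table};
EOF
}

# Example with placeholder values; replace them with your own schema and bucket path.
hive_parquet_load_sql table_name 'id BIGINT, name STRING' \
  's3://bucket-name/path/to/parquet-file' > load_synthetic.hql

# Run the generated script only if a JDBC URL is set and Beeline is on the PATH.
if [ -n "${HIVE_JDBC_URL:-}" ] && command -v beeline >/dev/null 2>&1; then
  beeline -u "$HIVE_JDBC_URL" -f load_synthetic.hql
fi
```

`LOAD DATA` moves the files into the table's location rather than converting them, so the files must already be in Parquet format to match a `STORED AS PARQUET` table. Without the `OVERWRITE` keyword, `LOAD DATA` adds the files to any data already in the table.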