Configure AI models for time-series and events data

MOSTLY AI provides dedicated AI models for training on time-series and events data. You can configure how these models train on your data.

Use Datetime and Datetime: relative column types

MOSTLY AI assigns the Datetime column type to any datetime column whose values are in a supported format. You can also assign the Datetime: relative column type instead.

If you prioritize valid and statistically representative dates and times, use Datetime. If accurate intervals between consecutive events matter more to you than accurate absolute dates, use Datetime: relative.
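As a conceptual illustration only (this is not MOSTLY AI's internal encoding), the following Python sketch shows the kind of information that Datetime: relative prioritizes: the gaps between consecutive events of one subject rather than their absolute timestamps. The function name `to_relative_intervals` is hypothetical.

```python
from datetime import datetime


def to_relative_intervals(timestamps):
    """Return the gaps, in seconds, between consecutive events of one subject.

    Hypothetical helper for illustration; it is not part of any MOSTLY AI API.
    """
    ordered = sorted(timestamps)
    # Pair each event with the next one and measure the elapsed time.
    return [(later - earlier).total_seconds() for earlier, later in zip(ordered, ordered[1:])]


events = [
    datetime(2024, 1, 1, 9, 0),
    datetime(2024, 1, 1, 9, 30),
    datetime(2024, 1, 2, 9, 30),
]
print(to_relative_intervals(events))  # [1800.0, 86400.0]
```

Shifting all three timestamps by the same amount leaves the output unchanged, which is why a relative representation can keep intervals accurate even when the absolute dates are not.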

Maintain correlations and referential integrity with related tables

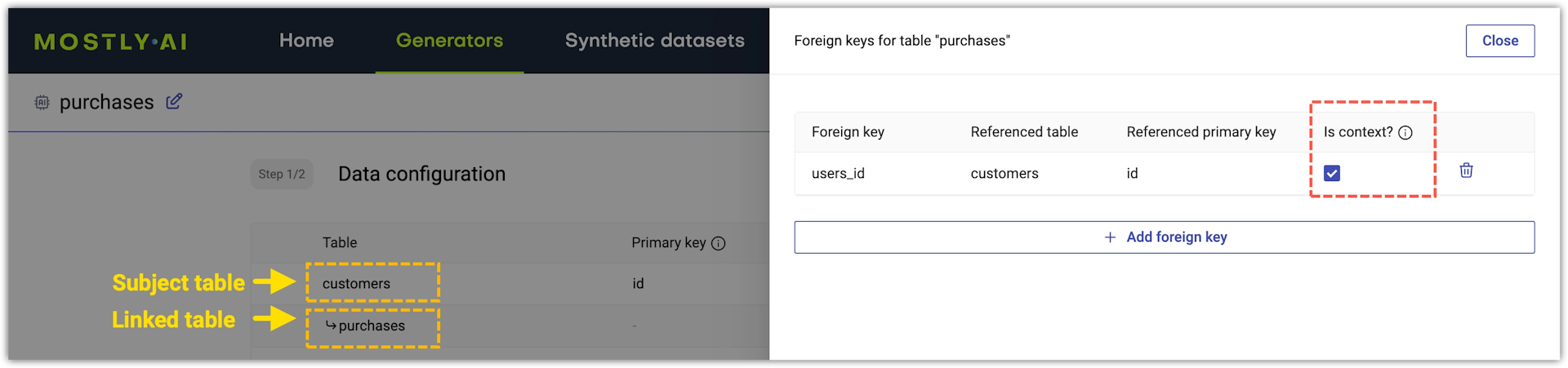

Tables that contain time-series and events data usually reference subject tables that hold personally identifiable information (PII) about people, organizations, or other entities. MOSTLY AI treats time-series and events tables as linked tables. When you configure your generator, create a context relationship between each subject table and its linked tables. This ensures that the generator learns the correlations between subjects and their events and maintains referential integrity between the tables.

For more information, see Context and non-context relationships and Context foreign key.
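Referential integrity means that every row in a linked table points to a subject that actually exists. The following Python sketch checks that property on plain in-memory rows before you upload data; it is an illustrative check, not a MOSTLY AI feature, and the helper name `orphaned_foreign_keys` and the column name `customer_id` are hypothetical.

```python
def orphaned_foreign_keys(subject_ids, linked_rows, fk_column):
    """Return linked rows whose foreign key matches no subject primary key.

    Hypothetical helper for illustration only.
    """
    known = set(subject_ids)  # primary keys of the subject table
    return [row for row in linked_rows if row[fk_column] not in known]


subjects = ["A", "B"]
events = [
    {"customer_id": "A", "amount": 12.5},
    {"customer_id": "B", "amount": 3.0},
    {"customer_id": "C", "amount": 7.0},  # references a subject that does not exist
]
print(orphaned_foreign_keys(subjects, events, "customer_id"))
# [{'customer_id': 'C', 'amount': 7.0}]
```

An empty result indicates that the linked table's foreign key column is consistent with the subject table's primary keys.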

Set max sequence window

Max sequence window applies only to linked tables (tables with time-series and events data) and to Text models. A linked table has a context foreign key to another table.

For linked tables, the term sequence means the events that belong to a single subject. The term sequence length indicates the number of events in the sequence. For example, if subject A has 30 related events, then the sequence length is 30.
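To make the definition concrete, the following Python sketch counts the sequence length of each subject by grouping linked-table rows on their foreign key. The helper name `sequence_lengths` and the column name `subject_id` are hypothetical and chosen for illustration.

```python
from collections import Counter


def sequence_lengths(linked_rows, fk_column):
    """Count how many events (rows) belong to each subject.

    Hypothetical helper for illustration only.
    """
    return Counter(row[fk_column] for row in linked_rows)


# Subject A has 30 related events, subject B has 2.
events = [{"subject_id": "A"}] * 30 + [{"subject_id": "B"}] * 2
lengths = sequence_lengths(events, "subject_id")
print(lengths["A"], lengths["B"])  # 30 2
print(max(lengths.values()))  # 30, the longest sequence in the table
```

The longest sequence in a table is the value to compare against the Max sequence window setting.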

MOSTLY AI has a dedicated AI model for time-series and events data that captures the sequences and event patterns in linked tables. Training on complete sequences can lead to high accuracy, but it can also cause out-of-memory failures if some sequences are very long. To improve training efficiency, you can adjust the Max sequence window training parameter. It sets the maximum number of consecutive events (a subsequence) that the model trains on for a sequence during a training epoch. From one epoch to the next, the window shifts to cover a different subsequence, with the goal of training on the entire sequence by the end of model training.

By default, Max sequence window is set to 100.
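The following Python sketch illustrates the idea of a window that shifts across epochs. It is a simplified model under an assumed scheme, not MOSTLY AI's actual training logic: here the window advances by a full window length each epoch and wraps around, whereas the real model may choose windows differently. The function name `window_for_epoch` is hypothetical.

```python
def window_for_epoch(sequence, max_window, epoch):
    """Return the subsequence used for one sequence in a given epoch.

    Hypothetical scheme for illustration: the window advances by max_window
    events per epoch and wraps around, so successive epochs cover the
    whole sequence.
    """
    if len(sequence) <= max_window:
        return list(sequence)  # short sequences fit in the window and are used in full
    n_windows = -(-len(sequence) // max_window)  # ceiling division
    start = (epoch % n_windows) * max_window
    return list(sequence[start:start + max_window])


events = list(range(250))  # one subject with 250 events
for epoch in range(3):
    window = window_for_epoch(events, max_window=100, epoch=epoch)
    print(epoch, window[0], window[-1], len(window))
# 0 0 99 100
# 1 100 199 100
# 2 200 249 50
```

With the default window of 100, a 250-event sequence is covered over three epochs in this sketch, while a 30-event sequence would be used in full every epoch. Lowering the window reduces the events processed per step, which is what helps avoid out-of-memory failures.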

If you use the web application, you can configure the maximum sequence window from the Model configuration page of a generator.

Steps

- With an untrained generator open, click Configure models to go to the Model configuration page.

- Click a linked table to expand its model settings.

- Adjust the Max sequence window.